1. Introduction

In the present day, with many countries experiencing aging populations, more elders tend to live alone and are often unable to receive care from family members. It is well recognized that elders are prone to falls and accidents when carrying out the activities of daily life. To help elders who live alone do so safely and happily, smart home equipment based on the Internet of Things (IoT) has been developed to identify their daily activities. In fact, activity recognition is a vital objective in a smart home setting [1]. The ML community is interested in Human Activity Recognition (HAR) [2] due to its applicability in real-world applications such as fall detection for elderly healthcare monitoring, exercise tracking in sport science [3,4], surveillance systems [5,6,7], and preventing office work syndrome [8]. Currently, HAR is becoming a challenging research topic due to the accessibility of sensors in wearable devices (e.g., smartphones and smartwatches), which are cost-effective, consume less power, and support live streaming of time-series data [9].

Recent research, involving both dynamic and static HAR, uses sensor data collected from wearable devices to better understand the relationship between health and behavioral biometric information [10,11]. HAR methods can be divided into two categories according to data source: visual-based and sensor-based [12]. With visual-based HAR, video or image data are recorded and processed using computer vision techniques [13]. The authors of [14] propose a new approach for identifying sport-related events in video data based on the fusion of multiple features, and this work achieves high recognition rates in video-based HAR. Sensor-based HAR, by contrast, works on time-series data captured from a wide range of sensors embedded in wearable devices [15,16]. In [17], the authors study a context-aware HAR system and note that an accelerometer alone is mostly adequate for detecting simple activities such as walking, sitting, and standing. Adding gyroscope data improves recognition performance for more complex activities such as drinking, eating, and smoking. Other works explore additional sensors. Fu et al. [18] improve a HAR framework by using air pressure sensor data along with data from an inertial measurement unit (IMU). Their HAR model shows at least 1.78% higher recognition performance than comparable models that do not use the additional sensor data. In the last decade, there has been a generational shift in HAR research from device-bound strategies to device-free approaches. Cui et al. [19] introduce a WiFi-based HAR framework that uses channel state information (CSI) to recognize common activities. However, WiFi-based HAR is capable of detecting only basic behaviors, such as running and standing, because CSI does not carry enough information to capture dynamic events [20].

Sensor-based HAR is becoming more commonly used in smart devices because of advances in pervasive computing and sensor automation, and because privacy is well protected on smartphones. Therefore, smartphone sensor-based HAR is the focus of this study. As wearable devices, modern smartphones are becoming increasingly popular. Furnished with an assortment of embedded sensors such as accelerometers, gyroscopes, Bluetooth, and ambient sensors, smartphones also allow researchers to study the activities of daily life. Sensor-based HAR on a device can be considered as an ML model built to continuously track the actions of the user while the device is attached to the person's body. Traditional methods have made major strides through the implementation of state-of-the-art machine-learning techniques, including decision trees, naïve Bayes, support vector machines [21], and artificial neural networks [22]. Nevertheless, these traditional ML methods rely on heuristic, handcrafted feature extraction, which is typically constrained by human domain expertise. As a result, their performance is limited in terms of classification accuracy and other metrics.

Here, Deep Learning (DL) methods are employed to mitigate the previously mentioned limitations. Using multiple hidden layers instead of manual extraction based on human domain knowledge allows features to be learned automatically from raw sensor data. The deep architecture of these approaches facilitates the extraction of high-level features suited to complex problems such as HAR. These DL approaches are now being used to construct robust smartphone-based HAR systems [23,24].

The Convolutional Neural Network (CNN) is a promising DL approach that has achieved favorable results in speech recognition, image classification, and text analysis [25]. When applied to time-series classification tasks such as HAR, the CNN has advantages over conventional ML approaches due to its local dependency and scale invariance [26]. Studies on one-dimensional CNNs have shown that these DL models solve the HAR problem more effectively, in terms of performance metrics, than conventional ML models [27]. Long Short-Term Memory (LSTM) networks are introduced to handle the temporal dependency of sensor time-series data. The LSTM network can identify relationships along the temporal dimension without mixing the time steps as the CNN does [28].

Inspired by emerging DL techniques for sensor-based HAR, Ullah et al. [29] proposed a Stacked LSTM network trained on accelerometer and gyroscope data. These researchers found that recognition performance could be enhanced by using the Stacked LSTM network to repeatedly extract temporal features. Zhang et al. [30] proposed a stacked HAR model based on an LSTM network. Their findings revealed that the Stacked LSTM network could enhance recognition accuracy with no extra difficulty in training. Mutegeki et al. [31] achieved better recognition performance by combining the CNN with the LSTM network, using the robustness of CNN feature extraction while taking advantage of the LSTM model for time-series classification. Ordóñez and Roggen [32] likewise combined convolutional layers with LSTM layers and reported promising recognition performance. In order to capture diverse data during training, Hammerla et al. [33] compared different deep neural networks in HAR, including CNN and LSTM, and significantly improved the performance and robustness of recognition. However, existing studies have their own weaknesses and use different sample generation methods and validation protocols, which makes their results difficult to compare.

To better understand LSTM-based networks for solving HAR problems, this research studies LSTM-based HAR using smartphone sensor data. Five LSTM networks were compared to evaluate the impact of using different kinds of smartphone sensor data from a public dataset called UCI-HAR. Moreover, Bayesian optimization is used to tune the LSTM hyperparameters. The primary contributions of this research are as follows:

A 4-layer CNN-LSTM is proposed: a hybrid DL network consisting of CNN layers and an LSTM layer with the ability to automatically learn spatial features and temporal representation.

The various experimental results demonstrate that the proposed DL network is suitable for HAR through smartphone sensor data.

The proposed framework can improve recognition performance and outperform other baseline DL networks.

The remainder of the paper is structured as follows. Section 2 provides details of the preliminary concepts and background theory used in this study. Section 3 presents the proposed HAR framework for smartphone sensor data. Section 4 describes the experimental conditions and results. The derived results are then discussed in Section 5. Finally, Section 6 presents the conclusion.

3. Proposed Methodology

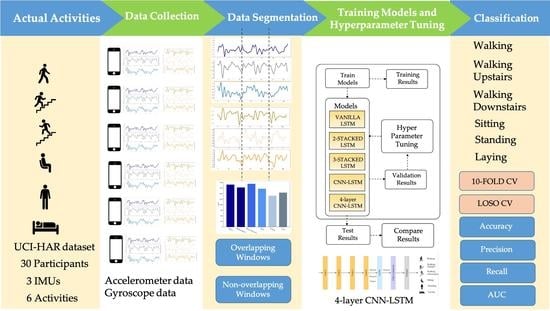

The proposed LSTM-based HAR framework classifies the activity performed by a smartphone user from the sensor data captured by the smartphone. It is intended to enhance the recognition performance of LSTM-based DL networks. Figure 4 illustrates the overall methodology used in this study to achieve the research goal. In the first stage, the raw sensor data is split into two main subsets: raw training data and test data. In the second stage, model training and hyperparameter tuning, the raw training data is further split into 75% for training and 25% for validating the trained model. Five LSTM-based models are evaluated on the validation data, and Bayesian optimization then tunes the hyperparameters of the trained models. Finally, the hyperparameter-tuned models are evaluated on the test data and their recognition performance is compared.
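The 75%/25% split of the raw training data can be sketched as follows. This is a minimal illustration only: the function name `split_indices`, the random shuffle, and the seed are assumptions, since the text does not state how the validation samples were selected. The count of 7352 is the size of the UCI-HAR training set reported in Section 3.1.

```python
import random

def split_indices(n_samples, val_fraction=0.25, seed=42):
    """Shuffle sample indices and split them into training and validation parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)      # assumed: a simple random split
    n_val = int(round(n_samples * val_fraction))
    return indices[n_val:], indices[:n_val]   # (training, validation)

# 7352 raw training samples -> 75% for training, 25% for validation
train_idx, val_idx = split_indices(7352)
print(len(train_idx), len(val_idx))
```

The test subset is never touched at this stage; it is reserved for the final comparison of the tuned models.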

3.1. UCI-HAR Smartphone Dataset

This work uses the UCI Human Activity Recognition Using Smartphones dataset (UCI-HAR) [36] to recognize human activities. The UCI-HAR dataset contains activity data from 30 participants of varying ages, races, heights, and weights (aged between 18 and 48 years). While carrying a Samsung Galaxy S-II smartphone (Suwon, Korea) at waist level, the subjects carried out everyday tasks. Each person performed six activities (i.e., walking, walking upstairs, walking downstairs, sitting, standing, and lying down). The tri-axial values of the smartphone accelerometer and gyroscope were recorded while each participant performed the six preset activities. Tri-axial linear acceleration and angular velocity data were captured at a constant rate of 50 Hz. A detailed description of the UCI-HAR dataset is provided in Table 1. Figure 5 and Figure 6 show the accelerometer and gyroscope data samples, respectively.

A median filter was applied to the sensor data in the UCI-HAR dataset for noise reduction during pre-processing. A third-order Butterworth low-pass filter with a cutoff frequency of 20 Hz is sufficient for capturing human body motion since 99% of its energy is contained below 15 Hz [46]. The sensor signals were then sampled in 2.56 s fixed-width sliding windows with a 50% overlap between them, as shown in Figure 7. The four reasons for choosing this window size and overlap [36] are as follows: (1) The walking cadence of an average person is between 90 and 130 steps/min [47], i.e., a minimum of 1.5 steps/s. (2) At least one complete walking cycle (two steps) is desired in each window. (3) The approach should also work for people with a slower cadence, such as the elderly and those with disabilities. A minimum speed equal to 50% of the average human cadence was assumed by the researchers [36]. (4) Signals were also mapped to the frequency domain via the Fast Fourier Transform (FFT), which is optimized for vectors whose length is a power of two (2.56 s × 50 Hz = 128 samples). The available dataset contains 10,299 samples, split into two subsets (i.e., a training set and a test set). The former has 7352 samples (71.39%), while the latter has the remaining 2947 samples (28.61%). The dataset is imbalanced, as shown in Figure 8. Since accuracy alone is insufficient for analysis and fair comparison on imbalanced data, we additionally apply the F1-score to compare the performance of the LSTM-based networks in this work.
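The segmentation into 2.56 s windows with 50% overlap can be illustrated with a short sketch. The function `sliding_windows` and the 10 s example recording are hypothetical and are not taken from the dataset tooling; only the 50 Hz rate, the 2.56 s width, and the 50% overlap come from the text.

```python
def sliding_windows(signal, window_size=128, overlap=0.5):
    """Split a sequence of samples into fixed-width windows with fractional overlap."""
    step = int(window_size * (1.0 - overlap))
    if step < 1:
        raise ValueError("overlap must leave a step of at least one sample")
    return [signal[start:start + window_size]
            for start in range(0, len(signal) - window_size + 1, step)]

SAMPLING_RATE_HZ = 50
WINDOW_SECONDS = 2.56
window_size = round(SAMPLING_RATE_HZ * WINDOW_SECONDS)  # 128 samples, a power of two

# Hypothetical 10 s single-axis recording: 500 samples at 50 Hz.
recording = list(range(500))
windows = sliding_windows(recording, window_size, overlap=0.5)
print(len(windows), len(windows[0]), windows[1][0])
```

With a 50% overlap the window start advances by 64 samples, so consecutive windows share half of their readings. Trailing samples that do not fill a complete window are dropped in this sketch.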

Before the DL models are evaluated, the dataset is standardized so that it has zero mean and unit variance. Figure 9 displays histograms of the activity data from the tri-axial values of both the accelerometer and gyroscope.
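Standardization to zero mean and unit variance can be sketched per sensor axis as below. The helper `standardize` and the sample readings are hypothetical. The text does not say whether the statistics were computed on the training data only, so that detail is left open here.

```python
from statistics import fmean, pstdev

def standardize(values):
    """Rescale a sequence to zero mean and unit (population) variance."""
    mean = fmean(values)
    std = pstdev(values)
    if std == 0:
        return [0.0 for _ in values]          # constant signal: nothing to scale
    return [(v - mean) / std for v in values]

# Hypothetical accelerometer readings along one axis
axis_x = [0.98, 1.02, 1.01, 0.97, 1.05, 0.99]
scaled = standardize(axis_x)
print(round(fmean(scaled), 6), round(pstdev(scaled), 6))
```

A common practice, assumed here rather than confirmed by the source, is to estimate the mean and standard deviation on the training subset and reuse them for the validation and test subsets.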

3.2. LSTM Architectures

The following baseline LSTM network architectures are used in this work: Vanilla LSTM network, 2-Stacked LSTM network, and 3-Stacked LSTM network, as illustrated in Figure 10, Figure 11 and Figure 12, respectively. The original LSTM model (or Vanilla LSTM network) comprises a single hidden LSTM layer followed by a standard feedforward output layer. The Stacked LSTM networks extend the original model with multiple hidden LSTM layers, each containing multiple memory cells. A Stacked LSTM can thus be technically defined as an LSTM model consisting of multiple LSTM layers, taking advantage of the temporal features extracted by each layer.

The CNN-LSTM architecture uses CNN layers for feature extraction from the input data, combined with LSTMs to support sequence prediction, as shown in Figure 13. CNN-LSTMs were developed for visual time-series prediction problems and for generating textual descriptions from image sequences. This architecture is appropriate for problems that involve a temporal input structure or require output with a temporal structure. In this work, an LSTM-based network called the 4-layer CNN-LSTM is proposed to improve recognition performance. The architecture of the proposed 4-layer CNN-LSTM is illustrated in Figure 14.

As Figure 14 shows, tri-axial accelerometer and tri-axial gyroscope data segments are used as the network inputs. Four one-dimensional convolutional layers with ReLU activation extract feature maps from the input layer. A max-pooling layer is then added to summarize the feature maps produced by the convolutional layers and reduce the computational cost. After the size of the feature maps has been reduced, their dimensionality must also be reduced so that the LSTM network can operate on them. For this reason, a flatten layer converts the matrix representation of each feature map into a vector. In addition, dropout is inserted on top of the pooling layer to decrease the risk of overfitting.

The output of the pooling layer is processed by an LSTM layer after dropout is applied. This layer models the temporal dynamics of the activations in the feature maps. The final stage is a fully connected layer followed by a SoftMax layer that returns the classification. Hyperparameters such as the number of filters, kernel size, pool size, and dropout ratio were determined by Bayesian optimization, as shown in Figure 3.
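To make the effect of the convolution, pooling, and flatten stages concrete, the sketch below traces tensor shapes through that part of the network. It is not the authors' model. The filter count (64), kernel size (3), pool size (2), and unpadded convolutions are placeholder assumptions, since the actual values were selected by Bayesian optimization. Only the 128-sample, six-channel input and the four convolutional layers come from the text.

```python
def conv1d_output_length(length, kernel_size, stride=1):
    """Output length of a 1-D convolution without padding ('valid')."""
    return (length - kernel_size) // stride + 1

def trace_shapes(time_steps=128, channels=6, n_conv=4,
                 filters=64, kernel_size=3, pool_size=2):
    """Return (layer name, output shape) pairs up to the flatten stage."""
    shapes = [("input", (time_steps, channels))]
    length = time_steps
    for i in range(1, n_conv + 1):
        length = conv1d_output_length(length, kernel_size)
        shapes.append((f"conv1d_{i} + ReLU", (length, filters)))
    length = length // pool_size              # non-overlapping max pooling
    shapes.append(("max_pooling", (length, filters)))
    shapes.append(("flatten", (length * filters,)))
    return shapes

shapes = trace_shapes()
for name, shape in shapes:
    print(f"{name:18s} {shape}")
```

Under these assumed settings the time axis shrinks from 128 to 120 steps over the four convolutions and to 60 after pooling, and flattening yields 3840 values. Dropout leaves the shapes unchanged, so it is omitted from the trace.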

3.3. Tuning Hyperparameter by Bayesian Optimization

Hyperparameters are essential for DL approaches since they directly control the behavior of training algorithms and have a crucial effect on the performance of DL models. Bayesian optimization is a practical approach for finding the extrema of a function. It is particularly suited to objective functions that have no closed-form expression, are expensive to compute, have derivatives that are hard to evaluate, or are non-convex. In this work, the optimization goal is to find the sampling point at which an unknown function $f$ attains its maximum value:

$$x^{*} = \arg\max_{x \in A} f(x)$$

where $A$ represents the search space of $x$. Bayesian optimization is derived from Bayes' theorem: given evidence data $E$, the posterior probability $P(M|E)$ of a model $M$ is proportional to the likelihood $P(E|M)$ of observing $E$ given model $M$ multiplied by the prior probability $P(M)$:

$$P(M|E) \propto P(E|M)\,P(M)$$

Bayesian optimization has recently become popular for tuning deep network hyperparameters because of its capability to handle multi-parameter problems with expensive objective functions when the first-order derivative is not available.
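The loop behind Bayesian optimization can be sketched in one dimension as follows. This is an illustrative example, not the procedure used in this study. The Gaussian-process surrogate with a squared-exponential kernel, the expected-improvement acquisition function, and `toy_objective` (a stand-in for validation accuracy as a function of a single hyperparameter) are all assumptions, because the text does not specify the surrogate or acquisition function. NumPy is assumed to be available.

```python
import math
import numpy as np

def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between two 1-D arrays of points."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)

def gp_posterior(x_obs, y_obs, x_query, noise=1e-4):
    """Posterior mean and standard deviation of a GP surrogate at x_query."""
    y_mean = y_obs.mean()
    k_oo = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    k_oq = rbf_kernel(x_obs, x_query)
    solved = np.linalg.solve(k_oo, k_oq)             # K^-1 k(x_obs, x_query)
    mean = y_mean + solved.T @ (y_obs - y_mean)
    var = 1.0 - np.sum(k_oq * solved, axis=0)        # prior variance is 1
    return mean, np.sqrt(np.clip(var, 1e-12, None))

def expected_improvement(mean, std, best):
    """Expected improvement over the best observed value (maximization)."""
    z = (mean - best) / std
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    return (mean - best) * cdf + std * pdf

def bayes_opt(f, low, high, n_init=3, n_iter=12, seed=0):
    """Maximize f over [low, high]: fit the surrogate, pick the EI maximizer, evaluate."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(low, high, 201)               # candidate points in A
    x_obs = rng.uniform(low, high, n_init)
    y_obs = np.array([f(x) for x in x_obs])
    for _ in range(n_iter):
        mean, std = gp_posterior(x_obs, y_obs, grid)
        ei = expected_improvement(mean, std, y_obs.max())
        x_next = grid[np.argmax(ei)]
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, f(x_next))
    best = int(np.argmax(y_obs))
    return float(x_obs[best]), float(y_obs[best])

def toy_objective(x):
    """Hypothetical objective with its maximum at x = 0.3."""
    return -(x - 0.3) ** 2

best_x, best_y = bayes_opt(toy_objective, 0.0, 1.0)
print(best_x, best_y)
```

Each iteration updates the posterior over the objective from the evidence gathered so far, which mirrors the relation $P(M|E) \propto P(E|M)\,P(M)$, and then evaluates the objective only at the most promising candidate. No derivative of the objective is required, which is why the approach suits expensive, black-box objectives such as training a network.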

3.4. Sampling Generation Process and Validation Protocol

The first process in sensor-based HAR is to generate samples from the raw sensor data. The raw sensor data is treated as time-series data and separated into small windows of the same size, called temporal windows. The temporal windows are then divided into training data, used to learn a model, and test data, used to validate the learned model.

There are many strategies for using temporal windows to obtain data segments. The overlapping temporal window (OW) is the most widely used in sensor-based HAR studies: a fixed-size window is slid over the input data sequence to provide training and test samples under a given validation protocol (e.g., 10-fold cross validation). However, this technique is highly biased since there is a 50% overlap between subsequent sliding windows, so windows that share data can appear in both the training and test sets. The non-overlapping temporal window (NOW) prevents this bias. Compared with the OW technique, however, NOW has the disadvantage of producing only a limited number of samples since the temporal windows no longer overlap.

Evaluating the prediction metrics of the trained models is a critical stage of the process. Cross Validation (CV) [48] is used as the standard technique, whereby the data is separated into training and test data. Various approaches, such as leave-one-out, leave-p-out, and k-fold cross validation, can be used to separate the data for training and testing [49,50]. The objective of this process is to evaluate the ability of the learning algorithm to generalize to new data [50].

In sensor-based HAR, the purpose is for a model to generalize to a different subject. The cross-validation protocol should therefore split the data by subject, meaning that the training and testing data contain records of different subjects. This cross-validation protocol is called Leave-One-Subject-Out (LOSO). To create a model, LOSO uses the samples of all subjects except one as the training data. The trained model is then evaluated on the samples of the excluded subject [51].
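The LOSO protocol can be sketched as a generator of train/test index splits. The function `leave_one_subject_out` and the toy subject labels are hypothetical. In UCI-HAR each window would carry the identifier of one of the 30 participants.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test

# Hypothetical subject label for each of six windows
subject_ids = [1, 1, 2, 2, 2, 3]
folds = list(leave_one_subject_out(subject_ids))
for held_out, train, test in folds:
    print(held_out, train, test)
```

Because every window of the held-out subject goes to the test side, overlapping windows from the same recording cannot leak between training and testing within a fold.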

In this work, four combinations of sample generation processes and validation protocols are used to evaluate the recognition performance of the LSTM networks, as shown in Table 2.

3.5. Performance Evaluation Metrics

The LSTM network classifiers employed in HAR can be assessed using several performance indices. Five metrics are chosen for performance evaluation: accuracy, precision, recall, F1-score, and AUC.

The accuracy metric represents the ratio of correctly predicted samples to total samples. It is suitable for assessing classification performance when the data is balanced. The F1-score, on the other hand, is the harmonic mean of precision and recall, so it can be properly applied when the data is imbalanced. The metrics are defined mathematically as follows.

Accuracy is the ratio of all correct predictions to all predictions, summarizing the overall classification performance across all classes:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Precision is the ratio of positive samples classified correctly to all samples classified as positive:

$$\text{Precision} = \frac{TP}{TP + FP}$$

Recall is the ratio of positive samples classified correctly to all actual positive samples:

$$\text{Recall} = \frac{TP}{TP + FN}$$

The F1-score combines the recall value and the precision value into a single value:

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

The Receiver Operating Characteristic (ROC) curve plots the true positive rate (TPR) against the false positive rate (FPR):

$$TPR = \frac{TP}{TP + FN}$$

The false positive rate is the proportion of negative data points that are incorrectly classified as positive, relative to all negative data points:

$$FPR = \frac{FP}{FP + TN}$$

where $TP$ means the number of true positives, $TN$ means the number of true negatives, $FP$ means the number of false positives, and $FN$ means the number of false negatives.

The Area Under the Curve (AUC) not only indicates the overall performance of a classifier but also describes the likelihood that a randomly selected positive case will be ranked higher than a negative case [34].
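The definitions above can be checked with a small worked example. The function `binary_metrics` and the confusion counts are hypothetical and chosen only to show how accuracy and F1-score diverge on imbalanced data. For the six-class HAR problem these quantities would be computed per class (one class against the rest) and then averaged.

```python
def binary_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, recall (TPR), F1-score, and FPR from counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1, "fpr": fpr}

# Hypothetical imbalanced case: 100 positive and 900 negative samples
m = binary_metrics(tp=90, tn=880, fp=20, fn=10)
for name, value in m.items():
    print(f"{name}: {value:.3f}")
```

In this example the accuracy is 0.97 while the F1-score is about 0.857, which illustrates why accuracy alone can overstate performance when one class dominates.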

5. Discussion of Results

The results derived from the experiments presented in Section 4 are the main contributions of this research, and the following discussion is provided to make their implications clear.

The LSTM-based DL networks examined in this study can be employed to accurately classify activities, specifically typical human activities of daily living. The classification process used tri-axial data from both the accelerometers and gyroscopes embedded in smartphones. Classification accuracy and other advanced metrics were considered to evaluate the sensor-based HAR of five LSTM-based DL architectures. A publicly available dataset used in previous studies [25,31,36,45,54] was applied to compare the generalization of the DL algorithms with the 10-fold cross-validation technique.

The UCI-HAR raw smartphone sensor data were evaluated with the Vanilla LSTM network, 2-Stacked LSTM network, 3-Stacked LSTM network, CNN-LSTM network, and the proposed 4-layer convolutional LSTM network (4-layer CNN-LSTM network). First, activity classification based on sensors with raw tri-axial data from both the accelerometer and gyroscope has been demonstrated.

Figure 18a illustrates the process of learning raw sensor data with the Vanilla LSTM network. The loss decreased gradually and the accuracy increased slowly without any apparent instability. This indicates that the network learns appropriately without overfitting problems. The final result shows an average accuracy of 96.54% on the testing set.

Figure 18b shows the training result of the 2-Stacked LSTM network. On the training set, both loss and accuracy converged well and indicate decent performance. On the testing set, however, some fluctuation occurred. Specifically, at epoch 23, fluctuation in both loss and accuracy could clearly be observed. Nevertheless, the final average accuracy is still better than that of the Vanilla LSTM network: on the testing set, the loss was 0.1394 and the average accuracy 97.32%. The training result of the 3-Stacked LSTM network in Figure 18c shows a satisfactory learning process, but the testing curve was significantly unstable, with many fluctuations in the middle and final epochs, and the loss fluctuated from epoch to epoch. The curves therefore look worse when plotted. Nonetheless, the results on the actual testing set were not adversely affected, with a loss of 0.189 and an accuracy of 96.59%. In Figure 18d, the training process of the CNN-LSTM network also shows unstable parts on the validation set. On the other hand, the CNN-LSTM network achieved 98.49% on the testing set, which is higher than the baseline LSTM models, including the 2-Stacked LSTM network and 3-Stacked LSTM network.

Figure 19 shows the learning process of the proposed 4-layer CNN-LSTM. In the testing set, with the epoch at 50, the loss rate decreased significantly and the accuracy increased significantly. Both the loss rate and accuracy quickly stabilized. Finally, the accuracy was 99.39% in the testing set.

The classification results derived in this study reveal that hybrid DL architectures can improve on the prediction performance of the baseline LSTM. Combining two DL architectures (CNN and LSTM) could be the dominant reason for the increased scores, since the CNN-LSTM network produced higher average accuracy and F1-score than the baseline LSTM networks. Therefore, it can be inferred that the hybrid model delivers the advantages of both CNN and LSTM: extracting local features within short time steps and capturing the temporal structure across a sequence.

A limitation of this study is that the deep learning algorithms are trained and tested using laboratory data. Previous studies have shown that the performance of learning algorithms under laboratory conditions may not accurately reflect their performance in everyday life [55]. Another limitation is that this study does not address transitional behaviors (Sit-to-Stand, Sit-to-Lie, etc.), which are a challenging goal in real-world situations. However, the proposed HAR architecture, with its high-performance deep learning networks, is applicable to many realistic smart home applications, including optimizing human movement in sports, healthcare monitoring and safety surveillance for elderly people, and baby and child care.

6. Conclusions and Future Works

In this study, an LSTM-based framework that provides high performance in addressing the HAR problem is explored. Five LSTM networks were selected to study their recognition performance using different smartphone sensors, i.e., the tri-axial accelerometer and tri-axial gyroscope. These LSTM networks were evaluated on a publicly available dataset called UCI-HAR by considering predictive accuracy and other performance metrics such as precision, recall, F1-score, and AUC. The experimental results show that the 4-layer CNN-LSTM network proposed in this study outperforms the other baseline LSTM networks with a high accuracy of 99.39%. Moreover, the proposed network was compared to previous works, and the 4-layer CNN-LSTM network improved the accuracy by up to 2.24%. The advantage of this model is that the CNN layers directly map raw sensor data to a spatial representation for feature extraction, while the LSTM layers take full advantage of temporal dependencies to significantly improve feature extraction for HAR.

Future work will involve the further development of LSTM models by tuning additional hyperparameters, including regularization, learning rate, batch size, and others. Furthermore, the proposed model could be applied to more complicated activities, addressing other DL and HAR challenges, by evaluating it on other public activity datasets such as OPPORTUNITY and PAMAP2.