Advances in Materials, Sensors, and Integrated Systems for Monitoring Eye Movements

Abstract

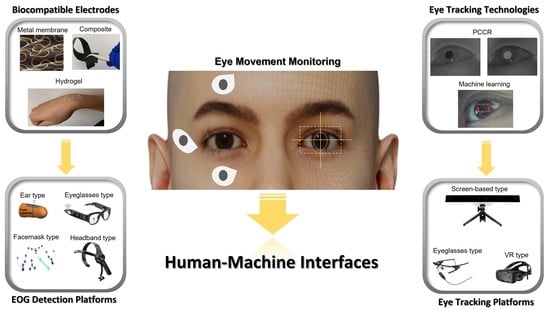

1. Introduction

1.1. Recent Advances in Eye Movement Monitoring

1.2. Electrooculogram-Based Approaches for Human–Machine Interfaces

1.3. Screen-Based Eye Tracking Technology

2. EOG Signals

2.1. Existing Electrodes

2.1.1. Composite Electrodes

2.1.2. Dry Electrodes

| Electrode Type | Conductive Material | Supporting Substrate | Biopotential | Biocompatibility | Stretchability | Bendability | Fabrication | Size | Modulus | Advantage | Refs. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Polymer | CNT | PDMS | EOG, EEG | O | O | O | Mix and curing | 20 × 5 × 5 mm³ | Elasticity 4 MPa | Small change in electrical resistance under mechanical deformation; high signal-to-noise ratio | [5] |
| | Ni/Cu | Urethane foam | EOG, EEG | O | X | X | Assembling metal and foam | 14 × 8 × 8 mm³ | Compression set 5% | Low interference from skin-electrode interface | [38,84] |
| | Ag/AgCl | Parylene | EOG, EEG, EMG | O | X | O | Microfabrication process | 10 × 10 × 0.05 mm³ | - | Ease of thickness control, ultrathin fabrication; fits skin topology well | [100] |
| | Graphene | PDMS | EOG | O | 50% | O | APCVD and coating | 6 × 20 mm² | - | Ultrathin, ultrasoft, transparent, and breathable; angular resolution of 4° of eye movement | [11] |
| Fiber | Graphene | Cotton textile fabrics | EOG | O | X | O | Simple pad-dry technique | 35 × 20 mm² | - | Simple and scalable production method | [104] |
| | Graphene | Textile fabrics | EOG | O | O | O | Dipping and thermal treatment | 30 × 30 mm² | - | Possibility and adaptability for mass manufacturing | [42,57,96] |
| | Silver | Textile fabrics | EOG, EMG | O | X | O | Embroidering | 20 × 20 mm² | - | Comfort and usability with the measurement head cap | [89] |
| | CEF | CEF fibers | EOG, ECG | O | 258.12% | O | Industrial knitting machine | 20 × 20 mm² | Stress 11.99 MPa | Flexible, breathable, and washable dry textile electrodes; unrestricted daily activities | [90] |
| | Silver polymer | Escalade fabric | EOG, EMG | O | O | O | Screen and stencil printing | 12 × 12 × 1 mm³ | - | Textile compatible, relatively low cost for a production line; smaller-scale manufacturing | [105,106] |
| | Copper | Omniphobic paper | EOG, ECG, EMG | O | 58% | O | Razor printer | 20 × 15 mm² | Stress 2.5 MPa | Simple, inexpensive, and scalable fabrication; breathable Ag/AgCl-based EPEDs | [99] |
| | Silver/polyamide | Fabric | EOG | O | O | O | Mix and coating | 10 × 10 mm² | - | Reduction in noise by appropriate contact | [40] |
| Hydrogel | PEGDA/AAm | - | EOG, EEG | O | 2500% | O | PμSL-based 3D printer | 15 × 15 mm² | - | Excellent stability and ultra-stretchability | [98] |
| | Starch | Sodium chloride | EOG | O | 790% | O | Gelation process | 30 × 10 mm² | 4.4 kPa | Adhesion, low modulus, and stretchability; no need for crosslinker or high pressure/temperature | [61] |
| | HPC/PVA | PDMS | EOG | O | 20% | O | Coating | 30 × 10 mm² | 286 kPa | Well-adhered to the dimpled epidermis | [97] |
| | MXene | Polyimide | EOG, EEG, ECG | O | O | O | Mix and sonicating | 20 × 20 mm² | - | Low contact impedance and excellent flexibility | [107] |
| | PDMS-CB | - | EOG | O | O | O | Mix and deposition | 15 × 15 mm² | 2 MPa | Continuous, long-term, stable EOG signal recording | [108] |
| Metal | Silver | Polyimide | EOG | O | 100% | O | Microfabrication process | 10 × 10 mm² | - | Highly stretchable, skin-like biopotential electrodes | [30] |
| | Gold | Polyimide | EOG | O | 30% | O | Microfabrication process | 15 × 10 mm² | 78 GPa | Comfortable, easy-to-use, and wireless control | [1] |

2.2. Examples of Platforms for EOG Monitoring

2.2.1. Eyeglass Type

| Wearable Platform | Electrode Type | Electrode Material | Electrode Count | Platform Size | Platform Features | Refs. |
|---|---|---|---|---|---|---|
| Earplug | Foam | Silver | 2 | 2 × 2 × 1 mm³ | Stable and comfortable during sleep | [44,45] |
| | Foam | Conductive cloth | 2 | 2 × 2 × 1 mm³ | Stable and comfortable during sleep | [43] |
| Eyeglass | Gel | Ag/AgCl | 6 | 15 × 14 × 5 cm³ | Many wires attached | [37] |
| | Metal | Silver | 3 | 15 × 14 × 5 cm³ | Real-time delivery of auditory feedback | [32,33,117] |
| | Metal | Ag/AgCl | 5 | 15 × 14 × 7 cm³, 150 g | Constant pressure for electrodes | [34,116] |
| | Foam | CNT/PDMS | 5 | 15 × 14 × 5 cm³ | UV protection via sunglass lens | [5] |
| | Foam | Ni/Cu | 5 | 14 × 12 × 7 cm³ | Motion force absorbed by the foam and platform | [38] |
| Facemask | Fiber | Silver/polyamide | 3 | 14 × 7 × 2 cm³ | Wires are embedded in the eye mask platform | [40] |
| | Metal | Silver/carbon | 8 | 20 × 15 cm² | Tattoo-based platform; stable and comfortable | [41] |
| | Fiber | Graphene | 5 | 15 × 7 × 2 cm³ | High degree of flexibility and elasticity | [42] |
| Headband | Gel | Ag/AgCl | 4 | 15 × 7 cm² | Waveforms were well measured on the headband platform | [51] |
| | Metal | Ag/AgCl | 4 | 15 × 7 cm² | Reduction in total cost by using disposable Ag/AgCl medical electrodes | [55] |
| | Fiber | Graphene | 3 | 15 × 7 cm² | Long-term EOG monitoring applications | [21,57,96] |
| | Fiber | Silver | 5 | 15 × 7 cm² | Reusable and easy-to-use electrodes integrated into the cap | [89] |
| | Fiber | Silver-plated and nylon | 3 | 15 × 7 cm² | Long-term EOG monitoring applications | [58] |

2.2.2. Facemask Type

2.2.3. Headband Type

2.2.4. Earplug Type

2.3. Signal Processing Algorithms and Applications

2.3.1. EOG Signal Processing

2.3.2. Machine Learning

2.3.3. Applications

3. Eye Trackers

3.1. Details of Eye Trackers

3.1.1. Human Eye Movement and Stimuli

3.1.2. Principles of Eye Tracking Technology

3.1.3. Employment of Eye Tracking Technologies for Applications

3.2. Eye Gaze and Movement Estimation

3.2.1. Eye Tracking Techniques and Algorithm

- Pupil Center-Corneal Reflection (PCCR) and Bright and Dark Pupil Effect

- Time to First Fixation and Object of Interest

3.2.2. Visualization and Analysis of Eye Movements

- Gaze Mapping and IR Technology

- Heatmaps

- Area of Interest (AOI) and Dwell Time
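As a hedged illustration of the AOI-based metrics named in this section and in the abbreviation list (TTFF, FFD, TFD, FC), the following minimal Python sketch computes them for a rectangular AOI from a list of fixations. The `Fixation` and `AOI` types, the field names, and the choice of a rectangular AOI are assumptions made for this example and do not come from any specific eye tracker's software. Timestamps are assumed to be measured from stimulus onset, and dwell time is approximated here by the total fixation duration inside the AOI; other definitions also count the saccades made within a visit.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Fixation:
    """One detected fixation (hypothetical record layout)."""
    start_ms: float      # onset time relative to stimulus onset
    duration_ms: float   # how long the gaze stayed at (x, y)
    x: float             # gaze position in screen pixels
    y: float


@dataclass(frozen=True)
class AOI:
    """Axis-aligned rectangular area of interest in screen pixels."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def aoi_metrics(fixations: Iterable[Fixation], aoi: AOI) -> dict:
    """Return TTFF, FFD, TFD, and FC for one AOI.

    TTFF: onset of the first fixation that lands inside the AOI.
    FFD:  duration of that first fixation.
    TFD:  summed duration of all fixations inside the AOI
          (used here as a simple stand-in for dwell time).
    FC:   number of fixations inside the AOI.
    """
    ordered = sorted(fixations, key=lambda f: f.start_ms)
    hits = [f for f in ordered if aoi.contains(f.x, f.y)]
    if not hits:
        ttff: Optional[float] = None
        return {"TTFF": ttff, "FFD": None, "TFD": 0.0, "FC": 0}
    return {
        "TTFF": hits[0].start_ms,
        "FFD": hits[0].duration_ms,
        "TFD": sum(f.duration_ms for f in hits),
        "FC": len(hits),
    }
```

A heatmap can be derived from the same fixation list by accumulating `duration_ms` into a 2D grid at each `(x, y)`, so the per-AOI numbers and the heatmap stay consistent with each other.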

3.3. Eye Tracking Platforms

3.3.1. Screen-Based

3.3.2. Glasses Type

3.3.3. Virtual Reality (VR)

3.4. Applications

3.4.1. Cognitive Behavior and Human Recognition

3.4.2. Contents

3.4.3. Guided Operation

| Application Area | Application | Target User | Platform | Device Info. | Gaze Detection | Processing Method | Refs. |
|---|---|---|---|---|---|---|---|
| Cognitive Recognition | Autism | Infant | Screen-based | ISCAN, Inc. | Dark pupil tracking; corneal reflection | Customized (eye position and fixation data identification with MATLAB) | [153] |
| | Impact of slippage | Any mobile user | Eyeglasses | Tobii; SMI; Pupil Labs | PCCR; dark pupil tracking; corneal reflection | Commercial (Tobii Pro: process with two cameras and six glints per eye; iViewETG: three makers tracking from SMI); customized (EyeRecToo: open-source pupil Grip algorithm) | [160] |
| | Effects of mobile phone use on gaze behavior in stair climbing | Any mobile user | Eyeglasses | Tobii Glasses 2.0 | PCCR; corneal reflection | Customized (frame-by-frame image classification with MATLAB) | [192] |
| | Diagnosis and measurement of strabismus | Children | Screen-based | EyeTracker 4C | PCCR; corneal reflection | Customized (EyeSwift: IR and image processing) | [190] |
| | Measurement of nine gaze directions | Patient with strabismus | Screen-based | OMD | Pupil and corneal reflection | Customized (Hess screen test) | [182] |
| | ADHD | ADHD patient | Screen-based | EyeLink 1000 | Dark pupil tracking; corneal reflection | Customized (MOXO-dCPT stimuli, AOI and relative gaze analysis) | [133] |
| | ADHD | ADHD patient | Screen-based | TX300 | PCCR | Customized (logistic regression classification model for pupil analysis) | [191] |
| | Measurement of pupil size artefact (PSA) | Any mobile user | Screen-based | EyeLink 1000 Plus, Tobii Pro Spectrum (Glasses 2) | Dark pupil tracking; corneal reflection | Commercial (Tobii Pro: process with two cameras and six glints per eye) | [186] |
| | Comparison study of EXITs design in a wayfinding system | Any mobile user | Eyeglasses | Tobii Glasses | PCCR | Customized (custom IR marker, AOI analysis) | [73] |
| Contents Creation | Artistic drawing | Graphic users, including people with disabilities | Screen-based | Tobii Eye Tracker 4C | PCCR | Commercial (Tobii Pro: process with two cameras and six glints per eye) | [193] |
| | Enhancing imagery base maps | Map user | Screen-based | Tobii Pro Spectrum | PCCR | Commercial (Tobii Pro: process with two cameras and six glints per eye); customized (AOI statistics and heatmap) | [158] |
| Guided operation supportive guidance | Semi-autonomous vehicles | Driver | Screen-based | faceLAB | Pupil tracking | Customized (Markov model, pattern analysis) | [134] |
| | Capturing joint visual attention | Co-located collaborative learning groups | Eyeglasses | SMI ETG | Pupil/CR; dark pupil tracking | Commercial (fiducial tracking engine) | [196] |
| | Human-robot interaction for laparoscopic surgery | Surgeon | Screen-based | Tobii 1750 | PCCR | Customized (hidden Markov model) | [13] |
| | Surgical skills assessment and training in urology | Surgeon | Eyeglasses + VR | Tobii Glasses 2.0 | PCCR | Commercial (UroMentor simulator) | [198] |
| | Architectural education | Ordinary users, students, and lecturers | Eyeglasses; screen-based; VR | Tobii; SMI | PCCR; pupil/CR; dark pupil tracking | Commercial (BeGaze software) | [151] |
| | Education | Student | VR | Self-made “VR eye tracker” | Recording the condition of the pupils via infrared LED light | Customized (analysis of regions of interest) | [188] |

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADHD | attention deficit hyperactivity disorder |
| AJP | aerosol jet printing |
| AOI | area of interest |
| BCI | brain–computer interface |
| CEFs | conductive elastomeric filaments |
| CNN | convolutional neural network |
| CNTs | carbon nanotubes |
| CVD | chemical vapor deposition |
| DI | deionized |
| DWT | discrete wavelet transform |
| ECG | electrocardiogram |
| EEG | electroencephalogram |
| EMG | electromyography |
| EOG | electrooculogram |
| EPEDs | epidermal paper-based electronic devices |
| FC | fixation count |
| FFD | first fixation duration |
| GO | graphene oxide |
| HCI | human–computer interaction |
| HMI | human–machine interface |
| HPC | hydroxypropyl cellulose |
| IPD | inter-pupillary distance |
| IR | infrared |
| LDA | linear discriminant analysis |
| MR | mixed reality |
| PCBs | printed circuit boards |
| PCCR | pupil center-corneal reflection |
| PDMS | polydimethylsiloxane |
| PMMA | poly(methyl methacrylate) |
| POG | point of gaze |
| PVA | polyvinyl alcohol |
| rGO | reduced graphene oxide |
| SNR | signal-to-noise ratio |
| SVM | support vector machine |
| TFD | total fixation duration |
| TTFF | time to first fixation |
| UV | ultraviolet |
| VR | virtual reality |

References

1. Mishra, S.; Norton, J.J.S.; Lee, Y.; Lee, D.S.; Agee, N.; Chen, Y.; Chun, Y.; Yeo, W.H. Soft, conformal bioelectronics for a wireless human-wheelchair interface. Biosens. Bioelectron. 2017, 91, 796–803.

2. Mahmood, M.; Kim, N.; Mahmood, M.; Kim, H.; Kim, H.; Rodeheaver, N.; Sang, M.; Yu, K.J.; Yeo, W.-H. VR-enabled portable brain-computer interfaces via wireless soft bioelectronics. Biosens. Bioelectron. 2022, 210, 114333.

3. Lim, J.Z.; Mountstephens, J.; Teo, J. Emotion recognition using eye-tracking: Taxonomy, review and current challenges. Sensors 2020, 20, 2384.

4. Choudhari, A.M.; Porwal, P.; Jonnalagedda, V.; Mériaudeau, F. An electrooculography based human machine interface for wheelchair control. Biocybern. Biomed. Eng. 2019, 39, 673–685.

5. Lee, J.H.; Kim, H.; Hwang, J.-Y.; Chung, J.; Jang, T.-M.; Seo, D.G.; Gao, Y.; Lee, J.; Park, H.; Lee, S. 3D printed, customizable, and multifunctional smart electronic eyeglasses for wearable healthcare systems and human–machine Interfaces. ACS Appl. Mater. Interfaces 2020, 12, 21424–21432.

6. Goverdovsky, V.; Von Rosenberg, W.; Nakamura, T.; Looney, D.; Sharp, D.J.; Papavassiliou, C.; Morrell, M.J.; Mandic, D.P. Hearables: Multimodal physiological in-ear sensing. Sci. Rep. 2017, 7, 1–10.

7. Miettinen, T.; Myllymaa, K.; Hukkanen, T.; Töyräs, J.; Sipilä, K.; Myllymaa, S. A new solution to major limitation of HSAT: Wearable printed sensor for sleep quantification and comorbid detection. Sleep Med. 2019, 64, S270–S271.

8. Yeo, W.H.; Kim, Y.S.; Lee, J.; Ameen, A.; Shi, L.; Li, M.; Wang, S.; Ma, R.; Jin, S.H.; Kang, Z. Multifunctional epidermal electronics printed directly onto the skin. Adv. Mater. 2013, 25, 2773–2778.

9. Wang, L.; Zhang, M.; Yang, B.; Ding, X.; Tan, J.; Song, S.; Nie, J. Flexible, robust, and durable aramid fiber/CNT composite paper as a multifunctional sensor for wearable applications. ACS Appl. Mater. Interfaces 2021, 13, 5486–5497.

10. Kabiri Ameri, S.; Ho, R.; Jang, H.; Tao, L.; Wang, Y.; Wang, L.; Schnyer, D.M.; Akinwande, D.; Lu, N. Graphene electronic tattoo sensors. ACS Nano 2017, 11, 7634–7641.

11. Ameri, S.K.; Kim, M.; Kuang, I.A.; Perera, W.K.; Alshiekh, M.; Jeong, H.; Topcu, U.; Akinwande, D.; Lu, N. Imperceptible electrooculography graphene sensor system for human–robot interface. npj 2D Mater. Appl. 2018, 2, 1–7.

12. Chen, C.-H.; Monroy, C.; Houston, D.M.; Yu, C. Using head-mounted eye-trackers to study sensory-motor dynamics of coordinated attention. Prog. Brain Res. 2020, 254, 71–88.

13. Fujii, K.; Gras, G.; Salerno, A.; Yang, G.Z. Gaze gesture based human robot interaction for laparoscopic surgery. Med. Image Anal. 2018, 44, 196–214.

14. Soler-Dominguez, J.L.; Camba, J.D.; Contero, M.; Alcañiz, M. A proposal for the selection of eye-tracking metrics for the implementation of adaptive gameplay in virtual reality based games. In Proceedings of the International Conference on Virtual, Augmented and Mixed Reality, Vancouver, BC, Canada, 9–14 July 2017; pp. 369–380.

15. Ou, W.-L.; Kuo, T.-L.; Chang, C.-C.; Fan, C.-P. Deep-learning-based pupil center detection and tracking technology for visible-light wearable gaze tracking devices. Appl. Sci. 2021, 11, 851.

16. Barea, R.; Boquete, L.; Mazo, M.; López, E. System for assisted mobility using eye movements based on electrooculography. IEEE Trans. Neural Syst. Rehabil. Eng. 2002, 10, 209–218.

17. Barea, R.; Boquete, L.; Mazo, M.; López, E. Wheelchair guidance strategies using EOG. J. Intell. Robot. Syst. 2002, 34, 279–299.

18. Krishnan, A.; Rozylowicz, K.F.; Weigle, H.; Kelly, S.; Grover, P. Hydrophilic Conductive Sponge Electrodes For EEG Monitoring; Sandia National Lab. (SNL-NM): Albuquerque, NM, USA, 2020.

19. Xu, T.; Li, X.; Liang, Z.; Amar, V.S.; Huang, R.; Shende, R.V.; Fong, H. Carbon nanofibrous sponge made from hydrothermally generated biochar and electrospun polymer nanofibers. Adv. Fiber Mater. 2020, 2, 74–84.

20. Lin, S.; Liu, J.; Li, W.; Wang, D.; Huang, Y.; Jia, C.; Li, Z.; Murtaza, M.; Wang, H.; Song, J. A flexible, robust, and gel-free electroencephalogram electrode for noninvasive brain-computer interfaces. Nano Lett. 2019, 19, 6853–6861.

21. Acar, G.; Ozturk, O.; Golparvar, A.J.; Elboshra, T.A.; Böhringer, K.; Yapici, M.K. Wearable and flexible textile electrodes for biopotential signal monitoring: A review. Electronics 2019, 8, 479.

22. Lee, J.; Pyo, S.; Kwon, D.S.; Jo, E.; Kim, W.; Kim, J. Ultrasensitive strain sensor based on separation of overlapped carbon nanotubes. Small 2019, 15, 1805120.

23. Wan, X.; Zhang, F.; Liu, Y.; Leng, J. CNT-based electro-responsive shape memory functionalized 3D printed nanocomposites for liquid sensors. Carbon 2019, 155, 77–87.

24. Sang, Z.; Ke, K.; Manas-Zloczower, I. Design strategy for porous composites aimed at pressure sensor application. Small 2019, 15, 1903487.

25. Zhang, S.; Sun, K.; Liu, H.; Chen, X.; Zheng, Y.; Shi, X.; Zhang, D.; Mi, L.; Liu, C.; Shen, C. Enhanced piezoresistive performance of conductive WPU/CNT composite foam through incorporating brittle cellulose nanocrystal. Chem. Eng. J. 2020, 387, 124045.

26. Zhou, C.-G.; Sun, W.-J.; Jia, L.-C.; Xu, L.; Dai, K.; Yan, D.-X.; Li, Z.-M. Highly stretchable and sensitive strain sensor with porous segregated conductive network. ACS Appl. Mater. Interfaces 2019, 11, 37094–37102.

27. Cai, J.-H.; Li, J.; Chen, X.-D.; Wang, M. Multifunctional polydimethylsiloxane foam with multi-walled carbon nanotube and thermo-expandable microsphere for temperature sensing, microwave shielding and piezoresistive sensor. Chem. Eng. J. 2020, 393, 124805.

28. Lee, S.M.; Byeon, H.J.; Lee, J.H.; Baek, D.H.; Lee, K.H.; Hong, J.S.; Lee, S.-H. Self-adhesive epidermal carbon nanotube electronics for tether-free long-term continuous recording of biosignals. Sci. Rep. 2014, 4, 1–9.

29. Cai, Y.; Shen, J.; Ge, G.; Zhang, Y.; Jin, W.; Huang, W.; Shao, J.; Yang, J.; Dong, X. Stretchable Ti3C2Tx MXene/carbon nanotube composite based strain sensor with ultrahigh sensitivity and tunable sensing range. ACS Nano 2018, 12, 56–62.

30. Mishra, S.; Kim, Y.-S.; Intarasirisawat, J.; Kwon, Y.-T.; Lee, Y.; Mahmood, M.; Lim, H.-R.; Herbert, R.; Yu, K.J.; Ang, C.S. Soft, wireless periocular wearable electronics for real-time detection of eye vergence in a virtual reality toward mobile eye therapies. Sci. Adv. 2020, 6, eaay1729.

31. Kosmyna, N.; Morris, C.; Sarawgi, U.; Nguyen, T.; Maes, P. AttentivU: A wearable pair of EEG and EOG glasses for real-time physiological processing. In Proceedings of the 2019 IEEE 16th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Chicago, IL, USA, 19–22 May 2019; pp. 1–4.

32. Kosmyna, N.; Morris, C.; Nguyen, T.; Zepf, S.; Hernandez, J.; Maes, P. AttentivU: Designing EEG and EOG compatible glasses for physiological sensing and feedback in the car. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Utrecht, The Netherlands, 21–25 September 2019; pp. 355–368.

33. Kosmyna, N.; Sarawgi, U.; Maes, P. AttentivU: Evaluating the feasibility of biofeedback glasses to monitor and improve attention. In Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers, Singapore, 8–12 October 2018; pp. 999–1005.

34. Hosni, S.M.; Shedeed, H.A.; Mabrouk, M.S.; Tolba, M.F. EEG-EOG based virtual keyboard: Toward hybrid brain computer interface. Neuroinformatics 2019, 17, 323–341.

35. Vourvopoulos, A.; Niforatos, E.; Giannakos, M. EEGlass: An EEG-eyeware prototype for ubiquitous brain-computer interaction. In Proceedings of the Adjunct Proceedings of the 2019 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2019 ACM International Symposium on Wearable Computers, London, UK, 9–13 September 2019; pp. 647–652.

36. Diaz-Romero, D.J.; Rincón, A.M.R.; Miguel-Cruz, A.; Yee, N.; Stroulia, E. Recognizing emotional states with wearables while playing a serious game. IEEE Trans. Instrum. Meas. 2021, 70, 1–12.

37. Pérez-Reynoso, F.D.; Rodríguez-Guerrero, L.; Salgado-Ramírez, J.C.; Ortega-Palacios, R. Human–Machine Interface: Multiclass Classification by Machine Learning on 1D EOG Signals for the Control of an Omnidirectional Robot. Sensors 2021, 21, 5882.

38. Lin, C.T.; Jiang, W.L.; Chen, S.F.; Huang, K.C.; Liao, L.D. Design of a Wearable Eye-Movement Detection System Based on Electrooculography Signals and Its Experimental Validation. Biosensors 2021, 11, 343.

39. Díaz, D.; Yee, N.; Daum, C.; Stroulia, E.; Liu, L. Activity classification in independent living environment with JINS MEME Eyewear. In Proceedings of the 2018 IEEE International Conference on Pervasive Computing and Communications (PerCom), Athens, Greece, 19–23 March 2018; pp. 1–9.

40. Liang, S.-F.; Kuo, C.-E.; Lee, Y.-C.; Lin, W.-C.; Liu, Y.-C.; Chen, P.-Y.; Cherng, F.-Y.; Shaw, F.-Z. Development of an EOG-based automatic sleep-monitoring eye mask. IEEE Trans. Instrum. Meas. 2015, 64, 2977–2985.

41. Shustak, S.; Inzelberg, L.; Steinberg, S.; Rand, D.; Pur, M.D.; Hillel, I.; Katzav, S.; Fahoum, F.; De Vos, M.; Mirelman, A. Home monitoring of sleep with a temporary-tattoo EEG, EOG and EMG electrode array: A feasibility study. J. Neural Eng. 2019, 16, 026024.

42. Garcia, F.; Junior, J.J.A.M.; Freitas, M.L.B.; Stevan, S.L., Jr. Wearable Device for EMG and EOG acquisition. J. Appl. Instrum. Control 2019, 6, 30–35.

43. Nakamura, T.; Alqurashi, Y.D.; Morrell, M.J.; Mandic, D.P. Automatic detection of drowsiness using in-ear EEG. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–6.

44. Nguyen, A.; Alqurashi, R.; Raghebi, Z.; Banaei-Kashani, F.; Halbower, A.C.; Dinh, T.; Vu, T. In-ear biosignal recording system: A wearable for automatic whole-night sleep staging. In Proceedings of the 2016 Workshop on Wearable Systems and Applications, Singapore, 30 June 2016; pp. 19–24.

45. Nguyen, A.; Alqurashi, R.; Raghebi, Z.; Banaei-Kashani, F.; Halbower, A.C.; Vu, T. A lightweight and inexpensive in-ear sensing system for automatic whole-night sleep stage monitoring. In Proceedings of the 14th ACM Conference on Embedded Network Sensor Systems CD-ROM, Stanford, CA, USA, 14–16 November 2016; pp. 230–244.

46. Manabe, H.; Fukumoto, M.; Yagi, T. Conductive rubber electrodes for earphone-based eye gesture input interface. Pers. Ubiquitous Comput. 2015, 19, 143–154.

47. Wang, K.-J.; Zhang, A.; You, K.; Chen, F.; Liu, Q.; Liu, Y.; Li, Z.; Tung, H.-W.; Mao, Z.-H. Ergonomic and Human-Centered Design of Wearable Gaming Controller Using Eye Movements and Facial Expressions. In Proceedings of the 2018 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-TW), Taichung, Taiwan, 19–21 May 2018; pp. 1–5.

48. English, E.; Hung, A.; Kesten, E.; Latulipe, D.; Jin, Z. EyePhone: A mobile EOG-based human-computer interface for assistive healthcare. In Proceedings of the 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER), San Diego, CA, USA, 6–8 November 2013; pp. 105–108.

49. Jadhav, N.K.; Momin, B.F. An Approach Towards Brain Controlled System Using EEG Headband and Eye Blink Pattern. In Proceedings of the 2018 3rd International Conference for Convergence in Technology (I2CT), Pune, India, 6–8 April 2018; pp. 1–5.

50. Minati, L.; Yoshimura, N.; Koike, Y. Hybrid control of a vision-guided robot arm by EOG, EMG, EEG biosignals and head movement acquired via a consumer-grade wearable device. IEEE Access 2016, 4, 9528–9541.

51. Heo, J.; Yoon, H.; Park, K.S. A Novel Wearable Forehead EOG Measurement System for Human Computer Interfaces. Sensors 2017, 17, 1485.

52. Wei, L.; Hu, H.; Yuan, K. Use of forehead bio-signals for controlling an intelligent wheelchair. In Proceedings of the 2008 IEEE International Conference on Robotics and Biomimetics, Bangkok, Thailand, 22–25 February 2009; pp. 108–113.

53. Shyamkumar, P.; Oh, S.; Banerjee, N.; Varadan, V.K. A wearable remote brain machine interface using smartphones and the mobile network. Adv. Sci. Technol. 2013, 85, 11–16.

54. Roh, T.; Song, K.; Cho, H.; Shin, D.; Yoo, H.-J. A wearable neuro-feedback system with EEG-based mental status monitoring and transcranial electrical stimulation. IEEE Trans. Biomed. Circuits Syst. 2014, 8, 755–764.

55. Tabal, K.M.; Cruz, J.D. Development of low-cost embedded-based electrooculogram blink pulse classifier for drowsiness detection system. In Proceedings of the 2017 IEEE 13th International Colloquium on Signal Processing & its Applications (CSPA), Penang, Malaysia, 10–12 March 2017; pp. 29–34.

56. Ramasamy, M.; Oh, S.; Harbaugh, R.; Varadan, V. Real Time Monitoring of Driver Drowsiness and Alertness by Textile Based Nanosensors and Wireless Communication Platform. 2014. Available online: https://efermat.github.io/articles/Varadan-ART-2014-Vol1-Jan_Feb-004/ (accessed on 19 October 2022).

57. Golparvar, A.J.; Yapici, M.K. Graphene smart textile-based wearable eye movement sensor for electro-ocular control and interaction with objects. J. Electrochem. Soc. 2019, 166, B3184.

58. Arnin, J.; Anopas, D.; Horapong, M.; Triponyuwasi, P.; Yamsa-ard, T.; Iampetch, S.; Wongsawat, Y. Wireless-based portable EEG-EOG monitoring for real time drowsiness detection. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 4977–4980.

59. Chen, C.; Zhou, P.; Belkacem, A.N.; Lu, L.; Xu, R.; Wang, X.; Tan, W.; Qiao, Z.; Li, P.; Gao, Q. Quadcopter robot control based on hybrid brain–computer interface system. Sens. Mater. 2020, 32, 991–1004.

60. López, A.; Fernández, M.; Rodríguez, H.; Ferrero, F.; Postolache, O. Development of an EOG-based system to control a serious game. Measurement 2018, 127, 481–488.

61. Wan, S.; Wu, N.; Ye, Y.; Li, S.; Huang, H.; Chen, L.; Bi, H.; Sun, L. Highly Stretchable Starch Hydrogel Wearable Patch for Electrooculographic Signal Detection and Human–Machine Interaction. Small Struct. 2021, 2, 2100105.

62. O’Bard, B.; Larson, A.; Herrera, J.; Nega, D.; George, K. Electrooculography based iOS controller for individuals with quadriplegia or neurodegenerative disease. In Proceedings of the 2017 IEEE International Conference on Healthcare Informatics (ICHI), Park City, UT, USA, 23–26 August 2017; pp. 101–106.

63. Jiao, Y.; Deng, Y.; Luo, Y.; Lu, B.-L. Driver sleepiness detection from EEG and EOG signals using GAN and LSTM networks. Neurocomputing 2020, 408, 100–111.

64. Sho’ouri, N. EOG biofeedback protocol based on selecting distinctive features to treat or reduce ADHD symptoms. Biomed. Signal Process. Control 2022, 71, 102748.

65. Latifoğlu, F.; Esas, M.Y.; Demirci, E. Diagnosis of attention-deficit hyperactivity disorder using EOG signals: A new approach. Biomed. Eng. Biomed. Tech. 2020, 65, 149–164.

66. Ayoubipour, S.; Hekmati, H.; Sho’ouri, N. Analysis of EOG signals related to ADHD and healthy children using wavelet transform. In Proceedings of the 2020 27th National and 5th International Iranian Conference on Biomedical Engineering (ICBME), Tehran, Iran, 26–27 November 2020; pp. 294–297.

67. Singh, H.; Singh, J. Human eye tracking and related issues: A review. Int. J. Sci. Res. Publ. 2012, 2, 1–9.

68. Cognolato, M.; Atzori, M.; Müller, H. Head-mounted eye gaze tracking devices: An overview of modern devices and recent advances. J. Rehabil. Assist. Technol. Eng. 2018, 5, 2055668318773991.

69. Krafka, K.; Khosla, A.; Kellnhofer, P.; Kannan, H.; Bhandarkar, S.; Matusik, W.; Torralba, A. Eye tracking for everyone. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2176–2184.

70. Fabio, R.A.; Giannatiempo, S.; Semino, M.; Caprì, T. Longitudinal cognitive rehabilitation applied with eye-tracker for patients with Rett Syndrome. Res. Dev. Disabil. 2021, 111, 103891.

71. Hansen, D.W.; Ji, Q. In the eye of the beholder: A survey of models for eyes and gaze. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 478–500.

72. Oyekoya, O. Eye Tracking: A Perceptual Interface for Content Based Image Retrieval; University of London, University College London (United Kingdom): London, UK, 2007.

73. Zhang, Y.; Zheng, X.; Hong, W.; Mou, X. A comparison study of stationary and mobile eye tracking on EXITs design in a wayfinding system. In Proceedings of the 2015 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA), Hong Kong, 16–19 December 2015; pp. 649–653.

74. Płużyczka, M. The first hundred years: A history of eye tracking as a research method. Appl. Linguist. Pap. 2018, 4, 101–116.

75. Stuart, S.; Hickey, A.; Galna, B.; Lord, S.; Rochester, L.; Godfrey, A. iTrack: Instrumented mobile electrooculography (EOG) eye-tracking in older adults and Parkinson’s disease. Physiol. Meas. 2016, 38, N16.

76. Boukadoum, A.; Ktonas, P. EOG-Based Recording and Automated Detection of Sleep Rapid Eye Movements: A Critical Review, and Some Recommendations. Psychophysiology 1986, 23, 598–611.

77. Lam, R.W.; Beattie, C.W.; Buchanan, A.; Remick, R.A.; Zis, A.P. Low electrooculographic ratios in patients with seasonal affective disorder. Am. J. Psychiatry 1991, 148, 1526–1529.

78. Bour, L.; Ongerboer de Visser, B.; Aramideh, M.; Speelman, J. Origin of eye and eyelid movements during blinking. Mov. Disord. 2002, 17, S30–S32.

79. Yamagishi, K.; Hori, J.; Miyakawa, M. Development of EOG-based communication system controlled by eight-directional eye movements. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006; pp. 2574–2577.

80. Magosso, E.; Ursino, M.; Zaniboni, A.; Provini, F.; Montagna, P. Visual and computer-based detection of slow eye movements in overnight and 24-h EOG recordings. Clin. Neurophysiol. 2007, 118, 1122–1133.

81. Yazicioglu, R.F.; Torfs, T.; Merken, P.; Penders, J.; Leonov, V.; Puers, R.; Gyselinckx, B.; Van Hoof, C. Ultra-low-power biopotential interfaces and their applications in wearable and implantable systems. Microelectron. J. 2009, 40, 1313–1321.

82. Bulling, A.; Ward, J.A.; Gellersen, H.; Tröster, G. Eye movement analysis for activity recognition using electrooculography. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 741–753.

83. Cruz, A.; Garcia, D.; Pires, G.; Nunes, U. Facial Expression Recognition based on EOG toward Emotion Detection for Human-Robot Interaction. In Proceedings of the Biosignals, Lisbon, Portugal, 12–15 January 2015; pp. 31–37.

84. Lin, C.-T.; Liao, L.-D.; Liu, Y.-H.; Wang, I.-J.; Lin, B.-S.; Chang, J.-Y. Novel dry polymer foam electrodes for long-term EEG measurement. IEEE Trans. Biomed. Eng. 2010, 58, 1200–1207.

85. Searle, A.; Kirkup, L. A direct comparison of wet, dry and insulating bioelectric recording electrodes. Physiol. Meas. 2000, 21, 271.

86. Meziane, N.; Webster, J.; Attari, M.; Nimunkar, A. Dry electrodes for electrocardiography. Physiol. Meas. 2013, 34, R47.

87. Marmor, M.F.; Brigell, M.G.; McCulloch, D.L.; Westall, C.A.; Bach, M. ISCEV standard for clinical electro-oculography (2010 update). Doc. Ophthalmol. 2011, 122, 1–7.

88. Tobjörk, D.; Österbacka, R. Paper electronics. Adv. Mater. 2011, 23, 1935–1961.

89. Vehkaoja, A.T.; Verho, J.A.; Puurtinen, M.M.; Nojd, N.M.; Lekkala, J.O.; Hyttinen, J.A. Wireless head cap for EOG and facial EMG measurements. In Proceedings of the 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, Shanghai, China, 1–4 September 2005; pp. 5865–5868.

90. Eskandarian, L.; Toossi, A.; Nassif, F.; Golmohammadi Rostami, S.; Ni, S.; Mahnam, A.; Alizadeh Meghrazi, M.; Takarada, W.; Kikutani, T.; Naguib, H.E. 3D-Knit Dry Electrodes using Conductive Elastomeric Fibers for Long-Term Continuous Electrophysiological Monitoring. Adv. Mater. Technol. 2022, 7, 2101572.

91. Calvert, P. Inkjet printing for materials and devices. Chem. Mater. 2001, 13, 3299–3305.

92. Bollström, R.; Määttänen, A.; Tobjörk, D.; Ihalainen, P.; Kaihovirta, N.; Österbacka, R.; Peltonen, J.; Toivakka, M. A multilayer coated fiber-based substrate suitable for printed functionality. Org. Electron. 2009, 10, 1020–1023.

93. Kim, D.H.; Kim, Y.S.; Wu, J.; Liu, Z.; Song, J.; Kim, H.S.; Huang, Y.Y.; Hwang, K.C.; Rogers, J.A. Ultrathin silicon circuits with strain-isolation layers and mesh layouts for high-performance electronics on fabric, vinyl, leather, and paper. Adv. Mater. 2009, 21, 3703–3707.

- Hyun, W.J.; Secor, E.B.; Hersam, M.C.; Frisbie, C.D.; Francis, L.F. High-resolution patterning of graphene by screen printing with a silicon stencil for highly flexible printed electronics. Adv. Mater. 2015, 27, 109–115. [Google Scholar] [CrossRef]

- Golparvar, A.; Ozturk, O.; Yapici, M.K. Gel-Free Wearable Electroencephalography (EEG) with Soft Graphene Textiles. In Proceedings of the 2021 IEEE Sensors, Online, 31 October–4 November 2021; pp. 1–4. [Google Scholar]

- Golparvar, A.J.; Yapici, M.K. Graphene-coated wearable textiles for EOG-based human-computer interaction. In Proceedings of the 2018 IEEE 15th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Las Vegas, NV, USA, 4–7 March 2018; pp. 189–192. [Google Scholar]

- Wang, X.; Xiao, Y.; Deng, F.; Chen, Y.; Zhang, H. Eye-Movement-Controlled Wheelchair Based on Flexible Hydrogel Biosensor and WT-SVM. Biosensors 2021, 11, 198. [Google Scholar] [CrossRef]

- Wang, Z.; Chen, L.; Chen, Y.; Liu, P.; Duan, H.; Cheng, P. 3D printed ultrastretchable, hyper-antifreezing conductive hydrogel for sensitive motion and electrophysiological signal monitoring. Research 2020, 2020, 1–11. [Google Scholar] [CrossRef] [PubMed]

- Sadri, B.; Goswami, D.; Martinez, R.V. Rapid fabrication of epidermal paper-based electronic devices using razor printing. Micromachines 2018, 9, 420. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Peng, H.-L.; Liu, J.-Q.; Dong, Y.-Z.; Yang, B.; Chen, X.; Yang, C.-S. Parylene-based flexible dry electrode for bioptential recording. Sens. Actuators B Chem. 2016, 231, 1–11. [Google Scholar] [CrossRef]

- Huang, J.; Zhu, H.; Chen, Y.; Preston, C.; Rohrbach, K.; Cumings, J.; Hu, L. Highly transparent and flexible nanopaper transistors. ACS Nano 2013, 7, 2106–2113. [Google Scholar] [CrossRef] [PubMed]

- Blumenthal, T.; Fratello, V.; Nino, G.; Ritala, K. Aerosol Jet® Printing Onto 3D and Flexible Substrates. Quest Integr. Inc. 2017. Available online: http://www.qi2.com/wp-content/uploads/2016/12/TP-460-Aerosol-Jet-Printing-onto-3D-and-Flexible-Substrates.pdf (accessed on 19 October 2022).

- Saengchairat, N.; Tran, T.; Chua, C.-K. A review: Additive manufacturing for active electronic components. Virtual Phys. Prototyp. 2017, 12, 31–46. [Google Scholar] [CrossRef]

- Beach, C.; Karim, N.; Casson, A.J. A Graphene-Based Sleep Mask for Comfortable Wearable Eye Tracking. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 6693–6696. [Google Scholar]

- Paul, G.M.; Cao, F.; Torah, R.; Yang, K.; Beeby, S.; Tudor, J. A smart textile based facial EMG and EOG computer interface. IEEE Sens. J. 2013, 14, 393–400. [Google Scholar] [CrossRef]

- Paul, G.; Torah, R.; Beeby, S.; Tudor, J. The development of screen printed conductive networks on textiles for biopotential monitoring applications. Sens. Actuators A Phys. 2014, 206, 35–41. [Google Scholar] [CrossRef]

- Peng, H.-L.; Sun, Y.-l.; Bi, C.; Li, Q.-F. Development of a flexible dry electrode based MXene with low contact impedance for biopotential recording. Measurement 2022, 190, 110782. [Google Scholar] [CrossRef]

- Cheng, X.; Bao, C.; Dong, W. Soft dry electroophthalmogram electrodes for human machine interaction. Biomed. Microdevices 2019, 21, 1–11. [Google Scholar] [CrossRef]

- Tian, L.; Zimmerman, B.; Akhtar, A.; Yu, K.J.; Moore, M.; Wu, J.; Larsen, R.J.; Lee, J.W.; Li, J.; Liu, Y. Large-area MRI-compatible epidermal electronic interfaces for prosthetic control and cognitive monitoring. Nat. Biomed. Eng. 2019, 3, 194–205. [Google Scholar] [CrossRef]

- Park, J.; Choi, S.; Janardhan, A.H.; Lee, S.-Y.; Raut, S.; Soares, J.; Shin, K.; Yang, S.; Lee, C.; Kang, K.-W. Electromechanical cardioplasty using a wrapped elasto-conductive epicardial mesh. Sci. Transl. Med. 2016, 8, 344ra86. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Norton, J.J.; Lee, D.S.; Lee, J.W.; Lee, W.; Kwon, O.; Won, P.; Jung, S.-Y.; Cheng, H.; Jeong, J.-W.; Akce, A. Soft, curved electrode systems capable of integration on the auricle as a persistent brain–computer interface. Proc. Natl. Acad. Sci. USA 2015, 112, 3920–3925. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Bergmann, J.; McGregor, A. Body-worn sensor design: What do patients and clinicians want? Ann. Biomed. Eng. 2011, 39, 2299–2312. [Google Scholar] [CrossRef] [PubMed]

- Preece, S.J.; Goulermas, J.Y.; Kenney, L.P.; Howard, D.; Meijer, K.; Crompton, R. Activity identification using body-mounted sensors—A review of classification techniques. Physiol. Meas. 2009, 30, R1. [Google Scholar] [CrossRef] [PubMed]

- Kanoh, S.; Ichi-nohe, S.; Shioya, S.; Inoue, K.; Kawashima, R. Development of an eyewear to measure eye and body movements. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 2267–2270. [Google Scholar]

- Desai, M.; Pratt, L.A.; Lentzner, H.R.; Robinson, K.N. Trends in vision and hearing among older Americans. Aging Trends 2001, 1–8. [Google Scholar] [CrossRef] [Green Version]

- Bulling, A.; Roggen, D.; Tröster, G. Wearable EOG goggles: Eye-based interaction in everyday environments. In CHI’09 Extended Abstracts on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 2009; pp. 3259–3264. [Google Scholar]

- Kosmyna, N. AttentivU: A Wearable Pair of EEG and EOG Glasses for Real-Time Physiological Processing (Conference Presentation). In Proceedings of the Optical Architectures for Displays and Sensing in Augmented, Virtual, and Mixed Reality (AR, VR, MR), San Francisco, CA, USA, 2 February 2020; p. 113101P. [Google Scholar]

- Bulling, A.; Roggen, D.; Tröster, G. Wearable EOG goggles: Seamless sensing and context-awareness in everyday environments. J. Ambient Intell. Smart Environ. 2009, 1, 157–171. [Google Scholar] [CrossRef] [Green Version]

- Dhuliawala, M.; Lee, J.; Shimizu, J.; Bulling, A.; Kunze, K.; Starner, T.; Woo, W. Smooth eye movement interaction using EOG glasses. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; pp. 307–311. [Google Scholar]

- Inzelberg, L.; Pur, M.D.; Schlisske, S.; Rödlmeier, T.; Granoviter, O.; Rand, D.; Steinberg, S.; Hernandez-Sosa, G.; Hanein, Y. Printed facial skin electrodes as sensors of emotional affect. Flex. Print. Electron. 2018, 3, 045001. [Google Scholar] [CrossRef]

- Miettinen, T.; Myllymaa, K.; Hukkanen, T.; Töyräs, J.; Sipilä, K.; Myllymaa, S. Home polysomnography reveals a first-night effect in patients with low sleep bruxism activity. J. Clin. Sleep Med. 2018, 14, 1377–1386. [Google Scholar] [CrossRef]

- Simar, C.; Petieau, M.; Cebolla, A.; Leroy, A.; Bontempi, G.; Cheron, G. EEG-based brain-computer interface for alpha speed control of a small robot using the MUSE headband. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–4. [Google Scholar] [CrossRef]

- Balconi, M.; Fronda, G.; Venturella, I.; Crivelli, D. Conscious, pre-conscious and unconscious mechanisms in emotional behaviour. Some applications to the mindfulness approach with wearable devices. Appl. Sci. 2017, 7, 1280. [Google Scholar] [CrossRef] [Green Version]

- Asif, A.; Majid, M.; Anwar, S.M. Human stress classification using EEG signals in response to music tracks. Comput. Biol. Med. 2019, 107, 182–196. [Google Scholar] [CrossRef]

- Merino, M.; Rivera, O.; Gómez, I.; Molina, A.; Dorronzoro, E. A method of EOG signal processing to detect the direction of eye movements. In Proceedings of the 2010 First International Conference on Sensor Device Technologies and Applications, Washington, DC, USA, 18–25 July 2010; pp. 100–105. [Google Scholar]

- Wang, Y.; Lv, Z.; Zheng, Y. Automatic emotion perception using eye movement information for E-healthcare systems. Sensors 2018, 18, 2826. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Soundariya, R.; Renuga, R. Eye movement based emotion recognition using electrooculography. In Proceedings of the 2017 Innovations in Power and Advanced Computing Technologies (i-PACT), Vellore, India, 21–22 April 2017; pp. 1–5. [Google Scholar]

- Kose, M.R.; Ahirwal, M.K.; Kumar, A. A new approach for emotions recognition through EOG and EMG signals. Signal Image Video Process. 2021, 15, 1863–1871. [Google Scholar] [CrossRef]

- Sánchez-Ferrer, M.L.; Grima-Murcia, M.D.; Sánchez-Ferrer, F.; Hernández-Peñalver, A.I.; Fernández-Jover, E.; Del Campo, F.S. Use of eye tracking as an innovative instructional method in surgical human anatomy. J. Surg. Educ. 2017, 74, 668–673. [Google Scholar] [CrossRef]

- Vansteenkiste, P.; Cardon, G.; Philippaerts, R.; Lenoir, M. Measuring dwell time percentage from head-mounted eye-tracking data–comparison of a frame-by-frame and a fixation-by-fixation analysis. Ergonomics 2015, 58, 712–721. [Google Scholar] [CrossRef] [PubMed]

- Mohanto, B.; Islam, A.T.; Gobbetti, E.; Staadt, O. An integrative view of foveated rendering. Comput. Graph. 2022, 102, 474–501. [Google Scholar] [CrossRef]

- Holmqvist, K.; Örbom, S.L.; Zemblys, R. Small head movements increase and colour noise in data from five video-based P–CR eye trackers. Behav. Res. Methods 2022, 54, 845–863. [Google Scholar] [CrossRef] [PubMed]

- Lev, A.; Braw, Y.; Elbaum, T.; Wagner, M.; Rassovsky, Y. Eye tracking during a continuous performance test: Utility for assessing ADHD patients. J. Atten. Disord. 2022, 26, 245–255. [Google Scholar] [CrossRef] [PubMed]

- Wu, M.; Louw, T.; Lahijanian, M.; Ruan, W.; Huang, X.; Merat, N.; Kwiatkowska, M. Gaze-based intention anticipation over driving manoeuvres in semi-autonomous vehicles. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 4–8 November 2019; pp. 6210–6216. [Google Scholar]

- Taba, I.B. Improving Eye-Gaze Tracking Accuracy through Personalized Calibration of a User’s Aspherical Corneal Model; University of British Columbia: Vancouver, BC, Canada, 2012. [Google Scholar]

- Vázquez Romaguera, T.; Vázquez Romaguera, L.; Castro Piñol, D.; Vázquez Seisdedos, C.R. Pupil Center Detection Approaches: A Comparative Analysis. Comput. Y Sist. 2021, 25, 67–81. [Google Scholar] [CrossRef]

- Schwiegerling, J.T. Eye axes and their relevance to alignment of corneal refractive procedures. J. Refract. Surg. 2013, 29, 515–516. [Google Scholar] [CrossRef] [Green Version]

- Duchowski, A.T. Eye Tracking Methodology: Theory and Practice; Springer: Berlin/Heidelberg, Germany, 2017. [Google Scholar]

- Shehu, I.S.; Wang, Y.; Athuman, A.M.; Fu, X. Remote Eye Gaze Tracking Research: A Comparative Evaluation on Past and Recent Progress. Electronics 2021, 10, 3165. [Google Scholar] [CrossRef]

- Mantiuk, R. Gaze-dependent tone mapping for HDR video. In High Dynamic Range Video; Elsevier: Amsterdam, The Netherlands, 2017; pp. 189–199. [Google Scholar]

- Schall, A.; Bergstrom, J.R. Introduction to eye tracking. In Eye Tracking in User Experience Design; Elsevier: Amsterdam, The Netherlands, 2014; pp. 3–26. [Google Scholar]

- Carter, B.T.; Luke, S.G. Best practices in eye tracking research. Int. J. Psychophysiol. 2020, 155, 49–62. [Google Scholar] [CrossRef] [PubMed]

- Noland, R.B.; Weiner, M.D.; Gao, D.; Cook, M.P.; Nelessen, A. Eye-tracking technology, visual preference surveys, and urban design: Preliminary evidence of an effective methodology. J. Urban. Int. Res. Placemaking Urban Sustain. 2017, 10, 98–110. [Google Scholar] [CrossRef]

- Wang, M.; Wang, T.; Luo, Y.; He, K.; Pan, L.; Li, Z.; Cui, Z.; Liu, Z.; Tu, J.; Chen, X. Fusing stretchable sensing technology with machine learning for human–machine interfaces. Adv. Funct. Mater. 2021, 31, 2008807. [Google Scholar] [CrossRef]

- Donuk, K.; Ari, A.; Hanbay, D. A CNN based real-time eye tracker for web mining applications. Multimed. Tools Appl. 2022, 81, 39103–39120. [Google Scholar] [CrossRef]

- Hosp, B.; Eivazi, S.; Maurer, M.; Fuhl, W.; Geisler, D.; Kasneci, E. RemoteEye: An open-source high-speed remote eye tracker. Behav. Res. Methods 2020, 52, 1387–1401. [Google Scholar] [CrossRef]

- Petersch, B.; Dierkes, K. Gaze-angle dependency of pupil-size measurements in head-mounted eye tracking. Behav. Res. Methods 2022, 54, 763–779. [Google Scholar] [CrossRef]

- Larumbe-Bergera, A.; Garde, G.; Porta, S.; Cabeza, R.; Villanueva, A. Accurate pupil center detection in off-the-shelf eye tracking systems using convolutional neural networks. Sensors 2021, 21, 6847. [Google Scholar] [CrossRef]

- Hess, E.H.; Polt, J.M. Pupil size as related to interest value of visual stimuli. Science 1960, 132, 349–350. [Google Scholar] [CrossRef]

- Punde, P.A.; Jadhav, M.E.; Manza, R.R. A study of eye tracking technology and its applications. In Proceedings of the 2017 1st International Conference on Intelligent Systems and Information Management (ICISIM), Aurangabad, India, 5–6 October 2017; pp. 86–90. [Google Scholar]

- Rusnak, M.A.; Rabiega, M. The Potential of Using an Eye Tracker in Architectural Education: Three Perspectives for Ordinary Users, Students and Lecturers. Buildings 2021, 11, 245. [Google Scholar] [CrossRef]

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Publishing: Arlington, VA, USA, 2013. [Google Scholar]

- Constantino, J.N.; Kennon-McGill, S.; Weichselbaum, C.; Marrus, N.; Haider, A.; Glowinski, A.L.; Gillespie, S.; Klaiman, C.; Klin, A.; Jones, W. Infant viewing of social scenes is under genetic control and is atypical in autism. Nature 2017, 547, 340–344. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Khan, M.Q.; Lee, S. Gaze and eye tracking: Techniques and applications in ADAS. Sensors 2019, 19, 5540. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Cazzato, D.; Leo, M.; Distante, C. An investigation on the feasibility of uncalibrated and unconstrained gaze tracking for human assistive applications by using head pose estimation. Sensors 2014, 14, 8363–8379. [Google Scholar] [CrossRef] [PubMed]

- Hessels, R.S.; Niehorster, D.C.; Nyström, M.; Andersson, R.; Hooge, I.T. Is the eye-movement field confused about fixations and saccades? A survey among 124 researchers. R. Soc. Open Sci. 2018, 5, 180502. [Google Scholar] [CrossRef] [Green Version]

- González-Mena, G.; Del-Valle-Soto, C.; Corona, V.; Rodríguez, J. Neuromarketing in the Digital Age: The Direct Relation between Facial Expressions and Website Design. Appl. Sci. 2022, 12, 8186. [Google Scholar] [CrossRef]

- Dong, W.; Liao, H.; Roth, R.E.; Wang, S. Eye tracking to explore the potential of enhanced imagery basemaps in web mapping. Cartogr. J. 2014, 51, 313–329. [Google Scholar] [CrossRef]

- Voßkühler, A.; Nordmeier, V.; Kuchinke, L.; Jacobs, A.M. OGAMA (Open Gaze and Mouse Analyzer): Open-source software designed to analyze eye and mouse movements in slideshow study designs. Behav. Res. Methods 2008, 40, 1150–1162. [Google Scholar] [CrossRef] [Green Version]

- Niehorster, D.C.; Santini, T.; Hessels, R.S.; Hooge, I.T.; Kasneci, E.; Nyström, M. The impact of slippage on the data quality of head-worn eye trackers. Behav. Res. Methods 2020, 52, 1140–1160. [Google Scholar] [CrossRef] [Green Version]

- Hu, N. Depth Estimation Inside 3D Maps Based on Eye-Tracker. 2020. Available online: https://mediatum.ub.tum.de/doc/1615800/1615800.pdf (accessed on 19 October 2022).

- Takahashi, R.; Suzuki, H.; Chew, J.Y.; Ohtake, Y.; Nagai, Y.; Ohtomi, K. A system for three-dimensional gaze fixation analysis using eye tracking glasses. J. Comput. Des. Eng. 2018, 5, 449–457. [Google Scholar] [CrossRef]

- Špakov, O.; Miniotas, D. Visualization of eye gaze data using heat maps. Elektron. Ir Elektrotechnika 2007, 74, 55–58. [Google Scholar]

- Maurus, M.; Hammer, J.H.; Beyerer, J. Realistic heatmap visualization for interactive analysis of 3D gaze data. In Proceedings of the Symposium on Eye Tracking Research and Applications, Safety Harbor, FL, USA, 26–28 March 2014; pp. 295–298. [Google Scholar]

- Pfeiffer, T.; Memili, C. Model-based real-time visualization of realistic three-dimensional heat maps for mobile eye tracking and eye tracking in virtual reality. In Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications, Charleston, SC, USA, 14–17 March 2016; pp. 95–102. [Google Scholar]

- Kar, A.; Corcoran, P. Performance evaluation strategies for eye gaze estimation systems with quantitative metrics and visualizations. Sensors 2018, 18, 3151. [Google Scholar] [CrossRef] [Green Version]

- Munz, T.; Chuang, L.; Pannasch, S.; Weiskopf, D. VisME: Visual microsaccades explorer. J. Eye Mov. Res. 2019, 12. [Google Scholar] [CrossRef] [PubMed]

- Reingold, E.M. Eye tracking research and technology: Towards objective measurement of data quality. Vis. Cogn. 2014, 22, 635–652. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Godijn, R.; Theeuwes, J. Programming of endogenous and exogenous saccades: Evidence for a competitive integration model. J. Exp. Psychol. Hum. Percept. Perform. 2002, 28, 1039. [Google Scholar] [CrossRef]

- Ha, K.; Chen, Z.; Hu, W.; Richter, W.; Pillai, P.; Satyanarayanan, M. Towards wearable cognitive assistance. In Proceedings of the 12th Annual International Conference on Mobile Systems, Applications, and Services, Bretton Woods, NH, USA, 16–19 June 2014; pp. 68–81. [Google Scholar]

- Larrazabal, A.J.; Cena, C.G.; Martínez, C.E. Video-oculography eye tracking towards clinical applications: A review. Comput. Biol. Med. 2019, 108, 57–66. [Google Scholar] [CrossRef]

- Mahanama, B.; Jayawardana, Y.; Rengarajan, S.; Jayawardena, G.; Chukoskie, L.; Snider, J.; Jayarathna, S. Eye Movement and Pupil Measures: A Review. Front. Comput. Sci. 2022, 3, 733531. [Google Scholar] [CrossRef]

- Hessels, R.S.; Benjamins, J.S.; Niehorster, D.C.; van Doorn, A.J.; Koenderink, J.J.; Holleman, G.A.; de Kloe, Y.J.; Valtakari, N.V.; van Hal, S.; Hooge, I.T. Eye contact avoidance in crowds: A large wearable eye-tracking study. Atten. Percept. Psychophys. 2022, 84, 2623–2640. [Google Scholar] [CrossRef]

- Li, T.; Zhou, X. Battery-free eye tracker on glasses. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, New Delhi, India, 29 October–2 November 2018; pp. 67–82. [Google Scholar]

- Ye, Z.; Li, Y.; Fathi, A.; Han, Y.; Rozga, A.; Abowd, G.D.; Rehg, J.M. Detecting eye contact using wearable eye-tracking glasses. In Proceedings of the 2012 ACM Conference on Ubiquitous Computing, Pittsburgh, PA, USA, 5–8 September 2012; pp. 699–704. [Google Scholar]

- Aronson, R.M.; Santini, T.; Kübler, T.C.; Kasneci, E.; Srinivasa, S.; Admoni, H. Eye-hand behavior in human-robot shared manipulation. In Proceedings of the 2018 13th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Chicago, IL, USA, 5–8 March 2018; pp. 4–13. [Google Scholar]

- Callahan-Flintoft, C.; Barentine, C.; Touryan, J.; Ries, A.J. A Case for Studying Naturalistic Eye and Head Movements in Virtual Environments. Front. Psychol. 2021, 12, 650693. [Google Scholar] [CrossRef]

- Radianti, J.; Majchrzak, T.A.; Fromm, J.; Wohlgenannt, I. A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Comput. Educ. 2020, 147, 103778. [Google Scholar] [CrossRef]

- Arefin, M.S.; Swan II, J.E.; Cohen Hoffing, R.A.; Thurman, S.M. Estimating Perceptual Depth Changes with Eye Vergence and Interpupillary Distance using an Eye Tracker in Virtual Reality. In Proceedings of the 2022 Symposium on Eye Tracking Research and Applications, Seattle, WA, USA, 8–11 June 2022; pp. 1–7. [Google Scholar]

- Puig, M.S.; Romeo, A.; Supèr, H. Vergence eye movements during figure-ground perception. Conscious. Cogn. 2021, 92, 103138. [Google Scholar] [CrossRef]

- Hooge, I.T.; Hessels, R.S.; Nyström, M. Do pupil-based binocular video eye trackers reliably measure vergence? Vis. Res. 2019, 156, 1–9. [Google Scholar] [CrossRef] [PubMed]

- Iwata, Y.; Handa, T.; Ishikawa, H. Objective measurement of nine gaze-directions using an eye-tracking device. J. Eye Mov. Res. 2020, 13. [Google Scholar] [CrossRef] [PubMed]

- Clay, V.; König, P.; Koenig, S. Eye tracking in virtual reality. J. Eye Mov. Res. 2019, 12. [Google Scholar] [CrossRef] [PubMed]

- Biedert, R.; Buscher, G.; Dengel, A. Gazing the Text for Fun and Profit. In Eye Gaze in Intelligent User Interfaces; Springer: Berlin/Heidelberg, Germany, 2013; pp. 137–160. [Google Scholar]

- Nakano, Y.I.; Conati, C.; Bader, T. Eye Gaze in Intelligent User Interfaces: Gaze-Based Analyses, Models and Applications; Springer: Berlin/Heidelberg, Germany, 2013. [Google Scholar]

- Hooge, I.T.; Niehorster, D.C.; Hessels, R.S.; Cleveland, D.; Nyström, M. The pupil-size artefact (PSA) across time, viewing direction, and different eye trackers. Behav. Res. Methods 2021, 53, 1986–2006. [Google Scholar] [CrossRef]

- Shishido, E.; Ogawa, S.; Miyata, S.; Yamamoto, M.; Inada, T.; Ozaki, N. Application of eye trackers for understanding mental disorders: Cases for schizophrenia and autism spectrum disorder. Neuropsychopharmacol. Rep. 2019, 39, 72–77. [Google Scholar] [CrossRef]

- Hung, J.C.; Wang, C.-C. The Influence of Cognitive Styles and Gender on Visual Behavior During Program Debugging: A Virtual Reality Eye Tracker Study. Hum.-Cent. Comput. Inf. Sci. 2021, 11, 1–21. [Google Scholar]

- Obaidellah, U.; Haek, M.A. Evaluating gender difference on algorithmic problems using eye-tracker. In Proceedings of the 2018 ACM Symposium on Eye Tracking Research & Applications, Warsaw, Poland, 14–17 June 2018; pp. 1–8. [Google Scholar]

- Yehezkel, O.; Belkin, M.; Wygnanski-Jaffe, T. Automated diagnosis and measurement of strabismus in children. Am. J. Ophthalmol. 2020, 213, 226–234. [Google Scholar] [CrossRef] [Green Version]

- Nobukawa, S.; Shirama, A.; Takahashi, T.; Takeda, T.; Ohta, H.; Kikuchi, M.; Iwanami, A.; Kato, N.; Toda, S. Identification of attention-deficit hyperactivity disorder based on the complexity and symmetricity of pupil diameter. Sci. Rep. 2021, 11, 1–14. [Google Scholar] [CrossRef]

- Ioannidou, F.; Hermens, F.; Hodgson, T.L. Mind your step: The effects of mobile phone use on gaze behavior in stair climbing. J. Technol. Behav. Sci. 2017, 2, 109–120. [Google Scholar] [CrossRef] [Green Version]

- Scalera, L.; Seriani, S.; Gallina, P.; Lentini, M.; Gasparetto, A. Human–robot interaction through eye tracking for artistic drawing. Robotics 2021, 10, 54. [Google Scholar] [CrossRef]

- Aoyama, T.; Takeno, S.; Takeuchi, M.; Hasegawa, Y. Head-mounted display-based microscopic imaging system with customizable field size and viewpoint. Sensors 2020, 20, 1967. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Mantiuk, R.; Kowalik, M.; Nowosielski, A.; Bazyluk, B. Do-it-yourself eye tracker: Low-cost pupil-based eye tracker for computer graphics applications. In Proceedings of the International Conference on Multimedia Modeling, Klagenfurt, Austria, 4–6 January 2012; pp. 115–125. [Google Scholar]

- Schneider, B.; Sharma, K.; Cuendet, S.; Zufferey, G.; Dillenbourg, P.; Pea, R. Leveraging mobile eye-trackers to capture joint visual attention in co-located collaborative learning groups. Int. J. Comput.-Support. Collab. Learn. 2018, 13, 241–261. [Google Scholar] [CrossRef]

- Hietanen, A.; Pieters, R.; Lanz, M.; Latokartano, J.; Kämäräinen, J.-K. AR-based interaction for human-robot collaborative manufacturing. Robot. Comput.-Integr. Manuf. 2020, 63, 101891. [Google Scholar] [CrossRef]

- Diaz-Piedra, C.; Sanchez-Carrion, J.M.; Rieiro, H.; Di Stasi, L.L. Gaze-based technology as a tool for surgical skills assessment and training in urology. Urology 2017, 107, 26–30. [Google Scholar] [CrossRef] [PubMed]

| Purpose | Target User | Signal | Data Processing | Refs. |
|---|---|---|---|---|
| Wheelchairs | Disabled people | EOG + EEG + EMG | Signal processing | [51] |
| | | EOG | LDA | [1] |
| | | EOG | Signal processing | [4] |
| | | EOG + EEG + EMG | Signal processing | [52] |
| Game controller | Anyone | EOG | DWT | [5] |
| | | EOG | SWT | [60] |
| | | EOG + EEG + EMG | SVM | [47] |
| | | EOG | Signal processing | [61] |
| Drone | | EOG | Signal processing | [11,59] |
| Virtual keyboard | | EOG | SVM | [38] |
| | | EOG + EEG + EMG | Signal processing | [51] |
| | | EOG + EEG | SVM | [34] |
| | | EOG | Signal processing | [62] |
| ADHD | Children | EOG | Signal processing | [64] |
| | | EOG | Signal processing | [65] |
| | | EOG | WT | [66] |
| Emotion Recognition | Anyone | EOG | SVM | [127] |
| | | EOG + EMG | SVM | [128] |
| | | EOG + Eye image | STFT | [126] |
| Sleepiness | Driver | EOG + EEG | GAN + LSTM | [63] |
| Drowsiness | Anyone | EOG | Signal processing | [55] |
| | | EOG + EEG | Signal processing | [58] |
| Sleep monitoring | | EOG + EEG + EMG | Signal processing | [41] |
| | | EOG | Linear classifier | [40] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ban, S.; Lee, Y.J.; Kim, K.R.; Kim, J.-H.; Yeo, W.-H. Advances in Materials, Sensors, and Integrated Systems for Monitoring Eye Movements. Biosensors 2022, 12, 1039. https://doi.org/10.3390/bios12111039
