Conditional Performance Evaluation: Using Wildfire Observations for Systematic Fire Simulator Development

Abstract

1. Introduction

2. Methods

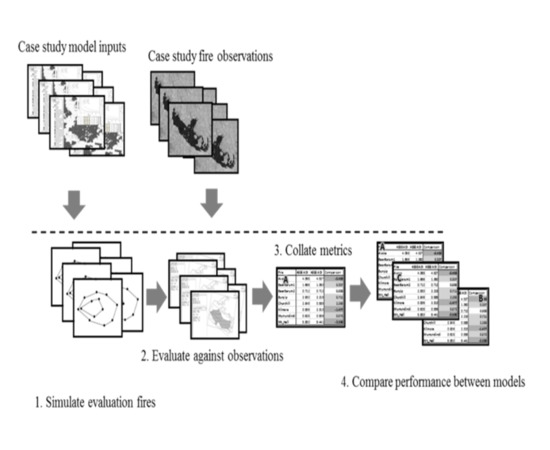

2.1. Overall Procedure

2.2. The Evaluation Set

- Observations of fire perimeters in the form of time-stamped polygons derived from verifiable sources such as infra-red linescans [59] or official reconstructions;

- Vegetation maps from which fuel classifications were derived using the relevant lookup table for each state;

- Fire history maps indicating previously burned areas (for the moderation of fuel loads where recent fires had occurred [60]);

- High-resolution (30 m) digital elevation models (to derive terrain).

2.3. Test Models

2.3.1. Evaluation Metrics

2.3.2. Evaluation Process

3. Results

4. Discussion

- Isochrones depicting the progression of the fire as a function of time;

- Spatial datasets describing the terrain, vegetation/fuel properties and recent fire/disturbance history;

- Weather observations from near the fire; in particular, temperature, relative humidity, wind speed and direction;

- Information about the state of landscape dryness, such as direct measurements or dryness indices calculated from prior observed weather;

- Details about suppression activities, including location, timing, methods used and effectiveness.

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A

References

- Krawchuk, M.A.; Moritz, M.A. Constraints on global fire activity vary across a resource gradient. Ecology 2011, 92, 121–132.

- Bradstock, R.A. A biogeographic model of fire regimes in Australia: Current and future implications. Glob. Ecol. Biogeogr. 2010, 19, 145–158.

- Gill, A.M.; Stephens, S.L.; Cary, G.J. The worldwide “wildfire” problem. Ecol. Appl. 2013, 23, 438–454.

- McLoughlin, D. A framework for integrated emergency management. Public Adm. Rev. 1985, 45, 165–172.

- Noble, I.R.; Gill, A.M.; Bary, G.A.V. McArthur’s fire-danger meters expressed as equations. Austral Ecol. 1980, 5, 201–203.

- Rothermel, R.C. How to Predict the Spread and Intensity of Forest and Range Fires; Forest Service, U.S. Department of Agriculture: Boise, ID, USA, 1983.

- Sullivan, A.L. Wildland surface fire spread modelling, 1990–2007. 3: Simulation and mathematical analogue models. Int. J. Wildland Fire 2009, 18, 387–403.

- Sullivan, A.L. Wildland surface fire spread modelling, 1990–2007. 1: Physical and quasi-physical models. Int. J. Wildland Fire 2009, 18, 349–368.

- Finney, M.A. FARSITE: Fire Area Simulator—Model Development and Evaluation; Rocky Mountain Research Station, Forest Service, U.S. Department of Agriculture: Missoula, MT, USA, 2004.

- Tolhurst, K.G.; Shields, B.; Chong, D. PHOENIX: Development and application of a bushfire risk management tool. Aust. J. Emerg. Manag. 2008, 23, 47–54.

- Tymstra, C.; Bryce, R.W.; Wotton, B.M.; Taylor, S.W.; Armitage, O.B. Development and Structure of Prometheus: The Canadian Wildland Fire Growth Simulation Model; Canadian Forest Service: Edmonton, AB, Canada, 2010.

- Tolhurst, K.G.; Duff, T.J.; Chong, D.M. From “Wildland-Urban Interface” to “Wildfire Interface Zone” using dynamic fire modelling. In Proceedings of the 20th International Congress on Modelling and Simulation, Adelaide, Australia, 1–6 December 2013; Piantadosi, J., Anderssen, R.S., Boland, J., Eds.; Modelling and Simulation Society of Australia and New Zealand: Adelaide, Australia, 2013; pp. 290–296.

- Finney, M.A.; Sapsis, D.B.; Bahro, B. Use of FARSITE for Simulating Fire Suppression and Analyzing Fuel Treatment Economics. In Proceedings of the Conference on Fire in California Ecosystems: Integrating Ecology, Prevention and Management, San Diego, CA, USA, 17–20 November 1997; Sugihara, N.G., Morales, M.E., Morales, T.J., Eds.; Association for Fire Ecology: San Diego, CA, USA, 2002; pp. 121–136.

- Alcasena, F.; Salis, M.; Ager, A.; Castell, R.; Vega-García, C. Assessing wildland fire risk transmission to communities in Northern Spain. Forests 2017, 8, 30.

- Mallinis, G.; Mitsopoulos, I.; Beltran, E.; Goldammer, J. Assessing Wildfire Risk in Cultural Heritage Properties Using High Spatial and Temporal Resolution Satellite Imagery and Spatially Explicit Fire Simulations: The Case of Holy Mount Athos, Greece. Forests 2016, 7, 46.

- Ager, A.A.; Vaillant, N.M.; Finney, M.A.; Preisler, H.K. Analyzing wildfire exposure and source–sink relationships on a fire prone forest landscape. For. Ecol. Manag. 2012, 267, 271–283.

- Department of Environment and Primary Industries. Victorian Bushfire Risk Profiles: A Foundational Framework for Strategic Bushfire Risk Assessment; The State of Victoria Department of Environment and Primary Industries: East Melbourne, Australia, 2013.

- Duff, T.J.; Chong, D.M.; Taylor, P.; Tolhurst, K.G. Procrustes based metrics for spatial validation and calibration of two-dimensional perimeter spread models: A case study considering fire. Agric. For. Meteorol. 2012, 160, 110–117.

- Alexander, M.E.; Cruz, M.G. Are the applications of wildland fire behaviour models getting ahead of their evaluation again? Environ. Model. Softw. 2013, 41, 65–71.

- Sullivan, A.L. Wildland surface fire spread modelling, 1990–2007. 2: Empirical and quasi-empirical models. Int. J. Wildland Fire 2009, 18, 369–386.

- Johnston, P.; Kelso, J.; Milne, G.J. Efficient simulation of wildfire spread on an irregular grid. Int. J. Wildland Fire 2008, 17, 614–627.

- Rothermel, R.C.; Rinehart, G.C. Field Procedures for Verification and Adjustment of Fire Behaviour Predictions; Forest Service, U.S. Department of Agriculture: Ogden, UT, USA, 1983.

- Hoffman, C.M.; Canfield, J.; Linn, R.R.; Mell, W.; Sieg, C.H.; Pimont, F.; Ziegler, J. Evaluating crown fire rate of spread predictions from physics-based models. Fire Technol. 2016, 221–237.

- Anderson, W.R.; Cruz, M.G.; Fernandes, P.M.; McCaw, L.; Vega, J.A.; Bradstock, R.A.; Fogarty, L.; Gould, J.; McCarthy, G.; Marsden-Smedley, J.B.; et al. A generic, empirical-based model for predicting rate of fire spread in shrublands. Int. J. Wildland Fire 2015, 24, 443–460.

- Cheney, N.P.; Gould, J.S.; McCaw, W.L.; Anderson, W.R. Predicting fire behaviour in dry eucalypt forest in southern Australia. For. Ecol. Manag. 2012, 280, 120–131.

- Rothermel, R.C. A Mathematical Model for Predicting Fire Spread in Wildland Fuels; Forest Service, U.S. Department of Agriculture: Ogden, UT, USA, 1972.

- Cruz, M.G.; Alexander, M.E.; Sullivan, A.L. Mantras of wildland fire behaviour modelling: Facts or fallacies? Int. J. Wildland Fire 2017, 26, 973–981.

- Papadopoulos, G.D.; Pavlidou, F.N. A comparative review on wildfire simulators. IEEE Syst. J. 2011, 5, 233–243.

- Thaxton, J.M.; Platt, W.J. Small-scale fuel variation alters fire intensity and shrub abundance in a pine savanna. Ecology 2006, 87, 1331–1337.

- Riccardi, C.L.; Ottmar, R.D.; Sandberg, D.V.; Andreu, A.; Elman, E.; Kopper, K.; Long, J. The fuelbed: A key element of the Fuel Characteristic Classification System. Can. J. For. Res. 2007, 37, 2394–2412.

- Long, M. A climatology of extreme fire weather days in Victoria. Aust. Meteorol. Mag. 2006, 55, 3–18.

- Viegas, D.X. Fire line rotation as a mechanism for fire spread on a uniform slope. Int. J. Wildland Fire 2002, 11, 11–23.

- Sharples, J.J.; McRae, R.H.D.; Wilkes, S.R. Wind–terrain effects on the propagation of wildfires in rugged terrain: Fire channelling. Int. J. Wildland Fire 2012, 21, 282–296.

- Sharples, J.J.; Mills, G.A.; McRae, R.H.D.; Weber, R.O. Foehn-like winds and elevated fire danger conditions in southeastern Australia. J. Appl. Meteorol. Clim. 2010, 49, 1067–1095.

- Haines, D.A. A lower atmospheric severity index for wildland fires. Natl. Weather Dig. 1988, 13, 23–27.

- McRae, R.H.D.; Sharples, J.J.; Wilkes, S.R.; Walker, A. An Australian pyro-tornadogenesis event. Nat. Hazards 2013, 1801–1811.

- Sun, R.; Krueger, S.K.; Jenkins, M.A.; Zulauf, M.A.; Charney, J.J. The importance of fire–atmosphere coupling and boundary-layer turbulence to wildfire spread. Int. J. Wildland Fire 2009, 18, 50–60.

- Viegas, D.; Simeoni, A. Eruptive behaviour of forest fires. Fire Technol. 2011, 47, 303–320.

- Cruz, M.G.; Sullivan, A.L.; Gould, J.S.; Sims, N.C.; Bannister, A.J.; Hollis, J.J.; Hurley, R.J. Anatomy of a catastrophic wildfire: The Black Saturday Kilmore East fire in Victoria, Australia. For. Ecol. Manag. 2012, 269–285.

- Alexander, M.E.; Cruz, M.G. Evaluating a model for predicting active crown fire rate of spread using wildfire observations. Can. J. For. Res. 2006, 36, 3015–3028.

- Jakeman, A.J.; Letcher, R.A.; Norton, J.P. Ten iterative steps in development and evaluation of environmental models. Environ. Model. Softw. 2006, 21, 602–614.

- Bennett, N.D.; Croke, B.F.W.; Guariso, G.; Guillaume, J.H.A.; Hamilton, S.H.; Jakeman, A.J.; Marsili-Libelli, S.; Newham, L.T.H.; Norton, J.P.; Perrin, C.; et al. Characterising performance of environmental models. Environ. Model. Softw. 2013, 40, 1–20.

- Stratton, R.D. Guidance on Spatial Wildland Fire Analysis: Models, Tools, and Techniques; RMRS-GTR-183; Rocky Mountain Research Station, Forest Service, USDA: Fort Collins, CO, USA, 2006; p. 15.

- Perry, G.L.W. Current approaches to modelling the spread of wildland fire: A review. Prog. Phys. Geog. 1998, 22, 222–245.

- Sá, A.C.L.; Benali, A.; Fernandes, P.M.; Pinto, R.M.S.; Trigo, R.M.; Salis, M.; Russo, A.; Jerez, S.; Soares, P.M.M.; Schroeder, W.; et al. Evaluating fire growth simulations using satellite active fire data. Remote Sens. Environ. 2017, 190, 302–317.

- Feunekes, U. Error Analysis in Fire Simulation Models. M.Sc. Thesis, University of New Brunswick, Fredericton, NB, Canada, 1991.

- Cui, W.; Perera, A.H. Quantifying spatio-temporal errors in forest fire spread modelling explicitly. J. Environ. Inform. 2010, 16, 19–26.

- Green, D.G.; Gill, A.M.; Noble, I.R. Fire shapes and the adequacy of fire-spread models. Ecol. Model. 1983, 20, 33–45.

- Fujioka, F.M. A new method for the analysis of fire spread modeling errors. Int. J. Wildland Fire 2002, 11, 193–203.

- Duff, T.J.; Chong, D.M.; Tolhurst, K.G. Quantifying spatio-temporal differences between fire shapes: Estimating fire travel paths for the improvement of dynamic spread models. Environ. Model. Softw. 2013.

- Filippi, J.-B.; Mallet, V.; Nader, B. Representation and evaluation of wildfire propagation simulations. Int. J. Wildland Fire 2014, 23, 46–57.

- Peltier, L.J.; Haupt, S.E.; Wyngaard, J.C.; Stauffer, D.R.; Deng, A.; Lee, J.A.; Long, K.J.; Annunzio, A.J. Parameterizing mesoscale wind uncertainty for dispersion modeling. J. Appl. Meteorol. Clim. 2010, 49, 1604–1614.

- Valero, M.M.; Rios, O.; Mata, C.; Pastor, E.; Planas, E. An integrated approach for tactical monitoring and data-driven spread forecasting of wildfires. Fire Saf. J. 2017.

- Zhang, C.; Rochoux, M.; Tang, W.; Gollner, M.; Filippi, J.-B.; Trouvé, A. Evaluation of a data-driven wildland fire spread forecast model with spatially-distributed parameter estimation in simulations of the FireFlux I field-scale experiment. Fire Saf. J. 2017, 91, 758–767.

- Rochoux, M.C.; Delmotte, B.; Cuenot, B.; Ricci, S.; Trouvé, A. Regional-scale simulations of wildland fire spread informed by real-time flame front observations. Proc. Combust. Inst. 2013, 34, 2641–2647.

- Kelso, J.K.; Mellor, D.; Murphy, M.E.; Milne, G.J. Techniques for evaluating wildfire simulators via the simulation of historical fires using the Australis simulator. Int. J. Wildland Fire 2015, 24, 784–797.

- Filippi, J.B.; Mallet, V.; Nader, B. Evaluation of forest fire models on a large observation database. Nat. Hazards Earth Syst. Sci. 2014, 14, 3077–3092.

- Faggian, N.; Bridge, C.; Fox-Hughes, P.; Jolly, C.; Jacobs, H.; Ebert, E.E.; Bally, J. Final Report: An Evaluation of Fire Spread Simulators Used in Australia; Australian Bureau of Meteorology: Melbourne, Australia, 2017.

- Billing, P. Operational Aspects of the Infrared Line Scanner; Department of Conservation and Environment: Victoria, Australia, 1986.

- Paterson, G.; Chong, D. Implementing the Phoenix Fire Spread Model for Operational Use. In Proceedings of the Surveying and Spatial Sciences Biennial Conference 2011, Wellington, New Zealand, 21–25 November 2011; New Zealand Institute of Surveyors and the Surveying and Spatial Sciences Institute: Wellington, New Zealand, 2011.

- Duff, T.J.; Chong, D.M.; Cirulis, B.A.; Walsh, S.F.; Penman, T.D.; Tolhurst, K.G. Understanding risk: Representing fire danger using spatially explicit fire simulation ensembles. In Advances in Forest Fire Research; Viegas, D.X., Ed.; Imprensa da Universidade de Coimbra: Coimbra, Portugal, 2014; pp. 1286–1294.

- Finkele, K.; Mills, G.A.; Beard, G.; Jones, D.A. National gridded drought factors and comparison of two soil moisture deficit formulations used in prediction of Forest Fire Danger Index in Australia. Aust. Meteorol. Mag. 2006, 55, 183–197.

- Walsh, S.F.; Nyman, P.; Sheridan, G.J.; Baillie, C.C.; Tolhurst, K.G.; Duff, T.J. Hillslope-scale prediction of terrain and forest canopy effects on temperature and near-surface soil moisture deficit. Int. J. Wildland Fire 2017, 26, 191–208.

- Nyman, P.; Sherwin, C.B.; Langhans, C.; Lane, P.N.J.; Sheridan, G.J. Downscaling regional climate data to calculate the radiative index of dryness in complex terrain. Aust. Met. Ocean. J. 2014, 64, 109–122.

- Duff, T.J.; Chong, D.M.; Tolhurst, K.G. Indices for the evaluation of wildfire spread simulations using contemporaneous predictions and observations of burnt area. Environ. Model. Softw. 2016, 83, 276–285.

- Duff, T.J.; Chong, D.M.; Tolhurst, K.G. Using discrete event simulation cellular automata models to determine multi-mode travel times and routes of terrestrial suppression resources to wildland fires. Eur. J. Oper. Res. 2015, 241, 763–770.

- Pastor, E.; Zárate, L.; Planas, E.; Arnaldos, J. Mathematical models and calculation systems for the study of wildland fire behaviour. Prog. Energ. Combust. 2003, 29, 139–153.

- Stockwell, D.R.B.; Peterson, A.T. Effects of sample size on accuracy of species distribution models. Ecol. Model. 2002, 148, 1–13.

- Duff, T.J.; Chong, D.M.; Cirulis, B.A.; Walsh, S.F.; Penman, T.D.; Tolhurst, K.G. Gaining benefits from adversity: The need for systems and frameworks to maximise the data obtained from wildfires. In Advances in Forest Fire Research; Viegas, D.X., Ed.; Imprensa da Universidade de Coimbra: Coimbra, Portugal, 2014; pp. 766–774.

- Hawkins, D.M. The problem of overfitting. J. Chem. Inf. Comput. Sci. 2004, 44, 1–12.

- Fonollosa, J.; Solórzano, A.; Marco, S. Chemical sensor systems and associated algorithms for fire detection: A review. Sensors 2018, 18, 553.

- Loschiavo, J.; Cirulis, B.; Zuo, Y.; Hradsky, B.A.; Di Stefano, J. Mapping prescribed fire severity in south-east Australian eucalypt forests using modelling and satellite imagery: A case study. Int. J. Wildland Fire 2017, 26, 491–497.

| Fire Name | Locality | Start Date | Start Time | Simulated Until | Burnt (ha) |
|---|---|---|---|---|---|
| Avoca | Victoria | 14 January 1985 | 13:50 | 19:00 | 21,147 |
| Beechworth | Victoria | 7 February 2009 | 17:55 | 2:00 ¹ | 10,939 |
| Beerburrum Day 2 | Queensland | 7 November 1994 | 12:50 | 18:00 | 2472 |
| Bunyip | Victoria | 7 February 2009 | 12:20 | 17:45 | 7768 |
| Churchill | Victoria | 7 February 2009 | 13:20 | 18:15 | 5802 |
| Murrindindi | Victoria | 7 February 2009 | 14:40 | 18:15 | 21,757 |
| Redesdale | Victoria | 7 February 2009 | 14:45 | 18:00 | 3850 |
| Stawell | Victoria | 31 December 2005 | 16:44 | 2:00 ¹ | 7511 |
| Wangary | South Aust. | 11 January 2005 | 16:30 | 14:30 | 45,810 |

| Fire Name | Description |
|---|---|
| Avoca | Dry eucalypt forest/grazing agricultural land mix, relatively flat terrain, moderate spotting |
| Beechworth | Dry eucalypt forest/pine plantation, undulating terrain, moderate spotting |
| Beerburrum Day 2 | Eucalypt forest/pine plantation, undulating terrain, substantial spotting |
| Bunyip | Wet and dry eucalypt forest, hilly terrain, substantial spotting |
| Churchill | Pine and eucalypt plantation, wet eucalypt forest, mountainous terrain, substantial spotting |
| Murrindindi | Grassland/wet and dry eucalypt forest, rural residential areas, mountainous terrain, very substantial spotting |
| Redesdale | Grazing with some remnant eucalypts, undulating terrain, minimal spotting |
| Stawell | Grazing/cropping/remnant dry eucalypt forest patches, undulating terrain, rural residential areas, minimal spotting. Fire suppression in grasslands limited flank spread |
| Wangary | Mainly grazing/cropping farmland, undulating terrain, minimal spotting |

| Fire Name | Simulated (ha) | Intersection (ha) | Deviation (°) | ADIunder | ADIover | ADI |
|---|---|---|---|---|---|---|
| Avoca | 4173 | 4173 | 4.32 | 4.07 | 0.00 | 4.07 |
| Beerburrum Day 2 | 2807 | 1947 | 12.50 | 0.27 | 0.44 | 0.71 |
| Bunyip | 30,365 | 7734 | 6.75 | 0.00 | 2.93 | 2.93 |
| Churchill | 1522 | 1510 | 2.17 | 2.84 | 0.01 | 2.85 |
| Murrindindi | 25,813 | 18,173 | 1.87 | 0.20 | 0.42 | 0.62 |
| Redesdale | 3848 | 3012 | 3.71 | 0.28 | 0.28 | 0.56 |
| Beechworth | 3119 | 1759 | 5.41 | 5.22 | 0.77 | 5.99 |
| Wangary | 33,771 | 32,724 | 4.14 | 0.40 | 0.03 | 0.43 |
| Stawell | 21,624 | 5984 | 2.11 | 0.26 | 2.61 | 2.87 |
| Total | 127,042 | 77,016 | | 13.54 | 7.49 | 21.03 |
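The index columns in the table above appear to follow from the area columns by simple ratios. The sketch below assumes a definition that is not stated in this section but is consistent with the tabulated values and with the burnt areas in the fire summary table: ADIunder is the burnt-but-not-simulated area divided by the intersection, ADIover is the simulated-but-not-burnt area divided by the intersection, and ADI is their sum. The function name `area_difference_indices` is hypothetical.

```python
def area_difference_indices(observed_ha, simulated_ha, intersection_ha):
    """Return (ADI_under, ADI_over, ADI) from three areas in hectares.

    Assumed definition (inferred from the tabulated values, not quoted
    from the paper): each error area is normalised by the intersection.
    """
    if intersection_ha <= 0:
        raise ValueError("intersection area must be positive")
    under_ha = observed_ha - intersection_ha   # burnt but not simulated
    over_ha = simulated_ha - intersection_ha   # simulated but not burnt
    adi_under = under_ha / intersection_ha
    adi_over = over_ha / intersection_ha
    return adi_under, adi_over, adi_under + adi_over


# Avoca row: burnt 21,147 ha, simulated 4173 ha, intersection 4173 ha.
adi_under, adi_over, adi = area_difference_indices(21147, 4173, 4173)
print(f"{adi_under:.2f} {adi_over:.2f} {adi:.2f}")
```

Under this assumed definition, a simulation that lies entirely inside the observed perimeter (as for Avoca) has ADIover of zero, so all of its error is under-prediction.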

| Fire Name | Simulated (ha) | Intersection (ha) | Deviation (°) | ADIunder | ADIover | ADI |
|---|---|---|---|---|---|---|
| Avoca | 3827 | 3827 | 4.05 | 4.53 | 0.00 | 4.53 |
| Beerburrum Day 2 | 2807 | 1947 | 12.50 | 0.27 | 0.44 | 0.71 |
| Bunyip | 24,768 | 7712 | 5.47 | 0.01 | 2.21 | 2.22 |
| Churchill | 4691 | 4053 | 0.79 | 0.43 | 0.16 | 0.59 |
| Murrindindi | 28,461 | 19,648 | 1.24 | 0.11 | 0.45 | 0.56 |
| Redesdale | 2723 | 2515 | 2.82 | 0.53 | 0.08 | 0.61 |
| Beechworth | 7939 | 2727 | 14.91 | 3.01 | 1.91 | 4.92 |
| Wangary | 26,732 | 26,066 | 3.95 | 0.76 | 0.03 | 0.78 |
| Stawell | 12,357 | 5383 | 0.49 | 0.40 | 1.30 | 1.69 |
| Total | 114,305 | 73,877 | | 10.05 | 6.58 | 16.61 |

| Fire Name | ΔADIunder | ΔADIover | ΔADI |
|---|---|---|---|
| Avoca | 0.46 | 0.00 | 0.46 |
| Beerburrum Day 2 | 0.00 | 0.00 | 0.00 |
| Bunyip | 0.01 | −0.72 | −0.71 |
| Churchill | −2.41 | 0.15 | −2.26 |
| Murrindindi | −0.09 | 0.03 | −0.06 |
| Redesdale | 0.25 | −0.20 | 0.05 |
| Beechworth | −2.21 | 1.14 | −1.07 |
| Wangary | 0.36 | 0.00 | 0.35 |
| Stawell | 0.14 | −1.31 | −1.18 |
| Total | −3.49 | −0.91 | −4.42 |
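The ΔADI column above can be reproduced from the two preceding results tables. The sketch below assumes that each difference is the ADI in the second results table minus the ADI in the first, so that a negative value means a lower error in the second set of simulations. The variable names are illustrative only.

```python
# ADI per fire, copied from the first and second results tables.
adi_first = {
    "Avoca": 4.07, "Beerburrum Day 2": 0.71, "Bunyip": 2.93,
    "Churchill": 2.85, "Murrindindi": 0.62, "Redesdale": 0.56,
    "Beechworth": 5.99, "Wangary": 0.43, "Stawell": 2.87,
}
adi_second = {
    "Avoca": 4.53, "Beerburrum Day 2": 0.71, "Bunyip": 2.22,
    "Churchill": 0.59, "Murrindindi": 0.56, "Redesdale": 0.61,
    "Beechworth": 4.92, "Wangary": 0.78, "Stawell": 1.69,
}

# Assumed sign convention: second table minus first table.
delta_adi = {
    fire: round(adi_second[fire] - adi_first[fire], 2) for fire in adi_first
}
total_delta = round(sum(delta_adi.values()), 2)

for fire, delta in delta_adi.items():
    print(f"{fire:18s} {delta:+.2f}")
print(f"{'Total':18s} {total_delta:+.2f}")
```

Read this way, the per-fire differences show where the change helped (for example Churchill and Stawell) and where it made the fit worse (Avoca and Wangary), which a single total would hide.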

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Duff, T.J.; Cawson, J.G.; Cirulis, B.; Nyman, P.; Sheridan, G.J.; Tolhurst, K.G. Conditional Performance Evaluation: Using Wildfire Observations for Systematic Fire Simulator Development. Forests 2018, 9, 189. https://doi.org/10.3390/f9040189