Numerical Encodings of Amino Acids in Multivariate Gaussian Modeling of Protein Multiple Sequence Alignments

Abstract

1. Introduction

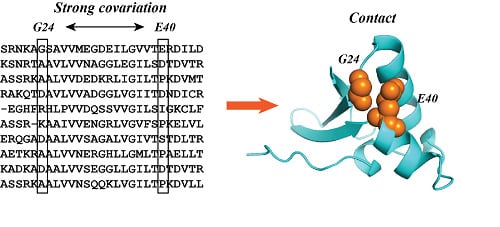

2. A Gaussian Model for Protein Contact Prediction

2.1. A Multiple Sequence Alignment and Its Numeric Representation

2.2. A Gaussian Model for the Alignment
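
The Gaussian model treats each numerically encoded sequence as one sample from a multivariate normal distribution. The sketch below shows how the mean, a regularized covariance, and the precision (inverse covariance) matrix could be estimated. It assumes a simple ridge-style prior that adds `lam` times the identity to the maximum-likelihood covariance; `fit_gaussian` and `lam` are hypothetical names, and the paper's actual prior may differ. Some regularization of this kind is needed because the sample covariance of an encoded alignment is often singular, for instance when there are fewer sequences than encoded columns, or when the one-hot entries at a position always sum to one.

```python
import numpy as np

def fit_gaussian(x, lam=0.1):
    """Fit a multivariate Gaussian to the rows of x (N sequences x D encoded columns).

    Returns (mean, covariance, precision). Assumption: the prior is a ridge term,
    cov = S + lam * I, where S is the maximum-likelihood sample covariance.
    """
    x = np.asarray(x, dtype=float)
    mu = x.mean(axis=0)
    centered = x - mu
    s = centered.T @ centered / x.shape[0]   # maximum-likelihood covariance (divide by N)
    cov = s + lam * np.eye(x.shape[1])       # hypothetical prior weight lam keeps cov invertible
    precision = np.linalg.inv(cov)           # inverse covariance, used to extract couplings
    return mu, cov, precision
```

With `lam=0` the covariance reduces to the biased sample covariance, which is why a positive prior weight is required whenever that matrix is singular.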

2.3. Extracting Coupling Information from the Parameters of the Gaussian Model
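
Once a precision matrix is available, one common way to turn it into a score for each residue pair is to take the Frobenius norm of the k x k block that links positions i and j, then apply the average product correction (APC) to damp background signal. The sketch below assumes that layout (position i occupies columns i*k through i*k + k - 1, with k = 20 for binarized vectors); `coupling_scores` is a hypothetical name, and the exact score used by GaussCovar may differ.

```python
import numpy as np

def coupling_scores(precision, length, k, apc=True):
    """Score every residue pair (i, j) from an (L*k) x (L*k) precision matrix.

    Assumed layout: position i occupies rows/columns i*k .. i*k + k - 1.
    Score = Frobenius norm of the k x k block linking i and j, optionally
    corrected by the average product correction (APC).
    """
    p = np.asarray(precision, dtype=float).reshape(length, k, length, k)
    # Frobenius norm of each k x k block -> L x L matrix
    scores = np.sqrt((p ** 2).sum(axis=(1, 3)))
    np.fill_diagonal(scores, 0.0)  # self-couplings are not contacts
    if apc and length > 1:
        row_mean = scores.sum(axis=1) / (length - 1)         # mean over j != i
        total_mean = scores.sum() / (length * (length - 1))  # mean over all i != j
        if total_mean > 0:
            scores = scores - np.outer(row_mean, row_mean) / total_mean
            np.fill_diagonal(scores, 0.0)
    return scores
```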

3. Results

3.1. Amino Acid Representation 1: 20-Dimensional Binarized Vectors
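
A 20-dimensional binarized representation maps each amino acid to a vector with a single 1 at that amino acid's index, so an alignment of N sequences of length L becomes an N x 20L matrix. The sketch below assumes an alphabetical one-letter ordering and encodes gaps and non-standard symbols as the all-zero vector; both choices, and the name `one_hot_msa`, are hypothetical rather than taken from the paper.

```python
import numpy as np

# Hypothetical alphabet ordering; the ordering used in the paper is not given here.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
AA_INDEX = {aa: idx for idx, aa in enumerate(AMINO_ACIDS)}

def one_hot_msa(msa):
    """Encode an MSA (list of equal-length strings) as an N x (20*L) binary matrix.

    Assumption: gaps ('-') and non-standard symbols map to the all-zero vector.
    """
    n_seq, length = len(msa), len(msa[0])
    x = np.zeros((n_seq, length, len(AMINO_ACIDS)))
    for s, seq in enumerate(msa):
        if len(seq) != length:
            raise ValueError("all aligned sequences must have the same length")
        for i, aa in enumerate(seq.upper()):
            idx = AA_INDEX.get(aa)
            if idx is not None:
                x[s, i, idx] = 1.0
    # Flatten so that columns 20*i .. 20*i + 19 describe alignment position i.
    return x.reshape(n_seq, length * len(AMINO_ACIDS))
```

For example, `one_hot_msa(["AC-", "AD-"])` returns a 2 x 60 matrix whose last 20 columns are zero because position 3 is a gap in both sequences.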

3.2. Amino Acid Representation 2: One-Dimensional Property-Based Vectors
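
A one-dimensional property-based representation replaces each residue by a single number taken from a physicochemical scale, so an alignment becomes an N x L matrix. As an illustration only, the sketch below uses the Kyte-Doolittle hydropathy scale; the property actually used in the paper is not stated here, and the name `property_msa` and the gap value of 0.0 are assumptions.

```python
import numpy as np

# Kyte-Doolittle hydropathy scale, used here only as an example property.
KYTE_DOOLITTLE = {
    "A": 1.8, "R": -4.5, "N": -3.5, "D": -3.5, "C": 2.5,
    "Q": -3.5, "E": -3.5, "G": -0.4, "H": -3.2, "I": 4.5,
    "L": 3.8, "K": -3.9, "M": 1.9, "F": 2.8, "P": -1.6,
    "S": -0.8, "T": -0.7, "W": -0.9, "Y": -1.3, "V": 4.2,
}

def property_msa(msa, scale=KYTE_DOOLITTLE, gap_value=0.0):
    """Encode an MSA as an N x L matrix holding one scalar per residue.

    Assumption: gaps and unknown symbols take the hypothetical value gap_value.
    """
    return np.array([[scale.get(aa, gap_value) for aa in seq.upper()] for seq in msa])
```

Because each position contributes a single column, the covariance matrix is only L x L, far smaller than with 20-dimensional vectors.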

3.3. Amino Acid Representation 3: K-Dimensional BLOSUM-Based Vectors
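
One way to derive K-dimensional amino-acid vectors from a symmetric substitution matrix such as BLOSUM62 is an eigendecomposition: keep the K largest eigenvalues and scale the matching eigenvectors by their square roots, so that dot products between vectors approximate the matrix entries. This is an assumed construction shown for illustration, not necessarily the paper's procedure; `embed_similarity_matrix` is a hypothetical name, and clipping negative eigenvalues to zero is a design choice of this sketch.

```python
import numpy as np

def embed_similarity_matrix(sim, k):
    """Return an n x k matrix whose rows are vectors (n = 20 for amino acids).

    Assumption: S is approximated by V_k diag(w_k) V_k^T using the k largest
    eigenvalues; rows of V_k * sqrt(w_k) then have dot products close to S.
    Negative eigenvalues are clipped to zero.
    """
    sim = np.asarray(sim, dtype=float)
    if not np.allclose(sim, sim.T):
        raise ValueError("similarity matrix must be symmetric")
    w, v = np.linalg.eigh(sim)            # eigenvalues in ascending order
    order = np.argsort(w)[::-1][:k]       # indices of the k largest eigenvalues
    w_k = np.clip(w[order], 0.0, None)    # discard negative parts
    return v[:, order] * np.sqrt(w_k)     # scale each kept eigenvector column
```

In practice `sim` would be the 20 x 20 BLOSUM matrix with rows ordered to match the alphabet used for encoding; each residue in the alignment is then replaced by its K-dimensional row.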

4. Discussion

5. Materials and Methods

5.1. Vector Representations of Amino Acids

5.2. GaussCovar: Residue Contact Predictions from an MSA

| Algorithm 1. GaussCovar: extracting residue contacts from a multiple sequence alignment. |

| Input: an MSA with N sequences of length L; t, the type of amino acid encoding; and the weight of the prior. |

5.3. Assessing the Performance of GaussCovar
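
Contact predictions are usually assessed by the precision of the highest-scoring residue pairs, that is, the fraction of them that are true contacts in an experimental structure. The sketch below assumes a boolean contact map is already available and ignores pairs closer than `min_sep` residues in sequence; the default of 6 is an illustrative choice rather than the paper's setting, and `top_precision` is a hypothetical helper.

```python
def top_precision(scores, contacts, n_top, min_sep=6):
    """Fraction of the n_top highest-scoring pairs (i < j, j - i >= min_sep) that are contacts.

    scores and contacts are L x L nested sequences (lists or NumPy arrays);
    only the upper triangle is read.
    """
    length = len(scores)
    pairs = [(scores[i][j], i, j)
             for i in range(length)
             for j in range(i + min_sep, length)]
    pairs.sort(key=lambda t: t[0], reverse=True)   # best-scoring pairs first
    top = pairs[:n_top]
    if not top:
        return 0.0
    hits = sum(1 for _, i, j in top if contacts[i][j])
    return hits / len(top)
```

Typical choices for `n_top` are fractions of the alignment length, such as L/5 or L.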

5.4. Test Datasets

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| MSA | Multiple Sequence Alignment |

| PCA | Principal Component Analysis |


© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Koehl, P.; Orland, H.; Delarue, M. Numerical Encodings of Amino Acids in Multivariate Gaussian Modeling of Protein Multiple Sequence Alignments. Molecules 2019, 24, 104. https://doi.org/10.3390/molecules24010104