A Systematic Review of the Factors Influencing the Estimation of Vegetation Aboveground Biomass Using Unmanned Aerial Systems

Abstract

1. Introduction

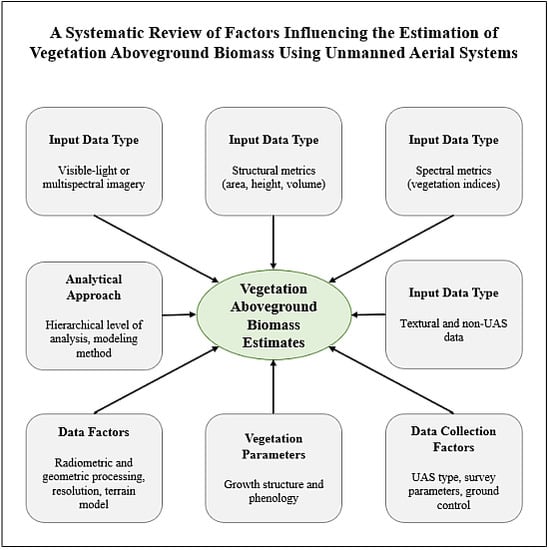

2. Methods

- How well can structural data estimate vegetation AGB? Which structural metrics are best?

- How accurately can multispectral data predict vegetation AGB? Which multispectral indices perform the best?

- How well can RGB spectral data estimate vegetation AGB? Which RGB indices perform the best? How do RGB data compare to MS data? Is including RGB textural information useful?

- Does combining spectral and structural variables improve AGB estimation models beyond either data type alone?

- What other data combinations or types are useful?

- How do the study environment and data collection impact AGB estimation from UAS data?

- How do vegetation growth structure and phenology impact AGB estimation accuracy?

- How do data analysis methods impact AGB estimation accuracy?

3. Results and Discussion

3.1. Input Data

3.1.1. How Well Can Structural Data Estimate Vegetation AGB? Which Structural Metrics Are Best?

3.1.2. How Accurately Can Multispectral Data Predict Vegetation AGB? Which Multispectral Indices Perform the Best?

3.1.3. How Well Can RGB Spectral Data Estimate Vegetation AGB? Which RGB Indices Perform the Best? How Do RGB Data Compare to MS Data? Is RGB Textural Information Useful?

3.1.4. Does Combining Spectral and Structural Variables Improve AGB Estimation Models Beyond Either Data Type Alone?

3.1.5. What Other Data Combinations or Types Are Useful?

3.2. Other Factors Influencing AGB Estimation

3.2.1. How Do the Study Environment and Data Collection Impact AGB Estimation from UAS Data?

Data Collection

Environment and Weather Conditions

3.2.2. How Do Vegetation Growth Structure and Phenology Impact AGB Estimation Accuracy?

Growth Stage

Growth Structure

3.2.3. How Do Data Analysis Methods Impact AGB Estimation Accuracy?

Radiometric and Geometric Processing

Spatial and Temporal Resolution

Hierarchical Level of Analysis

Source of Terrain Model

Statistical Model Type

3.3. Future Directions of Research

4. Conclusions

- How well can structural data estimate vegetation AGB? Which structural metrics are best?

- How well can multispectral data predict vegetation AGB? Which multispectral indices perform the best?

- How well can RGB spectral data estimate vegetation AGB? Which RGB indices perform the best? How do RGB data compare to MS data?

- Does combining spectral and structural variables improve AGB estimation models beyond either data type alone?

- What other data combinations or types are useful?

- How do the study environment and data collection impact AGB estimation from UAS data?

- How do vegetation growth structure and phenology impact AGB estimation accuracy?

- How do data analysis methods impact AGB estimation accuracy?

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Study Parameters | | Data Collection Parameters | | | | Data Analysis Parameters | | | | | | | Results | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Species and Location | Ground Truth Data ¹ | Sensor ² | Flight Height | Overlap, Sidelap | GSD (cm/pixel) | Area Based? | Spectral Data | Structural Data | Texture Data | Other Data | Terrain Model Source | Best Statistical Method ³ | R² | Top Variables | Study |
| Corn, alfalfa, soybean, USA | Direct measurements of dry biomass | RGB | 200 m | Unknown | 10 cm | Area-based | NGRDI | n/a | n/a | n/a | n/a | SLR | 0.39–0.88 | NGRDI | [60] |
| Ryegrass crop, Japan | Direct measurements of dry biomass | 3-band MS | 100 m | 50% | 2 cm | Area-based | DN of each band | n/a | n/a | n/a | n/a | MLR | 0.84 | DN of R, G, NIR | [18] |
| Cover crop, USA | Direct measurements of dry biomass | 4-band MS | 100 m | 70%, 80% | 15 cm | Area-based | 4 indices | n/a | n/a | n/a | n/a | SLR | 0.92 | NDVI and GNDVI | [11] |
| Winter wheat crop, Germany | Direct measurements of dry biomass | 4-band MS | 25 m | 96%, 60% | 4 cm | Area-based | NDVI, REIP | n/a | n/a | n/a | n/a | SLR | 0.72–0.85 | NDVI, REIP | [24] |
| Wheat crop, China | Direct measurements of dry biomass | 2-band MS | 1 m | Unknown | <1 cm | Area-based | NDVI, RVI | n/a | n/a | n/a | n/a | SLR | 0.62–0.66 | RVI, NDVI | [58] |
| Wheat crop, Finland | Direct measurements of dry biomass | 42-band HS | 140 m | 78%, 67% | 20 cm | Area-based | All HS bands, NDVI | n/a | n/a | n/a | DTM from ALS data | KNN | 0.57–0.80 | All HS bands with correction | [61] |
| Rice crop, China | Direct measurements of dry biomass | 4-band MS | 80 m | 70% | 8 cm | Area-based | 10 spectral indices | n/a | n/a | n/a | n/a | LME | 0.75–0.89 | All VIs | [62] |
| Tallgrass prairie, USA | Direct measurements of dry biomass | 3-band MS | 5, 20, 50 m | Unknown | 1–9 cm | Area-based | NDVI | n/a | n/a | n/a | n/a | SLR | 0.86–0.94 | NDVI | [77] |
| Coastal wetland, USA | Direct measurements of fresh and dry biomass | 5-band MS | 90 m | 75% | 6.1 cm | Area-based | 6 spectral indices | n/a | n/a | n/a | n/a | SLR | 0.36–0.71 | NDVI | [43] |
| Winter wheat, China | Direct measurements of dry biomass | RGB | 50 m | Unknown | 1 cm | Area-based | 3 RGB bands, 6 RGB indices | n/a | 8 image textures | n/a | DTM interpolated from ground points | SWR, RF | 0.84 | All | [2] |
| Rice crop, China | Direct measurements of dry biomass | 6-band MS | 100 m | Unknown | 5 cm | Area-based | 8 spectral indices | n/a | 8 textures, NDTIs | n/a | n/a | SWR | 0.86 | 2 texture, 1 RGB VI | [26] |
| Barley crop, Germany | Direct measurements of fresh and dry biomass | RGB | 50 m | >80% | <1 cm | Area-based | n/a | Mean height | n/a | n/a | Leaf-off DSM | SER | 0.81–0.82 | Mean height | [23] |
| Rice crop, Germany | Direct measurements of fresh and dry biomass | RGB | 7–10 m | 95% | <1 cm | Area-based | n/a | Mean plot height | n/a | n/a | DTM from interpolating plant-free point | SLR | 0.68–0.81 | Mean height | [74] |
| Black oat crop, Brazil | Direct measurements of fresh and dry biomass | RGB | 25 m | 90% | <2 cm | Area-based | n/a | Mean plot height | n/a | n/a | Leaf-off DTM | SER | 0.69–0.94 | Mean height | [75] |
| Temperate grasslands, Germany | Direct measurements of dry biomass | RGB | 20 m | 80% | <1 cm | Area-based | n/a | Mean plot height | n/a | n/a | DTM from raster ground point classification | RMAR | 0.62–0.81 | Mean height | [19] |
| Deciduous forest, USA | Allometric measurements of tree biomass | RGB | 80 m | 80% | 3.4 cm | Area-based | n/a | Mean height | n/a | n/a | LiDAR DTM | SLR | 0.80 | Mean height | [55] |
| Coniferous forest, China | Allometric measurements of tree biomass | RGB | 400 m | 80%, 60% | 5 cm | Individual trees | n/a | Tree height | n/a | n/a | DTM from point cloud classification | SER | 0.98 | Max height | [1] |
| Pine tree plantation, Portugal | Allometric measurements of tree biomass | RGB | 170 m | 80%, 75% | 6 cm | Individual trees | n/a | Tree height, crown area, diameter | n/a | n/a | DTM from point cloud classification | SER | 0.79–0.84 | All | [50] |
| Tropical forest, Costa Rica | Allometric measurements of tree biomass | RGB | 30–40 m | 90%, 75% | ~10 cm | Area-based | n/a | Canopy height, proportion, roughness, openness | n/a | n/a | DTMs from point cloud and ground-based GPS interpolation | SLR | 0.81–0.83 | Median height | [48] |
| Temperate grasslands, China | Direct measurements of dry biomass | RGB | 3 m, 20 m | 70% | ~1 cm | Area-based | n/a | 5 canopy height metrics | n/a | n/a | DTM from point cloud ground point classification | SLogR, SLR | 0.76–0.78 | Mean height, median height | [65] |
| Temperate grasslands, Germany | Direct measurements of fresh and dry biomass | RGB | 25 m | 80% | ~1 cm | Area-based | n/a | 10 canopy height metrics | n/a | n/a | DTM from TLS data | SLR | 0.0–0.62 | 75th percentile of height | [63] |
| Eggplant, tomato and cabbage crops, India | Direct measurements of fresh biomass | RGB | 20 m | 80% | <1 cm | Area-based | n/a | 14 canopy height metrics | n/a | n/a | DTM from point cloud ground point classification | RF | 0.88–0.95 | All | [34] |
| Mixed forest, Japan | Allometric measurements of tree biomass | RGB | 650 m | 85% | 14 cm | Area-based | n/a | 12 point cloud and 4 CHM metrics | n/a | n/a | LiDAR DTM | RF | 0.87–0.94 | 5 height metrics | [28] |
| Onion crop, Spain | Direct measurements of dry leaf and bulb biomass | RGB | 44 m | 60%, 40% | 1 cm | Area-based | n/a | 3 canopy height, volume and cover metrics | n/a | n/a | Bare-earth DTM | SER | 0.76–0.95 | Canopy volume | [22] |
| Rye and timothy pastures, Norway | Direct measurements of dry biomass | RGB | 30 m | 90%, 60% | <2 cm | Area-based | n/a | Mean plot volume | n/a | n/a | None | SLR | 0.54 | Volume | [64] |
| Boreal forest, Alaska | Allometric measurements of tree biomass | RGB | 100 m | 90% | 1.9–2.7 cm | Individual tree crowns and plot level | n/a | Tree crown volume | n/a | n/a | DTM from point cloud classification | OLSR | 0.74–0.92 | Canopy volume | [8] |
| Tropical woodland, Malawi | Allometric measurements of tree biomass | RGB, 2-band MS | 286–487 m | 90%, 70–80% | 10–15 cm | Area-based | 15 RGB and 15 MS indices per 6 bands | 86 canopy height or canopy density features | n/a | n/a | DTM derived from point cloud ground point classification | MLR | 0.76 | 4 height metrics | [57] |
| Tropical forests, Myanmar | Allometric measurements of tree biomass | RGB | 91–96 m | 82% | <4 cm | Area-based | 4 RGB indices of spectral change | 11 height variables, 3 disturbed area variables | n/a | n/a | None | Type 1 Tobit | 0.77 | 2 height metrics | [49] |
| Tropical woodland, Malawi | Allometric measurements of biomass | RGB | 325 m | 80%, 90% | ~5 cm | Area-based | 15 spectral variables per RGB band | 15 canopy height metrics, 10 canopy density metrics | n/a | n/a | DTMs derived from ground point classification or SRTM data | MLR | 0.67 | 2 height, 1 spectral metric | [30] |
| Aquatic plants, China | Direct measurements of dry biomass | RGB | 50 m | 60%, 80% | 10 cm | Area-based | 7 RGB indices | 3 height metrics | n/a | n/a | Winter DTM | SWR | 0.84 | 2 spectral, 3 height metrics | [9] |
| Cover crop, Switzerland | Direct measurements of dry biomass | RGB, 5-band MS | 50 m, 30 m | >75%, >65% | <10 cm | Area-based | 1 RGB index, 2 MS indices | Plant height and canopy cover | n/a | n/a | GPS measurements taken on the ground | SLR | 0.74 | 90th percentile of height | [21] |
| Rice crop, China | Direct measurements of dry biomass | RGB, 25-band MS | 25 m | 60%, 75% | 1–5 cm | Area-based | 9 spectral indices | Mean crop height | n/a | n/a | DTM from point cloud ground point classification | RF | 0.90 | Height, 7 RGB VIs, 3 MS VIs | [5] |
| Winter wheat, China | Direct measurements of dry biomass | RGB, 125-band HS | 50 m | | 1–2.5 cm | Area-based | 3 RGB bands, 4 HS bands, 9 RGB indices, 8 HS indices | Crop height | n/a | n/a | DTM interpolated from manual ground point classification | RF | 0.96 | All RGB data | [20] |
| Winter wheat, Germany | Direct measurements of fresh and dry biomass | RGB | 50 m | 60%, 60% | ~1 cm | Area-based | 5 spectral variables | Plant height, crop area | n/a | n/a | DTM from leaf-off flight | MLR | 0.70–0.94 | 2 principal components | [25] |
| Wheat crop, China | Direct measurements of dry biomass | RGB | 30 m | 80%, 60% | 1.66 cm | Area-based | 10 spectral indices | 8 height metrics | n/a | n/a | Leaf-off DTM | RF | 0.76 | All | [12] |
| Maize crop, China | Direct measurements of dry biomass | RGB, 4-band MS | 60 m | 80%, 75% | <1 cm | Area-based | 11 spectral indices | 3 canopy metrics | n/a | n/a | DTM interpolated from un-vegetated points | RF | 0.94 | 3 structural, 2 RGB VI, 1 MS VI metrics | [16] |
| Maize crop, China | Direct measurements of dry biomass | RGB | 150 m | 80%, 40% | 2 cm | Area-based | 8 spectral indices | 4 height metrics | n/a | n/a | DTM interpolated from ALS | RF | 0.78 | 3 structural, 2 RGB metrics | [15] |
| Maize crop, China | Direct measurements of fresh and dry biomass | RGB | 30 m | 90% | <1 cm | Area-based | 6 spectral variables | 6 canopy height variables | n/a | n/a | None | MLR | 0.85 | 3 RGB, 1 structural metric | [4] |
| Barley crop, Germany | Direct measurements of dry biomass | RGB | 50 m | Unknown | 1 cm | Area-based | 3 RGB indices | Mean crop height | n/a | VI × Height | Leaf-off DTM | MNLR | 0.84 | 1 structural, 1 spectral, 4 structural times spectral metrics | [66] |
| Poplar plantation, Spain | Allometric measurements of tree biomass | RGB, 5-band MS | 100 m | 80%, 60% | 4–6 cm | Individual trees | NDVI | Tree height | n/a | NDVI × Height | None | MLR | 0.54 | NDVI × Height | [67] |
| Winter wheat, China | Direct measurements of dry biomass | 125-band HS | 50 m | Unknown | 1 cm | Area-based | 5 HS bands, 14 HS VIs | Plant height | n/a | VI × Height | DTM interpolated from identified ground points | PLSR | 0.78 | 8 structural times spectral metrics | [6] |
| Grassland, Germany | Direct measurements of dry biomass | RGB | 13–16 m and 60 m | Unknown | 1–2 cm | Area-based | RGBVI | Mean plot height | n/a | GrassI (height + RGBVI × 0.25) | Leaf-off DTM | SLR | 0.64 | Mean plot height | [68] |
| Soybean crop, USA | Direct measurements of dry biomass | RGB | 30 m | 90%, 90% | <1 cm | Area-based | 3 RGB bands, 17 RGB indices | 6 canopy height metrics, canopy volume | n/a | VI-weighted canopy volume | Winter DTM | SWR | 0.91 | All | [3] |
| Rice crop, China | Direct measurements of dry biomass | RGB, 12-band MS | 120 m | Unknown | 6.5 cm | Area-based | 11 MS indices + band reflectance of 12 bands | 17 TIN-derived metrics | n/a | Growing degree days (GDD) | Winter DTM | RF | 0.92 | All spectral, structural, GDD metrics | [13] |
| Ryegrass crop, Belgium | Direct measurements of dry biomass | RGB | 30 m | 80% | <2 cm | Area-based | 10 spectral indices | 7 canopy height metrics | n/a | GDD, ΔGDD between cuts | DTMs from interpolation of ground points and from leaf-off flights | MLR | 0.81 | 2 structural, 1 spectral, 2 GDD metrics | [69] |
| Maize crop, Belgium | Direct measurements of dry biomass | RGB | 50 m | 80% | ≤ 5 cm | Area-based | 5 spectral indices | Median plot height | n/a | Ground-based AGB estimate | LiDAR DTM | PLSR | 0.82 | All spectral metrics, mean height, field-measured AGB | [27] |
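Several of the predictors listed in the table are simple band ratios or height differences. The following is a minimal sketch, not any reviewed study's actual processing chain, of how such predictors might be computed with NumPy. The index formulas are the commonly used definitions of NDVI, NGRDI, and RGBVI; the combined index follows the table entry for GrassI (height + 0.25 × RGBVI). All function names are hypothetical, inputs are assumed to be co-registered reflectance and elevation arrays, and clipping negative canopy heights to zero is an assumption made here for illustration.

```python
import numpy as np


def normalized_difference(a, b):
    """Return (a - b) / (a + b), with NaN wherever a + b equals zero."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    out = np.full(denom.shape, np.nan)
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - R) / (NIR + R)."""
    return normalized_difference(nir, red)


def ngrdi(green, red):
    """Normalized Green-Red Difference Index: (G - R) / (G + R)."""
    return normalized_difference(green, red)


def rgbvi(red, green, blue):
    """RGB Vegetation Index: (G^2 - B*R) / (G^2 + B*R)."""
    red = np.asarray(red, dtype=float)
    green = np.asarray(green, dtype=float)
    blue = np.asarray(blue, dtype=float)
    return normalized_difference(green ** 2, blue * red)


def mean_canopy_height(dsm, dtm):
    """Mean plot height from a surface model minus a terrain model.

    Assumption: negative differences are treated as noise and set to zero.
    """
    chm = np.asarray(dsm, dtype=float) - np.asarray(dtm, dtype=float)
    chm = np.clip(chm, 0.0, None)
    return float(np.nanmean(chm))


def grass_index(mean_height, mean_rgbvi):
    """Combined index as listed in the table: height + 0.25 * RGBVI."""
    return mean_height + 0.25 * mean_rgbvi


if __name__ == "__main__":
    # Toy 2 x 2 plot with made-up reflectance and elevation values.
    red = np.array([[0.10, 0.12], [0.09, 0.11]])
    green = np.array([[0.30, 0.28], [0.31, 0.29]])
    blue = np.array([[0.08, 0.09], [0.07, 0.08]])
    dsm = np.array([[101.2, 101.0], [100.9, 101.1]])
    dtm = np.full((2, 2), 100.8)

    height = mean_canopy_height(dsm, dtm)
    index = float(np.nanmean(rgbvi(red, green, blue)))
    print("mean height (m):", height)
    print("mean RGBVI:", index)
    print("GrassI:", grass_index(height, index))
```

Plot-level values such as these would then serve as the independent variables in whichever regression model (for example SLR, MLR, or RF) a study selects.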

References

1. Lin, J.; Wang, M.; Ma, M.; Lin, Y. Aboveground Tree Biomass Estimation of Sparse Subalpine Coniferous Forest with UAV Oblique Photography. Remote Sens. 2018, 10, 1849.
2. Yue, J.; Yang, G.; Tian, Q.; Feng, H.; Xu, K.; Zhou, C. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.
3. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Maimaitiyiming, M.; Hartling, S.; Peterson, K.T.; Maw, M.J.W.; Shakoor, N.; Mockler, T.; Fritschi, F.B. Vegetation Index Weighted Canopy Volume Model (CVM VI) for soybean biomass estimation from Unmanned Aerial System-based RGB imagery. ISPRS J. Photogramm. Remote Sens. 2019, 151, 27–41.
4. Niu, Y.; Zhang, L.; Zhang, H.; Han, W.; Peng, X. Estimating Above-Ground Biomass of Maize Using Features Derived from UAV-Based RGB Imagery. Remote Sens. 2019, 11, 1261.
5. Cen, H.; Wan, L.; Zhu, J.; Li, Y.; Li, X.; Zhu, Y.; Weng, H.; Wu, W.; Yin, W.; Xu, C.; et al. Dynamic monitoring of biomass of rice under different nitrogen treatments using a lightweight UAV with dual image-frame snapshot cameras. Plant Methods 2019, 15, 1–16.
6. Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of winter wheat above-ground biomass using unmanned aerial vehicle-based snapshot hyperspectral sensor and crop height improved models. Remote Sens. 2017, 9, 708.
7. Zhang, X.; Zhang, F.; Qi, Y.; Deng, L.; Wang, X.; Yang, S. New research methods for vegetation information extraction based on visible light remote sensing images from an unmanned aerial vehicle (UAV). Int. J. Appl. Earth Obs. Geoinf. 2019, 78, 215–226.
8. Alonzo, M.; Andersen, H.; Morton, D.C.; Cook, B.D. Quantifying Boreal Forest Structure and Composition Using UAV Structure from Motion. Forests 2018, 9, 119.
9. Jing, R.; Gong, Z.; Zhao, W.; Pu, R.; Deng, L. Above-bottom biomass retrieval of aquatic plants with regression models and SfM data acquired by a UAV platform – A case study in Wild Duck Lake Wetland, Beijing, China. ISPRS J. Photogramm. Remote Sens. 2017, 134, 122–134.
10. Maimaitijiang, M.; Ghulam, A.; Sidike, P.; Hartling, S.; Maimaitiyiming, M.; Peterson, K.; Shavers, E.; Fishman, J.; Peterson, J.; Kadam, S.; et al. Unmanned Aerial System (UAS)-based phenotyping of soybean using multi-sensor data fusion and extreme learning machine. ISPRS J. Photogramm. Remote Sens. 2017, 134, 43–58.
11. Yuan, M.; Burjel, J.C.; Isermann, J.; Goeser, N.J.; Pittelkow, C.M. Unmanned aerial vehicle–based assessment of cover crop biomass and nitrogen uptake variability. J. Soil Water Conserv. 2019, 74, 350–359.
12. Lu, N.; Zhou, J.; Han, Z.; Li, D.; Cao, Q.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cheng, T. Improved estimation of aboveground biomass in wheat from RGB imagery and point cloud data acquired with a low-cost unmanned aerial vehicle system. Plant Methods 2019, 15, 1–16.
13. Jiang, Q.; Fang, S.; Peng, Y.; Gong, Y.; Zhu, R.; Wu, X.; Ma, Y.; Duan, B.; Liu, J. UAV-Based Biomass Estimation for Rice-Combining Spectral, TIN-Based Structural and Meteorological Features. Remote Sens. 2019, 11, 890.
14. Viljanen, N.; Honkavaara, E. A Novel Machine Learning Method for Estimating Biomass of Grass Swards Using a Photogrammetric Canopy Height Model, Images and Vegetation Indices Captured by a Drone. Agriculture 2018, 8, 70.
15. Li, W.; Niu, Z.; Chen, H.; Li, D.; Wu, M.; Zhao, W. Remote estimation of canopy height and aboveground biomass of maize using high-resolution stereo images from a low-cost unmanned aerial vehicle system. Ecol. Indic. 2016, 67, 637–648.
16. Han, L.; Yang, G.; Dai, H.; Xu, B.; Yang, H.; Feng, H.; Li, Z.; Yang, X. Modeling maize above-ground biomass based on machine learning approaches using UAV remote-sensing data. Plant Methods 2019, 15, 1–19.
17. Näsi, R.; Viljanen, N.; Kaivosoja, J.; Alhonoja, K.; Hakala, T.; Markelin, L.; Honkavaara, E. Estimating Biomass and Nitrogen Amount of Barley and Grass Using UAV and Aircraft Based Spectral and. Remote Sens. 2018, 10, 1082.
18. Fan, X.; Kawamura, K.; Xuan, T.D.; Yuba, N.; Lim, J.; Yoshitoshi, R.; Minh, T.N.; Kurokawa, Y.; Obitsu, T. Low-cost visible and near-infrared camera on an unmanned aerial vehicle for assessing the herbage biomass and leaf area index in an Italian ryegrass field. Grassl. Sci. 2018, 64, 145–150.
19. Grüner, E.; Astor, T.; Wachendorf, M. Biomass Prediction of Heterogeneous Temperate Grasslands Using an SfM Approach Based on UAV Imaging. Agronomy 2019, 9, 54.
20. Yue, J.; Feng, H.; Jin, X.; Yuan, H.; Li, Z.; Zhou, C.; Yang, G.; Tian, Q. A Comparison of Crop Parameters Estimation Using Images from UAV-Mounted Snapshot Hyperspectral Sensor and High-Definition Digital Camera. Remote Sens. 2018, 10, 1138.
21. Roth, L.; Streit, B. Predicting cover crop biomass by lightweight UAS-based RGB and NIR photography: An applied photogrammetric approach. Precis. Agric. 2018, 19, 93–114.
22. Ballesteros, R.; Fernando, J.; David, O.; Moreno, M.A. Onion biomass monitoring using UAV-based RGB. Precis. Agric. 2018, 19, 840–857.
23. Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating Biomass of Barley Using Crop Surface Models (CSMs) Derived from UAV-Based RGB Imaging. Remote Sens. 2014, 6, 10395–10412.
24. Geipel, J.; Link, J.; Wirwahn, J.A.; Claupein, W. A Programmable Aerial Multispectral Camera System for In-Season Crop Biomass and Nitrogen Content Estimation. Agriculture 2016, 6, 4.
25. Schirrmann, M.; Giebel, A.; Gleiniger, F.; Pflanz, M.; Lentschke, J.; Dammer, K. Monitoring Agronomic Parameters of Winter Wheat Crops with Low-Cost UAV Imagery. Remote Sens. 2016, 8, 706.
26. Zheng, H.; Cheng, T.; Zhou, M.; Li, D.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Improved estimation of rice aboveground biomass combining textural and spectral analysis of UAV imagery. Precis. Agric. 2019, 20, 611–629.
27. Michez, A.; Bauwens, S.; Brostaux, Y.; Hiel, M.P.; Garré, S.; Lejeune, P.; Dumont, B. How far can consumer-grade UAV RGB imagery describe crop production? A 3D and multitemporal modeling approach applied to Zea mays. Remote Sens. 2018, 10, 1798.
28. Jayathunga, S.; Owari, T.; Tsuyuki, S. Digital Aerial Photogrammetry for Uneven-Aged Forest Management: Assessing the Potential to Reconstruct Canopy Structure and Estimate Living Biomass. Remote Sens. 2019, 11, 338.
29. Cunliffe, A.M.; Brazier, R.E.; Anderson, K. Ultra-fine grain landscape-scale quantification of dryland vegetation structure with drone-acquired structure-from-motion photogrammetry. Remote Sens. Environ. 2016, 183, 129–143.
30. Kachamba, D.J.; Ørka, H.O.; Gobakken, T.; Eid, T.; Mwase, W. Biomass Estimation Using 3D Data from Unmanned Aerial Vehicle Imagery in a Tropical Woodland. Remote Sens. 2016, 8, 968.
31. Zolkos, S.G.; Goetz, S.J.; Dubayah, R. A meta-analysis of terrestrial aboveground biomass estimation using lidar remote sensing. Remote Sens. Environ. 2013, 128, 289–298.
32. Zhao, F.; Guo, Q.; Kelly, M. Allometric equation choice impacts lidar-based forest biomass estimates: A case study from the Sierra National Forest, CA. Agric. For. Meteorol. 2012, 165, 64–72.
33. Li, F.; Zeng, Y.; Luo, J.; Ma, R.; Wu, B. Modeling grassland aboveground biomass using a pure vegetation index. Ecol. Indic. 2016, 62, 279–288.
34. Moeckel, T.; Dayananda, S.; Nidamanuri, R.R.; Nautiyal, S.; Hanumaiah, N.; Buerkert, A.; Wachendorf, M. Estimation of Vegetable Crop Parameter by Multi-temporal UAV-Borne Images. Remote Sens. 2018, 10, 805.
35. Moukomla, S.; Srestasathiern, P.; Siripon, S.; Wasuhiranyrith, R.; Kooha, P.; Moukomla, S. Estimating above ground biomass for eucalyptus plantation using data from unmanned aerial vehicle imagery. Proc. SPIE - Int. Soc. Opt. Eng. 2018, 10783, 1–13.
36. Paul, K.I.; Radtke, P.J.; Roxburgh, S.H.; Larmour, J.; Waterworth, R.; Butler, D.; Brooksbank, K.; Ximenes, F. Validation of allometric biomass models: How to have confidence in the application of existing models. For. Ecol. Manag. 2018, 412, 70–79.
37. Roxburgh, S.H.; Paul, K.I.; Clifford, D.; England, J.R.; Raison, R. Guidelines for constructing allometric models for the prediction of woody biomass: How many individuals to harvest? Ecosphere 2015, 6, 1–27.
38. Seidel, D.; Fleck, S.; Leuschner, C. Review of ground-based methods to measure the distribution of biomass in forest canopies. Ann. For. Sci. 2011, 68, 225–244.
39. Dusseux, P.; Hubert-Moy, L.; Corpetti, T.; Vertès, F. Evaluation of SPOT imagery for the estimation of grassland biomass. Int. J. Appl. Earth Obs. Geoinf. 2015, 38, 72–77.
40. Eitel, J.U.H.; Magney, T.S.; Vierling, L.A.; Brown, T.T.; Huggins, D.R. LiDAR based biomass and crop nitrogen estimates for rapid, non-destructive assessment of wheat nitrogen status. Field Crop. Res. 2014, 159, 21–32.
41. Hansen, P.M.; Schjoerring, J.K. Reflectance measurement of canopy biomass and nitrogen status in wheat crops using normalized difference vegetation indices and partial least squares regression. Remote Sens. Environ. 2003, 86, 542–553.
42. Næsset, E.; Gobakken, T. Estimation of above- and below-ground biomass across regions of the boreal forest zone using airborne laser. Remote Sens. Environ. 2008, 112, 3079–3090.
43. Doughty, C.L.; Cavanaugh, K.C. Mapping Coastal Wetland Biomass from High Resolution Unmanned Aerial Vehicle (UAV) Imagery. Remote Sens. 2019, 11, 540.
44. Burnham, K.P.; Anderson, D.R. Multimodel inference: Understanding AIC and BIC in model selection. Sociol. Methods Res. 2004, 33, 261–304.
45. Shahbazi, M.; Théau, J.; Ménard, P. Recent applications of unmanned aerial imagery in natural resource management. GIScience Remote Sens. 2014, 51, 339–365.
46. Anderson, K.; Gaston, K.J. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146.
47. Jayathunga, S.; Owari, T.; Tsuyuki, S. The use of fixed–wing UAV photogrammetry with LiDAR DTM to estimate merchantable volume and carbon stock in living biomass over a mixed conifer–broadleaf forest. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 767–777.
48. Zahawi, R.A.; Dandois, J.P.; Holl, K.D.; Nadwodny, D.; Reid, J.L.; Ellis, E.C. Using lightweight unmanned aerial vehicles to monitor tropical forest recovery. Biol. Conserv. 2015, 186, 287–295.
49. Ota, T.; Ahmed, O.S.; Thu, S.; Cin, T.; Mizoue, N.; Yoshida, S. Estimating selective logging impacts on aboveground biomass in tropical forests using digital aerial photography obtained before and after a logging event from an unmanned aerial vehicle. For. Ecol. Manag. 2019, 433, 162–169.
50. Guerra-Hernández, J.; González-Ferreiro, E.; Monleón, V.J.; Faias, S.; Tomé, M.; Díaz-Varela, R. Use of Multi-Temporal UAV-Derived Imagery for Estimating Individual Tree Growth in Pinus pinea Stands. Forests 2017, 8, 300.
51. Salamí, E.; Barrado, C.; Pastor, E. UAV flight experiments applied to the remote sensing of vegetated areas. Remote Sens. 2014, 6, 11051–11081.
52. Laliberte, A.S.; Goforth, M.A.; Steele, C.M.; Rango, A. Multispectral remote sensing from unmanned aircraft: Image processing workflows and applications for rangeland environments. Remote Sens. 2011, 3, 2529–2551.
53. Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-based multispectral remote sensing for precision agriculture: A comparison between different cameras. ISPRS J. Photogramm. Remote Sens. 2018, 146, 124–136.
54. Maes, W.H.; Steppe, K. Perspectives for Remote Sensing with Unmanned Aerial Vehicles in Precision Agriculture. Trends Plant Sci. 2019, 24, 152–164.
55. Dandois, J.P.; Olano, M.; Ellis, E.C. Optimal altitude, overlap, and weather conditions for computer vision UAV estimates of forest structure. Remote Sens. 2015, 7, 13895–13920.
56. Jensen, J.L.R.; Mathews, A.J. Assessment of Image-Based Point Cloud Products to Generate a Bare Earth Surface and Estimate Canopy Heights in a Woodland Ecosystem. Remote Sens. 2016, 8, 50.
57. Domingo, D.; Ørka, H.O.; Næsset, E.; Kachamba, D.; Gobakken, T. Effects of UAV Image Resolution, Camera Type, and Image Overlap on Accuracy of Biomass Predictions in a Tropical Woodland. Remote Sens. 2019, 11, 948.
58. Ni, W.; Dong, J.; Sun, G.; Zhang, Z.; Pang, Y.; Tian, X.; Li, Z.; Chen, E. Synthesis of Leaf-on and Leaf-off Unmanned Aerial Vehicle (UAV) Stereo Imagery for the Inventory of Aboveground Biomass of Deciduous Forests. Remote Sens. 2019, 11, 889.
59. Renaud, O.; Victoria-Feser, M.P. A robust coefficient of determination for regression. J. Stat. Plan. Inference 2010, 140, 1852–1862.
60. Hunt, E.R.; Cavigelli, M.; Daughtry, C.S.T.; McMurtrey, J.E.; Walthall, C.L. Evaluation of digital photography from model aircraft for remote sensing of crop biomass and nitrogen status. Precis. Agric. 2005, 6, 359–378.
61. Honkavaara, E.; Saari, H.; Kaivosoja, J.; Pölönen, I.; Hakala, T.; Litkey, P.; Mäkynen, J.; Pesonen, L. Processing and Assessment of Spectrometric, Stereoscopic Imagery Collected Using a Lightweight UAV Spectral Camera for Precision Agriculture. Remote Sens. 2013, 5, 5006–5039.
62. Wang, Y.; Zhang, K.; Tang, C.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; Liu, X. Estimation of rice growth parameters based on linear mixed-effect model using multispectral images from fixed-wing unmanned aerial vehicles. Remote Sens. 2019, 11, 1371.
63. Wijesingha, J.; Moeckel, T.; Hensgen, F.; Wachendorf, M. Evaluation of 3D point cloud-based models for the prediction of grassland biomass. Int. J. Appl. Earth Obs. Geoinf. 2019, 78, 352–359.
64. Rueda-Ayala, V.P.; Peña, J.M.; Höglind, M.; Bengochea-Guevara, J.M.; Andújar, D. Comparing UAV-based technologies and RGB-D reconstruction methods for plant height and biomass monitoring on grass ley. Sensors 2019, 19, 535.
65. Zhang, H.; Sun, Y.; Chang, L.; Qin, Y.; Chen, J.; Qin, Y.; Du, J.; Yi, S.; Wan, Y. Estimation of Grassland Canopy Height and Aboveground Biomass at the Quadrat Scale Using Unmanned Aerial Vehicle. Remote Sens. 2018, 10, 851.
66. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.
67. Peña, J.M.; De Castro, A.I.; Torres-Sánchez, J.; Andújar, D.; San Martin, C.; Dorado, J.; Fernández-Quintanilla, C.; López-Granados, F. Estimating tree height and biomass of a poplar plantation with image-based UAV technology. Agric. Food 2018, 3, 313–326.
68. Possoch, M.; Bieker, S.; Hoffmeister, D.; Bolten, A.A.; Schellberg, J.; Bareth, G. Multi-Temporal crop surface models combined with the RGB vegetation index from UAV-based images for forage monitoring in grassland. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. - ISPRS Arch. 2016, XLI-B1, 991–998.
69. Borra-Serrano, I.; De Swaef, T.; Muylle, H.; Nuyttens, D.; Vangeyte, J.; Mertens, K.; Saeys, W.; Somers, B.; Roldán-Ruiz, I.; Lootens, P. Canopy height measurements and non-destructive biomass estimation of Lolium perenne swards using UAV imagery. Grass Forage Sci. 2019, 356–369.
70. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.
71. Mutanga, O.; Skidmore, A.K. Narrow band vegetation indices overcome the saturation problem in biomass estimation. Int. J. Remote Sens. 2004, 25, 3999–4014.
72. Rasmussen, J.; Ntakos, G.; Nielsen, J.; Svensgaard, J.; Poulsen, R.N.; Christensen, S. Are vegetation indices derived from consumer-grade cameras mounted on UAVs sufficiently reliable for assessing experimental plots? Eur. J. Agron. 2016, 74, 75–92.
73. Haralick, R.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.
74. Willkomm, M.; Bolten, A.; Bareth, G. Non-destructive monitoring of rice by hyperspectral in-field spectrometry and UAV-based remote sensing: Case study of field-grown rice in North Rhine-Westphalia, Germany. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. - ISPRS Arch. 2016, XLI-B1, 1071–1077.
75. Acorsi, M.G.; das Abati Miranda, F.D.; Martello, M.; Smaniotto, D.A.; Sartor, L.R. Estimating biomass of black oat using UAV-based RGB imaging. Agronomy 2019, 9, 344.
76. Ni, J.; Yao, L.; Zhang, J.; Cao, W.; Zhu, Y.; Tai, X. Development of an unmanned aerial vehicle-borne crop-growth monitoring system. Sensors 2017, 17, 502.
77. Wang, C.; Price, K.P.; Van Der Merwe, D.; An, N.; Wang, H. Modeling Above-Ground Biomass in Tallgrass Prairie Using Ultra-High Spatial Resolution sUAS Imagery. Photogramm. Eng. Remote Sens. 2014, 80, 1151–1159.
78. Wang, F.; Wang, F.; Zhang, Y.; Hu, J.; Huang, J. Rice Yield Estimation Using Parcel-Level Relative Spectral Variables from UAV-Based Hyperspectral Imagery. Front. Plant Sci. 2019, 10, 453.
79. Mulla, D.J. Twenty five years of remote sensing in precision agriculture: Key advances and remaining knowledge gaps. Biosyst. Eng. 2013, 114, 358–371.

- Kelcey, J.; Lucieer, A. Sensor correction of a 6-band multispectral imaging sensor for UAV remote sensing. Remote Sens. 2012, 4, 1462–1493. [Google Scholar] [CrossRef] [Green Version]

- McGwire, K.C.; Weltz, M.A.; Finzel, J.A.; Morris, C.E.; Fenstermaker, L.F.; McGraw, D.S. Multiscale assessment of green leaf cover in a semi-arid rangeland with a small unmanned aerial vehicle. Int. J. Remote Sens. 2013, 34, 1615–1632. [Google Scholar] [CrossRef]

- Whitehead, K.; Hugenholtz, C.H. Remote sensing of the environment with small unmanned aircraft systems (UASs), part 1: A review of progress and challenges. J. Unmanned Veh. Syst. 2014, 02, 69–85. [Google Scholar] [CrossRef]

- Lebourgeois, V.; Bégué, A.; Labbé, S.; Mallavan, B.; Prévot, L.; Roux, B. Can commercial digital cameras be used as multispectral sensors? A crop monitoring test. Sensors 2008, 8, 7300–7322. [Google Scholar] [CrossRef] [PubMed]

- Calderón, R.; Navas-Cortés, J.A.; Lucena, C.; Zarco-Tejada, P.J. High-resolution airborne hyperspectral and thermal imagery for early detection of Verticillium wilt of olive using fluorescence, temperature and narrow-band spectral indices. Remote Sens. Environ. 2013, 139, 231–245. [Google Scholar] [CrossRef]

- James, M.R.; Robson, S.; Smith, M.W. 3-D uncertainty-based topographic change detection with structure-from-motion photogrammetry: Precision maps for ground control and directly georeferenced surveys. Earth Surf. Process. Landf. 2017, 42, 1769–1788. [Google Scholar] [CrossRef]

- James, M.R.; Robson, S. Mitigating systematic error in topographic models derived from UAV and ground-based image networks. Earth Surf. Process. Landf. 2014, 39, 1413–1420. [Google Scholar] [CrossRef] [Green Version]

- Lisein, J.; Pierrot-Deseilligny, M.; Bonnet, S.; Lejeune, P. A photogrammetric workflow for the creation of a forest canopy height model from small unmanned aerial system imagery. Forests 2013, 4, 922–944. [Google Scholar] [CrossRef] [Green Version]

- Fonstad, M.A.; Dietrich, J.T.; Courville, B.C.; Jensen, J.L.; Carbonneau, P.E. Topographic structure from motion: A new development in photogrammetric measurement. Earth Surf. Process. Landf. 2013, 38, 421–430. [Google Scholar] [CrossRef] [Green Version]

- Hardin, P.; Jensen, R. Small-scale unmanned aerial vehicles in environmental remote sensing: Challenges and opportunities. GIScience Remote Sens. 2011, 48, 99–111. [Google Scholar] [CrossRef]

- Rahman, M.M.; McDermid, G.J.; Mckeeman, T.; Lovitt, J. A workflow to minimize shadows in UAV-based orthomosaics. J. Unmanned Veh. Syst. 2019, 7, 107–117. [Google Scholar] [CrossRef]

- Goodbody, T.R.H.; Coops, N.C.; Hermosilla, T.; Tompalski, P.; Pelletier, G. Vegetation Phenology Driving Error Variation in Digital Aerial Photogrammetrically Derived Terrain Models. Remote Sens. 2018, 10, 1554. [Google Scholar] [CrossRef] [Green Version]

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87. [Google Scholar] [CrossRef] [Green Version]

- Assmann, J.J.; Kerby, J.T.; Cunliffe, A.M.; Myers-Smith, I.H. Vegetation monitoring using multispectral sensors—Best practices and lessons learned from high latitudes. J. Unmanned Veh. Syst. 2019, 7, 54–75. [Google Scholar] [CrossRef] [Green Version]

- Von Bueren, S.K.; Burkart, A.; Hueni, A.; Rascher, U.; Tuohy, M.P.; Yule, I.J. Deploying four optical UAV-based sensors over grassland: Challenges and limitations. Biogeosciences 2015, 12, 163–175. [Google Scholar] [CrossRef] [Green Version]

- Turner, D.; Lucieer, A.; Watson, C. An automated technique for generating georectified mosaics from ultra-high resolution Unmanned Aerial Vehicle (UAV) imagery, based on Structure from Motion (SFM) point clouds. Remote Sens. 2012, 4, 1392–1410. [Google Scholar] [CrossRef] [Green Version]

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Dandois, J.P.; Ellis, E.C. High spatial resolution three-dimensional mapping of vegetation spectral dynamics using computer vision. Remote Sens. Environ. 2013, 136, 259–276. [Google Scholar] [CrossRef] [Green Version]

- Ota, T.; Ogawa, M.; Mizoue, N.; Fukumoto, K.; Yoshida, S. Forest Structure Estimation from a UAV-Based Photogrammetric Point Cloud in Managed Temperate Coniferous Forests. Forests 2017, 8, 343. [Google Scholar] [CrossRef]

- Fraser, R.H.; Olthof, I.; Lantz, T.C.; Schmitt, C. UAV photogrammetry for mapping vegetation in the low-Arctic. Arct. Sci. 2016, 2, 79–102. [Google Scholar] [CrossRef] [Green Version]

- Reykdal, Ó. Drying and Storing of Harvested Grain: A Review of Methods. 2018. Available online: https://www.matis.is/media/matis/utgafa/05-18-Drying-and-storage-of-grain.pdf (accessed on 14 September 2019).

- Ogle, S.M.; Breidt, F.J.; Paustian, K. Agricultural Management Impacts on Soil Organic Carbon Storage under Moist and Dry Climatic Conditions of Temperate and Tropical Regions. Biogeochemistry 2005, 72, 87–121. [Google Scholar] [CrossRef]

- Chen, D.; Huang, X.; Zhang, S.; Sun, X. Biomass modeling of larch (Larix spp.) plantations in China based on the mixed model, dummy variable model, and Bayesian hierarchical model. Forests 2017, 8, 268. [Google Scholar] [CrossRef] [Green Version]

| Type | Variable | Used by |
|---|---|---|
| Height | Mean height | [3,9,12,13,15,16,19,20,21,23,25,28,30,34,48,49,50,57,58,63,65,67,68,69,74,75,76] |
| | Maximum height | [1,3,4,13,28,30,34,48,57,63,65,69] |
| | Minimum height | [3,28,34,48,57,63,65,69] |
| | Median height | [12,21,27,48,63,65,69] |
| | Mode of height | [57] |
| | Count of height | [63] |
| | Standard deviation of height | [3,9,12,13,15,28,30,34,48,57,63,65,69] |
| | Coefficient of variation of height | [3,9,12,13,15,28,30,34,48,57,69] |
| | Variance of height | [57] |
| | Skewness of height | [30,34,57] |
| | Kurtosis of height | [30,34,57] |
| | Entropy of height | [13] |
| | Relief of height | [13,34] |
| | Height percentile(s) | [4,13,15,21,30,34,49,57,63,69] |
| Area & Density | Canopy point density | [28,30,57] |
| | Proportion of points > mean height relative to total number of points | [28,57] |
| | Proportion of points > mode height relative to total number of points | [57] |
| | Crown/vegetation area (canopy cover) | [3,21,50] |
| | Canopy relief ratio | [16,57] |
| | Crown isle: proportion of site where canopy has height greater than 2/3 of the 99th percentile of all heights | [48] |
| | Canopy openness: proportion of site area <2 m in height | [48] |
| | Canopy roughness: average of SD of each pixel from mean CHM | [48] |
| Volume | Canopy/crown volume | [3,8,16,22,25,64] |
| | VI-weighted canopy volume | [3] |
| Change-based | Amount of change in DSM before and after plant removal | [49] |
| Other | Height * Spectral (various indices) | [6,66,67] |
| | GrassI (RGBVI + CHM) | [68] |
| | TIN-based structure, area, slope | [13] |
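
As a rough illustration of how several of the height-based metrics in the table above can be derived from a set of canopy height samples (for example CHM pixel values or point-cloud heights within one plot), the following is a minimal sketch rather than any reviewed study's method. The function name `height_metrics`, the nearest-rank percentile rule, and the canopy relief ratio definition (mean − min)/(max − min) are assumptions made for this example; individual studies may define these quantities differently.

```python
import math
import statistics


def height_metrics(heights, percentile=90):
    """Summarise canopy heights (same unit in, same unit out).

    Hypothetical helper: metric names follow the table above, but the
    exact definitions (sample SD, nearest-rank percentile, relief ratio)
    are assumptions for illustration.
    """
    if len(heights) < 2:
        raise ValueError("need at least two height samples")
    ordered = sorted(heights)
    h_min, h_max = ordered[0], ordered[-1]
    mean_h = statistics.fmean(ordered)
    sd_h = statistics.stdev(ordered)  # sample standard deviation
    # Nearest-rank percentile: one of several common conventions.
    rank = max(1, math.ceil(percentile / 100 * len(ordered)))
    nan = float("nan")
    return {
        "mean": mean_h,
        "max": h_max,
        "min": h_min,
        "median": statistics.median(ordered),
        "sd": sd_h,
        "cv": sd_h / mean_h if mean_h != 0 else nan,
        f"p{percentile}": ordered[rank - 1],
        # Assumed definition: (mean - min) / (max - min).
        "canopy_relief_ratio": (
            (mean_h - h_min) / (h_max - h_min) if h_max > h_min else nan
        ),
        # Proportion of samples above the mean height.
        "prop_above_mean": sum(h > mean_h for h in ordered) / len(ordered),
    }


if __name__ == "__main__":
    plot_heights = [0.0, 1.0, 2.0, 3.0, 4.0]  # made-up values
    for name, value in height_metrics(plot_heights).items():
        print(f"{name}: {value:.3f}")
```

Any of the returned values could then serve as a candidate predictor in an AGB regression; which ones are informative depends on the vegetation type and data quality.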

| Index | Formula | Used by |
|---|---|---|
| NDSI | (λ1 − λ2)/(λ1 + λ2) where 1 and 2 are any bands | [5] |
| MNDSI | (λ1 − λ2)/(λ1 − λ2) where 1, 2 and 3 are any bands | [5] |
| SR | (λ1/λ2) where 1 and 2 are any bands | [5,25] |
| NDVI | (NIR − R)/(NIR + R) | [6,11,13,16,20,26,43,61,67,76,77,78] |
| GNDVI | (NIR − G)/(NIR + G) | [13,16,26,43,62] |
| NDRE | (λ800 − λ720)/(λ800 + λ720) | [13,16,43,62] |
| SR | λ800/λ700 | [13] |
| NPCI | (λ670 − λ460)/(λ670 + λ460) | [20] |
| TVI | 0.5 * [120 * (λ800 − λ550) − 200 * (λ670 − λ550)] | [6,11,13] |
| EVI | 2.5 * (λ800 − λ670)/(λ800 + 6 * λ670 − 7.5 * λ490 + 1) | [6,13,43] |
| EVI2 | 2.5 * (λ800 − λ680)/(λ800 + 2.4 * λ680 + 1) | [6,20] |
| GI | λ550/λ680 | [6] |
| RDVI | (λ798 − λ670)/sqrt(λ798 + λ670) | [13] |
| CI red edge | (λ780/λ710) − 1 OR (NIR/RE) − 1 | [13,16,26,43,62] |
| CI green | (λ780/λ550) − 1 OR (NIR/green) − 1 | [13,16,43] |
| DATT | (λ800 − λ720)/(λ800 − λ680) | [26] |
| LCI | (λ850 − λ710)/(λ850 + λ680) | [13,20] |
| MCARI | [(λ700 − λ670) − 0.2(λ700 − λ550)] * (λ700/λ670) | [13,20] |
| MCARI1 | 1.2(2.5(λ790 − λ660) − 1.3(λ790 − λ560)) | [62] |
| SPVI | 0.4 * (3.7(λ800 − λ670) − 1.2 * \|λ530 − λ670\|) | [20] |
| OSAVI | 1.16(λ800 − λ670)/(λ800 + λ670 + 0.16) | [6,20,26,62] |
| REIP | 700 + 40 * ((((λ667 + λ782)/2) − λ702)/(λ738 − λ702)) | [21,24] |
| MTVI | 1.2[1.2(λ800 − λ550) − 2.5(λ670 − λ550)] | [6,62] |
| MTVI2 | 1.5[1.2(λ800 − λ550) − 2.5(λ670 − λ550)]/sqrt[(2 * λ800 + 1)^2 − (6 * λ800 − 5 * sqrt(λ670)) − 0.5] | [6,26,62] |
| RVI | λ800/λ670 | [6,16] |
| DVI1-3 | λ800 − λ680; λ750 − λ680; λ550 − λ680 | [6] |
| WDRVI | (0.1 * λ800 − λ680)/(0.1 * λ800 + λ680) | [6,16] |
| CVI | NIR * (R/G^2) | [16] |
| BGI | λ460/λ560 | [20] |
| DATT | (λ790 − λ735)/(λ790 − λ660) | [62] |
| MSAVI | (2 * λ800 + 1 − sqrt((2 * λ800 + 1)^2 − 8 * (λ800 − λ670)))/2 | [6,62] |
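
To make a few of the formulas in the table above concrete, the sketch below evaluates NDVI, GNDVI, the red-edge chlorophyll index, OSAVI and EVI2 for a single pixel. It assumes band values are surface reflectances between 0 and 1; the function names and the example reflectances are hypothetical and are not taken from any of the reviewed studies.

```python
def normalized_difference(band_a, band_b):
    """Generic (a - b) / (a + b); returns NaN when the denominator is zero."""
    denom = band_a + band_b
    return (band_a - band_b) / denom if denom != 0 else float("nan")


def ndvi(nir, red):
    """NDVI = (NIR - R) / (NIR + R)."""
    return normalized_difference(nir, red)


def gndvi(nir, green):
    """GNDVI = (NIR - G) / (NIR + G)."""
    return normalized_difference(nir, green)


def ci_red_edge(nir, red_edge):
    """CI red edge = (NIR / RE) - 1."""
    return nir / red_edge - 1 if red_edge != 0 else float("nan")


def osavi(nir, red):
    """OSAVI = 1.16 (NIR - R) / (NIR + R + 0.16)."""
    return 1.16 * (nir - red) / (nir + red + 0.16)


def evi2(nir, red):
    """EVI2 = 2.5 (NIR - R) / (NIR + 2.4 R + 1)."""
    return 2.5 * (nir - red) / (nir + 2.4 * red + 1)


if __name__ == "__main__":
    # Made-up reflectances for one vegetated pixel.
    nir, red_edge, red, green = 0.50, 0.25, 0.10, 0.20
    print("NDVI       ", round(ndvi(nir, red), 4))
    print("GNDVI      ", round(gndvi(nir, green), 4))
    print("CI red edge", round(ci_red_edge(nir, red_edge), 4))
    print("OSAVI      ", round(osavi(nir, red), 4))
    print("EVI2       ", round(evi2(nir, red), 4))
```

The same functions apply unchanged to per-plot mean reflectances; applying them to whole rasters would normally use an array library instead of scalar arithmetic.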

| Index | Formula | Used by |
|---|---|---|
| GRVI/NGRDI | (G − R)/(G + R) | [2,3,4,5,9,11,12,16,20,21,26,27,33,60] |
| ExG | 2 * G − R − B | [3,4,9,12,15,16,25,69] |
| GLA/GLI/VDVI | (2 * G − R − B)/(2 * G + R + B) | [3,5,9,12,15,16] |
| MGRVI | (G^2 − R^2)/(G^2 + R^2) | [5,12] |
| ExB | (1.4 * B − G)/(G + R + B) | [3,12] |
| ExR | 1.4 * R − B or 1.4 * (R − G)/(G + R + B) | [12,15] |
| ExGR | ExG index − ExR index | [3,4,9,12,15,69] |
| Red Ratio | R/(R + G + B) | [2,3,20] |
| Blue Ratio | B/(R + G + B) | [2,3,20] |
| Green Ratio | G/(R + G + B) | [2,3,20] |
| VARI | (G − R)/(G + R − B) | [2,3,5,12,16,20,26,27] |
| ExR | 1.4 * (R − G) | [2,3,20] |
| NRBI | (R − B)/(R + B) | [27] |
| NGBI | (G − B)/(G + B) | [27] |
| VEG | G/(R^a * B^(1 − a)) where a = 0.667 | [3,4,5,9,15] |
| WI | (G − B)/(R − G) | [3,15] |
| CIVE | 0.441 * R − 0.811 * G + 0.385 * B + 18.78745 | [3,4,9,15] |
| COM | 0.25 * ExG + 0.3 * ExGR + 0.33 * CIVE + 0.12 * VEG | [3,4,9,15] |
| TGI | G − (0.39 * R) − (0.61 * B) | [27] |
| RGBVI | (G^2 − B * R)/(G^2 + B * R) | [12,68] |
| IKAW | (R − B)/(R + B) | [3,12] |
| GRRI | G/R | [3,5] |
| GBRI | G/B | [3] |
| RBRI | R/B | [3] |
| BRRI | B/R | [20] |
| BGRI | B/G | [20] |
| RGRI | R/G | [20] |
| INT | (R + G + B)/3 | [3] |
| NDI | (red ratio index − green ratio index)/(red ratio index + green ratio index + 0.01) | [3] |
| MVARI | (G − B)/(B + R − B) | [5] |
| IPCA | 0.994 * \|R − B\| + 0.961 * \|G − B\| + 0.914 * \|G − R\| | [3] |
| Δ Reflectance | Change in reflectance measured at two time periods | [49] |
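
A few of the RGB indices in the table above can be computed for a single pixel as in the sketch below. It assumes R, G and B are raw digital numbers or reflectances on a common scale and reads the squared terms in RGBVI as G squared; some studies instead normalise each band by (R + G + B) first, which changes ExG but not the ratio-based indices. The function names and pixel values are illustrative only.

```python
def _safe_ratio(numerator, denominator):
    """Divide, returning NaN instead of raising on a zero denominator."""
    return numerator / denominator if denominator != 0 else float("nan")


def exg(r, g, b):
    """ExG = 2G - R - B (computed here on the supplied band values)."""
    return 2 * g - r - b


def grvi(r, g):
    """GRVI/NGRDI = (G - R) / (G + R)."""
    return _safe_ratio(g - r, g + r)


def gli(r, g, b):
    """GLA/GLI/VDVI = (2G - R - B) / (2G + R + B)."""
    return _safe_ratio(2 * g - r - b, 2 * g + r + b)


def vari(r, g, b):
    """VARI = (G - R) / (G + R - B)."""
    return _safe_ratio(g - r, g + r - b)


def rgbvi(r, g, b):
    """RGBVI = (G^2 - B*R) / (G^2 + B*R)."""
    return _safe_ratio(g * g - b * r, g * g + b * r)


if __name__ == "__main__":
    r, g, b = 60, 120, 40  # made-up 8-bit digital numbers
    print("ExG  ", exg(r, g, b))
    print("GRVI ", round(grvi(r, g), 4))
    print("GLI  ", round(gli(r, g, b), 4))
    print("VARI ", round(vari(r, g, b), 4))
    print("RGBVI", round(rgbvi(r, g, b), 4))
```

Because uncalibrated RGB digital numbers vary with illumination and camera settings, ratio-type indices such as GRVI or RGBVI are generally less sensitive to overall brightness than difference-type indices such as ExG.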

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Poley, L.G.; McDermid, G.J. A Systematic Review of the Factors Influencing the Estimation of Vegetation Aboveground Biomass Using Unmanned Aerial Systems. Remote Sens. 2020, 12, 1052. https://doi.org/10.3390/rs12071052
