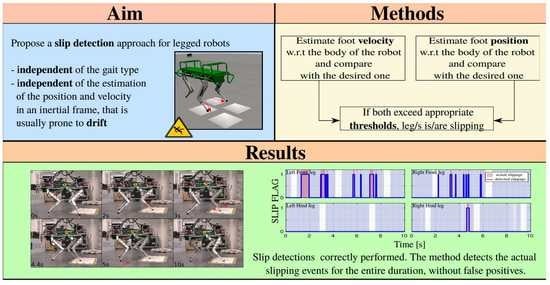

On Slip Detection for Quadruped Robots

Abstract

1. Introduction

1.1. Related Work on Slip Detection and Recovery

1.2. Contributions

1.3. Outline

2. Modelling and Sensing

2.1. Robot Overview

2.2. Sensors Overview

2.3. Dynamic Model

2.4. Locomotion Framework

3. Slip Detection Method

3.1. Baseline Approach

3.1.1. One Leg Slip Detection

3.1.2. Multiple Leg Slip Detection

3.1.3. Drawbacks of the Baseline Method

3.2. A Novel Approach for Slip Detection

| Algorithm 1: detectSlippage(,,,) |
| --- |
| 1: ←; ▹ and are the actual and desired foot velocity in |
| 2: ; ▹ and are the actual and desired foot position in |
| 3: for each stance leg i do |
| 4: ←; ▹ and are the thresholds for and |
| 5: end |
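The structure of Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation: the symbols of the algorithm are not legible in this copy, so the function signature, the use of Euclidean norms for the velocity and position errors, the rule that a slip is flagged only when both errors exceed their thresholds, and the numeric threshold values are all assumptions made for illustration. The reference frame of the foot quantities is likewise left unspecified.

```python
import math


def detect_slippage(v_actual, v_desired, p_actual, p_desired,
                    stance, eps_v, eps_p):
    """Return one boolean slip flag per leg.

    v_*/p_* are per-leg 3D foot velocities/positions (lists of 3 floats),
    stance is a per-leg boolean, eps_v/eps_p are scalar thresholds.
    ASSUMPTION: errors are Euclidean norms and both must exceed their
    threshold for a slip to be flagged; the source does not confirm this.
    """
    n_legs = len(stance)

    # Steps 1-2: velocity and position errors between desired and actual.
    delta_v = [math.dist(v_desired[i], v_actual[i]) for i in range(n_legs)]
    delta_p = [math.dist(p_desired[i], p_actual[i]) for i in range(n_legs)]

    # Steps 3-5: only legs in stance are checked; swing legs stay False.
    slip = [False] * n_legs
    for i in range(n_legs):
        if stance[i]:
            slip[i] = delta_v[i] > eps_v and delta_p[i] > eps_p
    return slip


# Hypothetical example with legs ordered LF, RF, LH, RH.
legs = ["LF", "RF", "LH", "RH"]
zero = [0.0, 0.0, 0.0]
flags = detect_slippage(
    v_actual=[[0.5, 0, 0], [0.01, 0, 0], [0.5, 0, 0], [0.5, 0, 0]],
    v_desired=[zero, zero, zero, zero],
    p_actual=[[0.05, 0, 0], [0.0, 0, 0], [0.001, 0, 0], [0.05, 0, 0]],
    p_desired=[zero, zero, zero, zero],
    stance=[True, True, True, False],  # RH is in swing
    eps_v=0.1,    # illustrative threshold, not from the paper
    eps_p=0.01,   # illustrative threshold, not from the paper
)
print(dict(zip(legs, flags)))
```

In this example only LF is flagged: RF stays below the velocity threshold, LH exceeds the velocity threshold but not the position threshold, and RH is skipped because it is not a stance leg.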

4. Simulation Results

4.1. Crawling onto Patches of Ice

4.2. Trotting onto Patches of Ice

5. Experimental Results

6. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| DLS | Dynamic Legged Systems |
| IIT | Istituto Italiano di Tecnologia |
| ROS | Robot Operating System |
| HyQ | Hydraulically actuated Quadruped |
| PTAL | Proprioceptive Terrain-Aware Locomotion |
| GRF | Ground Reaction Force |
| IMU | Inertial Measurement Unit |
| UKF | Unscented Kalman Filter |
| DoF | Degree of Freedom |
| HAA | Hip Adduction-Abduction |
| HFE | Hip Flexion-Extension |
| KFE | Knee Flexion-Extension |
| RCF | Reactive Controller Framework |
| LF | Left-Front |
| RF | Right-Front |
| LH | Left-Hind |
| RH | Right-Hind |



© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nisticò, Y.; Fahmi, S.; Pallottino, L.; Semini, C.; Fink, G. On Slip Detection for Quadruped Robots. Sensors 2022, 22, 2967. https://doi.org/10.3390/s22082967
