Abstract

Constant decision-making underpins much of daily life, from simple perceptual decisions about navigation through to more complex decisions about important life events. At many scales, a fundamental task of the decision-maker is to balance competing needs for caution and urgency: fast decisions can be more efficient, but also more often wrong. We show how a single mathematical framework for decision-making explains the urgency/caution balance across decision-making at two very different scales. This explanation has been applied at the level of neuronal circuits (on a time scale of hundreds of milliseconds) through to the level of stable personality traits (time scale of years).


Life presents a constant stream of big and small decisions. Decision-making organisms can maximize their rewards by striking a careful balance between urgent decisions and cautious decisions. Compared with cautious decisions, urgent decisions are fast, allowing the decision-maker to act upon more decisions per unit time, and to avoid missing out on fleeting opportunities. However, fast decisions are also more often wrong, which can have negative consequences. Humans and other animals are sensitive to this tension, and dynamically adjust the balance between caution and urgency, even in simple laboratory decision-making tasks. In many cases, both humans and other animals can even identify the precise quantitative tradeoff between caution and urgency which uniquely optimizes their rewards (Balci et al., 2011; Evans & Brown, 2016; Simen, Cohen, & Holmes, 2006; Starns & Ratcliff, 2012). This balance between caution and urgency has been studied for decades in humans and many other animals, from rats to bees and even slime mould (Chittka, Dyer, Bock, & Dornhaus, 2003; Evans & Brown, 2016; Latty & Beekman, 2011; Rinberg, Koulakov, & Gelperin, 2006; Uchida & Mainen, 2003; Wickelgren, 1977).

For rapid decisions, such as those about simple perceptual categorization, the balance between caution and urgency is known as the speed-accuracy tradeoff, and is explained by mathematical accounts of decision-making based on “evidence accumulation”. These accounts have provided detailed explanations of behavioral and neurophysiological data related to decision-making for more than 50 years (see Fig. 1a). Evidence accumulation theories assume that decisions are made by gradually accumulating evidence from the environment in favor of each possible choice. The first choice to accumulate a threshold amount of evidence is selected. Through variations on this basic theme, accumulator models of decision-making have helped explain cognitive and neurophysiological aspects of decision-making (Brown & Heathcote, 2008; Ratcliff, 1978; Ratcliff & Rouder, 1998; Roitman & Shadlen, 2002; Van Zandt, Colonius, & Proctor, 2000), and have been used to understand dozens of important problems, from clinical disorders (Ho et al., 2014), to alcohol intoxication (van Ravenzwaaij, Dutilh, & Wagenmakers, 2012), and sleep deprivation (Ratcliff & Van Dongen, 2011).

Fig. 1. Mathematical models of decision-making explain the tradeoff between caution and urgency. Top row (a) illustrates the basic framework of accumulator models. Decisions are represented as a race between accumulators which gather evidence in favor of competing decision outcomes. The first accumulator to reach a threshold amount of evidence triggers the decision. Bottom row (b) shows the basic mechanism by which accumulator models explain the speed-accuracy tradeoff. Lower thresholds lead to decisions based on less evidence. These decisions are faster (because less time is required to reach threshold) but more often wrong (because there is less time to integrate out random variability).

A key success of accumulator-based theories of decision-making is their natural explanation of the balance between caution and urgency (see Fig. 1b). Accumulator theories can explain the “speed-accuracy tradeoff” via the amount of evidence required to trigger a decision, called the “threshold”. When a high threshold is set, a lot of evidence must be collected before a decision is made, leading to slow but careful decisions. Conversely, when a low threshold is set, decisions are made quickly, but are more often wrong because they are based on too little evidence. The threshold has been linked to the firing rates of cortical neurons in monkeys, and to activity in cortico-thalamic networks in humans (Forstmann et al., 2008; Heitz & Schall, 2012; Roitman & Shadlen, 2002). The speed-accuracy tradeoff is a flexible setting which can be strategically adjusted in less than a second (Heitz & Schall, 2012). Much is known about the neurophysiological substrates of this flexibility, and even how they change in elderly humans (Forstmann et al., 2008, 2011; Heitz & Schall, 2012).

Decision-making research has robustly found that participants, both human and monkey, are able to adapt their decision thresholds based on task instructions and requirements (Ratcliff, Smith, Brown, & McKoon, 2016). However, individual differences in these threshold values, and in the speed-accuracy tradeoff strategies that they represent, have seldom been explored. The limited research that has explored these differences has uncovered exciting new findings and greatly aided our understanding of the decision threshold. For example, Forstmann et al. (2010) extended previous findings of age-related differences in threshold setting (Ratcliff, Thapar, & McKoon, 2001) and their relation to white matter tracts within specific brain regions (Forstmann et al., 2011), finding that the strength of the tracts between the presupplementary motor area and the striatum partially explained individual differences in participants’ ability to successfully adjust their threshold setting when under speed emphasis.

We attempt to further explore individual differences in the speed-accuracy tradeoff by establishing a link between this decision-making component and the previously unrelated concept of “need for closure”. Need for closure (NFC) is a personality trait, defined by a desire for answers and conclusions as opposed to confusion and ambiguity (Kruglanski, 1989; Webster & Kruglanski, 1994a). People who have a high need for closure tend to “[have a] desire for predictability, preference for order and structure, discomfort with ambiguity, [as well as having high levels of] decisiveness, and close-mindedness” (Webster & Kruglanski, 1994b, p. 1049; though also see Barkley-Levenson and Fox (2016), who found mixed results regarding the relationship between the NFC sub-scale of decisiveness and different types of impulsiveness). People with a low need for closure tend to be the opposite: adaptable, open-minded, less focused on certainty, and content to spend longer periods of time before taking action (Kruglanski, 1989, 2004; Webster & Kruglanski, 1994a, 1994b). Need for closure has been related to judgments of social stimuli (e.g., stereotyping; Kruglanski, 2004), although more recent research has found that it also predicts negative evaluations of category-inconsistent non-social stimuli (Rubin, Paolini, & Crisp, 2011) and poorer mental health (Roets & Soetens, 2010).

Need for closure and decision thresholds are defined at very different time scales, with decision thresholds being adaptable within seconds (Forstmann et al., 2008; Heitz & Schall, 2012), while need for closure is a stable personality trait. Nevertheless, there appear to be some common theoretical underpinnings between the two constructs. Both concern a tendency towards hastiness (low decision threshold, and high need for closure) or tardiness (high decision threshold, and low need for closure). This leads us to investigate a hypothesized negative correlation between need for closure and decision thresholds.

In the following two experiments, we link the speed-accuracy tradeoff setting with the need for closure. Speed-accuracy tradeoffs were measured in rapid perceptual decision-making, where most decisions required much less than 1 s. By contrast, need for closure was measured by a questionnaire which probed participants about long-term personality traits (see Fig. 2). We further demonstrate that a unified mathematical theoretical framework accommodates both rapid decision-making speed-accuracy tradeoffs and the need for closure. This framework uses a generative statistical model to accommodate both quantities, via the threshold parameter of an evidence accumulator.

Fig. 2. Methods and analyses. Our experiment (top row) included a standard questionnaire to estimate individual subjects’ need for closure (a well-established personality trait), as well as a simple perceptual decision-making task in which subjects classified random-pixel squares as either lighter or darker than a criterion. During some parts of the decision-making task, instructions and feedback emphasized urgency (“speed-emphasis”); during other parts they emphasized caution (“accuracy-emphasis”). For each subject, this procedure resulted in a need for closure score, and a joint distribution over response latency and response choice separately for the speed- and accuracy-emphasis conditions. A parallel-data-streams analysis (middle row) used a hierarchical Bayesian framework to estimate the parameters of an accumulator model of decision-making for each subject. The model parameter corresponding to the speed-accuracy tradeoff (the threshold parameter) correlated with need for closure scores from the questionnaire, and the other model parameters did not. An integrated data analysis (bottom row) simultaneously accounted for threshold parameters and need for closure scores.

Experiment 1

Method

Participants

Forty-nine participants were recruited from an undergraduate psychology pool and from the general university population for the first experiment. Participants were reimbursed with an AUD$25 voucher redeemable at a large shopping chain. Data from two participants were rejected because of close-to-chance-level accuracy rates in the perceptual discrimination task.

Procedure

The need for closure scale was administered online, following the standard procedure (Kruglanski, 1989; Webster & Kruglanski, 1994a). There were 41 items in total, of which 11 were reverse-scored. We replaced the original Decisiveness subscale with updated decisiveness items as suggested by Roets and van Hiel (2007). Half of the participants completed the need for closure survey before the perceptual discrimination task, and half completed the tasks in the reverse order. Both tasks were completed within a one-hour session. Participants responded to each of the 41 items of the Need for Closure scale using a 7-point Likert scale: strongly disagree, disagree, partially disagree, neutral, partially agree, agree, strongly agree. Following the items of the NFC scale, participants indicated their gender. Participants were also given the option to enter their age and their ethnicity (Caucasian, Aboriginal, Torres Strait Islander, African, Asian, Other).
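
The scoring rule used in the analyses (averaging item ratings after reverse-scoring negatively worded items) can be sketched as follows. This is a minimal illustration assuming ratings are coded 1 (strongly disagree) to 7 (strongly agree), so that reverse-scoring maps a rating r to 8 − r. Which 11 items are reverse-scored is not listed in this section, so `reverse_items` is a placeholder the user must supply, and `nfc_score` is a hypothetical helper name.

```python
import numpy as np

def nfc_score(ratings, reverse_items, scale_max=7):
    """Mean need-for-closure score for one participant.

    ratings: one Likert rating per item, coded 1..scale_max.
    reverse_items: zero-based indices of reverse-scored items (hypothetical;
    the actual item numbers must come from the questionnaire key).
    """
    r = np.array(ratings, dtype=float)  # copy, so the caller's data is untouched
    if r.min() < 1 or r.max() > scale_max:
        raise ValueError("ratings must lie in 1..scale_max")
    idx = list(reverse_items)
    # On a 7-point scale, reverse-scoring maps 1 <-> 7, 2 <-> 6, 3 <-> 5, 4 -> 4.
    r[idx] = (scale_max + 1) - r[idx]
    return float(r.mean())
```

Averaging, rather than summing, keeps scores on the original 1 to 7 response scale, which is also the scale on which NFC scores later enter the joint model.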

For the perceptual discrimination task, we used stimuli made of 64 × 64 pixel black-and-white patches (see also Ratcliff & Rouder, 1998). The squares measured 2.5 cm × 2.5 cm on screen, and each pixel in the square was randomly assigned to either black or white. There were two categories of stimuli: pixels in “light” squares had a 53% probability of being white, and pixels in a “dark” square had a 47% chance of being white. Presentation of stimuli on a computer screen was made dynamic by repeatedly cycling through four squares, chosen at random and independently for each decision trial from a pre-generated set of 50 squares from each category. Cycling occurred at a rate of 67 Hz, and continued until a response was made.
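
The stimulus construction can be sketched with NumPy. This is a minimal illustration assuming that the four squares for a trial are drawn without replacement from the 50 pre-generated squares of the trial’s category; the function names are hypothetical, and on-screen size and 67 Hz display timing are left to the presentation software.

```python
import numpy as np

def make_square(category, rng, size=64):
    """One size x size black/white patch; True marks a white pixel.
    'light' squares: P(white) = 0.53; 'dark' squares: P(white) = 0.47."""
    p_white = {"light": 0.53, "dark": 0.47}[category]
    return rng.random((size, size)) < p_white

def trial_frames(pool, rng, n_frames):
    """Pick four squares at random from one category's pool (assumed: without
    replacement) and cycle through them, so frame k shows square k mod 4."""
    idx = rng.choice(len(pool), size=4, replace=False)
    chosen = [pool[i] for i in idx]
    return [chosen[k % 4] for k in range(n_frames)]

rng = np.random.default_rng(1)
# Pre-generated sets of 50 squares per category.
pools = {c: [make_square(c, rng) for _ in range(50)] for c in ("light", "dark")}
frames = trial_frames(pools["light"], rng, n_frames=67)  # about 1 s at 67 Hz
```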

In the perceptual decision-making task, participants made 416 decisions. On each trial, they had to choose whether the stimulus was predominantly light or dark, by pressing “D” or “L” on the keyboard. Participants had up to 5 s to respond. At the beginning of accuracy-emphasis blocks the following instructions were given: “This is an accuracy emphasis block. Be careful, try to minimise your errors, even if that means your responses are quite slow.” In speed-emphasis blocks the instructions were: “This is a speed block. Go quickly, don’t worry about making a few extra mistakes, we are interested in fast decisions.” During the accuracy-emphasis blocks, “Try to respond *accurately*” was displayed on the screen during all trials, while in the speed-emphasis blocks “Try to respond *quickly*” was displayed. In both emphasis conditions feedback was given for correct and incorrect responses: CORRECT (in green font) or INCORRECT (in red font), respectively. Additionally, if a response was made faster than 250 ms, TOO FAST (in red font) was displayed. Each participant completed four blocks (two of each emphasis) of 104 trials, which took approximately 25 min in total, including compulsory breaks of at least one minute between blocks.
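
The trial feedback rules and block layout described above can be summarized in a few lines. This is a sketch only: `feedback`, `BLOCKS`, and the alternating block order are hypothetical, since the text does not state the order of emphasis blocks, and responses slower than the 5 s deadline are not handled here.

```python
def feedback(response, stimulus, rt_s):
    """Feedback messages for one trial.

    response, stimulus: 'light' or 'dark'; rt_s: response time in seconds.
    TOO FAST is shown in addition to the accuracy feedback."""
    messages = ["CORRECT" if response == stimulus else "INCORRECT"]
    if rt_s < 0.250:
        messages.append("TOO FAST")
    return messages

# Two blocks of each emphasis, 104 trials per block.
# The alternating order is an assumption made for this sketch.
BLOCKS = ["accuracy", "speed", "accuracy", "speed"]
TRIALS_PER_BLOCK = 104
```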

Analyses

Our first analysis for Experiment 1 involved correlating estimated model parameters with need for closure scores. The logic of this analysis is illustrated in the middle row of Fig. 2. Need for closure scores were calculated for each individual by averaging the ratings provided for each questionnaire item after reverse-scoring negatively worded items. Speed-accuracy tradeoff settings were estimated from each participant’s decision-making data by fitting the linear ballistic accumulator model (LBA; Brown & Heathcote, 2008). The LBA model assumes that each decision is a race between two accumulators, corresponding in this case to the “light” and “dark” response choices. The accumulators gather evidence in favor of each choice until one of them reaches a response threshold. Evidence accumulation is a simple linear process, with a rate of increase that varies randomly from decision to decision according to a normal distribution. The amount of evidence in favor of each response choice before the decision begins (“start point”, representing a priori bias) is also random from decision to decision. The parameters of the model include: the threshold amount of evidence required to trigger a decision, b; the mean speed with which evidence accumulates, also known as the “drift rate”, v; the range of the uniform distribution of start points, A; the standard deviation of drift rates across trials, s; and a constant offset for the time taken by all processes other than the decision itself, including the time taken to perceive the stimulus and to execute the motor response, \(t_0\).

To keep the model as simple as possible, we assumed that the two accumulators racing to make the decision shared identical parameters except for their drift rate distributions. In the accumulator whose response matched the actual identity of the stimulus (e.g., the accumulator corresponding to the response choice “dark”, when the stimulus really did contain more black pixels than white pixels), the mean of the normal distribution of drift rates was freely estimated, and the standard deviation of this distribution was fixed at s = 1, to satisfy a mathematical scaling property of the model. For the other accumulator the mean was fixed at zero (i.e., v = 0) and the standard deviation was freely estimated. We also considered a model that allowed a response bias towards light or dark responses, by allowing the threshold value to differ between the two accumulators. This model did not appear to improve the fit to the data and led to qualitatively identical findings for the first analysis (a statistically reliable correlation of r = −.29 between decision threshold and need for closure score).

To model the difference between the decisions made under speed emphasis and those made under accuracy emphasis, we allowed different decision threshold parameters for the two conditions (\(b_{speed}\) and \(b_{accuracy}\)) and also a non-zero mean drift rate for the accumulator corresponding to the incorrect response under speed emphasis (i.e., allowing \(v_{incorrect,speed} > 0\); Rae, Heathcote, Donkin, Averell, & Brown, 2014).
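
The generative process can also be simulated directly, which is a quick way to check intuitions such as lower thresholds producing faster but less accurate responses. This is a minimal NumPy sketch of the parameterization just described (correct accumulator with drift SD fixed at 1, incorrect accumulator with its own mean and SD, shared b, A, and \(t_0\)); `simulate_lba` and all numeric values in the usage lines are hypothetical and are not estimates from the data.

```python
import numpy as np

def simulate_lba(n, b, A, t0, v_correct, v_incorrect, s_incorrect, rng):
    """Simulate n trials from the two-accumulator LBA described above.

    Accumulator 0 matches the stimulus: drift ~ N(v_correct, 1).
    Accumulator 1 is the mismatching response: drift ~ N(v_incorrect, s_incorrect).
    Returns (rt, correct). Trials on which both drifts are negative never
    terminate; this sketch returns them as rt = inf, correct = False.
    """
    start = rng.uniform(0.0, A, size=(n, 2))        # start points ~ U[0, A]
    drift = np.column_stack([
        rng.normal(v_correct, 1.0, size=n),
        rng.normal(v_incorrect, s_incorrect, size=n),
    ])
    with np.errstate(divide="ignore"):
        # Linear accumulation: time to threshold = distance / rate.
        finish = np.where(drift > 0, (b - start) / drift, np.inf)
    winner = finish.argmin(axis=1)
    decision_time = finish.min(axis=1)
    return t0 + decision_time, (winner == 0) & np.isfinite(decision_time)

rng = np.random.default_rng(0)
# Hypothetical values: higher threshold under accuracy emphasis; under speed
# emphasis, a lower threshold and a positive drift for the incorrect response.
rt_acc, ok_acc = simulate_lba(20000, 1.5, 0.5, 0.2, 2.0, 0.0, 1.0, rng)
rt_spd, ok_spd = simulate_lba(20000, 0.8, 0.5, 0.2, 2.0, 0.5, 1.0, rng)
```

With these illustrative values, the speed-emphasis settings yield shorter median response times and lower accuracy, the pattern the two threshold parameters are meant to capture.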

We estimated the parameters of the model from data using a hierarchical Bayesian approach. Each individual subject was allowed their own parameters, but these were constrained to follow normal distributions, truncated to positive values, across participants. Each group-level distribution was itself defined by two parameters, a mean and a standard deviation. Diffuse, relatively uninformative priors were placed on the parameters of the group-level distributions, with positive-only truncated normal distributions for the priors on mean parameters and gamma distributions for the priors on standard deviation parameters. The complete model for all participants, including the hierarchy and priors, can be specified as follows:

Above, the subscript i indexes participants, and \(N^{+}(\mu, \sigma)\) indicates a normal distribution with mean μ and standard deviation σ, truncated to strictly positive values (we use Greek letters for parameters that are shared across the entire group of participants).
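
Written out from the verbal description in this section, the hierarchy has the following general form. This is a sketch only: the hyperparameters \(m_\theta, w_\theta, a_\theta, c_\theta\) are placeholder symbols introduced here because their values are not given in this section, and the exact parameterization of the gamma priors may differ from the one used in the reported analysis.

\[
\begin{aligned}
\theta_i &\sim N^{+}(\mu_{\theta}, \sigma_{\theta}), \qquad \theta \in \{b_{speed},\, b_{accuracy},\, A,\, t_0,\, v,\, v_{incorrect,speed},\, s\},\\
\mu_{\theta} &\sim N^{+}(m_{\theta}, w_{\theta}),\\
\sigma_{\theta} &\sim \mathrm{Gamma}(a_{\theta}, c_{\theta}).
\end{aligned}
\]

With seven individual-level parameters and a group-level mean and standard deviation for each, this structure has 7 × 2 = 14 group-level parameters, matching the count reported for the standard model.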

Samples were drawn from the posterior distribution over parameters by Markov chain Monte Carlo, with proposals generated by differential evolution (Turner, Sederberg, Brown, & Steyvers, 2013). We ran 20 parallel chains, with 5,000 iterations for burn-in followed by 3,000 iterations after convergence. Initial samples for group-level parameters were drawn from the prior distributions. Initial samples for individual-level parameters were drawn from broad distributions covering more than double the width of parameter values observed in other fits of the LBA model to perceptual decision-making experiments.
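
The core proposal step of differential-evolution MCMC can be sketched as follows. This simplified illustration uses only the basic crossover move: each chain proposes a jump along the difference between two other randomly chosen chains, scaled by γ = 2.38/√(2d), plus a small uniform jitter, and accepts it with the usual Metropolis rule. The function name `de_mcmc`, the jitter size, and the toy Gaussian target are our own choices; the sampler of Turner et al. (2013) includes refinements for hierarchical models that are omitted here.

```python
import numpy as np

def de_mcmc(log_post, init, n_iter, rng, eps=1e-4):
    """Differential-evolution MCMC, crossover move only.

    log_post: function mapping a parameter vector to its log posterior.
    init: array (n_chains, d) of starting values.
    Returns samples with shape (n_iter, n_chains, d).
    """
    chains = np.array(init, dtype=float)
    n_chains, d = chains.shape
    gamma = 2.38 / np.sqrt(2 * d)
    lp = np.array([log_post(c) for c in chains])
    out = np.empty((n_iter, n_chains, d))
    for it in range(n_iter):
        for k in range(n_chains):
            # Pick two distinct chains other than k.
            m, n = rng.choice(n_chains - 1, size=2, replace=False)
            m, n = m + (m >= k), n + (n >= k)
            prop = chains[k] + gamma * (chains[m] - chains[n]) + rng.uniform(-eps, eps, size=d)
            lp_prop = log_post(prop)
            # Metropolis acceptance on the log scale.
            if np.log(rng.uniform()) < lp_prop - lp[k]:
                chains[k], lp[k] = prop, lp_prop
        out[it] = chains
    return out

rng = np.random.default_rng(0)
init = rng.uniform(-3, 3, size=(20, 2))   # 20 chains, as in the text
samples = de_mcmc(lambda x: -0.5 * np.sum(x ** 2), init, n_iter=1200, rng=rng)
post = samples[400:].reshape(-1, 2)       # discard burn-in
```

Because proposals are built from the current spread of the population of chains, the jump scale adapts automatically to the shape of the posterior, which is why differential evolution suits correlated LBA parameters.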

The posterior distributions over the parameters of this model were used to calculate point estimates of decision caution settings for every participant, with our point estimate being the mean of the distribution. As is standard, decision caution was operationalized as the average amount of evidence accumulation required to trigger a decision (\(b - \frac{A}{2}\); see also Forstmann et al., 2010, 2011). Decision caution was calculated this way for both accuracy-emphasis and speed-emphasis conditions.
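
Because start points are uniform on [0, A], their average is A/2, so \(b - \frac{A}{2}\) is the average distance an accumulator must travel to reach threshold. A minimal sketch of the point estimate from matched posterior draws; `caution_point_estimate` is a hypothetical name.

```python
import numpy as np

def caution_point_estimate(b_samples, A_samples):
    """Posterior-mean estimate of caution, b - A/2, for one participant and
    condition. b_samples and A_samples are matched posterior draws."""
    b = np.asarray(b_samples, dtype=float)
    A = np.asarray(A_samples, dtype=float)
    return float(np.mean(b - A / 2.0))
```

By linearity the posterior mean of b − A/2 equals mean(b) − mean(A)/2, so matching draws only matters if the full posterior of caution (for example, an interval) is also wanted.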

Our second analysis involved an integrated model which jointly addressed decision thresholds and need for closure scores. Although the correlation of decision threshold estimates and need for closure scores is helpful in understanding that these measures are related, such an analysis still leaves open the question of whether both sources of data can be accounted for under a single, joint process model, and whether this single model can adequately account for empirical data (de Hollander, Forstmann, & Brown, 2016). In addition, the correlation analysis relies on a point estimate of decision threshold, a practice that has been criticized in the past (Kruschke & Liddell, 2015; Morey, Hoekstra, Rouder, Lee, & Wagenmakers, 2016), whereas our joint model uses the full posterior distribution. Using a single-step, joint model approach to the analysis of related but separate data streams has several important advantages (for recent and in-depth discussions of these issues, see de Hollander et al., 2016; Forstmann et al., 2015). As a concrete example, consider a potential weakness of the more traditional analysis presented above. Imagine a participant for whom two different parameter settings led to nearly equivalent goodness of fit (this is quite plausible with finite sample sizes). If one of those parameter settings provided a marginally better fit than the other, but was also inconsistent with the participant’s need for closure score, then the other parameter setting would never be discovered. Such anomalies can occur in the traditional separate-streams analysis because the information contained in need for closure scores is not allowed to influence estimates of decision-making parameters. That is a sub-optimal approach, given that the hypothesis under investigation is that need for closure is related to decision-making parameters.

For our joint-model analysis, we took the relationship between decision threshold and need for closure to its logical extreme and removed all individual subjects’ threshold parameters from the model, replacing them instead by a single group-level threshold parameter, modulated for individuals by their measured need for closure scores. The logic of this analysis is illustrated in the bottom row of Fig. 2.

For each participant we estimated only five parameters (down from seven in the initial analysis): \(A/b\), \(t_0\), \(v\), \(v_{incorrect,speed}\), and \(s\). These had the same hierarchical and prior specification as in the initial analysis, with one difference: so that the decision threshold could be expressed more cleanly as a function of need for closure, A was estimated as a proportion of b (i.e., as \(A/b\)). The prior on μ for parameter \(A/b\) was a normal distribution truncated to the unit interval, with mean 0.5 and standard deviation 0.3. Importantly, there are no individual-subject parameters that can explain differences between participants in the speed-emphasis and accuracy-emphasis conditions. We estimated a single threshold parameter, separately for the speed and accuracy emphasis conditions, which was shared among the entire group of participants (\(y_{speed}\) and \(y_{accuracy}\)). Any variation from this group threshold in each condition was only allowed as a function of the participant’s need for closure score, modulated by an estimated parameter for the group of participants, again separately for the speed and accuracy emphasis conditions (\(k_{speed}\) and \(k_{accuracy}\), respectively). That is, the decision threshold for the \(i\)th participant was set at \(y_{speed} + \frac{k_{speed}}{NFC_i}\) for the speed condition, and \(y_{accuracy} + \frac{k_{accuracy}}{NFC_i}\) for the accuracy condition, where \(NFC_i\) was the \(i\)th participant’s need for closure score. The priors for the group threshold parameters, y, were identical for each emphasis condition, being \(N^{+}(4, 3)\). The priors for the NFC linking parameters, k, were also identical for each emphasis condition, being \(N^{+}(0, 3)\).
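
The mapping from NFC scores to thresholds can be written as a small function. This is a minimal sketch assuming that A is recovered in each condition as \((A/b) \times b\); the function name and the example values in the note below are hypothetical.

```python
import numpy as np

def joint_thresholds(nfc, y_speed, k_speed, y_accuracy, k_accuracy, A_over_b):
    """Per-participant thresholds in the NFC joint model.

    b_i = y + k / NFC_i in each emphasis condition; A_i = (A/b)_i * b_i
    (the per-condition recovery of A is an assumption of this sketch).
    nfc and A_over_b hold one value per participant."""
    nfc = np.asarray(nfc, dtype=float)
    A_over_b = np.asarray(A_over_b, dtype=float)
    b_speed = y_speed + k_speed / nfc
    b_accuracy = y_accuracy + k_accuracy / nfc
    return {
        "b_speed": b_speed,
        "b_accuracy": b_accuracy,
        "A_speed": A_over_b * b_speed,
        "A_accuracy": A_over_b * b_accuracy,
    }
```

With k > 0, a participant with NFC = 4 gets a lower threshold than one with NFC = 2 (for y = 1 and k = 2, thresholds of 1.5 and 2.0), so a positive linking parameter encodes the hypothesized negative relationship between need for closure and caution.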

This new model was dramatically more constrained than the standard model estimated in the initial analysis. The standard model used a total of 343 parameters to explain the entire data set (7 individual-level parameters for each participant, and 14 group-level parameters to constrain the distributions of the individual-level parameters across subjects). The constrained model in this subsequent analysis used 249 parameters (5 individual-level parameters per participant, 10 group-level parameters to constrain the distributions of the individual-level parameters, and an extra 4 fixed parameters that were shared across individuals).
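
The parameter counts follow from the 47 participants analyzed in Experiment 1 (49 recruited, two excluded). A quick arithmetic check:

```python
n_participants = 47  # 49 recruited minus 2 excluded for near-chance accuracy

# Standard model: 7 individual-level parameters per participant, plus a
# group-level mean and standard deviation for each of the 7.
free_model = 7 * n_participants + 2 * 7

# Joint model: 5 individual-level parameters, their 10 group-level parameters,
# and 4 shared parameters (y_speed, y_accuracy, k_speed, k_accuracy).
joint_model = 5 * n_participants + 2 * 5 + 4

print(free_model, joint_model)  # 343 249
```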

Results

In the first experiment, no participants yielded outlying or exceptional scores for need for closure (z-scored range: [−3.1,1.5]). Basic descriptive statistics for the decision-making and NFC data are presented in Table 1.

We first established that performance on the perceptual decision-making task covaried with need for closure. We calculated mean response time separately for data from speed-emphasis and accuracy-emphasis conditions, separately for each individual. Mean response times from the speed-emphasis condition showed a statistically reliable (at the α = .05 level) correlation with need for closure scores (r = −.29). This correlation was in the expected direction, with higher need for closure associated with faster decision-making. There was no reliable correlation between need for closure scores and mean RT from the accuracy-emphasis condition.
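
The reliability check for a correlation of this size can be reproduced with the usual t test for Pearson’s r, \(t = r\sqrt{n-2}/\sqrt{1-r^2}\) with n − 2 degrees of freedom. This is a minimal sketch in plain Python; the helper names are our own, and n = 47 assumes the 49 recruited participants minus the two excluded.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def r_to_t(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)
```

With n = 47, r = −.29 gives t(45) ≈ −2.03, just beyond the two-tailed .05 critical value of about 2.01, so the reported correlation is reliable but not by a wide margin.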

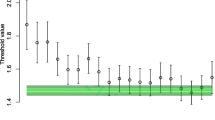

Mean response time in a decision-making task is the product of many cognitive elements. We used a decision-making model to establish whether the observed correlation between mean response time and need for closure was really due to changes in the balance between caution and urgency, as opposed to changes in other things such as processing speed, or perceptual and motor delays (middle row of Fig. 2). The joint distributions over response time and accuracy were used to estimate the parameters of the LBA model (Brown & Heathcote, 2008). The model parameters yielded, for each individual, estimates of: the threshold amount of evidence required to trigger a decision, separately for decisions made under speed pressure and made under accuracy pressure; the time taken by perceptual and motor delays; speed of evidence accumulation; and the variability in prior decision bias and processing speed. Our specification of this model fit the data well, as shown in Fig. 3a.

Fig. 3. Joint cumulative distributions over response times and choices for Experiment 1 (panels a and b) and Experiment 2 (c and d). In each panel, data are represented by circles, with red and green for incorrect and correct responses. The left-most circle is the 10th percentile, averaged over subjects, the next circle is the 20th percentile, and so on up to the 90th percentile. Model fits are shown by the same percentiles calculated from posterior predictive data, indicated by the solid lines. a The left and right pair of distributions are for speed-emphasis and accuracy-emphasis conditions, respectively, in Experiment 1. Posterior predictive data are from the model with free threshold parameters. b As for a, except that posterior predictive data are from the model with threshold parameters determined by NFC scores. c Experiment 2, with circles, squares, triangles, and diamonds used to show data from the 0, 5, 10, and 40% motion coherence conditions, respectively. Posterior predictive data were generated from the model with free threshold parameters. d As for c, except that posterior predictive data were generated from the model where threshold parameters were determined by NFC scores.

We calculated the correlation, across participants, of parameter estimates with need for closure scores, for all parameters. As hypothesized, the only parameters for which these correlations were statistically significant were the parameters that influence the balance between caution and urgency. The model-parameter-based measure of caution calculated from data from the speed-emphasis conditions correlated reliably with need for closure (r = −.32). The same caution measure calculated from data from the accuracy-emphasis condition did not correlate reliably with need for closure (r = −.05). Consistent with our hypothesis, correlations with need for closure were specific to just the threshold parameters: all correlations of other model parameters with need for closure were smaller, and not significantly different from zero: \(t_0\), r = .16; v, r = .11; \(v_{incorrect,speed}\), r = .2; and s, r = .24.

A joint model

To account for both latent variables within a single process model, we developed a mathematical decision-making model that linked personality-level information (need for closure scores) with the speed-accuracy tradeoff in perceptual decision-making. This link was incorporated in a generative model (bottom row of Fig. 2) such that need for closure scores replaced the subject-by-subject estimates of the threshold parameter. This eliminated some free parameters from the model, replacing them instead with questionnaire data. In particular, the model allowed no differences between participants in threshold setting, under either speed-emphasis or accuracy-emphasis conditions, other than through need for closure: in both conditions, subject-by-subject variation in threshold was only possible through the participant’s need for closure score, as a function of the linking parameter, k.

The fit of the model to all individual participants is shown in Fig. 3b. Despite the strong constraints placed on the model (no parameters were available to account for the differences between speed-emphasis and accuracy-emphasis conditions for individual subjects), the model still provides a reasonable account of the data. The integrated model’s use of need for closure scores to set threshold parameters naturally accounts for the increasing difference between data from speed-emphasis and accuracy-emphasis conditions as need for closure increases.

If need for closure was not contributing to the joint model, and therefore not linked to the decision threshold, the linking parameter k would be zero. Instead, under speed emphasis we found that the posterior distribution over \(k_{speed}\) was well above zero, with the 99% highest posterior density interval covering [1.742, 3.77]. In addition, a Savage-Dickey test (Wagenmakers, Lodewyckx, Kuriyal, & Grasman, 2010) yielded strong evidence against the hypothesis that \(k_{speed} = 0\) (\(BF_{10} > 2 \times 10^{6}\)), also indicating that the linking parameter was reliably greater than 0. As expected from the previous correlations, we found the opposite for the accuracy emphasis condition: the 99% highest posterior density interval extended close to 0, covering [0.002, 2.267], and a Savage-Dickey test yielded weak evidence in favor of the null hypothesis \(k_{accuracy} = 0\) (\(BF_{01} = 1.9\)). Even with these very strict assumptions, and no free parameters for decision thresholds for individual participants, the integrated decision-making model provided a good account of the data.
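
The Savage-Dickey density ratio compares the posterior and prior densities of k at the point k = 0: \(BF_{01} = p(k = 0 \mid \text{data}) / p(k = 0)\), and \(BF_{10}\) is its reciprocal. A minimal sketch, assuming posterior draws of k are available as an array and using the \(N^{+}(0, 3)\) prior stated for k. The posterior density at the boundary is estimated here with a reflected Gaussian kernel density estimate, which is our own choice and not necessarily the estimator used in the reported analysis; the function names are hypothetical.

```python
import numpy as np

def halfnormal_pdf0(sd):
    """Density at 0 of a normal(0, sd) prior truncated to positive values."""
    return 2.0 / (sd * np.sqrt(2.0 * np.pi))

def posterior_density_at_zero(samples):
    """Reflected Gaussian KDE estimate at the boundary 0 for positive samples."""
    x = np.asarray(samples, dtype=float)
    h = 1.06 * x.std() * x.size ** (-0.2)  # Silverman's rule-of-thumb bandwidth
    # Reflecting the samples about 0 doubles the kernel contribution at 0.
    return 2.0 * np.mean(np.exp(-0.5 * (x / h) ** 2)) / (h * np.sqrt(2.0 * np.pi))

def savage_dickey_bf01(samples, prior_sd=3.0):
    """BF01 = posterior density at k = 0 divided by prior density at k = 0."""
    return posterior_density_at_zero(samples) / halfnormal_pdf0(prior_sd)
```

If the data are uninformative about k, the posterior matches the prior and \(BF_{01} \approx 1\); posterior mass concentrated well away from zero, as for \(k_{speed}\), drives \(BF_{01}\) towards 0 and \(BF_{10}\) upwards.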

In addition, to check the robustness of the method, we carried out a permutation test on the joint model. This involved fitting the same joint model to the same data, except with the NFC scores shuffled randomly between participants, which ensures that the NFC scores were, by design, unrelated to the decision thresholds. If our method detects a relationship only when threshold and NFC are truly related, we would expect to observe evidence in favor of the null hypothesis, k = 0. Analysis of the permuted data set supported this: the Savage-Dickey ratio favored k = 0 for the accuracy emphasis condition (\(BF_{01} = 3.3\), HDI [0.003, 1.396]), and showed no conclusive preference for the speed emphasis condition (\(BF_{10} = 1.25\), HDI [0.025, 1.366]).
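
Refitting the joint model to shuffled NFC scores is computationally expensive, but the same logic can be illustrated on the simpler correlation analysis with a conventional permutation test: shuffling NFC scores across participants many times builds a null distribution for the correlation. This is a swapped-in, lighter-weight analogue rather than the joint-model permutation reported above, and `permutation_p` is a hypothetical helper name.

```python
import numpy as np

def permutation_p(x, y, n_perm, rng):
    """Two-sided permutation p-value for the Pearson correlation of x and y,
    obtained by shuffling y (e.g., NFC scores) across participants."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    observed = np.corrcoef(x, y)[0, 1]
    null = np.empty(n_perm)
    for i in range(n_perm):
        # Shuffling breaks any pairing between a participant's two measures.
        null[i] = np.corrcoef(x, rng.permutation(y))[0, 1]
    # Add-one correction keeps the p-value strictly above zero.
    return (1 + np.sum(np.abs(null) >= abs(observed))) / (n_perm + 1)
```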

Lastly, in order to quantitatively assess how well the joint model fit the data, we compared it to two other models: the original, freely estimated model, and a model that estimated only a single threshold shared across all participants for each condition (allowing no individual differences). We performed these comparisons using the model selection metric WAIC (Vehtari, Gelman, & Gabry, 2015). Throughout this paper we report WAIC on the scale of the expected log pointwise predictive density (\(elpd_{waic}\)) rather than on the deviance scale, which means that larger WAIC values indicate a superior model. The NFC joint model clearly outperformed the single-threshold model (joint model WAIC = −10137; fixed model WAIC = −12427.9), showing that the inclusion of NFC was justified and resulted in a substantially improved fit. However, the freely estimated model outperformed both of these alternatives (WAIC = −9820.2), suggesting that NFC should not be considered a complete replacement for freely estimated decision thresholds.
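
The \(elpd_{waic}\) quantity can be computed from a matrix of pointwise log-likelihoods evaluated at posterior draws, following Vehtari, Gelman, and Gabry (2015): \(elpd_{waic} = lppd - p_{waic}\), where lppd sums, over observations, the log of the posterior-mean likelihood, and \(p_{waic}\) sums the posterior variances of the pointwise log-likelihoods. On this scale larger is better; multiplying by −2 gives the deviance-scale WAIC. A minimal NumPy sketch; the function name is our own.

```python
import numpy as np

def elpd_waic(log_lik):
    """log_lik: array (n_draws, n_observations) of pointwise log-likelihoods
    at posterior draws. Returns (elpd_waic, p_waic)."""
    ll = np.asarray(log_lik, dtype=float)
    n_draws = ll.shape[0]
    # lppd: log of the posterior-mean likelihood per observation,
    # computed stably with the log-sum-exp trick, then summed.
    m = ll.max(axis=0)
    lppd = np.sum(m + np.log(np.exp(ll - m).sum(axis=0)) - np.log(n_draws))
    # Effective number of parameters: summed posterior variance of log-likelihoods.
    p_waic = np.sum(ll.var(axis=0, ddof=1))
    return lppd - p_waic, p_waic
```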

Discussion

Experiment 1 led to three key findings. Firstly, NFC scores showed a reliable negative correlation with both mean response time and decision threshold when participants were under speed emphasis, suggesting a relationship between NFC and people’s decision urgency in situations requiring speed. However, no such relationship was found in the condition where accuracy was emphasized. Secondly, NFC could be successfully integrated into a process model of decision-making (the LBA), replacing the individual decision thresholds without causing severe decrements in goodness of fit. We also found strong evidence that the linking parameter was greater than zero in the speed-emphasis condition (\(k_{speed} > 0\)), confirming that NFC was associated with threshold settings. Lastly, the freely estimated LBA model still outperformed the joint model, indicating that not all of the variation in decision threshold could be explained by need for closure.

An interesting but unexpected finding was that the relationship between need for closure and decision threshold was limited to decisions made under speed emphasis. One possible reason is that between-subject variation in decision-making strategy, and therefore in threshold setting, is much greater under accuracy emphasis than under speed emphasis, obscuring any potential relationship with NFC. Previous research has found that participants behave very similarly under accuracy emphasis and under no instructions at all, which suggests that performance under accuracy emphasis reflects a default, unconstrained strategy (Forstmann et al., 2008). We examine the robustness of this finding in Experiment 2, replicating only the speed-emphasis condition. Experiment 2 also employed a much larger sample of participants, by using group-wise recruitment methods (although these also lead to higher rates of non-compliance with experiment instructions). Finally, Experiment 2 extends the previous results to a new and more widely used perceptual decision-making task, random dot motion.

Experiment 2

Method

Participants

Another 153 participants were recruited from an undergraduate psychology pool and received course credit for participation. Data from 23 participants were removed due to poor accuracy (< 90%) on the easiest trials in the task (40% motion coherence), and data from a further 23 participants were removed due to failure to comply with the speed-emphasis instructions (mean response times above 1.5 s, well above the maximum mean response time of 1.2 s found in the speed-emphasis condition of Experiment 1). Additionally, the first block of each participant's data was treated as practice and was not analyzed.
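The exclusion rules above can be sketched as a per-participant filter. This is a minimal illustration, assuming a hypothetical list of trial records with `block`, `coherence`, `correct`, and `rt` (seconds) fields; applying the criteria only to non-practice blocks is also an assumption, as the text does not say whether the practice block counted toward them.

```python
from statistics import mean


def keep_participant(trials, min_easy_acc=0.90, max_mean_rt=1.5, easy_coherence=40):
    """Return True if a participant passes both exclusion criteria.

    trials: hypothetical records, e.g. {"block": 2, "coherence": 40, "correct": True, "rt": 0.7}.
    Blocks are numbered from 1; block 1 is treated as practice and ignored.
    """
    analysed = [t for t in trials if t["block"] > 1]
    easy = [t for t in analysed if t["coherence"] == easy_coherence]
    easy_accuracy = mean(1.0 if t["correct"] else 0.0 for t in easy)
    mean_rt = mean(t["rt"] for t in analysed)
    # Exclude for accuracy below 90% on 40% coherence trials or mean RT above 1.5 s.
    return easy_accuracy >= min_easy_acc and mean_rt <= max_mean_rt
```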

Procedure

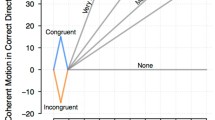

The need for closure questionnaire was administered in the same way as in the first experiment. However, in this experiment all participants first completed the perceptual decision-making task and then the need for closure survey, as order of administration was found not to affect the results in the first experiment. For the random dot motion task in Experiment 2, a cloud of 40 white dots, each 3 pixels in diameter, appeared on a black background at the start of each trial. Within a trial, the dots always remained within a 100 × 100 pixel box in the center of the screen, and any dot that left this box was immediately replaced at a random location within it. During each trial, a proportion of the dots moved coherently towards either the top-left or top-right of the screen; the set of coherently moving dots was re-sampled on every frame, at a frame rate of 15 Hz. The proportion of dots moving coherently towards either the left or the right is known as the “motion coherence”. We used four levels of coherence, randomly intermixed from trial to trial: 0, 5, 10, and 40%.

Participants indicated the direction in which the dots were moving using the keyboard. They were instructed to respond as quickly as they could while maintaining a reasonable level of accuracy. Two forms of feedback were given. If participants made an error on an easy (40% coherence) trial, the message “ERROR ON EASY TRIAL” was displayed on the screen in red. If a participant took longer than 5 s on a trial, the message “Trial timed out” was displayed in white, after which the participant was asked to press the space bar to continue with the task. All participants took less than one hour to complete both the perceptual task and the need for closure questionnaire. Participants completed 5 blocks of 48 trials each, with each block containing 6 trials of each combination of motion coherence and direction, in random order.
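The trial structure (5 blocks of 48 trials, with 6 trials in each of the 4 coherence × 2 direction cells, shuffled within each block) can be generated as in the sketch below. The direction labels, function names, and seeding are illustrative assumptions rather than the software used in the experiment.

```python
import random

COHERENCES = (0, 5, 10, 40)     # percent motion coherence
DIRECTIONS = ("left", "right")  # response alternatives
REPS_PER_CELL = 6               # trials per coherence x direction cell


def make_block(rng):
    """One block: every coherence x direction cell repeated 6 times, in random order."""
    trials = [(c, d) for c in COHERENCES for d in DIRECTIONS for _ in range(REPS_PER_CELL)]
    rng.shuffle(trials)
    return trials


def make_session(n_blocks=5, seed=None):
    """A full session of independently shuffled blocks."""
    rng = random.Random(seed)
    return [make_block(rng) for _ in range(n_blocks)]
```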

Analyses

The analyses of Experiment 2 were very similar to those of the first experiment. The two accumulators represented the “left” and “right” responses, rather than “light” and “dark” as in the first experiment. The two accumulators shared all parameters except drift rates, and we allowed separate drift rate distributions for each coherence condition. The two accumulators shared the same drift rate distribution in the 0% coherence condition, as this condition presented no evidence for either alternative. For all other coherence conditions, the mean of the drift rate distribution for the incorrect response was freely estimated rather than fixed at zero. As there was only a speed condition in this experiment, there was no need for separate thresholds for speed and accuracy. The full model specification, including priors, was:

For the second experiment, 28 MCMC chains were run in parallel for 4,000 burn-in iterations, followed by 1,000 iterations after convergence. Initial samples for both the subject- and group-level distributions were drawn from wide distributions, similar to those of the first experiment. As in Experiment 1, the caution parameter was calculated as b − A/2, using the mean of the posterior distributions as a point estimate.
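The two-accumulator LBA race and the caution summary can be sketched as follows, using the standard LBA assumptions (Brown & Heathcote, 2008): start points drawn from Uniform(0, A), trial-level drift rates drawn from a normal distribution with mean v and standard deviation s, and a linear rise to threshold b. The function names are hypothetical, and resampling trials on which no accumulator has a positive drift is an assumption of this sketch rather than part of the reported analysis. Because start points are uniform on [0, A], b − A/2 is the average distance an accumulator must travel to reach threshold.

```python
import random
from statistics import mean


def simulate_lba_trial(b, A, t0, drift_means, s, rng, max_tries=1000):
    """Simulate one linear ballistic accumulator (LBA) race.

    b: threshold (assumed >= A); A: upper bound of the start-point range;
    t0: non-decision time; drift_means: mean drift per response, e.g.
    {"left": 1.0, "right": 0.6}; s: drift-rate SD shared by both accumulators;
    rng: a random.Random instance. Returns (response, response time).
    """
    # Resample the trial if no accumulator has a positive drift (assumption of this sketch).
    for _ in range(max_tries):
        finish_times = {}
        for response, v in drift_means.items():
            start = rng.uniform(0.0, A)  # start point ~ Uniform(0, A)
            drift = rng.gauss(v, s)      # trial-level drift ~ Normal(v, s)
            if drift > 0:                # a negative drift never reaches threshold
                finish_times[response] = (b - start) / drift
        if finish_times:
            winner = min(finish_times, key=finish_times.get)
            return winner, t0 + finish_times[winner]
    raise RuntimeError("no accumulator had a positive drift rate")


def response_caution(b_samples, A_samples):
    """Caution point estimate b - A/2 from posterior samples of b and A."""
    return mean(b_samples) - mean(A_samples) / 2
```

Since b − A/2 is linear in b and A, computing it from posterior means gives the same value as averaging it over posterior samples.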

Next, we applied the same approach as in the second analysis of the first experiment, although we now had only a single set of linking parameters, as there was no accuracy-emphasis condition in this experiment. As in the first experiment, the hierarchical and prior specification was the same as in the first analysis, except that A was estimated as a proportion of b. The prior on μ for parameter A/b was changed to respect this new definition, using a normal distribution truncated to the unit interval, with mean 0.5 and standard deviation 0.3. Again, a decision threshold was generated for each participant by the equation \(b_{i} = y + \frac {k}{NFC_{i}}\), with the same priors on these parameters as in Experiment 1. We drew 4,000 samples from each chain during burn-in, followed by 1,000 samples after convergence.
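The linking function and the proportional parameterization of A can be written out directly. In this sketch the helper names are hypothetical, NFC scores are assumed to be positive, and the truncated-normal prior is drawn by simple rejection sampling, which is one straightforward method rather than necessarily the one used in the study.

```python
import random


def threshold_from_nfc(nfc, y, k):
    """Linking function b_i = y + k / NFC_i (NFC_i assumed positive)."""
    return y + k / nfc


def start_point_range(b, a_over_b):
    """A is estimated as a proportion of b, so A_i = (A/b) * b_i."""
    return a_over_b * b


def sample_truncated_normal(mu, sigma, lower, upper, rng):
    """Rejection sampler: draw from Normal(mu, sigma) until the draw lies in [lower, upper]."""
    while True:
        x = rng.gauss(mu, sigma)
        if lower <= x <= upper:
            return x
```

With k > 0, higher need for closure scores produce lower thresholds, and restricting A/b to the unit interval guarantees that the start-point range never exceeds the threshold.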

Results

Table 2 provides descriptive statistics for the decision-making and need for closure data from Experiment 2.

The range of need for closure scores was not exceptionally large or small (z-scored range [−2.29, 2.91]), though participants did appear to show more variability than in the first experiment. The correlation between need for closure scores and response times in the perceptual task was weaker (r = −.15) and was no longer reliable, t(105) = −1.57, p = 0.12. The parameter-based measure of response caution from the LBA also correlated more weakly with need for closure scores (r = −.12), although the maximum threshold distance (b) correlated more strongly (r = −.17). However, neither correlation was reliable (p > 0.05). Unlike in the first experiment, two parameters correlated with need for closure scores slightly more strongly than response caution did: \(v_{0}\), r = .14; and s, r = .15. All other parameters correlated with need for closure scores less strongly than response caution: \(t_{0}\), r = −.118; \(v_{5.c}\), r = .004; \(v_{10.c}\), r = −.002; \(v_{40.c}\), r = .03; \(v_{5.i}\), r = .05; \(v_{10.i}\), r = .03; and \(v_{40.i}\), r = −.1. Additionally, all other parameters correlated with need for closure scores less strongly than the maximum threshold distance did.
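The reported test follows from the standard conversion of a Pearson correlation to a t statistic, \(t = r\sqrt{n-2}/\sqrt{1-r^{2}}\), with n − 2 degrees of freedom; t(105) implies n = 107, which matches the 153 recruited participants minus the 46 exclusions. The sketch below computes t and a two-sided p value using only the Python standard library, integrating the t density numerically with Simpson's rule; the function names are illustrative. With the rounded value r = −.15 it gives t ≈ −1.55, so the reported −1.57 presumably reflects the unrounded correlation.

```python
import math


def t_from_r(r, n):
    """t statistic for a Pearson correlation r computed from n pairs (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t, df, steps=2000):
    """Two-sided p value, 1 - 2 * P(0 <= T <= |t|), via Simpson's rule (steps must be even)."""
    upper = abs(t)
    if upper == 0.0:
        return 1.0
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 1.0 - 2.0 * total * h / 3.0
```

For example, `two_sided_p(-1.57, 105)` gives a value of approximately 0.12, in line with the reported p.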

To integrate the need for closure scores with the speed-accuracy tradeoff in the perceptual task, we used the same mathematical function as in the first experiment for our single speed-emphasis condition: \(b_{i} = y + \frac {k}{NFC_{i}}\), where \(b_{i}\) and \(NFC_{i}\) are the decision threshold and need for closure score for the \(i\)th participant, respectively. The parameter y is the population-level threshold intercept, and k is the population-level constant linking threshold with need for closure. Our analysis showed that the central result of the first experiment was replicated in the second: the parameter k, which measures the association of need for closure scores with thresholds, was reliably different from zero. The 99% highest posterior density interval for k did not include zero, covering [0.06, 3.61]. In addition, a Savage-Dickey test yielded reliable evidence against the hypothesis that k = 0 (Bayes factor 6.2-to-1). This Bayes factor was fairly robust to the prior specification; for example, it remained greater than 2.1-to-1 against the hypothesis that k = 0 for priors as narrow as \(N^{+}(0,1)\) (2.15) and as broad as \(N^{+}(0,6)\) (8.3). To put these prior distributions in perspective, the mean posterior estimate was k = 1.67. Despite the lack of reliable correlations in the descriptive statistics and separately estimated model parameters, the joint modeling of Experiment 2 confirmed the central finding that need for closure and decision thresholds share some variance, at a deep level.
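The Savage-Dickey test computes the Bayes factor for a nested point hypothesis as a ratio of densities at that point (Wagenmakers, Lodewyckx, Kuriyal, & Grasman, 2010): \(BF_{10} = p(k = 0)/p(k = 0 \mid \text{data})\), and \(BF_{01} = 1/BF_{10}\). The sketch below assumes a half-normal \(N^{+}(0, \sigma)\) prior on k and a list of posterior samples of k; it estimates the posterior density at zero with a crude one-sided bin, which is a simplifying assumption (smoother density estimators are generally preferable), and the function names are hypothetical.

```python
import math
import random


def half_normal_pdf_at_zero(sd):
    """Density of N+(0, sd) at zero: twice the normal density at its mean."""
    return 2.0 / (sd * math.sqrt(2.0 * math.pi))


def density_at_zero(samples, width):
    """Crude boundary estimate: fraction of samples in [0, width), divided by width."""
    return sum(1 for x in samples if 0.0 <= x < width) / (len(samples) * width)


def savage_dickey_bf10(posterior_samples, prior_sd, width=0.05):
    """BF10 = prior density at k = 0 divided by posterior density at k = 0."""
    return half_normal_pdf_at_zero(prior_sd) / density_at_zero(posterior_samples, width)
```

Because the posterior itself depends on the prior, checking robustness to the prior requires refitting the model under each prior and recomputing both densities, not just changing `prior_sd`.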

We also performed the same robustness check using randomly permuted data for Experiment 2 as in Experiment 1. Again, we found evidence for the hypothesis that k = 0 when using the randomly permuted data (\(BF_{01} = 4.3\), HDI [0.001, 1.174]).
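The permutation check can be sketched as shuffling the need for closure scores across participants, which preserves their distribution but breaks any pairing with individual thresholds, so a genuine link should disappear. The text does not detail the permutation scheme, so shuffling NFC scores is an assumption here; the helper name is hypothetical, and refitting the joint model to the permuted data is not shown.

```python
import random


def permute_nfc(nfc_scores, seed=None):
    """Return a shuffled copy of the NFC scores; the input list is left unchanged."""
    shuffled = list(nfc_scores)
    random.Random(seed).shuffle(shuffled)
    return shuffled
```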

Lastly, we again compared candidate models to help assess how well need for closure accounted for the threshold value, relative to a freely estimated model and a model with no individual variation in threshold. Although the differences in model fit were smaller than in Experiment 1, we again found that the joint model was preferred over the model with no individual differences (joint model WAIC = −14324.7, fixed model WAIC = −14327.0), suggesting some impact of need for closure. However, the difference was quite small (2.3), suggesting a much weaker effect than in Experiment 1. Again, the freely estimated model was the best (WAIC = −14232.2), suggesting that need for closure cannot account for all of the variance in threshold.

Discussion

The aim of our second experiment was to provide a conceptual replication of the first, with a new paradigm and a larger sample. Experiment 2 produced several interesting findings. First, unlike in Experiment 1, we failed to find a reliable correlation between reaction time and need for closure, or between decision threshold and need for closure. We also found much less separation between the correlation of response caution with need for closure and the correlations of the other parameters with need for closure. However, when applying our joint model analysis, we still found need for closure to be related to decision threshold, and to account well for the data when used in place of the threshold. These results are consistent with the idea that need for closure and decision thresholds share some common variance, but that decisions under speed emphasis are required to make this relationship apparent. It seems likely that, with the accuracy-emphasis condition removed in Experiment 2, the speed-emphasis instructions were less effective than in Experiment 1.

General discussion

The balance between caution and urgency in decision-making has been extensively studied, especially for rapid decisions about simple stimuli. This balance is known as the “speed-accuracy tradeoff” and is explained by dominant accumulator-based theories of decision-making. In those theories, decision thresholds set the amount of evidence required to trigger a decision, and these thresholds manage the speed-accuracy tradeoff. The nature and operation of decision thresholds have been elaborated using neurophysiological data from brain imaging and single-neuron recordings, and also using behavioural data, including joint distributions over response time and accuracy.

Our study found a potential link between response caution in rapid decision-making and a personality trait not previously linked to it, known as “need for closure”. Need for closure is a stable, long-term personality trait, which operates on a very different time-scale from that of the rapid-decision speed-accuracy tradeoff. Despite important differences in how the two measures are administered, which are bound to introduce unique sources of noise, and the different time-scales of caution that these measures attempt to assess, we found a link between these constructs in Experiment 1. Our model-based measure of response caution (i.e., the speed-accuracy tradeoff) in a speed-emphasis condition of a perceptual task not only correlated sizeably with need for closure scores; a mathematical model in which need for closure scores replaced response caution also produced a good fit to the data, even with this extra constraint. These findings suggest the potential for some general underlying caution construct that influences part of both people's short-term caution states (response caution) and long-term caution states (need for closure, or the desire for structure and lack of ambiguity).

Although future research is needed to uncover why the relationship was present only in the speed-emphasis condition and not in the accuracy-emphasis condition, one potential reason, mentioned in the discussion of the first experiment, is the default strategy that participants have been found to use under accuracy emphasis (Forstmann et al., 2008). Previous research has found that participants perform very similarly under no instructions and under accuracy emphasis, suggesting that they treat accuracy emphasis as an instruction to perform naturally. This could result in greater variability in decision thresholds under accuracy emphasis, with different participants using their own default strategies, than under speed emphasis, where all participants are focused on a single strategy.

In addition, we were able to partially replicate this finding with a much larger sample of participants and a different perceptual task. Although the correlation between response caution and need for closure score was much weaker and no longer statistically reliable, the same trend was still observed. The strength of the link was much lower than in Experiment 1 (based on an independent-streams analysis), but an integrated model analysis still supported a link between the two measures and provided an adequate fit to the data. In addition, although our exclusion criteria were based on a priori task-performance expectations and so should not have biased the results, an unusually large number of participants performed the task incorrectly or poorly and therefore had to be excluded. Taken together with the weaker relationship between need for closure and decision threshold in this experiment, this suggests that the results of the replication should be interpreted with some caution, and further research is needed to confirm and explore this relationship more conclusively.

The weaker support for the main hypothesis in the second experiment raises questions about both the effect and the second experiment itself. It is possible that the different perceptual tasks used in the two studies contributed to the different results. Although there is no obvious theoretical reason why response caution in a brightness task should be a better measure than in a random dot motion task, one could speculate that the different levels of difficulty may have affected the measure of response caution. Research into speed-accuracy tradeoffs and performance optimality has suggested that in paradigms where multiple levels of difficulty are intermixed within a task, participants may use a response caution setting that collapses over the course of a decision (Cisek, Puskas, & El-Murr, 2009; Ditterich, 2006; Drugowitsch, Moreno-Bote, Churchland, Shadlen, & Pouget, 2012; Thura, Beauregard-Racine, Fradet, & Cisek, 2012). As our model assumes a fixed response caution setting over the course of a decision, if participants were using a collapsing setting, our measure of response caution may have been inaccurate, which could explain the diluted effect.
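To illustrate why a collapsing setting could distort a fixed-threshold estimate of caution, the sketch below compares the finish time of a single linear accumulator under a fixed threshold b and under a threshold that falls linearly, b(t) = b − ct. The linear form and the function names are assumptions for illustration; the cited studies consider other urgency and collapse functions. Setting start + vt = b − ct gives t = (b − start)/(v + c), so the collapse shortens slow, weak-evidence decisions proportionally more than fast ones.

```python
def finish_time_fixed(b, start, drift):
    """Time for a linear accumulator starting at `start` to reach a fixed threshold b."""
    return (b - start) / drift


def finish_time_collapsing(b, start, drift, collapse_rate):
    """Threshold falls linearly, b(t) = b - collapse_rate * t.

    Solving start + drift * t = b - collapse_rate * t for t.
    """
    return (b - start) / (drift + collapse_rate)
```

A model that assumes a fixed threshold must absorb this drift-dependent shortening in its other parameters, which is one way the estimated response caution could become inaccurate.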

A second potential reason for the diluted effect in the second experiment is that the link between response caution and need for closure is actually weaker than originally thought, and that the first experiment overestimated its strength. However, the same trend of results in the second experiment suggests that some link, albeit weak, is in fact present. Alternatively, the strength of the link measured in the first experiment may have been accurate, and the second experiment may have underestimated the effect. Further research is needed to confirm the existence of this link between response caution and need for closure and, if it exists, to estimate its strength more precisely. For example, sampling differences between the two studies need to be investigated.

Lastly, the difference between our correlation analysis and our joint model analysis could be taken to reflect the difference between analyses based on point estimates and analyses that use the full Bayesian posterior. Joint modeling of data streams that are hypothesized to be related allows the discovery of relationships that can be obscured by separate analyses (de Hollander et al., 2016).

Future research should also explore how other personality constructs may relate to the speed-accuracy tradeoff, or show clear paths of divergence from it. Prior research has demonstrated that need for closure is associated with several variables that may also be related to the speed-accuracy tradeoff, such as impulsivity, need for cognition, and fear of invalidity (Webster & Kruglanski, 1994b). However, the moderate size of these associations (absolute rs ≤ .28) supports the divergent validity of the need for closure construct. Consequently, although these other variables may be related to the speed-accuracy tradeoff, they are unlikely to fully account for the effects that we observed. It would be useful to follow up this speculation and investigate whether need for closure is in fact the main construct related to the speed-accuracy tradeoff, rather than one of the constructs with which it is associated.

Notes

This simplifying assumption was used to improve parameter estimation. When freely estimated, the drift rate was estimated very close to 0, but the model produced poorer overall parameter estimates.

References

Balci, F., Simen, P., Niyogi, R., Saxe, A., Hughes, J., Holmes, P., ... Cohen, J. D. (2011). Acquisition of decision making criteria: Reward rate ultimately beats accuracy. Attention, Perception, & Psychophysics, 73, 640–657.

Barkley-Levenson, E. E., & Fox, C. R. (2016). The surprising relationship between indecisiveness and impulsivity. Personality and Individual Differences, 90, 1–6.

Brown, S. D., & Heathcote, A. J. (2008). The simplest complete model of choice reaction time: Linear ballistic accumulation. Cognitive Psychology, 57, 153–178.

Chittka, L., Dyer, A. G., Bock, F., & Dornhaus, A. (2003). Bees trade off foraging speed for accuracy. Nature, 424, 388.

Cisek, P., Puskas, G. A., & El-Murr, S. (2009). Decisions in changing conditions: The urgency-gating model. The Journal of Neuroscience, 29(37), 11560–11571.

de Hollander, G., Forstmann, B. U., & Brown, S. D. (2016). Different ways of linking behavioral and neural data via computational cognitive models. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging, 1(2), 101–109.

Ditterich, J. (2006). Evidence for time-variant decision making. European Journal of Neuroscience, 24(12), 3628–3641.

Drugowitsch, J., Moreno-Bote, R., Churchland, A. K., Shadlen, M. N., & Pouget, A. (2012). The cost of accumulating evidence in perceptual decision making. The Journal of Neuroscience, 32(11), 3612–3628.

Evans, N. J., & Brown, S. D. (2016). People adopt optimal policies in simple decision-making, after practice and guidance. Psychonomic Bulletin & Review, 1–10.

Forstmann, B. U., Dutilh, G., Brown, S., Neumann, J., von Cramon, D. Y., Ridderinkhof, K. R., ... Wagenmakers, E.-J. (2008). Striatum and pre-SMA facilitate decision-making under time pressure. Proceedings of the National Academy of Sciences, 105, 17538–17542.

Forstmann, B. U., Schafer, A., Anwander, A., Neumann, J., Brown, S. D., Wagenmakers, E.-J., ... Turner, R. (2010). Cortico-striatal connections predict control over speed and accuracy in perceptual decision making. Proceedings of the National Academy of Sciences, 107, 15916–15920.

Forstmann, B. U., Tittgemeyer, M., Wagenmakers, E.-J., Derrfuss, J., Imperati, D., & Brown, S. D. (2011). The speed-accuracy tradeoff in the elderly brain: A structural model-based approach. The Journal of Neuroscience, 31(47), 17242–17249.

Forstmann, B. U., & Wagenmakers, E.-J. (Eds.). (2015). An introduction to model-based cognitive neuroscience. Springer.

Heitz, R. P., & Schall, J. D. (2012). Neural mechanisms of speed-accuracy tradeoff. Neuron, 76(3), 616–628.

Ho, T. C., Yang, G., Wu, J., Cassey, P., Brown, S. D., Hoang, N., ... Yang, T. T. (2014). Functional connectivity of negative emotional processing in adolescent depression. Journal of Affective Disorders, 155, 65–74. doi:10.1016/j.jad.2013.10.025

Kruglanski, A. W. (1989). Lay epistemics and human knowledge: Cognitive and motivational bases. New York, NY: Plenum.

Kruglanski, A. W. (2004). The psychology of closed mindedness. New York, NY: Psychology Press.

Kruschke, J., & Liddell, T. (2015). The Bayesian new statistics: Two historical trends converge. SSRN, 2606016, 1–21.

Latty, T., & Beekman, M. (2011). Speed-accuracy trade-offs during foraging decisions in the acellular slime mould Physarum polycephalum. Proceedings of the Royal Society B: Biological Sciences, 278(1705), 539–545.

Morey, R. D., Hoekstra, R., Rouder, J. N., Lee, M. D., & Wagenmakers, E.-J. (2016). The fallacy of placing confidence in confidence intervals. Psychonomic Bulletin & Review, 23(1), 103–123.

Rae, B., Heathcote, A. J., Donkin, C., Averell, L., & Brown, S. D. (2014). The hare and the tortoise: Emphasizing speed can change the evidence used to make decisions. Journal of Experimental Psychology: Learning, Memory, and Cognition.

Ratcliff, R. (1978). A theory of memory retrieval. Psychological Review, 85, 59–108.

Ratcliff, R., & Rouder, J. N. (1998). Modeling response times for two-choice decisions. Psychological Science, 9, 347–356.

Ratcliff, R., & Van Dongen, H. P. A. (2011). Diffusion model for one-choice reaction-time tasks and the cognitive effects of sleep deprivation. Proceedings of the National Academy of Sciences, 108(27), 11285–11290. doi:10.1073/pnas.1100483108

Ratcliff, R., Thapar, A., & McKoon, G. (2001). The effects of aging on reaction time in a signal detection task. Psychology and Aging, 16, 323–341.

Ratcliff, R., Smith, P. L., Brown, S. D., & McKoon, G. (2016). Diffusion decision model: Current issues and history. Trends in Cognitive Sciences, 20(4), 260–281.

Rinberg, D., Koulakov, A., & Gelperin, A. (2006). Speed-accuracy tradeoff in olfaction. Neuron, 51, 351–358.

Roets, A., & van Hiel, A. (2007). Separating ability from need: Clarifying the dimensional structure of the need for closure scale. Personality and Social Psychology Bulletin, 33, 266–280.

Roets, A., & Soetens, B. (2010). Need and ability to achieve closure: Relationships with symptoms of psychopathology. Personality and Individual Differences, 48, 155–160.

Roitman, J. D., & Shadlen, M. N. (2002). Responses of neurons in the lateral interparietal area during a combined visual discrimination reaction time task. Journal of Neuroscience, 22, 9475–9489.

Rubin, M., Paolini, S., & Crisp, R. J. (2011). The relationship between the need for closure and deviant bias: An investigation of generality and process. International Journal of Psychology, 46, 206–213.

Simen, P., Cohen, J. D., & Holmes, P. (2006). Rapid decision threshold modulation by reward rate in a neural network. Neural Networks, 19, 1013–1026.

Starns, J., & Ratcliff, R. (2012). Age-related differences in diffusion model boundary optimality with both trial-limited and time-limited tasks. Psychonomic Bulletin and Review, 19, 139–145.

Thura, D., Beauregard-Racine, J., Fradet, C. -W., & Cisek, P. (2012). Decision making by urgency gating: Theory and experimental support. Journal of Neurophysiology, 108(11), 2912–2930.

Turner, B. M., Sederberg, P., Brown, S. D., & Steyvers, M. (2013). A note on efficiently sampling from distributions with correlated dimensions. Psychological Methods, 18(3), 368–384.

Uchida, N., & Mainen, Z. F. (2003). Speed and accuracy of olfactory discrimination in the rat. Nature Neuroscience, 6, 1224–1229.

van Ravenzwaaij, D., Dutilh, G., & Wagenmakers, E.-J. (2012). A diffusion model decomposition of the effects of alcohol on perceptual decision making. Psychopharmacology, 219(4), 1017–1025. doi:10.1007/s00213-011-2435-9

Van Zandt, T., Colonius, H., & Proctor, R. W. (2000). A comparison of two response time models applied to perceptual matching. Psychonomic Bulletin and Review, 7.

Vehtari, A., Gelman, A., & Gabry, J. (2015). Efficient implementation of leave-one-out cross-validation and WAIC for evaluating fitted Bayesian models.

Wagenmakers, E.-J., Lodewyckx, T., Kuriyal, H., & Grasman, R. P. P. P. (2010). Bayesian hypothesis testing for psychologists: A tutorial on the Savage-Dickey method. Cognitive Psychology, 60, 158–189.

Webster, D. M., & Kruglanski, A. W. (1994a). Individual differences in need for closure. Journal of Personality and Social Psychology, 67, 1049–1062.

Webster, D. M., & Kruglanski, A. W. (1994b). Individual differences in need for cognitive closure. Journal of Personality and Social Psychology, 67(6), 1049.

Wickelgren, W. A. (1977). Speed-accuracy tradeoff and information processing dynamics. Acta Psychologica, 41, 67–85.

Acknowledgements

This research was supported in part by the Australian Research Council grants DP130100124 and FT120100244 to SDB.

About this article

Cite this article

Evans, N.J., Rae, B., Bushmakin, M. et al. Need for closure is associated with urgency in perceptual decision-making. Mem Cogn 45, 1193–1205 (2017). https://doi.org/10.3758/s13421-017-0718-z

DOI: https://doi.org/10.3758/s13421-017-0718-z