Abstract

The ongoing discussion among scientists about null-hypothesis significance testing and Bayesian data analysis has led to speculation about the practices and consequences of “researcher degrees of freedom.” This article advances this debate by asking the broader questions that we, as scientists, should be asking: How do scientists make decisions in the course of doing research, and what is the impact of these decisions on scientific conclusions? We asked practicing scientists to collect data in a simulated research environment, and our findings show that some scientists use data collection heuristics that deviate from prescribed methodology. Monte Carlo simulations show that data collection heuristics based on p values lead to biases in estimated effect sizes and Bayes factors and to increases in both false-positive and false-negative rates, depending on the specific heuristic. We also show that using Bayesian data collection methods does not eliminate these biases. Thus, our study highlights the little appreciated fact that the process of doing science is a behavioral endeavor that can bias statistical description and inference in a manner that transcends adherence to any particular statistical framework.

Scientists are required to make numerous decisions in the course of designing and implementing experiments and, again, when analyzing the data. No doubt, many of these decisions are relevant to and informed by theory. However, a good number of decisions have more to do with how the data collection process unfolds over time and less to do with the specific scientific question under scrutiny; these are the so-called “researcher degrees of freedom” (Simmons, Nelson, & Simonsohn, 2011). What few data have been collected on the topic of scientific decision making have corroborated these speculations about researcher behavior: Researchers often use shortcuts in their scientific judgment and decision making when communicating findings, interpreting published results (Soyer & Hogarth, 2012), and collecting data, such as stopping an experiment once a statistical test reaches significance (John, Loewenstein, & Prelec, 2012). But this is only a precursor to the broader question that we, as researchers, should be asking ourselves: How do scientists make decisions? And, if scientists are susceptible to decision-making fallacies, what is the consequence for science? In this article, we argue that heuristic use can collide with the goals of science. We show that the heuristics that researchers use when implementing the scientific method are more diverse than merely stopping when a study reaches significance and that the consequences are more pronounced than increases in false-positive rates.

The motivation for our work stems from what Tversky and Kahneman (1971) famously termed the “law of small numbers.” Simply put, the law of small numbers is the belief that small samples are representative of the population and is a specific instantiation of the more general representativeness heuristic. Tversky and Kahneman (1971) argued that belief in the law of small numbers was sufficient to explain why people, including scientists, are often overly confident in statistics based on small samples and generally biased toward endorsing conclusions based on small samples. We argue that scientists’ decisions about which experiments to pursue and which to abandon midstream are overly sensitive to the outcomes of preliminary analyses based on small samples. Given that preliminary analyses can cut both ways, yielding significant effects or nonsignificant effects by conventional null-hypothesis standards, it is likely that scientists will be willing to terminate experiments that appear destined for failure on the basis of preliminary results, while selectively pursuing those experiments that appear destined to succeed (i.e., those with significant or marginally significant p values). In the present article, we evaluate the nature of the heuristics that scientists use to guide decisions about how much data to collect and the impact that data collection heuristics have on scientific conclusions (Footnote 1).

We place our analysis within the context of decision heuristics to differentiate between behaviors that are acts of deception (John et al., 2012) versus those that are driven by misconceptions about sampling processes (cf. Tversky & Kahneman, 1971). While there have been a number of recent high-profile cases of deceptive practices in science (Carey, 2011; Simonsohn, in press), we believe that the bigger issue lies not with the small number of cases of outright fraud, but with a general lack of understanding of the relationship between a researcher’s behavior, biased sampling processes, and statistical analysis.

Our investigation of stopping decisions by researchers is couched within the context of the null hypothesis significance testing (NHST) framework, which is the most common framework within which behavioral science is conducted. Within prescriptive NHST, researchers are permitted very few degrees of freedom for deciding how much data to collect and when to terminate collection. For most behavioral studies, the prescribed method is to decide on the sample size a priori using power calculations and then sample to completion, regardless of what the data look like as they are being collected, or else risk inflated false-positive rates (Simmons et al., 2011; Strube, 2006). That is, one is not permitted to act on preliminary findings when those findings look promising or when the preliminary findings suggest that the study is doomed to failure (Footnote 2). However, power analyses are imperfect, since effect size calculations tend to overestimate the population effect sizes when based on either small samples (e.g., pilot data; Fiedler, 2011; Ioannidis, 2008) or published work (i.e., due to publication biases; Francis, 2012). Thus, while power analyses offer a rough guide to how much data are needed, they require that the researcher make several assumptions about the still unknown data set, even if there is uncertainty concerning the validity of those assumptions. This uncertainty provides the researcher with a ready-made justification for deviating from the original data collection plan, whether it involves collecting more data than initially planned when the manifest effects appear smaller than anticipated or terminating a study early on the basis of the significance or nonsignificance of an effect. Indeed, the commonly held belief that small samples are representative of population parameters is the only assumption needed to justify stopping rules based on p values: If, given half of the desired sample, the effects appear significant (or far from significant, for that matter), then why continue collecting data?

In what follows, we examine the decisions that researchers make when collecting data. Our approach was to construct a simulated research environment in which the goal was to earn promotion or tenure while managing a series of simulated two-independent-group experiments in which scientists decided how much data to collect and when to terminate the data collection process. The patterns of choices made by scientists allowed us to evaluate whether scientists’ stopping decisions were sensitive to results based on small samples. We then simulated the impact of a range of p-value-based heuristics on the validity of statistical conclusions across a number of measures, including Bayesian methods. In light of the recent calls to abandon NHST methods in favor of Bayesian methods (Gallistel, 2009; Rouder, Speckman, Sun, Morey, & Iverson, 2009; Wagenmakers, 2007), as well as recent attempts to reanalyze data originally collected under the NHST framework using Bayesian analyses (Gallistel, 2009; Rouder et al., 2009; Wetzels et al., 2011), we thought it timely to assess the impact of heuristic use on a broader array of statistical outcomes. Our analyses show that heuristics, including those used by scientists in our study, lead to effects that cannot be easily corrected by simply reanalyzing the data using Bayesian statistics and that effects of deciding to terminate a study early transcend both descriptive and inferential statistics.

Method

Participants were recruited using e-mails to professional societies asking researchers to participate in a study on scientific decision making. Data were collected for 15 days, during which 462 people gave their informed consent and began the experiment; collection was stopped when the daily completion rate dropped below 50 %. Ultimately, 314 researchers completed the study (including 37.6 % faculty, 16.9 % postdoctoral students, and 38.5 % graduate students). They worked primarily within psychology (46.5 %), business/management (13.1 %), economics (9.2 %), linguistics (8.9 %), and neuroscience (7.6 %). Most participants primarily engaged in experimental research (72.6 %), followed by modeling (11.8 %) and fieldwork (11.8 %). Participants were knowledgeable about statistics, having reported completing a median of four statistics courses.

The task was designed as a data collection environment where the goal was to earn promotion or tenure while managing a series of simulated two-independent-samples experiments given a limited budget. Figure 1 illustrates the structure of the decision points in the game. At the start of the task, instructions stated that the typical sample size for the experiments ranged from 20 to 50 subjects. These sample sizes were subsequently offered to all participants as data collection goals. Thus, there existed a straightforward policy for participants pursuing a purist methodology: set a priori sample size goals of 20 or 50 subjects per group and end data collection regardless of statistical outcomes. Alternatively, participants could elect to monitor the data as they were being collected. As is described below, regardless of participants’ initial decisions, they were always given the opportunity to collect additional data—an opportunity that also exists for most researchers.

The first decision of each simulated experiment was whether to monitor the data as they were collected or look at the data only at the end of that experiment. Those participants who chose to monitor the experiment (monitors) observed interim results as data were collected at predetermined intervals (n = 20, 35, 50, 60, 70 per group or until termination). Those participants who chose to look at data only at the end of that simulated experiment were then given the option to collect a sample of 20 or 50 subjects per group. Participants who chose a sample size of 20 subjects per group (choose 20) ultimately observed the same stimuli as monitors; participants who chose a sample size of 50 subjects per group (choose 50) also observed the same stimuli, but only for the predetermined intervals from n = 50 and beyond. At each observed interval, participants were given feedback on the outcome of an independent t test in the form of a p value. Participants then chose whether to continue data collection (which would result in observing the results at the next predetermined sample size interval), publish results and move on, or end that simulated experiment and move on. Regardless of these choices, all participants observed the same simulated experiments, differing only in the time of the decision to terminate data collection.

To evaluate whether data collection decisions are sensitive to preliminary results, six different sequences of p values were used across scenarios (see Table 1). Some sequences reflected null effects, and others true effects. Precise p values shown were jittered around the specified values to avoid repetition of stimuli: Values shown were selected from a uniformly distributed interval with minimum calculated at 10 % below the specified value and maximum 10 % above (e.g., for a p value of .06, actual values were drawn from the interval [0.054, 0.066]). After observing each of the six sequences once, sequences were randomly selected to repeat evenly.
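The jittering rule described above can be sketched in a few lines. This is a minimal illustration only, assuming a plain uniform draw; the function name `jitter_p` and the `spread` parameter are hypothetical and do not come from the study materials.

```python
import random

def jitter_p(specified_p, spread=0.10, rng=random):
    """Draw a displayed p value uniformly within +/- spread of the specified value."""
    low = specified_p * (1 - spread)    # 10 % below the specified value
    high = specified_p * (1 + spread)   # 10 % above the specified value
    return rng.uniform(low, high)

# Example: five displayed values for a specified p value of .06.
rng = random.Random(1)  # seeded only so the sketch is repeatable
shown = [jitter_p(0.06, rng=rng) for _ in range(5)]
```

For a specified value of .06, every draw falls in the interval [0.054, 0.066], matching the example in the text.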

To approximate the conditions under which most research is conducted, the participants were also given cost information and a research budget. Each data point collected by the researcher cost $10. Thus, for example, if a participant decided to collect 100 data points for a particular experiment, he or she would be charged $1,000 against his or her total budget. In other words, the more money spent on one experiment, the less would be available for other experiments. The accumulated experiment cost was displayed alongside p value feedback at each interval, and the remaining budget size was summarized at the completion of each experiment alongside the number of studies published up until that point. Budget size was varied between subjects, with half of the participants being provided enough funds to complete the requested 12 simulated experiments using a purist policy and aiming for 50 subjects per group for each experiment, for a total of $12,000, while the other group was provided only $6,500.
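The budget arithmetic can be checked with a short sketch. The $10 cost per data point, the 12 experiments, and the two budget levels are taken from the text; the function and variable names are hypothetical.

```python
COST_PER_DATA_POINT = 10  # dollars per data point, as stated in the text

def experiment_cost(n_per_group, groups=2):
    """Cost of one two-group experiment with n_per_group subjects in each group."""
    return n_per_group * groups * COST_PER_DATA_POINT

# Purist policy at 50 subjects per group for all 12 experiments.
purist_total = 12 * experiment_cost(50)

# Number of full 50-per-group experiments the smaller budget can fund.
low_budget_full_experiments = 6500 // experiment_cost(50)
```

Under these figures, the larger budget exactly covers the purist policy at 50 subjects per group, whereas the smaller budget covers six such experiments with $500 to spare.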

After completing the simulated experiments or exhausting their budgets, participants were asked about the data collection policies they adopt when doing their own research. Questions prompted participants for both open-ended descriptions and responses to a pair of questions that explicitly asked for their decision based on early p value results: “Still thinking of your own research, imagine that you have started a new series of studies. Halfway through data collection, a significance test shows a very high, nonsignificant p value. What would you typically do?” Response choices included the following: “Continue data collection as planned,” “Re-evaluate the study design,” “Stop the study and prepare for publication,” and “other.” The paired question and response choices read the same, except that in the second question, the significance tests showed a “very low, highly significant p value.” Demographic data including field, position, primary methodology, and statistics experience were collected.

Results

To be clear, under NHST, the prescribed method for data collection is to decide on the sample size a priori and to sample to completion. However, as is shown in Fig. 2, the prescribed method was not the most common strategy used in the experimental task. Of the 3,274 methodology decisions made by participants, 1,642 decisions were to monitor data collection (monitor: 50.2 %), 1,058 were to check results after 50 subjects (choose 50: 32.3 %), and 574 were to check results after 20 subjects (choose 20: 17.5 %).

Fig. 2 Proportions of participants who decided to collect more data. Panels represent the different simulated experiment scenarios. Lines represent initial data collection policy choices: monitor data collection (dashed line), check results only after 20 subjects per group (solid line), or after 50 subjects (open marker). At the fifth decision point, all remaining participants were forced to end data collection.

Overall, researchers frequently decided to collect more data when the p value was marginally significant, as is evident in the top two panels of Fig. 2, where the initial p value was .11. This pattern held regardless of whether participants initially chose to monitor data collection or look only at the end. Generally, participants who chose to look at results at the end after 50 subjects were far less likely to continue sampling beyond their initial choice. Across nearly all scenarios, monitors ultimately collected more data than did those who chose to check results after reaching a sample size of 20 (all Mann–Whitney Us < 1,978.50, Zs > 3.35, ps < .001, except the repetition of scenario D: U = 2,122.5, Z = 1.477, p = .14). But also interesting is the finding that these participants often decided to stop data collection after observing a high nonsignificant p value. Even slight trends toward high p values resulted in termination decisions: As is shown in the top two panels, after initial p values of .11, more than 80 % of monitors continued data collection after a subsequent p value of .06, but only 45.3 % continued after a subsequent p value of .14. Such patterns are also apparent in the behavior of participants choosing to check results after a sample size of 20 or 50, suggesting that p values influence even those participants who start with the intention to terminate data collection at a predetermined sample size. As a final point, not all participants stopped after observing a significant p value. In fact, some participants continued to collect more data even after observing a significant effect, even if the initial sampling goal was reached. For example, roughly 40 % of monitors and between 10 % and 20 % of choose 20 participants continued to collect data after the initial p value was .035 (see middle two panels of Fig. 2). Interestingly, participants with low budgets made data collection decisions similar to those with high budgets. The budget size had no significant effect on the number of simulated subjects that participants decided to run within the first six simulated experiments encountered (Footnote 3; see Appendix 1), suggesting that financial considerations were secondary to considerations of the p value.

The pattern of choice behavior in Fig. 2 demonstrates that the data collection decisions made by researchers are sensitive to the magnitude of the p value. Specifically, it appears that researchers are willing to terminate data collection after observing a low (significant) p value or after observing a high (nonsignificant) p value but continue to sample when the p value is marginal. Regardless of the specific heuristic participants may have used, a large proportion of data collection decisions were influenced by preliminary results.

To estimate the proportion of participants whose data collection policies are sensitive to p values, three methods were used. The results are shown in Table 2. In the first, behavioral data were extracted from the simulated experiments task. To qualify for classification, a participant had to show consistent behavior across all simulated experiments; inconsistent individuals were labeled “unclassified” (Footnote 4). In the second, two independent coders, who were both knowledgeable about the analysis, classified participants’ open-ended descriptions of their own research decisions according to mutually exclusive data collection policies. There was substantial agreement between the two raters (Cohen’s unweighted kappa = 0.72, SE = 0.032). In the third, participants responded to a pair of questions that explicitly asked for their decision based on early p value results: “Still thinking of your own research, imagine that you have started a new series of studies. Halfway through data collection, a significance test shows a very high, nonsignificant p value. What would you typically do?” Response choices included the following: “Continue data collection as planned,” “Re-evaluate the study design,” “Stop the study and prepare for publication,” or “other.” The paired question and response choices read the same, except that in the second question, the significance tests showed a “very low, highly significant p value.”
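For readers unfamiliar with the agreement statistic reported above, the following sketch computes Cohen's unweighted kappa as (observed agreement − chance agreement) / (1 − chance agreement). The two label lists are invented toy data, not the coders' actual classifications, and the function name is hypothetical.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters assigning mutually exclusive labels."""
    n = len(rater_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance from each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    return (observed - expected) / (1 - expected)

# Toy data (hypothetical): six open-ended responses coded by two raters.
coder_1 = ["purist", "low", "high", "purist", "low", "purist"]
coder_2 = ["purist", "low", "purist", "purist", "low", "high"]
kappa = cohens_kappa(coder_1, coder_2)
```

A value of 1 indicates perfect agreement and a value of 0 indicates agreement no better than chance.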

Within the simulated experiments game, 71.3 % of individuals could be classified, with 56.7 % using a heuristic in which sampling decisions were based on a low or a high p value and only 14.7 % consistently using the prescriptive (purist) policy. In contrast, when scientists were asked how they decide when to terminate data collection in their own research, the most common response was a purist policy (41.9 %). Similarly, only 16.2 % of participants self-reported using a heuristic based on a high p value threshold, but when given the hypothetical scenario about whether they would stop an experiment when the preliminary analysis yielded a high p value, 49.4 % said they would. Interestingly, the proportion of people identified as purists was lowest in the experimental task, whereas the proportion identified as using a low stopping rule was highest for the simulated game.

We suspect that behavior in the experimental task is a better representation of what researchers would actually do when confronted with a decision of whether to collect more data when provided with the results of a preliminary analysis. We also speculate that the discrepancy between game behavior and explicit claims of strategy use demonstrates that even relatively knowledgeable and well-intentioned scientists are not immune to the powerful effects of beliefs about the robustness of small sample statistics, a conclusion that was also drawn by Tversky and Kahneman (1971) in their seminal work. Our work, however, illustrates that researchers’ use of information about p values is actually more nuanced than previously believed (John et al., 2012; Simmons et al., 2011; cf. Frick, 1998). But what are the implications of using decision heuristics based on small sample preliminary analyses, in comparison with the prescriptive method?

Simulations of heuristics

To evaluate the impact of researchers’ decisions on statistical conclusions, we simulated stopping heuristics using Monte Carlo methodology. The simulations were specifically targeted at understanding the impact of p-value-based stopping heuristics, given their dominance in professional discussions and the open-ended descriptions of scientists’ own practices: purist (see Table 2, 41.9 %) and stopping based on low p values (9.6 %), high p values (6.9 %), and low or high p values (9.4 %). The other most commonly reported stopping heuristics (subject availability, 7.32 %; time, 5.7 %; and money, 5.1 %) are not data dependent, and therefore, their consequences are limited to the known problems of small sample experiments such as low power (we return to this in the Discussion section). Although only 1 out of the 314 scientists who completed our study reported using Bayesian statistics, we simulated a set of heuristics analogous to those based on the p value, using the magnitude of the Bayes factor (BF) as a stopping threshold, to determine whether the problems stemming from optional stopping were unique to the NHST approach.

All of the simulations were implemented within the context of a two-independent-samples research design, with significance tests conducted using a t test with α = .05. For completeness, we simulated experiments both when the null hypothesis was true and when the alternative hypothesis was true and with varying effect sizes, with Cohen’s d ranging from 0.00 to 0.80, in steps of 0.10. Data for all simulations were generated from random normal distributions with σ1 = σ2 = 1 and with the value of the means depending on the magnitude of the effect size. Ten thousand Monte Carlo runs were simulated for each heuristic by effect size combination, resulting in 1,530,000 simulated experiments. The initial check of the data was done with a sample of n1 = n2 = 10, and sampling continued in increments of n = 10 per group until the particular heuristic stopping rule was triggered or when n1 = n2 = 100 (i.e., we never sampled more than 100 observations per group). For each simulated experiment, we computed the t test and significance value, the BF, and the effect size using Hedges’ g. Formulae for the BF and Hedges’ g are provided in Appendix 3.
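As a rough illustration of a single simulated experiment, the sketch below draws two normal samples with unit variance, computes the pooled-variance t test, and applies a small-sample correction to Cohen's d. It is not the authors' code. The correction factor 1 − 3/(4·df − 1) is the common approximation for Hedges' g and is an assumption here, since Appendix 3 is not reproduced in this section. The p value is obtained by numerically integrating the t density with Simpson's rule so that only the standard library is needed. All function names are hypothetical.

```python
import math
import random
import statistics

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

def two_sided_p(t, df, steps=2000):
    """Two-sided p value: 1 - 2 * integral of the density from 0 to |t| (Simpson's rule)."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return max(0.0, 1 - 2 * total * h / 3)

def simulate_experiment(d, n, rng):
    """Draw n observations per group from N(0, 1) and N(d, 1); return (t, p, g)."""
    control = [rng.gauss(0.0, 1.0) for _ in range(n)]
    treatment = [rng.gauss(d, 1.0) for _ in range(n)]
    df = 2 * n - 2
    # With equal group sizes the pooled variance is the mean of the two variances.
    pooled_var = (statistics.variance(control) + statistics.variance(treatment)) / 2
    diff = statistics.fmean(treatment) - statistics.fmean(control)
    t = diff / math.sqrt(pooled_var * 2 / n)
    cohen_d = diff / math.sqrt(pooled_var)
    g = cohen_d * (1 - 3 / (4 * df - 1))  # assumed small-sample correction
    return t, two_sided_p(t, df), g

t_stat, p_value, hedges_g = simulate_experiment(d=0.5, n=10, rng=random.Random(0))
```

Repeating `simulate_experiment` at n = 10, 20, ..., 100 on a growing sample, rather than on fresh draws, would be needed to mirror the sequential checks described above.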

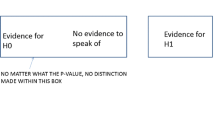

P-value-based data collection heuristics

All of these heuristics are based on the magnitude of the p value, with some heuristics designed to stop when the p value is .05 or less, when the p value exceeds a relatively high threshold indicating nonsignificance (.25, .50, .75), or when the p value either drops to or below .05 or becomes greater than a high threshold. For reference, the purist policy is also simulated.

Purist

The purist sampling heuristic is one that is adopted by a researcher who goes by the books. This researcher chooses the desired sample size a priori (perhaps using power analyses), samples to completion, and then stops data collection. That is, the stopping decision is based solely on whether the desired sample size has been achieved.

Low optional stopper

We implemented this heuristic using the following algorithm. (1) Start with a small sample (e.g., n1 = n2 = 10). (2) Run statistical test (if p ≤ .05, then terminate data collection; if p > .05, then go to step 3). (3) If total N < 200, then add 10 subjects to each group and go to step 2; otherwise, stop and report as nonsignificant.

High optional stopper

This heuristic was implemented for three variations, using high thresholds (HTs) of p ≥ .75 (H75), p ≥ .50 (H50), and p ≥ .25 (H25), as follows. (1) Start with a small sample (e.g., n1 = n2 = 10). (2) Run statistical test (if p ≥ HT, then terminate data collection; otherwise, go to step 3). (3) If total N < 200, add 10 subjects to each group and go to step 2; otherwise, stop and report as nonsignificant.

Low–high optional stopper

This heuristic was implemented for three variations, using HTs of p ≥ .75 (LH75), p ≥ .50 (LH50), and p ≥ .25 (LH25), as follows. (1) Start with a small sample (e.g., n1 = n2 = 10). (2) Run statistical test (if p ≤ .05 or p ≥ HT, then terminate data collection; otherwise, go to step 3). (3) If total N < 200, add 10 subjects to each group and go to step 2; otherwise, stop and report as nonsignificant.
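The three-step algorithms for the low, high, and low–high optional stoppers share one loop and differ only in which thresholds are active. The sketch below expresses that loop. The names `run_heuristic` and `p_at` are hypothetical, and the p value at each sample size is supplied by the caller, here from a scripted sequence rather than from simulated data.

```python
def run_heuristic(p_at, low=None, high=None, start_n=10, step=10, max_n=100):
    """Apply a p-value-based stopping heuristic; return (n_per_group, p, reason).

    p_at(n) is a caller-supplied function returning the p value at n per group.
    low=0.05, high=None   -> low optional stopper
    low=None, high=HT     -> high optional stopper (e.g., HT = .75, .50, .25)
    low=0.05, high=HT     -> low-high optional stopper
    low=None, high=None   -> no interim stopping; runs to max_n, as a purist would
    """
    n = start_n
    while True:
        p = p_at(n)                                  # step 2: run the test
        if low is not None and p <= low:
            return n, p, "low threshold"
        if high is not None and p >= high:
            return n, p, "high threshold"
        if n >= max_n:                               # step 3: total N has reached 200
            return n, p, "max sample"
        n += step                                    # add 10 subjects to each group

# Scripted p values for illustration only (hypothetical sequence).
scripted = {10: 0.30, 20: 0.11, 30: 0.06, 40: 0.04}
p_at = lambda n: scripted.get(n, 0.20)

low_result = run_heuristic(p_at, low=0.05)
h25_result = run_heuristic(p_at, high=0.25)
lh50_result = run_heuristic(p_at, low=0.05, high=0.50)
purist_result = run_heuristic(p_at)
```

With this scripted sequence, the low stopper and LH50 both end at 40 per group on p = .04, H25 ends at the first look because .30 exceeds .25, and the policy without thresholds runs to 100 per group.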

Bayes-factor-based data collection heuristics

We simulated nine additional data collection heuristics within a Bayesian framework. These rules are similar in structure to those based on the p value but implement high and low thresholds on the BF instead, varying in the amount of evidence (magnitude of the BF) needed to trigger a stopping decision. In other words, these heuristics approximate the decision process of a researcher who checks preliminary results using Bayesian statistics to determine whether the evidence in favor of a hypothesis is sufficiently strong. From a Bayesian perspective, any experiment provides information useful for evaluating any particular hypothesis. Therefore, the measure of interest is the degree to which the evidence supports the null or alternative hypothesis; issues of type I errors and power are not relevant to evaluating evidentiary strength.

The first heuristic is a sample to completion heuristic and is formally identical to the purist policy. The remaining eight heuristics follow a three-step algorithm consisting of the following. (1) Start with n1 = n2 = 10. (2) Estimate the BF. If BF ≥ HT or BF ≤ LT, then terminate data collection; otherwise, go to step 3. (3) If total N < 200, add 10 subjects to each group and go to step 2; otherwise, stop and report BF. The thresholds used are listed in Table 3. In order to simulate heuristics for which the decision to stop is based on a low threshold but not a high threshold, we prevented the simulation from reaching a high threshold by setting the level to 50,000:1.
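The stopping check in step 2 can be sketched as follows. The BF formula actually used is given in Appendix 3 and is not reproduced in this section, so this sketch swaps in the BIC approximation to the Bayes factor (in the spirit of Wagenmakers, 2007), which may differ from the authors' computation. The BF is expressed as evidence for the null over the alternative, consistent with the later statement that log values above 0 favor the null. The threshold of 5 is illustrative only and is not taken from Table 3; the function names are hypothetical.

```python
import math

def bf01_bic(t, n1, n2):
    """BIC approximation to the Bayes factor for the null over the alternative.

    Assumption: this stands in for the Appendix 3 formula, which is not shown here.
    """
    n_total = n1 + n2
    df = n_total - 2
    return math.sqrt(n_total) * (1 + t * t / df) ** (-n_total / 2)

def bf_stop(bf01, high, low):
    """True when BF >= HT (evidence for the null) or BF <= LT (evidence for the alternative)."""
    return bf01 >= high or bf01 <= low

# Setting the high threshold to 50,000:1 effectively disables it, as in the text.
NO_HIGH = 50_000

stop_for_null = bf_stop(bf01_bic(0.0, 50, 50), high=5, low=1 / 5)
stop_for_alt = bf_stop(bf01_bic(3.0, 10, 10), high=NO_HIGH, low=1 / 5)
keep_sampling = not bf_stop(bf01_bic(1.0, 10, 10), high=NO_HIGH, low=1 / 5)
```

Under this approximation, the BF in favor of the null cannot exceed the square root of the total sample size, so a threshold of 50,000:1 is never reached with at most 200 observations.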

Simulation results

The results of these simulations are presented in Figs. 3, 4 and 5. The simulations demonstrate that different decision heuristics for data collection have distinct consequences for statistical conclusions. As is well known, decisions to terminate experiments based on a low p value lead to an increase in false-positive rates (see Fig. 3). However, much more interesting are the results from the other heuristics. For example, for stopping rules that involved both high and low thresholds, false positives decreased as a function of upper threshold on the p value; while LH25 led to a false-positive rate of approximately 7 %, rules based exclusively on the upper threshold led to false positives at rates lower than alpha. Concomitantly, statistical power was also decreased when decisions to terminate include an upper threshold. Thus, while some heuristics used by researchers lead to increased false positives, others actually lead to increases in false negatives.

But the false-positive and false-negative rates tell only part of the story. As is shown in Fig. 4, estimated effect sizes were also impacted differentially by different decision heuristics. Effect sizes derived from heuristics that terminated data collection based on p ≤ .05 (low, LH75, LH50, and LH25) systematically overestimated the magnitude of the population effect size, whereas heuristics based only on the upper threshold led to an underestimation of the population effect (Footnote 5).

Clearly, stopping early on the basis of p values has negative consequences for the validity of tests of statistical significance and measures of effect size. However, one might believe that the negative effects of liberal stopping policies can be self-corrected by using a more conservative approach in setting a more stringent stopping threshold. For example, one might conduct preliminary tests and decide to collect more data only if the test is already significant or near significant, effectively requiring that the test result show significance two times. The underlying rationale of continuing to collect data after a significant or marginally significant effect may be that people believe that an effect is “robust” if, upon further inspection and after more data are collected, the effect is still significant. Such beliefs may lead people to adopt data collection heuristics in which they either stop data collection after the p value “stabilizes” or only pursue experiments that are already marginally significant, two behaviors that we observed in our experiment. This type of reasoning, however, is a reflection of the erroneous intuition that if an effect is significant at a small sample size, then it is likely to be significant at a larger sample size (Tversky & Kahneman, 1971). Indeed, simulations show that requiring significance multiple times does not correct the inflation of type I errors. Figure 5 plots the type I error rate for simulated experiments in which a researcher decides to stop only after observing multiple significant effects (Footnote 6). If the decision rule is to collect data until two tests are significant at p ≤ .05, the type I error rate is .12. In the most extreme case, the type I error rate is still inflated even after observing “preliminary” significant effects five times.

Recently, several researchers have argued for the use of Bayesian statistics in psychological science (for recent discussions of Bayesian methods, see Gallistel, 2009; Kruschke, 2010; Masson, 2011; Rouder et al., 2009; Wagenmakers, 2007; Wetzels et al., 2011). These arguments are relevant to the present discussion because Bayesian methods are often touted as robust to stopping rules (Bernardo & Smith, 1994; Edwards, Lindman, & Savage, 1963; Kadane, Schervish, & Seidenfeld, 1996). For example, Edwards et al. stated, “It is entirely appropriate to collect data until a point has been proven or disproven, or until the data collector runs out of time, money, or patience” (p. 193). Such statements clearly imply that BFs can be used post hoc to reevaluate previously published results collected under the NHST framework, without concern for how the data were collected. Such an approach has been undertaken in a series of recent papers. For example, Wagenmakers, Wetzels, Borsboom, and van der Maas (2011; see also Rouder & Morey, 2011) used BFs to reanalyze results published by Bem (2011) to argue against the existence of psi. Likewise, Wetzels et al. reanalyzed all t tests that appeared in 2007 issues of Psychonomic Bulletin & Review and the Journal of Experimental Psychology: Learning, Memory and Cognition and showed that 70 % of results reported as significant with .01 < p < .05 provided only anecdotal support against the null. Newell and Rakow (2011) analyzed 16 experiments to evaluate the support for the provocative idea that unconscious thought can yield better decision outcomes than deliberation (see Dijksterhuis, Bos, Nordgren, & Van Baaren, 2006) and concluded that the data favored the null hypothesis, with a BF = 9:1. 
Other examples of the use of Bayesian analysis include Gallistel (2009) and Rouder et al. (2009), in which several empirical findings were reanalyzed to examine the possibility that the data, previously reported as either significant or nonsignificant using NHST methods, support the null hypothesis.

As remarkable as these reanalyses are, the conclusions, which are based on the post hoc application of BF to data collected within a NHST framework, assume that the BF is unaffected by data collection heuristics. This assumption is challenged by Rosenbaum and Rubin’s (1984) demonstration that other forms of Bayesian analyses are affected by data-dependent stopping rules. Is the BF robust to the types of behavior that our participants engaged in?

The answer to this question is no! Figures 6 and 7 plot the distribution of the natural logarithm of the BF for two effect sizes (d = 0.0 and d = 0.5) for each heuristic rule (see Footnote 7). In these graphs, a value of 0 is equivalent to a BF = 1.0, which corresponds to equal evidence for the null and alternative hypotheses. Values greater than 0.0 are taken as evidence for the null hypothesis, whereas values less than 0.0 are taken as evidence in favor of the alternative, with the strength of evidence for the null and alternative given by the magnitude (see Jeffreys, 1961; Rouder et al., 2009). Heuristics based on the p value (Fig. 6) led to pronounced distortions in the distributions and the resulting interpretation of the strength of evidence in favor of the null or alternative hypotheses. Figure 7 plots the log distributions of BFs for a set of decision heuristics based on the magnitude of the BF (e.g., terminate data collection when the evidence favors the null or alternative), as opposed to the p value. Here, too, the distribution of BFs is sensitive to the stopping rule (cf. Rosenbaum & Rubin, 1984). As is evident, if a researcher were to consistently adopt a stopping policy in which data collection is terminated only after reaching a BF = 1:5 in favor of the alternative, then the observed distribution of BFs for this researcher would necessarily be truncated to exclude the BF values beyond the researcher’s stopping threshold.
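The log scale used in these figures can be made concrete with a short sketch of the BIC approximation described in Appendix 3. The code assumes the commonly used form BIC = N ln(1 − R²) + k ln(N), with R² estimated from the t statistic, and it assumes the BF is expressed as evidence for the null relative to the alternative, so that ln(BF) > 0 favors the null. The function names are hypothetical, and this is an illustration rather than the authors' implementation.

```python
import math


def bf01_from_t(t, n1, n2):
    """Approximate Bayes factor (null over alternative) from a t statistic.

    Uses the BIC approximation with one free parameter under H1 (k = 1)
    and none under H0 (k = 0), for which the BIC is 0.
    """
    n_total = n1 + n2
    df = n_total - 2
    r2 = t * t / (t * t + df)  # squared point-biserial correlation
    bic1 = n_total * math.log(1.0 - r2) + 1 * math.log(n_total)
    bic0 = 0.0
    return math.exp((bic1 - bic0) / 2.0)


def posterior_h0(bf01):
    """Posterior probability of H0 under equal priors, p(H0) = p(H1) = .5."""
    return bf01 / (1.0 + bf01)


if __name__ == "__main__":
    for t in (0.0, 2.0, 5.0):
        bf = bf01_from_t(t, 50, 50)
        print(t, math.log(bf), posterior_h0(bf))
```

Under this approximation a t of exactly 0 gives a BF equal to the square root of the total sample size, so the maximum attainable evidence for the null grows only slowly with N.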

Fig. 6 Distributions of log(BF) for p-value-based data collection heuristics. The two left panels show distributions at varying low and low or high thresholds for an underlying effect size of d = 0.0 (upper) and d = 0.5 (lower), and the two right panels show distributions at varying high thresholds. In all panels, the solid line represents a purist policy of sampling until completion

Fig. 7 Distributions of log(BF) for BF-based data collection heuristics. The two left panels show distributions at varying low thresholds for an underlying effect size of d = 0.0 (upper) and d = 0.5 (lower), and the two right panels show distributions at varying low or high thresholds. In all panels, the solid line represents a purist policy of sampling until completion

Exploring the Bayesian stopping rules further, Fig. 8 compares the results from simulations of a purist data collector (left panels) and of an optional stopper (right panels), conditionalized on sample size (where the sample size obtained after a threshold-based decision to terminate sampling is denoted n-prime; see Footnote 8). First, the distribution of BFs when sampling to completion at some sample size (e.g., n = 30) does not equal the expectation of the BF distribution based on “selected” sample sizes (e.g., n-prime = 30), where the distribution of BF is “missing” values between the stopping thresholds (see Fig. 8, top two panels). Thus, two experimenters with different intentions will show qualitatively different distributions of BFs in the long run.

Fig. 8 Distributions of log(BF) for purist (left panels) and optional stopping (right panels) heuristics, for equal sample sizes (n = n-prime). Top panels show distributions for which the ultimate sample size is 30 per group, and bottom panels for 100 per group. The vertical markers indicate the optional stopping thresholds of 5:1 and 1:5

However, the simulations also show that BFs are affected by what is in the researcher’s mind even when they do not intervene in data collection. In all of our simulations, our simulated researchers continued sampling until the BF breached the stopping threshold or until the sample size reached 100 observations per group. One might expect that sampling 100 observations per group would result in identical BF distributions regardless of the researcher’s stopping policy, because the researcher did not stop data collection. However, for the researcher who intended to stop but did not, sampling 100 observations per group (n-prime = 100) is conditional on having not breached the stopping threshold at a smaller sample size, and therefore, the expected distribution is missing experiments that were terminated prematurely. This is illustrated in comparing the bottom two panels of Fig. 8: The distribution of BFs for the researcher who has an optional stopping heuristic in mind but samples to completion anyway (Fig. 8, bottom-right panel) deviates substantially from that which obtains in the absence of an optional stopping heuristic (Fig. 8, bottom-left panel). This is analogous to situations where it looks like researchers have sampled-to-completion (e.g., run an n to the number they proposed in a grant), but because they would have stopped (if they could have), the distribution of BFs is grossly distorted. In other words, the distributions of the BFs are sensitive not only to the decisions that scientists make, but also to their intentions, even when they do not intervene in data collection!
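The conditioning argument can be sketched in a few lines of simulation. The code below is not the code behind Fig. 8. It assumes d = 0.5, a look after every 10 observations per group, thresholds of 5:1 and 1:5, a cap of 100 per group, and the BIC approximation to the BF expressed as evidence for the null over the alternative. The function names and the number of simulated experiments are hypothetical.

```python
import math
import random

LOG5 = math.log(5.0)  # stopping thresholds of 5:1 and 1:5 on the log scale


def log_bf01(t, n):
    """ln(BF) for the null via the BIC approximation; n is per group."""
    n_total = 2 * n
    r2 = t * t / (t * t + (n_total - 2))
    return 0.5 * (n_total * math.log(1.0 - r2) + math.log(n_total))


def t_stat(s1, q1, s2, q2, n):
    """Pooled-variance t from running sums (s) and sums of squares (q)."""
    m1, m2 = s1 / n, s2 / n
    pooled_var = ((q1 - n * m1 * m1) + (q2 - n * m2 * m2)) / (2 * n - 2)
    return (m1 - m2) / math.sqrt(2.0 * pooled_var / n)


def run(rng, n_max, optional, d=0.5, step=10):
    """Return (final n per group, final ln BF) for one simulated experiment."""
    s1 = q1 = s2 = q2 = 0.0
    n = 0
    lbf = 0.0
    while n < n_max:
        for _ in range(step):
            x, y = rng.gauss(d, 1.0), rng.gauss(0.0, 1.0)
            s1 += x
            q1 += x * x
            s2 += y
            q2 += y * y
        n += step
        lbf = log_bf01(t_stat(s1, q1, s2, q2, n), n)
        if optional and abs(lbf) >= LOG5:
            break  # the optional stopper quits once a threshold is breached
    return n, lbf


def simulate(n_sims=3000, seed=7):
    """Compare purists fixed at n = 30 with optional stoppers ending at 30."""
    rng = random.Random(seed)
    purist_30 = [run(rng, 30, False)[1] for _ in range(n_sims)]
    stopped = [run(rng, 100, True) for _ in range(n_sims)]
    stopper_30 = [lbf for n, lbf in stopped if n == 30]
    stopper_100 = [lbf for n, lbf in stopped if n == 100]
    return purist_30, stopper_30, stopper_100


if __name__ == "__main__":
    purist_30, stopper_30, stopper_100 = simulate()
    inside = sum(abs(v) < LOG5 for v in purist_30)
    print("purists at n = 30 with ln BF between thresholds:", inside)
    print("stoppers ending at n-prime = 30:", len(stopper_30))
    print("stoppers reaching n-prime = 100:", len(stopper_100))
```

Every optional stopper who ends at n-prime = 30 has, by construction, a BF at or beyond a threshold, whereas purists at n = 30 can land anywhere. The experiments that reach n-prime = 100 are likewise a selected subset: only those that never breached a threshold at an earlier look.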

Discussion

The belief that small numbers are representative of the larger population can lead people to commit “a multitude of sins against the logic of statistical inference in good faith” (Tversky & Kahneman, 1971, p. 110). According to Tversky and Kahneman (1971), intuitions about sampling processes are fundamentally wrong, even among scientists. Consistent with this assertion, we show that scientists are much too willing to embrace results based on small samples, terminating data collection prematurely when the preliminary findings are significant and abandoning experiments when preliminary findings are not promising.

While the results of our study suggest that a relatively large proportion of our participants were willing to engage in optional stopping within the simulated task, we caution that there are limitations to the data set. Our analyses of our behavioral data may represent an upper bound on the percentage of researchers using a p-value-based stopping decision when conducting their own studies; participants may behave very differently when faced with similar decisions in real data collection contexts, instead of our experimental context. Furthermore, our sample was not a random or representative sample of scientists, and it is theoretically possible that those who chose to respond and complete the study were relatively more likely to engage in optional stopping.

Nevertheless, our data on scientists’ descriptions in their own words of their real-life decisions to quit data collection reveal a disturbing phenomenon of researchers basing sampling decisions on the p value. In our sample, 25.79 % of respondents explicitly described basing stopping decisions on p values, indicating that the use of optional stopping rules and, therefore, also the consequences of such rules exist with a nontrivial prevalence in real research studies.

We interpret the results of our behavioral study cautiously as indicating that a relatively large percentage of scientists may utilize optional stopping rules of one form or another in the context of doing research, but not that any individual researcher will necessarily always engage in optional stopping. We note, however, that optional stopping comes in many forms and, in some cases, a researcher may post hoc label a study a “pilot” when the effect doesn’t appear to manifest after a small number of subjects. In such cases, a researcher might stop data collection and retool the experiment to make the manipulation stronger or to rethink the experimental design. Pilot studies are useful, but there is a fine line between a true pilot study and optional stopping, which rests with the researcher’s intention. If the intention prior to starting an experiment was to complete the experiment, then we argue that stopping and retooling the experiment is an instance of optional stopping.

Of course, there are many potential heuristics that people might employ to guide sampling decisions in real experiments, many of which may actually characterize how our own participants approached our simulated data collection task. For example, one plausible heuristic is a trending heuristic, in which participants might decide to continue sampling as long as the p value continues to decrease until significance is reached or until the p value shows an increasing trend. An exhaustive test of all possible heuristics was not within the scope of this study, but any optional stopping rule warrants further investigation.

What might drive optional stopping?

One need not postulate that decisions to terminate sampling prematurely are an act of deception, since responsible researchers can easily fall prey to the allure of small sample results. To be sure, there are many reasons why a prudent and well-intentioned researcher might implement stopping heuristics. For example, pragmatically, it may seem reasonable to look at the data every now and again to assess whether the experiment is “working” as planned and to terminate those experiments that, on the basis of a preliminary analysis, appear to be destined for failure. Scientists may simply be unaware of the consequences of using optional stopping heuristics in such contexts (Simmons et al., 2011). Second, scientists may be intrinsically more motivated to find effects than to indicate that effects do not exist. In a task in which participants were asked to verify whether statements were true, Wallsten, Bender, and Li (1999) found that participants had a general bias to say “true” and that bias did not dissipate with more experience with the task. Similarly, Kareev and Trope (2011) showed that when participants attempted to determine whether statistical relationships exist, they were biased to set their decision criteria so as to increase hits and decrease the number of misses, at the cost of increasing false alarms and decreasing correct rejections. That is, participants’ subjective cost for misses appeared to exceed that of false alarms, and the subjective value of hits appeared to exceed that of correct rejections. These tendencies may predispose individuals to engage in optional stopping.

Regardless of intentions, scientists must still manage to conduct research with limited resources. While stopping decisions based on considerations that lie outside the data, such as the availability of resources (e.g., money, time, or size of the participant pool), are not theoretically subject to the same consequences as data-dependent optional stopping, it seems likely that they might influence the adoption and parameterization of data-dependent rules. Thus, we believe that “data-independent” constraints may interact with data-dependent stopping behavior. In an era when the pressure to publish is exceedingly high and data collection can be costly, in terms of both expense and time, individuals with limited resources may be forced to make trade-offs to choose data collection policies that seemingly “optimize” their efficiency. How these trade-offs should be made, if at all, is a matter for future inquiry and debate.

Implications

The results of the work presented in this article have a number of important implications. First, the impact of stopping heuristics on scientific outcomes cannot be captured solely in terms of an inflation of the false-positive rate (cf. Simmons et al., 2011). Without knowing the precise heuristic that individual researchers use to govern their decisions about when to terminate a study, one cannot accurately assess the scope and type of biases that manifest in published reports (see also Pfeiffer, Rand, & Dreber, 2009). Individuals who use different heuristics yield qualitatively different patterns of false positives, false negatives, effect sizes, and BFs. Some heuristics yield increased false positives, but others yield increased false negatives; some heuristics yield inflated effect sizes, whereas others yield conservative estimates of the effect sizes.

Second, Bayesian analysis is not the magic elixir it is sometimes made out to be. One cannot simply apply Bayesian statistics to any old data set and be confident that the outcome is free of bias. As our results illustrate, the BF distribution shows substantial irregularities, which vary depending on which heuristic was used to collect data. Thus, prior analyses in which BFs are computed post hoc on data collected under the NHST framework, such as those by Wetzels et al. (2011), are not interpretable if researchers used a data-dependent stopping heuristic. This does not disparage the validity of Bayesian methods or discourage their use by psychologists. Indeed, there are many reasons to abandon NHST in favor of Bayesian methods (see Raftery, 1995; Wagenmakers, 2007). However, that they can be used to fix biases introduced by data-dependent stopping heuristics is not one of them.

Third, scientific conclusions are closely tied to the decisions that researchers make throughout the course of conducting a study. Seemingly responsible decisions, such as deciding to terminate a study that appears likely to result in a null finding, can severely undermine scientific progress in the long run. While there have been recent discussions in the literature about publication biases and the focus on publishing significant findings, there also appears to be a problem with biases inherent in scientists’ decision making that have equally broad consequences: namely, the decision to terminate studies prematurely when a null result appears imminent. We speculate that a great number of studies go uncompleted due to nonsignificant preliminary results, many of which likely reflect false negatives.

Fourth, stopping heuristics have downstream consequences on meta-analytic studies. While it is clear that meta-analyses can be biased when they are based selectively on published studies (Ferguson & Brannick, 2012), the fact that researchers use heuristics in the conduct of research provides another source of bias. Because the heuristics lead to biases in estimated effect sizes, which are the unit of analysis in meta-analyses, the resulting meta-analysis will also be biased—even if one were able to correct for publication bias.

Summary

The analyses presented in this article indicate that the product of scientific research is inextricably linked to the behavior of the scientists doing the research. Scientists are people, too, and they (we) are susceptible to the same biases in judgment and decision making that they (we) have studied for decades. Not only can decision heuristics lead to the biases found in laboratory studies of lay decision making, but they can also lead to biases in the conduct and analysis of research.

Notes

We use the term heuristics in the broadest sense to represent informal strategies or shortcuts for decision making.

Note that the proper test to evaluate the evidence for the null hypothesis is a Bayesian test. However, because the sample size selected by participants was scaled as an ordinal variable, we know of no straightforward Bayesian test that is appropriate. Additionally, note that relatively fewer participants in the low-budget condition completed the second block of six scenarios. Thus, we are hesitant to overinterpret any apparent differences between budget sizes for the second block (see Appendix 1 for a complete report of the data).

Alternative classification criteria lead to similar results. For example, allowing one transgression (e.g., a participant who stops early in one experiment only is still classified as a purist) increases the proportion of purists from 14.6 % to 22.9 %. An additional alternative method, which is sensitive to stopping intentions, is presented in Appendix 2.

We did not include plots of effect size for LH50 and LH75, because the estimated effect sizes from these heuristics will fall between LH25 and low. Plots for H50 and H75 will fall between H25 and the purist strategy.

The simulation methodology was identical to that of the low optional stopper, except that the algorithm did not stop until after k total significant effects were observed, where k was manipulated between 1 and 5.

Bayes factors reported in Figs. 6 and 7 were computed using the method outlined by Kass and Raftery (1995; see also Masson, 2011; Wagenmakers, 2007) and using the Bayesian information criterion as input (see Appendix 3 for formulae). We note, however, that the general pattern of results is also obtained using the method developed by Rouder et al. (2009), which makes different assumptions about the prior distribution.

The simulation methodology was identical to that of the purist and low–high optional stoppers described on pp. 16–17. Purist simulations were each based on 10,000 experiments for which the sample size was determined a priori. Optional stopping simulations were based on 100,000 experiments conducted using a low–high stopping heuristic (5:1 and 1:5). In these simulations, researchers continue collecting data until the BF breaches the stopping threshold or the sample size reaches 100 observations per group. The experiments were then filtered to include only those that resulted in n1 = n2 = 30 (top two panels) and n1 = n2 = 100 (bottom two panels). For all simulations, the underlying effect size was d = 0.5.

References

Bem, D. J. (2011). Feeling the future: Anomalous retroactive influences on cognition and affect. Journal of Personality and Social Psychology, 100, 407–425.

Bernardo, J. M., & Smith, A. F. M. (1994). Bayesian theory. New York: Wiley.

Carey, B. (2011, November 2). Fraud case seen as a red flag for psychology research. The New York Times. Retrieved from http://www.nytimes.com

Dijksterhuis, A., Bos, M. W., Nordgren, L. F., & Van Baaren, R. B. (2006). On making the right choice: The deliberation without attention effect. Science, 311, 1005–1007.

Edwards, W., Lindman, H., & Savage, L. J. (1963). Bayesian statistical inference for psychological research. Psychological Review, 70, 193–241.

Ferguson, C., & Brannick, M. (2012). Publication bias in psychological science: Prevalence, methods for identifying and controlling, and implications for the use of meta-analyses. Psychological Methods, 17, 120–128.

Fiedler, K. (2011). Voodoo correlations are everywhere—not only in neuroscience. Perspectives on Psychological Science, 6, 163–171.

Francis, G. (2012). Too good to be true: Publication bias in two prominent studies from experimental psychology. Psychonomic Bulletin & Review, 19, 151–156.

Frick, R. W. (1998). A better stopping rule for conventional statistical tests. Behavior Research Methods, Instruments, & Computers, 30, 690–697.

Gallistel, C. R. (2009). The importance of proving the null. Psychological Review, 116, 439–453.

Ioannidis, J. P. A. (2008). Why most discovered true associations are inflated. Epidemiology, 19, 640–648.

Jeffreys, H. (1961). Theory of probability. Oxford: Oxford University Press.

Jennison, C., & Turnbull, B. W. (2000). Group sequential methods with applications to clinical trials. Boca Raton: CRC Press.

John, L. K., Loewenstein, G., & Prelec, D. (2012). Measuring the prevalence of questionable research practices with incentives for truth-telling. Psychological Science, 23, 524–532.

Kadane, J. B., Schervish, M. J., & Seidenfeld, T. (1996). Reasoning to a foregone conclusion. Journal of the American Statistical Association, 91, 1228–1235.

Kareev, Y., & Trope, Y. (2011). Correct acceptance weighs more than correct rejection: A decision bias induced by question framing. Psychonomic Bulletin & Review, 18, 103–109.

Kass, R. E., & Raftery, A. E. (1995). Bayes factors. Journal of the American Statistical Association, 90, 377–395.

Kruschke, J. K. (2010). What to believe: Bayesian methods for data analysis. Trends in Cognitive Sciences, 14, 293–300.

Masson, M. E. J. (2011). A tutorial on a practical Bayesian alternative to null-hypothesis significance testing. Behavior Research Methods, 43, 679–690.

Newell, B. R., & Rakow, T. (2011). Revising beliefs about the merit of unconscious thought: Evidence in favor of the null hypothesis. Social Cognition, 29, 711–726.

Pfeiffer, T., Rand, D. G., & Dreber, A. (2009). Decision-making in research tasks with sequential testing. PLoS ONE, 4, e4607.

Raftery, A. E. (1995). Bayesian model selection in social research. Sociological Methodology, 25, 111–163.

Rosenbaum, P. R., & Rubin, D. B. (1984). Sensitivity of Bayes inference with data-dependent stopping rules. American Statistician, 38, 106–109.

Rouder, J. N., & Morey, R. D. (2011). A Bayes-factor meta analysis of Bem’s ESP claim. Psychonomic Bulletin & Review, 18, 682–689.

Rouder, J. N., Speckman, P. L., Sun, D., Morey, R. D., & Iverson, G. (2009). Bayesian t tests for accepting and rejecting the null hypothesis. Psychonomic Bulletin & Review, 16, 225–237.

Simmons, J., Nelson, L. D., & Simonsohn, U. (2011). False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science, 22, 1359–1366.

Simonsohn, U. (in press). Just post it: The lesson from two cases of fabricated data detected by statistics alone. Psychological Science.

Soyer, E., & Hogarth, R. M. (2012). The illusion of predictability: How regression statistics mislead experts. International Journal of Forecasting, 28, 695–711.

Strube, M. J. (2006). A program for demonstrating the consequences of premature and repeated null hypothesis testing. Behavior Research Methods, 38, 24–27.

Tversky, A., & Kahneman, D. (1971). Belief in the law of small numbers. Psychological Bulletin, 76, 105–110.

Wagenmakers, E.-J. (2007). A practical solution to the pervasive problems of p values. Psychonomic Bulletin & Review, 14, 779–804.

Wagenmakers, E.-J., Wetzels, R., Borsboom, D., & van der Maas, H. L. J. (2011). Why psychologists must change the way they analyze their data: The case of psi: Comment on Bem (2011). Journal of Personality and Social Psychology, 100, 426–432.

Wald, A. (1947). Sequential analysis. New York: John Wiley and Sons.

Wallsten, T. S., Bender, R. H., & Li, Y. (1999). Dissociating judgement from response processes in statement verification: The effects of experience on each component. Journal of Experimental Psychology: Learning, Memory, and Cognition, 25, 96–115.

Wetzels, R., Matzke, D., Lee, M. D., Rouder, J. N., Iverson, G. J., & Wagenmakers, E.-J. (2011). Statistical evidence in experimental psychology: An empirical comparison using 855 t tests. Perspectives on Psychological Science, 6, 291–298.

Acknowledgments

This work was supported in part by grant BCS 1030831 awarded to M.R.D. The authors thank Thomas Wallsten, Bob Slevc, members of the Decision, Attention, and Memory lab, and students in the graduate statistics course at UMD for their feedback.

Appendices

Appendix 1 Data collection decisions and budget size

Comparisons of data collection decisions within the simulated experiment scenarios were made across high- and low-budget groups. These analyses were conducted separately for the first block of six simulated experiments and the second block, due to uneven sample sizes in the latter block (participants with low budgets were less likely to complete more than six simulated experiments). For each comparison across six scenarios, the critical alpha level was calculated as p < .008 using the Holm–Bonferroni adjustment. Those who checked only at the end show no differences due to initial budget size (all Mann–Whitney Us > 166.00, Zs < 2.10, ps > .036); however, comparisons within the monitor group show that those with high budgets collected significantly more data than did those with low budgets in four of the latter six simulated experiments (repetitions of B, C, E, F, all Us < 1,373.00, Zs > 3.068, ps < .002; others, all Us > 2,406.5, Zs > 2.24, ps > .025). There were no significant differences within the first six scenarios encountered. Median sample sizes collected by participants are shown in Table 4, according to budget size and initial data collection methodology choice for each scenario.

Appendix 2 Alternative classification criteria

Participants’ data collection policies in the experimental task were classified according to stopping decisions: A participant was considered to have stopped data collection when he or she chose to end data collection, regardless of intentions to abandon or publish the results. However, for the purposes of comparison with the hypothetical choices, we also report alternative estimated proportions of abandoning studies based on preliminary high p values and publishing results based on preliminary low p values. Using this method, 61.2 % of participants could be classified, with 46.5 % using a heuristic in which sampling decisions were based on a low or a high p value (Table 5).

Appendix 3 Simulation details

The BF quantifies the probability that the null is true given the data, as compared with the probability that the alternative is true. This is in contrast to the NHST framework, under which the decision to reject or retain the null is often taken as a binary one. The BF expresses the odds ratio of

Under the equal prior where p(H1) = p(H0) = .5, the odds ratio can be converted into the posterior probability

An approximation of the BF can be obtained from the Bayesian information criterion (BIC; Kass & Raftery, 1995; Raftery, 1995):

where,

N is the total sample size, and k is the number of free parameters. In a two independent samples situation, there is one free parameter corresponding to the independent variable. BIC1 therefore corresponds to the value of the BIC when the independent variable is included in the model (k = 1), and BIC0 is the value of BIC when that parameter is removed (k = 0). Use of Eq. 3 to compute the BIC when k = 0 yields a value of BIC = 0. For our purposes, we estimated the value of R² using the point-biserial correlation, which itself can be estimated from the t statistic:

r_pb can also be transformed into an estimate of the effect size, Hedges’ g, expressed as the number of standard deviations separating the two groups:
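The displayed equations for this appendix do not appear in the text above. As a hedged reconstruction, the relations it describes can be written in the standard forms given by the cited sources (Kass & Raftery, 1995; Raftery, 1995; Masson, 2011). The notation below is an assumption: the subscript 01 marks the BF as evidence for the null over the alternative, matching the convention that ln(BF) > 0 favors the null, and the original equation numbers are not reproduced. The conversion from r_pb to Hedges' g is omitted because its exact form cannot be recovered from the text.

```latex
\begin{align*}
\mathrm{BF}_{01} &= \frac{p(D \mid H_0)}{p(D \mid H_1)} \\[4pt]
p(H_0 \mid D) &= \frac{\mathrm{BF}_{01}}{1 + \mathrm{BF}_{01}}
  \qquad \text{when } p(H_0) = p(H_1) = .5 \\[4pt]
\mathrm{BF}_{01} &\approx
  \exp\!\left(\frac{\mathrm{BIC}_1 - \mathrm{BIC}_0}{2}\right) \\[4pt]
\mathrm{BIC} &= N \ln\!\left(1 - R^2\right) + k \ln N \\[4pt]
r_{pb} &= \sqrt{\frac{t^2}{t^2 + df}}, \qquad df = N - 2
\end{align*}
```

With k = 0 the model explains no variance, so R² = 0 and the BIC expression evaluates to 0, in agreement with the statement in the text.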

Yu, E.C., Sprenger, A.M., Thomas, R.P. et al. When decision heuristics and science collide. Psychon Bull Rev 21, 268–282 (2014). https://doi.org/10.3758/s13423-013-0495-z

DOI: https://doi.org/10.3758/s13423-013-0495-z