Abstract

We investigated the decision process underlying the detection of targets at multiple locations. In three experiments using the same observers, target location probability and attentional instructions were manipulated. A redundant-target detection task was conducted in which participants were required to detect a dot presented at one of two locations. When the dot appeared at the two locations with equal frequency (Experiment 1), those participants who were found to have limited to unlimited capacity were shown to adopt a parallel, self-terminating strategy. By contrast, those participants who had supercapacity were shown to process redundant targets in a coactive manner. When targets were presented with unequal probability, two participants adopted a parallel, self-terminating strategy regardless of whether they were informed of the target location probability (Experiment 3) or not (Experiment 2). For the remaining two participants, the strategy changed from parallel, self-terminating to serial, self-terminating as a result of the probability instructions. In Experiments 2 and 3, all the participants were of unlimited to limited capacity. Taken together, these results suggest that target location probability affects the selection of a decision strategy differently across observers and highlight the role of controlled attention in selecting a decision strategy.

Introduction

The visual environment consists of an overwhelming number of objects and events. The human capacity for selectively attending to a subset of this information enables us to guide action and decision making efficiently in this environment. Traditionally, visual attention is thought to be guided by two types of attentional cues (Chun, Golomb, & Turk-Browne, 2011; Corbetta & Shulman, 2002; Jonides, 1981; Pashler, Johnston, & Ruthruff, 2001; Posner, 1980; Yantis, 2000): (1) endogenous, top-down attentional cues, which arise from knowledge and experience, such as task goals and instructions (Hopfinger, Buonocore, & Mangun, 2000; E. K. Miller & Cohen, 2001), and (2) exogenous, bottom-up cues, which reflect perceptual salience and uniqueness of external stimuli, such as abrupt onsets (Yantis & Jonides, 1984) and singletons (Theeuwes, 1992). Both types of attentional cues play an important role in directing spatial attention to a cued location, which can boost target processing when the target appears at the cued location.

In addition to exogenous and endogenous cues, people are sensitive to the spatial and temporal probabilities that govern target location and presence (Geng & Behrmann, 2002, 2005; Jones & Kaschak, 2012; Walthew & Gilchrist, 2006). The target location probability denotes a target appearing at one location more often than at other locations and is regarded as an attentional cue that directs spatial attention in a way that cannot be explained within the typical endogenous/exogenous framework (Geng & Behrmann, 2002, 2005; Zhao, Al-Aidroos, & Turk-Browne, 2013). The target location probability effect is defined as faster detection of targets at high probability locations compared with targets at low probability locations. This effect has been consistently demonstrated in a variety of tasks (Baker, Olson, & Behrmann, 2004; Chun & Jiang, 1998; Fiser & Aslin, 2001; Geng & Behrmann, 2002; Hoffmann & Kunde, 1999; Hughes & Zimba, 1985; Jones & Kaschak, 2012; Kinchla, 1977; Kingstone & Klein, 1991; Lambert & Hockey, 1986; J. Miller, 1988; Posner, Snyder, & Davidson, 1980; Reder, Weber, Shang, & Vanyukov, 2003; Saffran, 2002; Shaw & Shaw, 1977; Shomstein & Yantis, 2004; Summerfield, Lepsien, Gitelman, Mesulam, & Nobre, 2006; Walthew & Gilchrist, 2006; Yantis & Egeth, 1999). For example, Geng and Behrmann (2005) asked participants to search for a target and make a response based on its identity. Results showed that eye movements proceeded to high-probability locations over low-probability locations, and identification of a target at the high-probability locations was more accurate than identification at the low-probability locations, suggesting facilitated target detection through the development of a target-location association. Using a contextual cuing paradigm, Chun and Jiang (1998) also reported facilitated target detection through past experience of spatial regularities. 
Participants were faster to find a target when it appeared at consistent locations within a spatial configuration that repeated across blocks than when it appeared in a novel spatial configuration. Although the effect of learned spatial regularities has been widely investigated in the visual search literature, with a focus on how spatial probability affects attention and target processing, the specific details of the information processing system underlying these effects are less well explored. We examined the time course of processing when the target location probability is systematically manipulated in the detection of redundant targets.

Detection of redundant targets

Detection has a prominent history in the study of mental processing, particularly when attention is evenly distributed across space. Questions of interest include: (1) the minimal amount of stimulation necessary to support reliable detection accuracy (i.e., detection thresholds, Blackwell, 1953); (2) the interaction between stimulation and response bias, particularly when target presentation is uncertain (i.e., as explained by signal detection theory, Green & Swets, 1966; Peterson, Birdsall, & Fox, 1954); and (3) the latency of simple detection phenomena, focusing on the aspects of stimulation and response that explain the observed variability in response times (Luce, 1986). This last approach has provided insight into the mechanisms underlying detection. For instance, several authors (Burbeck & Luce, 1982; Luce, 1986; Smith, 1995) have utilized a psychophysically inspired model that combines sustained and transient signal detectors (i.e., detectors sensitive to signals that are stable over time and detectors sensitive to onsets and offsets, respectively) to explain observed detection hazard functions for suprathreshold stimuli. These hazard functions rise to a peak and then decay (consistent with the detection of transient signals) to a non-zero asymptote (consistent with the detection of sustained signals). Such a model is supported by a wide variety of psychophysical (see Smith, 1995 for a review) and physiological data (Cleland, Dubin, & Levick, 1971; Livingstone & Hubel, 1988). The implication is that both transient and sustained mechanisms race in response to stimulation; however, different manipulations such as high temporal and low spatial frequency or low temporal and high spatial frequency (Robson, 1966; Watson & Nachmias, 1977) might elicit contributions from solely the transient or sustained detectors, respectively.

Consider the role these detectors would play in the detection of redundant targets presented in different spatial locations. Rather than having one detector for each location, both mechanisms would pool activity across locations to drive the detection decision. This suggests that the decision process follows what has been termed a coactive processing architecture (Fific, Little, & Nosofsky, 2010; Miller, 1982; Townsend & Nozawa, 1995; Townsend & Wenger, 2004). Stated plainly, the idea underlying coactive detection of redundant targets is that there is a single source of evidence, created by pooling information across locations, driving the detection decision, even if this evidence in each location is initially processed by parallel sustained and transient detection mechanisms (Smith, 1995).

The assumption that redundant-target detection decisions proceed coactively is not supported by data showing that detection decisions have limited capacity. For instance, Townsend and Nozawa (1995) measured response times from individual observers tasked with detecting dichoptically presented single or double suprathreshold luminance targets. Using a measure of capacity based on the ratio of integrated hazard functions from redundant and single targets (i.e., the capacity coefficient, see below), capacity was consistently found to be much more limited than would be expected under an unlimited-capacity independent parallel race model in their Experiment 1. Note that this parallel baseline model is one in which the processing channels are organized by location and not by the pooled sustained or transient energy. Under these conditions, a coactive model is expected to predict better than baseline performance (i.e., supercapacity; Townsend & Nozawa, 1995; Houpt & Townsend, 2012). Intriguingly, however, in Townsend and Nozawa’s (1995) Experiment 2, supercapacity was observed. A key difference between the two experiments was that in the first experiment, the probability of a single, double, or no target was equal, but in the second experiment, target-absent trials were presented on half of the experimental trials. Hughes and Townsend (1998) found further evidence of limited-capacity processing when the target-absent trials have a low probability of occurrence. Consequently, to formulate inferences about the role of attention in detection, it is necessary to ensure that an unbiased measure of processing is available for comparison. By adopting Townsend and Nozawa’s (1995) nonparametric methodological tools (i.e., Systems Factorial Technology, SFT), we seek to characterize several key properties of the information processing system.

Information processing and the role of attention in redundant target detection

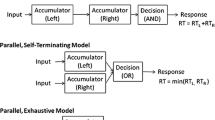

The information processing system is generally thought to be comprised of successive stages of encoding, decision making, and response. Each can be characterized by four important properties: (1) processing architecture (parallel vs. serial vs. coactive), which denotes the order of multiple-signal processing; (2) stopping rule (self-terminating vs. exhaustive), which denotes the amount of information required for decision making; (3) processing capacity (limited-capacity vs. unlimited-capacity vs. supercapacity), which denotes the variation of processing efficiency as workload increases; and (4) independence, which refers to the nature of facilitation and interference between processing channels (Townsend, Fific, & Neufeld, 2007; Townsend & Nozawa, 1995; Townsend & Wenger, 2004). Hence, as described above, while encoding of stimulus information might involve a parallel race between sustained and transient detector channels, the information from these channels concerning targets in multiple locations might be pooled into a single coactive decision process (Smith, 1995). On the other hand, under some conditions the decision process may also proceed in parallel or in serial (i.e., across locations).

Previous studies have shown that the perceptual decision process is flexible and can be affected by multiple factors, such as task instructions (Donkin, Little, & Houpt, 2013), relative saliency of external stimuli (Yang, 2011; Yang, Hsu, Huang, & Yeh, 2011), relative change probability (Yang, Chang, & Wu, 2013), and attentional focusing (Eidels, Townsend, & Algom, 2010; Yang, Little, & Hsu, 2014). For example, in Yang et al. (2014), an exogenous cue was used to cue the target location, which had 100 % (informative cue) or 50 % (uninformative cue) validity. A positive response was made when participants detected any dot (OR-rule condition). Results showed that the validity of an exogenous cue affected the processing architecture and processing capacity. When a cue was 50 % valid (Experiment 1), all of the participants adopted a parallel self-terminating processing strategy. That is, processing for both the cued and uncued locations was conducted in parallel and simultaneously and terminated as soon as either target reached the decision criterion. In addition, processing capacity ranged from unlimited to moderately limited capacity. By contrast, when a cue was 100 % valid (Experiment 2), all the participants switched to a serial self-terminating processing strategy. That is, participants first processed the cued location, and if the information was sufficient for decision making, a positive response was made (i.e., a target is detected). Otherwise, processing switched to the uncued location. Generally, capacity became much more limited in the informative-cue condition than in the uninformative-cue condition. These findings are intriguing because varying the cue validity can result in a shift from parallel to serial processing, supporting the flexibility of a decision mechanism.

We hypothesize that target location probability may affect the perceptual decision process by biasing visual attention. This bias is likely to alter the quality of information accumulation or the temporal order in which information from each stimulus location enters the decision process (Sewell & Smith, 2012). The implication is that a shift in processing from parallel to serial may be observed if the participants are able to flexibly adjust their attention to first process the information at the high-probability location. In addition, processing capacity may become more limited as the participants focus more on processing signals at high-probability locations than at low-probability locations, such that additional information from low-probability locations slows down the processing for high-probability locations. It is also possible that the target location probability manipulation may not affect all of the participants in the same way. There might be individual differences in how well an observer can utilize target location probability for effective strategy selection.

The goal of the present study was to examine the effect of target location probability on the perceptual decision process while detecting multiple targets in a display. In three experiments, participants were required to detect a target dot at two pre-specified locations. The same participants took part in each experiment so that we could track the change in strategy across the experimental manipulations. In Experiment 1, the target appeared at the two locations with equal frequency (equal-probability condition). In Experiments 2 and 3, the target appeared at one location more often than at the other location (unequal-probability condition). Participants were not told about the probability manipulation until Experiment 3, in which it was described through explicit instructions. Experimental design and data analysis followed SFT to allow for strong inferences about the characteristics of information processing.

We hypothesized that the perceptual decision process is sensitive to target location probability. First, by contrasting the results of Experiment 1 with those of Experiments 2 and 3, we directly examined sensitivity to target location probability, focusing on processing architecture and processing capacity. When target location probability is equal across locations (Experiment 1), the system should not favor any location, leading to a parallel processing strategy. In contrast, when target location probability is unequal across locations (Experiments 2 and 3), participants may give priority to the high-probability location and process it first, leading to a serial processing strategy. In terms of processing capacity, we expected that biased attention can boost the processing for the high-probability location. Processing additional signals at the low-probability location may demand additional cognitive resources, thus revealing a limited-capacity system. Second, comparing the results of Experiments 2 and 3 enabled us to examine whether the strategy selection involves explicit awareness. We expected to observe individual differences in selecting a decision strategy because variation in the participants’ processing capacity may constrain the controlled attention available for applying a decision strategy.

Experiment 1

In Experiment 1, we examined how participants detect redundant targets when the targets appeared at two locations with equal presentation frequency (hereafter termed the equal-probability condition). Based on previous studies (Townsend & Eidels, 2011; Townsend & Nozawa, 1995), processing in a detection task in which no attentional cue is given to the presence or absence of a target is typically identified as parallel and self-terminating, with limited capacity. Therefore, in this experiment, we expected that the participants would adopt a parallel, self-terminating processing strategy with limited capacity to process signals at multiple locations.

Method

Participants

Four participants (CHY, CHC, TYC, and HLM) from National Cheng Kung University participated in Experiment 1. One of the authors (TYC) was a participant. Ages ranged from 19 to 21 years; all had normal or corrected-to-normal vision. Each participant completed five sessions, each lasting approximately 40 minutes. The participants provided written informed consent prior to the experiment and received NTD 120 per hour after they completed the experiment.

Apparatus

The experiment was conducted in a dimly lit room. A personal computer with a 2.40-GHz Intel Pentium IV processor controlled the stimulus display and recorded manual responses via a mouse button press. The display resolution was 1024 × 768 pixels. The visual stimuli were presented on a 19-inch CRT monitor with a refresh rate of 85 Hz. The experiment was run with E-Prime 1.1 (Schneider, Eschman, & Zuccolotto, 2002). The viewing distance was 60 cm. A chin-rest was used to prevent head movements.

Design and stimuli

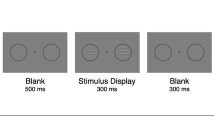

The participants were instructed to detect a target. The target was a light dot with a diameter of 0.2°, which could appear at two locations (left and right; the distance between the two locations was 16°). Following SFT (Townsend & Nozawa, 1995), we adopted the double factorial design. Specifically, we created four types of test trials: (1) redundant-target condition: both locations contained a light dot; (2) left-only condition: only the left location contained a light dot; (3) right-only condition: only the right location contained a light dot; (4) target-absent condition: neither location contained a light dot. Conditions (2) and (3) were termed the single-target conditions. In addition, we manipulated the brightness of the light dot. The luminance of the dot in the high-brightness condition was 0.3 cd/m² and the luminance of the dot in the low-brightness condition was 0.038 cd/m². Therefore, there were four types of redundant-target trials: (1) HH: both left and right dots were of high brightness; (2) HL: the left dot was of high brightness and the right dot was of low brightness; (3) LH: the left dot was of low brightness and the right dot was of high brightness; (4) LL: both left and right dots were of low brightness. There were four types of single-target trials: (1) HX: only the left location contained a dot of high brightness; (2) LX: only the left location contained a dot of low brightness; (3) XH: only the right location contained a dot of high brightness; (4) XL: only the right location contained a dot of low brightness. See Fig. 1 for an illustration.

Each participant completed five sessions, each containing 10 blocks. Each block comprised 80 trials, with the four test conditions (redundant-target, left-only, right-only, and target-absent) occurring with equal frequency. Table 1 presents the trial frequency for each condition within a block. Under this arrangement, the target location probability was equal across locations, such that information from the two locations was equally salient and important for target detection. Moreover, a potential anticipation of redundant-target trials was minimized because only one-fourth of the trials contained dots at both locations (Fiedler, Schröter, & Ulrich, 2011; Mordkoff & Yantis, 1991; Townsend & Nozawa, 1995).

Procedure

Each trial began with a fixation cross presented at the center of the screen for 350 ms (see Fig. 2 for an illustration of the experimental procedure), accompanied by a 500-Hz pure tone. The test display was presented after a foreperiod randomly chosen from 50 ms to 850 ms. Participants were required to click the left mouse button if they detected any dots; otherwise, they had to click the right mouse button. The test display disappeared once a response was made or after 2000 ms. The inter-trial interval (ITI) was 1000 ms. Both accuracy and reaction time were emphasized. When an error was made, a feedback display showing “Wrong!” was presented to alert the participant.

Data analysis

SFT was utilized to infer the processing characteristics of the decision mechanism. We adopted a nonparametric bootstrapping method (Van Zandt, 2000) and utilized statistics from the sft R package (Houpt, Blaha, McIntire, Havig, & Townsend, 2014) to analyze the reaction time data and make inferences. All analyses were conducted separately for each individual.

We first tested the selective-influence assumption, a critical assumption of SFT (Dzhafarov, 1999; Kujala & Dzhafarov, 2008; Townsend & Thomas, 1994). In the current experimental setting, the selective-influence assumption holds when the brightness manipulation at a location influences only the information processing speed of that location. Two methods were used to verify this assumption at the level of mean reaction time. First, using the data of the single-target conditions, t tests were conducted on the mean reaction times between the high-brightness condition and the low-brightness condition for each location. If the selective-influence assumption holds, we would observe significant differences between the HX and LX conditions and between the XH and XL conditions. Second, a 2 (high/low brightness of the left dot) × 2 (high/low brightness of the right dot) analysis of variance (ANOVA) was conducted on the mean reaction times of the redundant-target conditions. We would expect to observe significant main effects of the two factors. To test this assumption at the reaction time distribution level, we compared the reaction time distributions of the four redundant-target conditions. First, we examined whether the survivor functions gradually shifted from the HH to the LL condition. Next, we conducted Kolmogorov-Smirnov (K-S) tests at two levels to compare the distributions of the four redundant-target conditions. The ordering of the reaction time distributions was examined by using eight two-sample K-S tests (i.e., HH > HL, HH > LH, HL > LL, LH > LL, HH < HL, HH < LH, HL < LL, and LH < LL). If the selective-influence assumption holds, we would expect the former four tests to be significant and the latter four tests to be nonsignificant. See Houpt et al. (2014) for more details. In addition, we tested the reaction time distributions at the factor level. 
We would expect to find a significant difference between the marginal distributions in the left, high-brightness condition (HH and HL) and the left, low-brightness condition (LH and LL) to support the effective brightness manipulation on the left dot, as well as a significant difference between the marginal distributions in the right, high-brightness condition (HH and LH) and the right, low-brightness condition (HL and LL) to support the effective brightness manipulation on the right dot.
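The distribution-level ordering check described above can be illustrated with a small sketch. It is not the sft R package routine used in the study: it assumes that a test written as "A > B" asks whether the empirical CDF of condition A lies above that of condition B at some time (A is stochastically faster), and it uses the standard large-sample approximation for the one-sided p value. The function names and the reaction times are hypothetical.

```python
import math
from bisect import bisect_right


def ecdf(sample):
    """Return a function F(t) giving the empirical CDF of `sample`."""
    xs = sorted(sample)
    n = len(xs)
    return lambda t: bisect_right(xs, t) / n


def ks_one_sided(fast, slow):
    """One-sided two-sample K-S statistic D+ = max_t [F_fast(t) - F_slow(t)].

    Returns (D+, p), where p is the large-sample approximation
    exp(-2 * n*m/(n+m) * D+^2). This is an assumption of the sketch,
    not necessarily the exact procedure of the sft package.
    """
    f_fast, f_slow = ecdf(fast), ecdf(slow)
    grid = sorted(set(fast) | set(slow))
    d_plus = max(0.0, max(f_fast(t) - f_slow(t) for t in grid))
    n, m = len(fast), len(slow)
    p = math.exp(-2.0 * n * m / (n + m) * d_plus ** 2)
    return d_plus, p


# Hypothetical reaction times (ms) for two redundant-target conditions.
rt_hh = [300, 310, 320, 330]
rt_ll = [400, 410, 420, 430]

d_expected, p_expected = ks_one_sided(rt_hh, rt_ll)  # direction expected to be significant
d_reverse, p_reverse = ks_one_sided(rt_ll, rt_hh)    # direction expected to be null
```

Under selective influence, the first call should yield a large D+ and the reversed call a D+ near zero, mirroring the "former four significant, latter four nonsignificant" pattern.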

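After the selective-influence assumption was verified, mean interaction contrast (MIC) and survivor interaction contrast (SIC) were computed to infer the processing architecture and stopping rule. MIC can be expressed as

\[ \mathrm{MIC} = \left( \overline{\mathrm{RT}}_{\mathrm{LL}} - \overline{\mathrm{RT}}_{\mathrm{LH}} \right) - \left( \overline{\mathrm{RT}}_{\mathrm{HL}} - \overline{\mathrm{RT}}_{\mathrm{HH}} \right) \]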

where \( \overline{\mathrm{RT}} \) indicates the mean reaction time of the redundant-target conditions. The subscripts denote the brightness level of the left and right dot, with “H” indicating a high-brightness dot and “L” indicating a low-brightness dot. Testing MIC allows researchers to infer the additivity of the two factors (Sternberg, 1969; Townsend & Nozawa, 1995): a zero MIC indicates that two factors are additive and that processing architecture is serial. A positive MIC reflects that two factors are over-additive, which is observed when one adopts parallel self-terminating processing, or coactive processing. A negative MIC indicates that two factors are subadditive and that parallel exhaustive processing is adopted.
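The sign-based reading of MIC can be sketched in a few lines. This is a minimal illustration, assuming the standard double-factorial contrast MIC = (RT_LL − RT_LH) − (RT_HL − RT_HH) computed on mean reaction times. The function names, the tolerance, and the data are hypothetical, and the tolerance is only a numerical placeholder: deciding whether MIC differs from zero requires the statistical tests reported in the paper.

```python
def mean(xs):
    """Arithmetic mean of a non-empty sequence."""
    return sum(xs) / len(xs)


def mic(rt_hh, rt_hl, rt_lh, rt_ll):
    """Mean interaction contrast: (mean LL - mean LH) - (mean HL - mean HH)."""
    return (mean(rt_ll) - mean(rt_lh)) - (mean(rt_hl) - mean(rt_hh))


def describe_mic(value, tol=1e-9):
    """Map the sign of MIC onto the candidate architectures named in the text."""
    if value > tol:
        return "over-additive: parallel self-terminating or coactive"
    if value < -tol:
        return "sub-additive: parallel exhaustive"
    return "additive: serial"


# Hypothetical reaction times (ms) for the four redundant-target cells.
example = mic(rt_hh=[300, 320], rt_hl=[340, 360], rt_lh=[350, 370], rt_ll=[430, 450])
label = describe_mic(example)
```

A positive MIC alone cannot separate parallel self-terminating from coactive processing, which is why the survivor-level contrast is examined as well.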

Three methods were utilized to confirm the value of MIC. First, a 2 (high/low brightness of the left dot) × 2 (high/low brightness of the right dot) ANOVA was conducted. A significant interaction effect would indicate that MIC is not 0 and that the two factors are nonadditive. Second, a nonparametric adjusted rank transform test (Houpt et al., 2014) was conducted to test for the interaction between the two factors. Similar to the ANOVA, a significant interaction indicates that MIC is not equal to zero. Third, the 95 % confidence interval (CI) for MIC was simulated based on a nonparametric bootstrapping method (Van Zandt, 2000). If the 95 % CI for MIC does not include 0, we would infer that MIC is not equal to 0.
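The third check, a bootstrapped confidence interval for MIC, might look like the sketch below. It assumes a simple percentile bootstrap in which each redundant-target cell is resampled with replacement; the exact resampling scheme of Van Zandt (2000) may differ. The function names, the number of resamples, the seed, and the data are hypothetical.

```python
import random


def mean(xs):
    """Arithmetic mean of a non-empty sequence."""
    return sum(xs) / len(xs)


def mic_from_cells(cells):
    """MIC from a dict with keys 'HH', 'HL', 'LH', 'LL' holding RT lists."""
    return (mean(cells["LL"]) - mean(cells["LH"])) - (mean(cells["HL"]) - mean(cells["HH"]))


def bootstrap_mic_ci(cells, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for MIC (an assumed, simplified scheme)."""
    rng = random.Random(seed)
    stats = []
    for _ in range(n_boot):
        # Resample every cell independently, keeping its original size.
        resampled = {k: rng.choices(v, k=len(v)) for k, v in cells.items()}
        stats.append(mic_from_cells(resampled))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Hypothetical reaction times (ms).
cells = {
    "HH": [300, 305, 310, 315, 320],
    "HL": [340, 345, 350, 355, 360],
    "LH": [350, 355, 360, 365, 370],
    "LL": [430, 435, 440, 445, 450],
}
ci_low, ci_high = bootstrap_mic_ci(cells)
```

If the resulting interval excludes 0, MIC would be judged different from zero, following the decision rule stated in the text.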

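SIC can be expressed, analogously to MIC, as:

\[ \mathrm{SIC}(t) = \left[ S_{\mathrm{LL}}(t) - S_{\mathrm{LH}}(t) \right] - \left[ S_{\mathrm{HL}}(t) - S_{\mathrm{HH}}(t) \right] \]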

where S(t) is the survivor function of the redundant-target conditions, and the subscripts denote the brightness level of the left and right dots, respectively. SIC allows researchers to distinguish between different stopping rules that cannot be inferred from MIC alone. Table 2 shows the five possible processing models and their corresponding MIC and SIC predictions. To test whether SIC equals 0 for all times t, we constructed the 95 % CI for SIC via a nonparametric bootstrapping method (Van Zandt, 2000). If the 95 % CI for SIC excludes 0 at some time t, we would infer that SIC is not equal to 0 at that time. In addition, we used the sft R package (Houpt et al., 2014) to separately assess the positive-going deviations from SIC = 0 and the negative-going deviations from SIC = 0. Two one-sided K-S tests were conducted: one tests whether the largest positive value of the SIC (D+) is significantly different from zero, and the other tests whether the largest negative value of the SIC (D−) is significantly different from zero.
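To make the survivor-level contrast concrete, the following sketch computes SIC(t) from empirical survivor functions, assuming the standard form SIC(t) = [S_LL(t) − S_LH(t)] − [S_HL(t) − S_HH(t)]. The function names, the time grid, and the reaction times are hypothetical, and no confidence band is computed here.

```python
from bisect import bisect_right


def survivor(sample):
    """Return S(t) = P(RT > t), estimated from the empirical distribution."""
    xs = sorted(sample)
    n = len(xs)
    return lambda t: 1.0 - bisect_right(xs, t) / n


def sic(rt_hh, rt_hl, rt_lh, rt_ll, times):
    """Survivor interaction contrast evaluated at each time in `times`."""
    s_hh, s_hl, s_lh, s_ll = (survivor(x) for x in (rt_hh, rt_hl, rt_lh, rt_ll))
    return [(s_ll(t) - s_lh(t)) - (s_hl(t) - s_hh(t)) for t in times]


# Hypothetical reaction times (ms) and a 1-ms grid.
rt_hh, rt_hl, rt_lh, rt_ll = [300, 320], [340, 360], [350, 370], [430, 450]
grid = range(0, 500)
curve = sic(rt_hh, rt_hl, rt_lh, rt_ll, grid)

# On a 1-ms grid the sum approximates the integral of SIC(t) over time.
area = sum(curve)
```

Because the integral of a survivor function is the mean, the area under SIC(t) recovers MIC, which ties the two contrasts together: an S-shaped curve with a net positive area is the coactive signature, whereas an entirely positive curve indicates parallel self-terminating processing.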

To infer the processing capacity, the capacity coefficient (Eidels, Houpt, Altieri, Pei, & Townsend, 2011; Townsend & Eidels, 2011; Townsend & Nozawa, 1995) and two inequalities, the race-model inequality (or Miller inequality; J. Miller, 1982) and the Grice inequality (Grice, Canham, & Boroughs, 1984; Grice, Canham, & Gwynne, 1984), were tested. The capacity coefficient is defined as

\[ C(t) = \frac{\log S_{1,2}(t)}{\log \left[ S_{1}(t) \cdot S_{2}(t) \right]} \]

where \( S_{1,2}(t) \), \( S_{1}(t) \), and \( S_{2}(t) \) are survivor functions of the redundant-target condition and the two single-target conditions, respectively. The ranges of values of C(t) and their implications are as follows: if C(t) equals 1 for all times t, processing capacity is unlimited; if C(t) exceeds 1 for some time t, processing capacity is supercapacity; otherwise, processing capacity is limited. Townsend and Nozawa (1995) concluded that there is a systematic relationship between the capacity coefficient, the race-model inequality, and the Grice inequality. To compare the values of different capacity assays (capacity coefficient, race-model inequality, and Grice inequality), the two inequalities can be transformed onto a unified capacity space (Townsend & Eidels, 2011). Transformed onto the workload capacity space, the race-model inequality is expressed as:

\[ C(t) \le \frac{\log \left[ S_{1}(t) + S_{2}(t) - 1 \right]}{\log \left[ S_{1}(t) \cdot S_{2}(t) \right]} \]

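and the Grice inequality is expressed as:

\[ C(t) \ge \frac{\log \left\{ \min \left[ S_{1}(t), S_{2}(t) \right] \right\}}{\log \left[ S_{1}(t) \cdot S_{2}(t) \right]} \]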

The race-model inequality and the Grice inequality form an upper bound and a lower bound on unlimited-capacity parallel processing, respectively. If C(t) exceeds the race-model bound at some time t, then the processing capacity can be considered as demonstrating very large supercapacity; if C(t) falls below the Grice bound, then the processing capacity is extremely limited. In order to provide a statistical basis for the inference of processing capacity, we first constructed the 95 % CI for C(t). If the 95 % CI lay above the race-model bound at some time t, we concluded that the processing capacity is supercapacity; if the 95 % CI for C(t) lay below the Grice bound at some time t, we concluded that the processing capacity is extremely limited. In addition, the raw capacity scores can be transformed to the statistic Cz (Houpt & Townsend, 2012), which provides a summary measure of the entire capacity function, aggregated over time. Under the null hypothesis of unlimited capacity, Cz follows a standard normal distribution, so a z test can be applied to it. A value of 0 indicates unlimited capacity, a negative value indicates limited capacity, and a positive value indicates supercapacity.
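The relation between the capacity coefficient and its two bounds can be sketched at a single time point. The sketch assumes the OR-task forms C(t) = log S12(t) / log[S1(t) · S2(t)], a race-model bound of log[S1(t) + S2(t) − 1] / log[S1(t) · S2(t)], and a Grice bound of log{min[S1(t), S2(t)]} / log[S1(t) · S2(t)], following the capacity space of Townsend and Eidels (2011). The function names and survivor values are hypothetical, and the Cz statistic is not implemented.

```python
import math


def capacity_or(s12, s1, s2):
    """Capacity coefficient at one time point.

    Survivor values must lie strictly between 0 and 1 so that every
    logarithm is finite and the denominator is non-zero.
    """
    return math.log(s12) / math.log(s1 * s2)


def race_model_bound(s1, s2):
    """Upper (race-model) bound in capacity space.

    When S1 + S2 <= 1 the inequality cannot be violated, so the bound
    is treated as infinite in this sketch.
    """
    if s1 + s2 <= 1.0:
        return math.inf
    return math.log(s1 + s2 - 1.0) / math.log(s1 * s2)


def grice_bound(s1, s2):
    """Lower (Grice) bound in capacity space."""
    return math.log(min(s1, s2)) / math.log(s1 * s2)


def classify(c, s1, s2):
    """Locate C(t) relative to the two bounds at one time point."""
    if c > race_model_bound(s1, s2):
        return "above race-model bound"
    if c < grice_bound(s1, s2):
        return "below Grice bound"
    return "within bounds"


# Hypothetical survivor values at one time t. An unlimited-capacity,
# independent parallel race predicts S12 = S1 * S2, hence C(t) = 1.
s1, s2 = 0.8, 0.5
c_ucip = capacity_or(s1 * s2, s1, s2)
```

In this example the Grice bound lies below 1 and the race-model bound above 1, so the unlimited-capacity prediction falls between them, as the text describes.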

Results

All of the participants had high accuracy. Across all the conditions, the mean accuracy was 0.98 with a standard deviation of 0.01. Correct reaction times ranging from 100 ms to 800 ms were extracted for further analysis. This range was chosen because simple reaction times are generally not faster than 100 ms and 800 ms exceeds five times the standard deviation from mean reaction time. Table 3 shows the mean reaction times of the redundant-target conditions and MIC for each participant.
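The trimming rule stated above (correct responses with reaction times from 100 ms to 800 ms) can be written as a simple filter. This is only an illustration: the trial representation, the function name, and the treatment of the boundaries as inclusive are assumptions.

```python
def usable_rts(trials, lower_ms=100, upper_ms=800):
    """Keep reaction times from correct trials within [lower_ms, upper_ms].

    `trials` is a sequence of (rt_ms, is_correct) pairs; the inclusive
    boundaries are an assumption of this sketch.
    """
    return [rt for rt, correct in trials if correct and lower_ms <= rt <= upper_ms]


# Hypothetical trials: (reaction time in ms, whether the response was correct).
trials = [(95, True), (250, True), (310, False), (800, True), (1200, True)]
kept = usable_rts(trials)
```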

Tests for the selective-influence assumption

Results showed that the differences in mean reaction times between the HX and LX conditions and the XH and XL conditions were all statistically significant for each participant (ps < 0.001). Results from the two-way ANOVA also showed significant main effects of the brightness manipulation at both locations for each participant (ps < 0.001). These results suggested that the selective-influence assumption was verified at the mean reaction time level.

Next, we tested the selective-influence assumption at the reaction time distribution level. First, we inspected the ordering of the four survivor functions of the redundant-target conditions (Fig. 3). For all the participants, the survivor functions shifted from the HH to the LL condition, with the HL and LH conditions falling between the two. Second, we conducted eight two-sample K-S tests, including HH > HL, HH > LH, HL > LL, LH > LL, HH < HL, HH < LH, HL < LL, and LH < LL. Results showed that, for most participants (CHC, TYC, and HLM), the former four tests were significant (ps < 0.01), and the latter four tests were not significant (ps > 0.6). For participant CHY, the test for HH > LH did not reach significance (p > 0.12), contrary to expectation; however, this nonsignificant result did not violate the selective-influence assumption. Third, we conducted K-S tests to compare the marginal distributions of the four redundant-target conditions. Results showed significant differences between the marginal distributions of the left, high-brightness condition and the left, low-brightness condition and between those of the right, high-brightness condition and the right, low-brightness condition (ps < 0.001), indicating that the brightness manipulation was effective at the factor level. The selective-influence assumption held at the reaction time distribution level; the four distributions were ordered appropriately.

Fig. 3 Plots for the survivor functions of the four redundant-target conditions in Experiment 1

Processing architecture and stopping rule

Because the selective-influence assumption was verified, the reaction times for the redundant-target trials were analyzed to infer the processing architecture and stopping rule. First, all of the participants had a positive MIC (Table 3). Results of the two-way ANOVA on mean reaction times showed that, for each participant, the interaction between two factors was statistically significant (ps < 0.001). Second, results from the adjusted rank transform test also confirmed a significant interaction between two factors for all the participants (ps < 0.001). Third, results from the nonparametric bootstrapping confirmed the results; the 95 % CI for MIC did not include 0 (Fig. 4).

Results of the simulated MIC and the 95 % CI for MIC for each participant in Experiment 1
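
For readers who wish to reproduce this type of analysis, the following is a minimal sketch of the mean interaction contrast and a percentile bootstrap confidence interval. It assumes the standard double-factorial definition MIC = (RT_LL − RT_LH) − (RT_HL − RT_HH) and resamples trials with replacement within each condition. The data, function names, and number of bootstrap samples are hypothetical choices for illustration and need not match the procedure used here.

```python
import random
import statistics


def mic(rt):
    """MIC = (mean LL - mean LH) - (mean HL - mean HH)."""
    m = {cond: statistics.fmean(values) for cond, values in rt.items()}
    return (m["LL"] - m["LH"]) - (m["HL"] - m["HH"])


def bootstrap_mic_ci(rt, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for MIC, resampling trials within conditions."""
    rng = random.Random(seed)
    stats = []
    for _ in range(n_boot):
        resampled = {c: rng.choices(v, k=len(v)) for c, v in rt.items()}
        stats.append(mic(resampled))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Hypothetical redundant-target reaction times (ms) with an overadditive
# pattern; these are not data from the experiments.
rng = random.Random(42)
rt = {
    "HH": [rng.gauss(300, 25) for _ in range(200)],
    "HL": [rng.gauss(310, 25) for _ in range(200)],
    "LH": [rng.gauss(312, 25) for _ in range(200)],
    "LL": [rng.gauss(350, 25) for _ in range(200)],
}
observed = mic(rt)
ci_low, ci_high = bootstrap_mic_ci(rt)
```

A confidence interval that excludes 0 on the positive side corresponds to the overadditive (positive) MIC reported for each participant.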

We then tested SIC. For participants CHY and CHC, whose SICs were most consistent with parallel self-termination, the bootstrapped 95 % CI for SIC was positive for all times t (Fig. 5). In line with these findings, results of testing the largest positive and negative values of SIC showed that D+ was significantly different from 0 (ps < 0.001) and D− was not significantly different from 0 (ps > 0.86). By contrast, for participants TYC and HLM, whose SICs were most consistent with coactivity, the 95 % CI for SIC was less than 0 at early time points but then became positive for the slower reaction times (Fig. 5), showing a negative-to-positive (− → +) S-shaped SIC. For these two participants, however, the tests of the largest positive and negative values of SIC showed only that D+ was significantly different from 0 (ps < 0.001); D− was not significantly different from 0 (ps > 0.43). This does not rule out coactivity, because D− is conservative in its rejection of the null hypothesis; for instance, Houpt and Townsend (2011) did not always find a significant D− test even when the data were simulated from a coactive model.

Results of the SIC (thick solid lines) for each participant in Experiment 1. Dotted lines above and below the SIC represent the 95 % CI for SIC
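
The survivor interaction contrast can be sketched as follows, assuming the standard definition SIC(t) = [S_LL(t) − S_LH(t)] − [S_HL(t) − S_HH(t)], where S is the empirical survivor function of each redundant-target condition. The sketch returns only the largest positive and largest negative deviations of the curve; the significance tests on D+ and D− are not reproduced. The simulated data come from a hypothetical independent parallel, self-terminating model (the response is triggered by the faster of two exponential channels), so the resulting curve should be predominantly positive.

```python
import bisect
import random


def survivor(sample):
    """Return a function t -> proportion of observations > t."""
    xs = sorted(sample)
    n = len(xs)
    return lambda t: 1.0 - bisect.bisect_right(xs, t) / n


def sic_curve(rt, grid):
    """SIC(t) = [S_LL(t) - S_LH(t)] - [S_HL(t) - S_HH(t)] on a time grid."""
    s = {cond: survivor(values) for cond, values in rt.items()}
    return [(s["LL"](t) - s["LH"](t)) - (s["HL"](t) - s["HH"](t)) for t in grid]


def simulate_parallel_or(mean_left, mean_right, n, rng, base=200.0):
    """Hypothetical model: RT = base time + faster of two exponential channels."""
    return [
        base + min(rng.expovariate(1.0 / mean_left), rng.expovariate(1.0 / mean_right))
        for _ in range(n)
    ]


rng = random.Random(7)
HIGH, LOW = 40.0, 160.0  # assumed mean channel finishing times (ms)
rt = {
    "HH": simulate_parallel_or(HIGH, HIGH, 1000, rng),
    "HL": simulate_parallel_or(HIGH, LOW, 1000, rng),
    "LH": simulate_parallel_or(LOW, HIGH, 1000, rng),
    "LL": simulate_parallel_or(LOW, LOW, 1000, rng),
}
grid = [200.0 + 5.0 * k for k in range(161)]  # 200 to 1000 ms
sic = sic_curve(rt, grid)
d_plus = max(0.0, max(sic))                 # largest positive deviation
d_minus = max(0.0, max(-v for v in sic))    # largest negative deviation
```

In this simulated case the positive deviation dominates, which is the signature associated with parallel, self-terminating processing; a coactive model would instead add a small early negative region.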

Combining the results from MIC and SIC (refer to Table 2), the inferences for processing architecture and stopping rule differed across participants. We inferred that participants CHY and CHC adopted parallel processing and followed a self-terminating stopping rule as they had a positive MIC and a positive SIC for all times t. We inferred that participants TYC and HLM adopted coactive processing as they had a positive MIC and a negative-to-positive S-shaped SIC.

Processing capacity

The estimated C(t) and the bootstrapped 95 % CI for C(t), along with the race-model bound and the Grice bound, are shown in Fig. 6. Results showed that, for participants CHY and CHC, the 95 % CI for C(t) included 1 at the faster reaction times and was less than 1 at the slower reaction times, suggesting that they processed information with unlimited to limited capacity. Their Czs were significantly less than 0 (ps < 0.001), supporting the conclusion that they performed with limited capacity. By contrast, for participants TYC and HLM, the 95 % CI for C(t) was greater than 1 at some times t, and their Czs were significantly greater than 0 (ps < 0.01), suggesting a system of supercapacity. These results are consistent with the inferences of parallel self-terminating processing for CHY and CHC but coactivation for TYC and HLM.

Results of the C(t) (thick solid lines), race-model bound (dashed lines), and Grice bound (dash-dot lines) for each participant in Experiment 1. Thin solid lines above and below the C(t) represent the 95 % CI for C(t)
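
As an illustration of how the capacity coefficient is obtained, the sketch below assumes the standard definition for a detection (OR) task, C(t) = H_AB(t) / [H_A(t) + H_B(t)], where H(t) = −ln S(t) is the cumulative hazard estimated from the empirical survivor function of the redundant-target (AB) and single-target (A, B) trials. The data are simulated from a hypothetical unlimited-capacity, independent parallel model, for which C(t) should be close to 1. The race-model bound, the Grice bound, and the Cz statistic are not computed here.

```python
import bisect
import math
import random


def cumulative_hazard(sample):
    """Return t -> H(t) = -ln S(t), with S the empirical survivor function."""
    xs = sorted(sample)
    n = len(xs)

    def h(t):
        surv = 1.0 - bisect.bisect_right(xs, t) / n
        return math.inf if surv <= 0.0 else -math.log(surv)

    return h


def capacity_or(single_a, single_b, redundant, grid):
    """C(t) = H_AB(t) / (H_A(t) + H_B(t)); NaN where the ratio is undefined."""
    h_a = cumulative_hazard(single_a)
    h_b = cumulative_hazard(single_b)
    h_ab = cumulative_hazard(redundant)
    values = []
    for t in grid:
        denom = h_a(t) + h_b(t)
        values.append(h_ab(t) / denom if 0.0 < denom < math.inf else math.nan)
    return values


# Hypothetical model: base time of 200 ms plus exponential channel times.
rng = random.Random(3)
n = 5000
left_only = [200.0 + rng.expovariate(1.0 / 80.0) for _ in range(n)]
right_only = [200.0 + rng.expovariate(1.0 / 100.0) for _ in range(n)]
both = [
    200.0 + min(rng.expovariate(1.0 / 80.0), rng.expovariate(1.0 / 100.0))
    for _ in range(n)
]
grid = [230.0, 260.0, 290.0, 320.0]
c_t = capacity_or(left_only, right_only, both, grid)
```

Values of C(t) near 1 indicate unlimited capacity, values below 1 indicate limited capacity, and values above 1 indicate supercapacity, which is how the curves in the figure are read.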

Discussion

In Experiment 1, both locations had equal probability of containing the target dot. The results showed that for participants CHY and CHC, MIC was larger than 0, SIC values were positive for all times t, and C(t) was less than or equal to 1, indicating that they adopted a parallel, self-terminating strategy with unlimited to limited capacity to process redundant signals at multiple locations. On the other hand, participants TYC and HLM had a positive MIC and a negative-to-positive S-shaped SIC, and their C(t) exceeded 1 at some faster time points, suggesting that they adopted coactive processing with supercapacity to detect the redundant targets. In general, all the participants processed information from multiple locations in parallel during the information accumulation stage, and the processing capacity ranged from limited capacity to supercapacity.

The present results were partially consistent with the previous findings that the processing for target detection where no attentional cue is directed to the presence or absence of a target is parallel, self-terminating with limited capacity (Townsend & Eidels, 2011; Townsend & Nozawa, 1995). However, two participants in the present experiment adopted a different processing strategy (i.e., coactive processing with supercapacity). This difference in processing is reminiscent of Townsend and Nozawa’s (1995) results using a similar task in which they also found both limited-capacity, parallel processing and supercapacity, coactive processing. Having established how each individual processes the locations when targets appear with equal probability, we next examined changes to this processing in the subsequent experiments. That is, this initial experiment acts as an individual baseline with which to compare performance when the target location probability is unequal across locations.

Experiment 2

In Experiment 2, we examined the redundant-target processing when a target appears at one location more often than another (hereafter, termed the unequal-probability condition) without any explicit instructions that the target location probability of each location is unequal. We hypothesized that if the decision process is sensitive to the target location probability, the processing capacity should be more limited, relative to observed capacity in Experiment 1, because redundant information at the low-probability location may slow down the processing for the high-probability location. This also may be reflected in the decision strategy in, for instance, a shift from parallel or coactive processing (i.e., in the first experiment) to serial or parallel processing, respectively, mirroring the change in capacity.

Method

Participants

All of the participants who had participated in Experiment 1 also participated in Experiment 2. Each participant completed five sessions and each session lasted for about 40 minutes. The participants signed a written informed consent before the experiment and received NTD 120 per hour after they completed the experiment.

Design, stimuli, and procedure

The design, stimuli, and procedure were similar to those in Experiment 1, except for the trial frequencies. Table 1 shows the trial frequencies adopted in Experiment 2. In the single-target conditions, a target dot appeared at the left location five times as often as at the right location, resulting in unequal target location probability across the two locations. Throughout the experiment, a block consisted of 96 trials, and 10 blocks made up a session.

Results

Accuracy was high in Experiment 2. Across all conditions, the mean accuracy was 0.98 with a standard deviation of 0.02. We used the same criterion as in Experiment 1 to extract data for further analysis. Table 4 presents the mean reaction times of the redundant-target conditions and MIC for each participant.

Tests for the selective-influence assumption

As in Experiment 1, we first tested the selective-influence assumption. Results of t tests on the mean single-target reaction times showed statistically significant differences in mean reaction times between the HX and LX conditions, as well as in mean reaction times between the XH and XL conditions (ps < 0.001), suggesting the brightness manipulation in the single-target trials is effective. The same conclusion was drawn from the results of the two-way ANOVA. There were significant main effects of the brightness manipulation at both locations (ps < 0.001). Altogether, these results confirmed that the selective-influence assumption was satisfied at the mean reaction time level.

We then tested the selective-influence assumption at the reaction time distribution level. For all of the participants, the survivor functions of the four redundant-target conditions were plotted individually (Fig. 7). First, from visual inspection, we observed an ordinal shift from the HH, HL, and LH conditions to the LL condition; the four distributions were ordered. Second, results from the eight two-sample K-S tests showed that, for most participants, the former four tests (HH > HL, HH > LH, HL > LL, and LH > LL) were significant (ps < 0.01), and the latter four tests (HH < HL, HH < LH, HL < LL, and LH < LL) were not significant (ps > 0.9). Only for participants CHY and HLM did the test for HH > LH fail to reach the significance level (ps > 0.07). Third, we tested the marginal distributions of the redundant-target conditions. Results showed significant differences in the marginal distributions between the left high-brightness and left low-brightness conditions and between the right high-brightness and right low-brightness conditions (ps < 0.001), suggesting that the brightness manipulation was also effective at the factor level. The selective-influence assumption was thus verified at the reaction time distribution level.

Plots for the survivor functions of the four redundant-target conditions in Experiment 2

Processing architecture and stopping rule

MIC and SIC were analyzed to make inferences regarding the processing architecture and stopping rule. For all the participants, the observed MIC was greater than 0 (Table 4). Results of the two-way ANOVA showed that the interaction between the two factors was statistically significant for each participant (ps < 0.001). The significance of interaction for all the participants was confirmed by the adjusted rank transform test (ps < 0.001). Results from the nonparametric bootstrapping also showed that the 95 % CI for MIC did not include 0 for all the participants (Fig. 8). We therefore concluded that all the participants had a positive MIC. Moreover, the 95 % CI for SIC was positive for all times t (Fig. 9). Results of testing the largest positive and negative values of SIC confirmed that for all the participants D+ was significantly different from 0 (ps < 0.001) and D– was not significantly different from 0 (ps > 0.48). Combining MIC and SIC, we inferred that all the participants adopted parallel processing and followed a self-terminating stopping rule (Table 2).

Results of the simulated MIC and the 95 % CI for MIC for each participant in Experiment 2

Results of the SIC (thick solid lines) for each participant in Experiment 2. Dotted lines above and below the SIC represent the 95 % CI for SIC

Processing capacity

Figure 10 shows the estimated C(t) and the bootstrapped 95 % CI for C(t), along with the race-model bound and the Grice bound. Results showed that for all the participants, the 95 % CI for C(t) included 1 at the faster reaction times but was less than 1 at the slower reaction times, suggesting that they processed information with a system of unlimited to limited capacity. Results of the test of Cz showed that all the participants had a negative Cz (ps < 0.001), except participant TYC, whose Cz was not significantly different from 0 (p = 0.17). These results support the conclusion that the participants processed redundant information with unlimited to limited capacity.

Results of the C(t) (thick solid lines), race-model bound (dashed lines), and Grice bound (dash-dot lines) for each participant in Experiment 2. Thin solid lines above and below the C(t) represent the 95 % CI for C(t)

Discussion

In Experiment 2, the target appeared at the left location five times as frequently as at the right location, such that the target location probability across locations was unequal. Results showed that for all the participants, MIC was positive and SIC was greater than 0 for all times t, indicating that all the participants adopted parallel processing and followed a self-terminating stopping rule to process redundant signals. C(t) for each participant was less than or equal to 1, suggesting a system of unlimited to limited capacity. Regardless of the unequal target location probability across locations, processing for redundant information across multiple locations remained parallel during the stage of information accumulation. Interestingly, two participants (TYC and HLM) changed their processing strategies, compared with Experiment 1, from coactive processing to parallel processing, with the processing capacity shifting from supercapacity to unlimited-to-limited capacity. These changes suggested that the manipulation of the target location probability resulted in a change to processing architecture and capacity, consistent with the theoretical links between coactivity and supercapacity and between independent parallel processing and unlimited capacity (see Townsend & Nozawa, 1995).

After the participants completed Experiment 2, we asked them to report their processing strategies. All the participants reported that they adopted the same strategy as they used in Experiment 1. In addition, none of the participants reported noticing that the target location probability varied across the experiments. These reports suggested that the strategy change (coactive to parallel processing) from Experiment 1 to Experiment 2 was not subjectively perceived.

Experiment 3

In Experiment 2, none of the participants were aware of the unequal target location probability across locations. In addition, despite the decrease in some of the participants’ processing capacity, we did not observe a substantive change from parallel to serial processing as expected from prior experiments manipulating exogenous cues (Yang, Little & Hsu, 2014). We speculated that explicit awareness of the target location probability may play an important role in selecting a decision strategy. Therefore, in Experiment 3, we informed the participants that a left dot would appear more often than a right dot, and asked them to pay more attention to the left location. We expected that the participants would explicitly select a decision strategy to process the high-probability location first, leading to a serial processing strategy. This would also result in a system of much more limited capacity because processing for the low-probability location may slow down the processing for the high-probability location.

Method

Participants

All the participants who had participated in Experiments 1 and 2 participated in Experiment 3. Each participant completed five sessions. Each session lasted for about 40 minutes. The participants signed a written informed consent prior to the experiment and received NTD 120 per hour after they completed the experiment.

Design, stimuli, and procedure

The design, stimuli, and procedure were identical to those used in Experiment 2. The critical difference between Experiments 2 and 3 was that the participants were informed that the target would appear at the left location more often than at the right location, and they were required to pay more attention to the left location.

Results

All the participants had high accuracy in detecting dots in Experiment 3. Across all conditions, the mean accuracy was 0.97 with a standard deviation of 0.03. We used the same criterion as in Experiment 1 to extract data for further analysis. Table 5 presents the participants’ mean reaction times of the redundant-target conditions and MIC individually.

Tests for the selective-influence assumption

We first examined the selective-influence assumption at the mean reaction time level. From the results of t tests, we found that mean reaction times between the HX and LX conditions and between the XH and XL conditions were significantly different (ps < 0.001). Results of the two-way ANOVA on mean reaction time showed significant main effects of the two factors (ps < 0.05), indicating that brightness manipulations at both locations were effective. Altogether, these results confirmed that the selective-influence assumption was satisfied at the mean reaction time level.

We then tested the selective-influence assumption at the reaction time distribution level. Figure 11 shows the plots of survivor functions in the four redundant-target conditions for all of the participants. First, from visual inspection, we observed an ordinal shift from the HH, HL, and LH conditions to the LL condition; the four distributions were ordered. Second, results from the eight two-sample K-S tests showed that, for all the participants, the former four tests were significant (ps < 0.01) except for HH > HL, and the latter four tests were not significant (ps > 0.06). Third, we tested the marginal distributions of the redundant-target conditions. Results showed significant differences in the marginal distributions between the left high-brightness and left low-brightness conditions and between the right high-brightness and right low-brightness conditions (ps < 0.05), suggesting that the brightness manipulation was also effective at the factor level. These results suggest that the selective-influence assumption was verified at the reaction time distribution level.

Plots for the survivor functions of the four redundant-target conditions in Experiment 3

Processing architecture and stopping rule

First, individual differences in MIC were observed. Although all the participants had a positive MIC (Table 5), results of the two-way ANOVA on mean reaction times showed that the interaction effects between the two factors differed across participants. Specifically, the interaction was significant for participants TYC and HLM (ps < 0.01), whereas for participants CHY and CHC, the interaction was not significant (ps > 0.05). The same results were observed by the adjusted rank transform test, showing that the interaction effect was significant for participants TYC and HLM (ps < 0.001), but not significant for participants CHY and CHC (ps > 0.05). Moreover, results of nonparametric bootstrapping (Fig. 12) showed that the 95 % CI for MIC included 0 for participants CHY and CHC, but did not include 0 for participants TYC and HLM. Based on these findings, the results converged to suggest that participants CHY and CHC had a zero MIC, and that participants TYC and HLM had a positive MIC.

Results of the simulated MIC and the 95 % CI for MIC for each participant in Experiment 3

Individual differences in SIC were also observed. From the results of nonparametric bootstrapping (Fig. 13), we found that the 95 % CI for SIC included 0 for all times t for participants CHY and CHC, whereas the 95 % CI for SIC was positive for participants TYC and HLM. Results of testing the largest positive and negative values of SIC confirmed the observations. For participants CHY and CHC, neither D+ nor D– was found to be significantly different from 0 (ps > 0.1); by contrast, for participants TYC and HLM, D+ was significantly different from 0 (ps < 0.05) and D– was not significantly different from 0 (ps > 0.8). Combining the results of MIC and SIC (refer to Table 2), we inferred that participants TYC and HLM adopted parallel processing and followed a self-terminating stopping rule, and that participants CHY and CHC changed their decision strategy and processed redundant signals with a serial, self-terminating strategy.

Results of the SIC (thick solid lines) for each participant in Experiment 3. Dotted lines above and below the SIC represent the 95 % CI for SIC

Processing capacity

Figure 14 shows the estimated C(t) and the bootstrapped 95 % CI for C(t), along with the race-model bound and Grice bound. Results showed that, for all the participants, the estimated C(t) was approximately equal to the Grice bound, and the bootstrapped 95 % CI for C(t) included the Grice bound for all times t. These results suggested that all the participants processed information with a system of limited capacity. Results of the test of Cz showed that all the participants had a negative Cz (ps < 0.05), except participant CHC, whose Cz was not significantly different from 0 (p = 0.13). These results support the conclusion that the participants processed redundant information with unlimited to limited capacity.

Results of the C(t) (thick solid lines), race-model bound (dashed lines), and Grice bound (dash-dot lines) for each participant in Experiment 3. Thin solid lines above and below the C(t) represent the 95 % CI for C(t)

Discussion

In Experiment 3, participants were informed that the target location probability was unequal across locations. Results showed that for participants TYC and HLM, MIC was greater than 0 and SIC values were positive for all times t, indicating that they adopted parallel processing and followed a self-terminating stopping rule, as they had in Experiment 2. On the other hand, for participants CHY and CHC, MIC was equal to 0 and SIC values were equal to 0 for all times t, indicating that they altered their decision strategies from parallel, self-terminating processing to serial, self-terminating processing. For three of the participants (TYC, HLM, and CHY), the processing capacity was limited, and for participant CHC, the processing capacity was unlimited to limited. After the participants completed all the experimental sessions, they were required to report their processing strategies. The self-reports showed that they had noticed that the target was more likely to appear at the left location and that they tended to attend to the high-probability location in order to detect the target quickly.

Interestingly, individual differences in the processing strategy were observed: two participants adopted serial processing and two participants continued to adopt parallel processing. We speculated that the individual differences may be related to the processing capacity in processing redundant information when the target probability was equal. The participants who altered their processing strategies had a system of more limited capacity whereas those participants who persisted with parallel processing had a system of supercapacity. These results implied that processing capacity is linked to processing strategy in a theoretically consistent manner with changes in attention.

General Discussion

In the present study, three experiments were conducted to investigate information processing in target detection with a focus on the effect of target location probability. A redundant-target detection task was used with manipulations of the target location probability and probability instruction. SFT was utilized to design the experiments, analyze data, and diagnose the characteristics of the decision processes. Table 6 shows a summary table of the inferred processing architecture and processing capacity for all the participants in each experiment.

In summary, target location probability affected the processing architecture and the processing capacity, but not the decisional stopping rule. The influence of the target location probability on the processing architecture may be related to individual differences in the processing capacity. When targets were equally distributed across two locations in Experiment 1, two participants adopted coactive processing with supercapacity, and the other two participants adopted a parallel, self-terminating strategy with unlimited to limited capacity. When the participants did not notice that the targets were unequally distributed in Experiment 2, all the participants adopted a parallel, self-terminating strategy with unlimited to limited capacity. When the participants were informed that the target location probability was unequal in Experiment 3, the two participants who had shown supercapacity in Experiment 1 adopted a parallel, self-terminating strategy, whereas the two who had shown unlimited to limited capacity adopted a serial, self-terminating strategy; for all participants, the processing capacity was unlimited to limited.

The results were in line with previous research: people are sensitive to target location probability in visual search, and target location probability serves as an attentional cue that biases spatial attention toward the location where the target most likely appears (Geng & Behrmann, 2002, 2005; Jones & Kaschak, 2012; Walthew & Gilchrist, 2006), which in turn affects the decision process in detecting the redundant targets. These results supported the previous findings that selective attention affects the decision processes in two ways (Donkin et al., 2013; Eidels et al., 2010; Yang, 2011; Yang et al., 2013; Yang et al., 2011; Yang et al., 2014): (1) The processing order for multiple signals varied from parallel to serial when the participants were explicitly aware that the targets were unequally distributed across space. Specifically, parallel processing was adopted during the information accumulation stage when targets were equally distributed and when participants did not notice the unequal target location probability. Serial processing was adopted by the participants with a more limited-capacity system when they noticed the unequal target location probability. (2) The processing capacity was more limited when targets were unequally distributed than when they were equally distributed, especially with explicit awareness of the unequal distribution of targets. This is because the unequal distribution of targets made the participants allocate more attention to the high-probability location. The processing for the low-probability location may thereby interfere with the processing for the high-probability location, leading to less efficient processing.

Decision processes in detecting redundant targets

The present results hold implications for existing theories of multiple signal processing. For example, feature integration theory (Treisman & Gelade, 1980) would predict that processing should be parallel throughout all of the present experiments because searching for dots across multiple locations is regarded as a feature search. Thus, feature integration theory cannot easily explain why participants shifted from a parallel processing strategy to a serial processing strategy after they were informed of the unequal target location probability.

Signal detection theory (Green & Swets, 1966) is another typical account of how participants detect multiple signals. According to signal detection theory, signals are processed simultaneously and in parallel, and multiple signals are summed together into a single accumulator (Ashby, 2000). When the accumulated information exceeds a decision criterion, a positive response would be made. Thus, it is assumed that participants would adopt coactive processing for multiple-signal processing. However, from the current results, only two participants in Experiment 1 adopted coactive processing; the signal detection account also fails to explain the current findings.

Previous studies that have used SFT found that participants adopted parallel, self-terminating processing with limited capacity to detect the redundant dots (Townsend & Eidels, 2011; Townsend & Nozawa, 1995; Yu et al., 2014). This processing strategy is assumed in an independent race model (see Van Zandt, 2002 for a review); multiple signals are processed in parallel, and they compete for the decision. A decision follows a “winner-takes-all” rule; that is, when either signal is enough for decision making, a positive response is made. In contrast to the prediction from feature integration theory, limited-capacity, parallel processing suggests either a limited pool of resources, such that processing efficiency on the individual channels suffers when the system simultaneously works on more than one object (Townsend & Ashby, 1983), or inhibitory interactions across multiple channels during the stage of information accumulation (Eidels et al., 2011). Our current findings of individual variation in processing architecture and processing capacity suggest that there is flexibility in the degree of these interactions.

Based on the present findings, we considered that the results were best explained by the attentional window hypothesis (Belopolsky, Zwaan, Theeuwes, & Kramer, 2007; Theeuwes, 1994, 2004), which Yang et al. (2014) discussed in relating attention to the decision-making process. Yang et al. (2014) manipulated the validity of the exogenous cue in a redundant-target detection task. Their results suggested that when the cue did not provide any information regarding the target location, participants may have widened their attentional window enough to cover all the target locations, leading to parallel processing for both locations. By contrast, when the cue perfectly predicted the target location, participants narrowed their attentional window to the cued location and followed the cue to detect the target, leading to a serial processing strategy. These results emphasize the flexibility of the decision mechanism and highlight that attentional focusing affects the decision process. To extend this hypothesis to explain the current findings, we considered that target location probability affects the allocation of attention, which in turn affects the attentional focusing. When the target was equally distributed across spatial locations (Experiment 1) or when a participant did not notice the unequal target location probability (Experiment 2), the participant would widen their attentional window to encompass all the possible target locations in order not to miss any target. Thus, the large and diffuse attentional window resulted in simultaneous processing for all the possible locations, forming a parallel processing strategy. By contrast, when the target was distributed unequally across locations and the participant was explicitly aware of this (Experiment 3), the attentional window was narrowed to process first the location most likely to contain the target.

In addition to pointing out the flexibility of the decision mechanism, the present study also demonstrates the role of subjective control in strategy selection. When the participants did not know the manipulation of target location probability in Experiments 1 and 2, parallel processing was used to accumulate information from multiple locations. When the participants were informed about the unequal target location probability and were instructed to pay attention to the high-probability location, two participants switched their processing strategies from parallel processing to serial processing. Furthermore, all of the participants’ processing capacity became much more limited in comparison to Experiments 1 and 2, suggesting the role of subjective control over spatial attention. Although it is likely that target probabilities are acquired without attention via statistical learning (Chun & Jiang, 1998; Jiang & Song, 2005; Jones & Kaschak, 2012; Turk-Browne, Jungé, & Scholl, 2005), some participants may acquire these probabilities better than others, which may have led to changes in the processing capacity that we observed from Experiment 1 to Experiment 2. The change from coactive to parallel processing might reflect changes in more automatic processing whereas the change from parallel to serial processing might reflect the use of a more controlled attentional strategy (Shiffrin & Schneider, 1977). These controlled strategies implicate serial processing as an explanation for the role of selective attention in more cognitive tasks such as categorization (Blunden, Wang, Griffiths, & Little, 2015; Fific et al., 2010; Lamberts, 2000; Little, Nosofsky, & Denton, 2011; Little, Nosofsky, Donkin, & Denton, 2013).

Processing capacity may constrain strategy flexibility

It is worthwhile to note that the individual differences in strategy selection revealed in this study suggest that the processing capacity of a system may constrain its processing architecture. Participants whose processing architecture changed from parallel to serial (i.e., from Experiment 2 to 3) were those who had a system of unlimited to limited capacity in Experiment 1, whereas participants who did not change their processing architecture were those whose system was supercapacity in Experiment 1. These findings go beyond the theoretical suggestions that some multiple-signal processing strategies are related to a system’s processing capacity. For example, a coactive system usually has supercapacity, whereas the processing capacity of a standard serial system is limited (Colonius & Townsend, 1997; Eidels et al., 2011; Townsend, 1972, 1974; Townsend & Eidels, 2011; Townsend & Nozawa, 1997; Wenger & Gibson, 2004; Wenger & Townsend, 2001).

Implications for endogenous and exogenous cues

Evidence suggests that probability cuing modulates visual behavior in a way that involves both exogenous and endogenous factors (Geng & Behrmann, 2002, 2005; Zhao et al., 2013). Comparing the present study with Yang et al. (2014), it seems that exogenous cues and spatial probability cues influence the processing architecture when subjective attentional control governs strategy selection. In Yang et al.'s (2014) study, the participants were informed about the validity of the cue in predicting target location. Therefore, as the cue validity changed from uninformative to informative about target location, processing shifted from parallel to serial. Likewise, in the current study, the participants changed their processing architecture from parallel to serial only when they were explicitly aware of the unequal probability information.

Furthermore, spatial probability cues and exogenous cues have similar influences on processing capacity. In Yang et al.’s (2014) study, the participants’ processing systems became much more limited when the cue validity varied from uninformative to informative. Similarly, in the present study, unequal target location probability led to a processing system of lower capacity. Apart from the individual differences in processing capacity that were observed in the present study, we found that processing capacity became more limited with an unequal target location probability (Experiment 1 to Experiment 2) and even more limited when the participants were given explicit information about the probability manipulation (Experiment 3). However, comparing the overall processing capacity reported in this study with that reported by Yang et al. (2014), we found a difference when the attentional cues were not informative (i.e., Experiment 1 of the present study vs. the uninformative-cue condition of Yang et al., 2014). Supercapacity was observed in Experiment 1 of the present study, whereas unlimited to moderately limited capacity was observed in the uninformative-cue condition of Yang et al.’s (2014) study. It might be that an exogenous cue can modulate the distribution of attentional resources over space in a bottom-up fashion even though it does not necessarily result in serial processing. In Experiment 1 of the present study, by contrast, because the participants were unable to extract any regularity in the target-presence patterns, they tended to distribute their visual attention evenly across space and thereby maintain a system of higher processing capacity. Taken together, these results lead us to hypothesize that exogenous cues and spatial probability cues may operate in a common way. However, further efforts are needed to understand how the probability cuing effect relates to the traditional dichotomy between exogenous and endogenous attention in decision making.

Conclusions

This study suggests that target location probability influences both the process architecture and the processing capacity of the decision process, and that this influence is modulated by controlled attention as well as by individual differences in the capacity of the decision system. Moreover, the present study demonstrates that the decision mechanism is flexible in terms of how learned probability information biases spatial attention.

References

Ashby, F. G. (2000). A stochastic version of general recognition theory. Journal of Mathematical Psychology, 44(2), 310–329.

Baker, C. I., Olson, C. R., & Behrmann, M. (2004). Role of attention and perceptual grouping in visual statistical learning. Psychological Science, 15, 460–466.

Belopolsky, A., Zwaan, L., Theeuwes, J., & Kramer, A. (2007). The size of an attentional window modulates attentional capture by color singletons. Psychonomic Bulletin & Review, 14(5), 934–938. doi:10.3758/BF03194124

Blackwell, H. R. (1953). Psychophysical thresholds: Experimental studies of methods of measurement. Engineering Research Institute, University of Michigan.

Blunden, A. G., Wang, T., Griffiths, D., & Little, D. R. (2015). Logical-rules and the classification of integral dimensions: Individual differences in the processing of arbitrary dimensions. Frontiers in Psychology, 5. doi:10.3389/fpsyg.2014.01531

Burbeck, S. L., & Luce, R. D. (1982). Evidence from auditory simple reaction times for both change and level detectors. Perception & Psychophysics, 32(2), 117–133.

Chun, M. M., Golomb, J. D., & Turk-Browne, N. B. (2011). A taxonomy of external and internal attention. Annual Review of Psychology, 62, 73–101. doi:10.1146/annurev.psych.093008.100427

Chun, M. M., & Jiang, Y. (1998). Contextual cueing: Implicit learning and memory of visual context guides spatial attention. Cognitive Psychology, 36, 28–71. doi:10.1006/cogp.1998.0681

Cleland, B., Dubin, M., & Levick, W. (1971). Sustained and transient neurones in the cat's retina and lateral geniculate nucleus. The Journal of Physiology, 217(2), 473–496. Retrieved from http://www.ncbi.nlm.nih.gov/pmc/articles/PMC1331787/pdf/jphysiol01016-0215.pdf

Colonius, H., & Townsend, J. T. (Eds.). (1997). Activation-state representation of models for the redundant-signals-effect. Mahwah, NJ: Lawrence Erlbaum Associates Publishers.

Corbetta, M., & Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience, 3, 201–215.

Donkin, C., Little, D. R., & Houpt, J. W. (2013). Assessing the speed-accuracy trade-off effect on the capacity of information processing. Journal of Experimental Psychology: Human Perception and Performance.

Dzhafarov, E. N. (1999). Conditionally selective dependence of random variables on external factors. Journal of Mathematical Psychology, 43, 123–157.

Eidels, A., Houpt, J. W., Altieri, N., Pei, L., & Townsend, J. T. (2011). Nice guys finish fast and bad guys finish last: Facilitatory vs. inhibitory interaction in parallel systems. Journal of Mathematical Psychology, 55, 176–190. doi:10.1016/j.jmp.2010.11.003

Eidels, A., Townsend, J. T., & Algom, D. (2010). Comparing perception of Stroop stimuli in focused versus divided attention paradigms: Evidence for dramatic processing differences. Cognition, 114(2), 129–150.

Fiedler, A., Schröter, H., & Ulrich, R. (2011). Coactive processing of dimensionally redundant targets within the auditory modality? Experimental Psychology, 58, 50–54.

Fific, M., Little, D. R., & Nosofsky, R. M. (2010). Logical-rule models of classification response times: A synthesis of mental-architecture, random-walk, and decision-bound approaches. Psychological Review, 117(2), 309.

Fiser, J., & Aslin, R. N. (2001). Unsupervised statistical learning of higher-order spatial structures from visual scenes. Psychological Science, 12, 499–504.

Geng, J. J., & Behrmann, M. (2002). Probability cuing of target location facilitates visual search implicitly in normal participants and patients with hemispatial neglect. Psychological Science, 13, 520–525.

Geng, J. J., & Behrmann, M. (2005). Spatial probability as an attentional cue in visual search. Perception & Psychophysics, 67(7), 1252–1268.

Green, D. M., & Swets, J. A. (1966). Signal detection theory and psychophysics. John Wiley and Sons.

Grice, G. R., Canham, L., & Boroughs, J. M. (1984). Combination rule for redundant information in reaction time tasks with divided attention. Perception & Psychophysics, 35, 451–463.

Grice, G. R., Canham, L., & Gwynne, J. W. (1984). Absence of a redundant-signals effect in a reaction time task with divided attention. Perception & Psychophysics, 36, 565–570.

Hoffmann, J., & Kunde, W. (1999). Location-specific target expectancies in visual search. Journal of Experimental Psychology: Human Perception and Performance, 25, 1127.

Hopfinger, J. B., Buonocore, M. H., & Mangun, G. R. (2000). The neural mechanisms of top-down attentional control. Nature Neuroscience, 3, 284–291.

Houpt, J. W., Blaha, L. M., McIntire, J. P., Havig, P. R., & Townsend, J. T. (2014). Systems factorial technology with R. Behavior Research Methods, 46, 307–330. doi:10.3758/s13428-013-0377-3

Houpt, J. W., & Townsend, J. T. (2011). An extension of SIC predictions to the Wiener coactive model. Journal of Mathematical Psychology, 55(3), 267–270.

Houpt, J. W., & Townsend, J. T. (2012). Statistical measures for workload capacity analysis. Journal of Mathematical Psychology, 56, 341–355.

Hughes, H. C., & Townsend, J. T. (1998). Varieties of binocular interaction in human vision. Psychological Science, 9(1), 53–60. Retrieved from http://pss.sagepub.com/content/9/1/53.full.pdf

Hughes, H. C., & Zimba, L. D. (1985). Spatial maps of directed visual attention. Journal of Experimental Psychology: Human Perception and Performance, 11, 409.

Jiang, Y., & Song, J.-H. (2005). Spatial context learning in visual search and change detection. Perception & Psychophysics, 67(7), 1128–1139. doi:10.3758/BF03193546

Jones, J. L., & Kaschak, M. P. (2012). Global statistical learning in a visual search task. Journal of Experimental Psychology: Human Perception and Performance, 38, 152.

Jonides, J. (1981). Voluntary versus automatic control over the mind’s eye’s movement. Attention and Performance IX, 9, 187–203.

Kinchla, R. A. (1977). The role of structural redundancy in the perception of visual targets. Perception & Psychophysics, 22, 19–30.

Kingstone, A., & Klein, R. (1991). Combining shape and position expectancies: Hierarchical processing and selective inhibition. Journal of Experimental Psychology: Human Perception and Performance, 17, 512.

Kujala, J. V., & Dzhafarov, E. N. (2008). Testing for selectivity in the dependence of random variables on external factors. Journal of Mathematical Psychology, 52, 128–144.

Lambert, A. J., & Hockey, R. (1986). Selective attention and performance with a multidimensional visual display. Journal of Experimental Psychology: Human Perception and Performance, 12, 484.

Lamberts, K. (2000). Information-accumulation theory of speeded categorization. Psychological Review, 107(2), 227.

Little, D. R., Nosofsky, R. M., Donkin, C., & Denton, S. E. (2013). Logical rules and the classification of integral-dimension stimuli. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39(3), 801.

Little, D. R., Nosofsky, R. M., & Denton, S. E. (2011). Response-time tests of logical-rule models of categorization. Journal of Experimental Psychology: Learning, Memory, and Cognition, 37(1), 1.

Livingstone, M., & Hubel, D. (1988). Segregation of form, color, movement, and depth: Anatomy, physiology, and perception. Science, 240(4853), 740–749. Retrieved from http://www.sciencemag.org/content/240/4853/740

Luce, R. D. (1986). Response times. Oxford University Press.

Miller, J. (1982). Divided attention: Evidence for coactivation with redundant signals. Cognitive Psychology, 14(2), 247–279.

Miller, J. (1988). Components of the location probability effect in visual search tasks. Journal of Experimental Psychology: Human Perception and Performance, 14(3), 453.

Miller, E. K., & Cohen, J. D. (2001). An integrative theory of prefrontal cortex function. Annual Review of Neuroscience, 24(1), 167–202.

Mordkoff, J. T., & Yantis, S. (1991). An interactive race model of divided attention. Journal of Experimental Psychology: Human Perception and Performance, 17, 520–538.

Pashler, H., Johnston, J. C., & Ruthruff, E. (2001). Attention and performance. Annual Review of Psychology, 52, 629–651.

Peterson, W. W., Birdsall, T. G., & Fox, W. C. (1954). The theory of signal detectability. Transactions of the IRE Professional Group on Information Theory, 4(4), 171–212. Retrieved from http://ieeexplore.ieee.org/ielx5/4547596/22771/01057460.pdf?tp=&arnumber=1057460&isnumber=22771

Posner, M. I. (1980). Orienting of attention. Quarterly Journal of Experimental Psychology, 32, 3–25.

Posner, M. I., Snyder, C. R., & Davidson, B. J. (1980). Attention and the detection of signals. Journal of Experimental Psychology: General, 109, 160.

Reder, L. M., Weber, K., Shang, J., & Vanyukov, P. M. (2003). The adaptive character of the attentional system: Statistical sensitivity in a target localization task. Journal of Experimental Psychology: Human Perception and Performance, 29, 631.

Robson, J. (1966). Spatial and temporal contrast-sensitivity functions of the visual system. Journal of the Optical Society of America, 56(8), 1141–1142.

Saffran, J. R. (2002). Constraints on statistical language learning. Journal of Memory and Language, 47, 172–196.

Schneider, W., Eschman, A., & Zuccolotto, A. (2002). E-Prime: User's Guide.

Sewell, D. K., & Smith, P. L. (2012). Attentional control in visual signal detection: Effects of abrupt-onset and no-onset stimuli. Journal of Experimental Psychology: Human Perception and Performance, 38(4), 1043.

Shaw, M. L., & Shaw, P. (1977). Optimal allocation of cognitive resources to spatial locations. Journal of Experimental Psychology: Human Perception and Performance, 3, 201.

Shiffrin, R. M., & Schneider, W. (1977). Controlled and automatic human information processing: II. Perceptual learning, automatic attending and a general theory. Psychological Review, 84(2), 127.

Shomstein, S., & Yantis, S. (2004). Configural and contextual prioritization in object-based attention. Psychonomic Bulletin & Review, 11, 247–253.

Smith, P. L. (1995). Psychophysically principled models of visual simple reaction time. Psychological Review, 102(3), 567.

Sternberg, S. (1969). The discovery of processing stages: Extensions of Donders' method. Acta Psychologica, 30, 276–315.

Summerfield, J. J., Lepsien, J., Gitelman, D. R., Mesulam, M., & Nobre, A. C. (2006). Orienting attention based on long-term memory experience. Neuron, 49, 905–916.

Theeuwes, J. (1992). Perceptual selectivity for color and form. Perception & Psychophysics, 51, 599–606.

Theeuwes, J. (1994). The effects of location cuing on redundant-target processing. Psychological Research, 57(1), 15–19.

Theeuwes, J. (2004). Top-down search strategies cannot override attentional capture. Psychonomic Bulletin & Review, 11(1), 65–70.

Townsend, J. T. (1972). Some results concerning the identifiability of parallel and serial processes. British Journal of Mathematical and Statistical Psychology, 25, 168–199.

Townsend, J. T. (1974). Issues and models concerning the processing of a finite number of inputs. In B. H. Kantowitz (Ed.), Human Information Processing: Tutorials in Performance and Cognition (pp. 133–168). Hillsdale, NJ: Erlbaum.

Townsend, J. T., & Ashby, F. G. (1983). Stochastic modeling of elementary psychological processes. Cambridge University Press.

Townsend, J. T., & Eidels, A. (2011). Workload capacity spaces: A unified methodology for response time measures of efficiency as workload is varied. Psychonomic Bulletin & Review, 18, 659–681. doi:10.3758/s13423-011-0106-9