Abstract

This study was designed to determine the feasibility of using self-paced reading methods to study deaf readers and to assess how deaf readers respond to two syntactic manipulations. Three groups of participants read the test sentences: deaf readers, hearing monolingual English readers, and hearing bilingual readers whose second language was English. In Experiment 1, the participants read sentences containing subject-relative or object-relative clauses. The test sentences contained semantic information that would influence online processing outcomes (Traxler, Morris, & Seely Journal of Memory and Language 47: 69–90, 2002; Traxler, Williams, Blozis, & Morris Journal of Memory and Language 53: 204–224, 2005). All of the participant groups had greater difficulty processing sentences containing object-relative clauses. This difficulty was reduced when helpful semantic cues were present. In Experiment 2, participants read active-voice and passive-voice sentences. The sentences were processed similarly by all three groups. Comprehension accuracy was higher in hearing readers than in deaf readers. Within deaf readers, native signers read the sentences faster and comprehended them to a higher degree than did nonnative signers. These results indicate that self-paced reading is a useful method for studying sentence interpretation among deaf readers.

Similar content being viewed by others

Learning to read proceeds in different ways for deaf and hearing readers. The most obvious difference, associating sounds with letters, is just the tip of the iceberg. Deaf readers typically do not have proficiency in the language represented by print prior to learning to read. Signed languages, such as American Sign Language (ASL), are not simply visual analogues of spoken languages, but fully independent languages that arise naturally in deaf communities (Klima & Bellugi, 1978; Padden & Humphries, 1988; Stokoe, 1980). Thus, the visual–auditory mappings argued to be a key route to breaking the orthographic code for hearing readers may be of little help to deaf readers (Coltheart, Rastle, Langdon, & Ziegler, 2001; Gough, Hoover, & Peterson, 1996; Harm & Seidenberg, 2004; Perfetti & Sandak, 2000).Footnote 1 To develop literacy skill, deaf readers must master the vocabulary and grammatical principles of a novel language, one that is distinct from their primary means of communication. They must simultaneously master the orthographic code that maps visual symbols onto meaning. This contrasts with hearing readers, who have already developed a great deal of knowledge of the language, and whose primary task is to discover how the system of visual symbols maps onto this well-developed knowledge base. To capitalize on visual skills among deaf students, teachers have attempted to use visual analogues of spoken English (manually coded English, or MCE) to promote reading skill (Goldin-Meadow & Mayberry, 2001). However, little or no evidence has indicated that such training methods actually promote acquisition of literacy skill in deaf readers (Luckner, Sebald, Cooney, Young, & Goodwin Muir, 2005; Schick & Moeller, 1992).

Deaf readers vary greatly in reading proficiency (for reviews, see Goldin-Meadow & Mayberry, 2001; Kelly, 2003; Mayberry, 2010; Musselman, 2000; Schirmer & McGough, 2005), possibly because different deaf readers apply different strategies to map orthographic forms to meaning, because of differences in instruction methods, differences in first-language experience, or other factors. Although some deaf readers attain high degrees of skill, the average deaf student gains only one third of a grade equivalent each school year, and deaf students on average have a fourth-grade reading level at high school graduation; research indicates that this has been the case since the early twentieth century (Gallaudet Research Institute, 2002; Holt, 1993; Wolk & Allen, 1984). Despite 100 years of research and interventions, no approaches to reading instruction with deaf readers have been developed that have resulted in consistently high reading levels.

To fully comprehend English sentences, readers (including deaf readers) must undertake morphosyntactic processing. Those morphosyntactic processes reveal aspects of lexical meaning, such as tense and aspect for verb interpretation, as well as revealing how words in sentences relate to one another. Morphosyntactic cues will often be supplemented by other kinds of cues (e.g., visual and semantic context, animacy, etc.), but some sentences can be successfully interpreted only after detailed syntactic computations have been undertaken (Altmann & Steedman, 1988; Ferreira, 2003; Nicol & Swinney, 1989; Tanenhaus, Spivey-Knowlton, Eberhard, & Sedivy, 1995; Zurif & Swinney, 1995). For example, syntactic cues must be analyzed to establish appropriate thematic relationships in reversible passives, such as Example (1a), whose meaning contrasts with the active-voice Example (1b):

-

(1a)

The girl was chased by the boy.

-

(1b)

The girl chased the boy.

Adult native speakers of English respond robustly to these morphosyntactic cues during online interpretation (Gilboy, Sopena, Clifton, & Frazier, 1995; MacDonald, Pearlmutter, & Seidenberg, 1994; Traxler, Pickering, & Clifton, 1998; see Pickering & van Gompel, 2006; Traxler, 2011, 2012, for reviews). Syntactic parsing theories differ with respect to the details of syntactic analysis, but nearly all of them subscribe to two general tenets: (1) Recovering sentence meaning involves syntactic computations, and (2) comprehenders eventually construct or recover a single description of the syntax of a sentence.

Only a handful of studies have attempted to determine whether difficulties in reading comprehension among deaf readers result from disruptions in syntactic processing (Kelly, 1996, 2003; Lillo-Martin, Hanson, & Smith, 1992; Miller, 2000, 2010; Robbins & Hatcher, 1981). These studies have produced conflicting results. Both Kelly (2003) and Lillo-Martin et al. (1992) found that deaf ASL–English bilinguals showed patterns of comprehending English relative-clause sentences similar to those of hearing native English speakers, whether they were skilled or poor readers. These authors suggested that reading comprehension difficulties are related to low levels of processing automaticity (Kelly, 2003) or a phonological processing deficit (Lillo-Martin et al., 1992). By contrast, Miller (2010) compared performance on phonological and orthographic awareness and sentence interpretation tasks by Israeli deaf and hearing readers, and concluded that reading deficits among Israeli deaf students are a product of syntactic processing and not phonological decoding. In his study, deaf participants were compared with controls on a phonological awareness task and a sentence comprehension task involving sentences of differing complexity and plausibility. The results suggested that, by college age, reading comprehension outcomes did not correlate with scores on the phonological awareness task. Adult deaf readers were less accurate than hearing readers on the sentence comprehension task, especially for sentences expressing implausible outcomes. Although syntactic complexity varied across the set of sentences, the relationship between syntactic complexity and comprehension outcomes was not reported. Furthermore, no processing-time data were reported.

In the present study, we investigated how deaf readers respond to syntactic structure cues as they read English sentences. Prior studies have often conflated changes in sentence structure with changes in lexical content, thus making it difficult to disentangle the independent contributions of the multiple sources of meaning in sentence understanding (e.g., Miller, 2010). In the present study, we manipulated structural complexity without changing lexical content. This is particularly important in investigating sentence processing in a population that is heterogeneous in language experience and that may exhibit processing differences in multiple domains (phonological, lexical, and syntactic). Our study is unique in measuring the time course of comprehension in order to gain insights to parsing. This study was designed to begin to answer two very basic questions: (1) Does syntactic complexity affect deaf readers in the same way that it affects hearing readers? (2) Do deaf readers respond to helpful semantic cues that supplement syntactic structure cues? Answering these two questions can help us begin to develop an account of the online parsing processes that deaf readers undertake while reading sentences in English.

The influence of syntactic complexity and semantic cues in hearing readers

Psycholinguistic research on parsing processes in hearing readers has relied heavily on chronometric (reaction time) methods. One such line of research has focused on the comparison between sentences that are more complex syntactically versus those that are less complex (in light of representational assumptions that are supported by linguistic analysis; Gordon, Hendrick, & Johnson, 2001; King & Just, 1991; MacWhinney & Pleh, 1988; Mak, Vonk, & Schriefers, 2002; Traxler et al., 2002; Wanner & Maratsos, 1978). Several studies in this line have focused on the contrast of sentences with subject-relative clauses [e.g., Example (2a)] and object-relative clauses [e.g., Example (2b)]:

-

(2a)

The lawyer that phoned the banker filed a lawsuit. (subject relative)

-

(2b)

The lawyer that the banker phoned filed a lawsuit. (object relative)

In (2a), the subject of the sentence (lawyer) also serves as the subject of the relative clause that (trace) phoned the banker, as in the lawyer phoned the banker.Footnote 2 In (2b), the subject of the sentence serves as the direct object of the verb inside the relative clause that the banker phoned (trace), as in the banker phoned the lawyer.

Generally, sentences with object-relative clauses take longer to read than sentences with subject-relative clauses, with difficulty manifesting during reading of the relative clause and continuing during reading of the main verb.Footnote 3 Processing accounts variously attribute this difficulty to the dual grammatical function of the subject noun in object relatives (Keenan & Comrie, 1977; parallel function hypothesis); changes in the reader’s perspective at various points in the sentence (MacWhinney & Pleh, 1988); the potential for confusion between the different role-players in the sentence (Gordon et al., 2001); the number of discourse referents that intervene between a head and the trace, or other factors that may increase working memory load (Gibson, 1998; Wanner & Maratsos, 1978; but see Traxler et al., 2012; Traxler et al., 2005); or a general tendency to treat the subjects of sentences as subjects of embedded clauses (Traxler et al., 2002).Footnote 4

The difficulty of object-relative clauses can be reduced when helpful semantic cues are available to the reader (Mak et al., 2002; Traxler et al., 2002; Traxler et al., 2005). In particular, object relatives are processed almost as quickly as subject relatives when the subject of the sentence is inanimate and the noun within the relative clause is animate, as in Example (3a):

-

(3a)

The pistol that the cowboy dropped remained in the saloon.

When the subject of the sentence is animate and the noun within the relative clause is inanimate, as in Example (3b), the sentence is much more difficult to process:

-

(3b)

The cowboy that the pistol injured remained in the saloon.

In subject relatives, such as Examples (3c) and (3d), the positions of animate and inanimate nouns have little or no effect on processing difficulty:

-

(3c)

The pistol that injured the cowboy remained in the saloon.

-

(3d)

The cowboy that dropped the pistol remained in the saloon.

Reducing the semantic confusability of the critical nouns does not, by itself, eliminate processing difficulty that attaches to object-relative clauses. If it did, (3a) and (3b) would be equally difficult to process. For the same reason, integration across intervening discourse elements does not provide a complete explanation for the object-relative penalty.

Given the multiple factors involved in object- and subject-relative clause interpretations, it remains an open question whether deaf readers respond to sentences with subject- or object-relative clauses in the same way that hearing readers do. One goal of the present study was to determine whether deaf readers experience the object-relative penalty and, if so, whether the penalty decreases when helpful semantic cues are present.

Experiments investigating syntactic complexity have also assessed comprehenders’ responses to passive-voice and active-voice sentences such as those in (1a) and (1b) (Christianson, Williams, Zacks, & Ferreira, 2006; Ferreira, 2003):

-

(1a)

The girl was chased by the boy.

-

(1b)

The girl chased the boy.

Some linguistic analyses treat passive-voice sentences as being essentially equivalent to adjectives (e.g., The girl was tall; Townsend & Bever, 2001). Other analyses treat them as examples of extraction and movement (Chomsky, 1981, 1995; see also Jackendoff, 2002). If so, passive-voice sentences like (1a) entail more complex representations and potentially more complicated processes than do active-voice sentences like (1b). For example, Jackendoff (p. 47) described a generative account under which passive voice involves an extracted element [girl in (1a)], a trace located at a gap site [between chased and boy in (1a)]. The passive voice is comprehended via a derivational process, involving the application of transformations. These transformations build a mental representation in which the surface element girl ends up in its canonical location, immediately adjacent to and following chased. On this account, Example (1b) does not entail such covert transformations, and should impose a lower processing load.

Comprehension of passive-voice sentences is more susceptible to impairment by brain damage (Grodzinsky, 1986; Zurif, Swinney, Prather, Solomon, & Bushell, 1993). Furthermore, interpretations based on fully specified syntactic structure representations are more likely to be overruled by lexical–semantic features in passives than in actives (Ferreira, 2003). As a result, readers are more likely to assign an incorrect interpretation to The mouse was eaten by the cheese than The cheese ate the mouse.

We do not have much information about how deaf readers interpret passive-voice sentences (Quigley, 1982). Power and Quigley (1973) showed deaf middle school and high school students index cards with a written English sentence. Participants were asked to demonstrate the meanings of sentences, such as “The car was pushed by the tractor,” using toys to represent the subject and object. Even the oldest age group, 17–18 year olds, completed only three or four of the six trials correctly. In agent-deleted passives, such as “The car was pushed,” participants completed only a third of the trials correctly, on average. Performance on a production task was poorer than on the comprehension task. In this case, participants were shown a picture and then asked to fill in a blank from a set of provided words. The 17- to 18-year-olds completed only two or three of six trials correctly. Quigley and colleagues, who systematically investigated a range of syntactic structures, concluded that deaf school-aged children are not sensitive to many syntactic cues, but rely instead on a general assumption that English sentences follow SVO word order (Quigley, 1982; Quigley, Wilbur, Power, Montanelli, & Steinkamp, 1976). This poses a particular problem for passives, since the order of the subject and object are reversed. Power and Quigley reported that students who were most successful in their study appeared to rely on the presence of the word “by” to identify the structure as a passive (p. 9). This word was more consistently included in the production task than were the appropriate auxiliary and past tense form of the main verb, and comprehension performance was below chance on agent-deleted passives, suggesting that the students did not detect the passive when the word “by” was not present as a cue. Note that these investigators did not assess language proficiency in ASL or English, nor did they report the hearing status of the deaf students’ parents. Thus, there is no way to know what proportion of the students had been exposed to a first language in early childhood.

The present study comprised two experiments assessing deaf readers’ responses to two sentence types. In the first experiment, we tested responses to sentences containing subject- and object-relative clauses. In the second, we tested responses to active-voice and passive-voice sentences. If deaf readers make early syntactic commitments similar to those of hearing readers, object relatives should take longer to read than subject relatives. If deaf readers are sensitive to semantic cues to sentence structure, the object-relative penalty would be reduced when the subject of the sentence was inanimate and the noun within the relative clause was animate. If deaf readers interpret passives via a transformation-like process of affiliating the subject of the sentence with a gap located after the main verb, they should take longer to read passives than to read active-voice sentences.

Experiment 1

In Experiment 1, we assessed deaf readers’ responses to sentences like those in Examples (3a)–(3d):

-

Object Relative, Inanimate Subject

-

(3a) The pistol that the cowboy dropped remained in the saloon.

-

Object Relative, Animate Subject

-

(3b) The cowboy that the pistol injured remained in the saloon.

-

Subject Relative, Inanimate Subject

-

(3c) The pistol that injured the cowboy remained in the saloon.

-

Subject Relative, Animate Subject

-

(3d) The cowboy that dropped the pistol remained in the saloon.

If deaf participants respond like hearing, native English readers do, then sentences containing object-relative clauses [(3a) and (3b)] should be read slower than sentences containing subject relatives [(3c) and (3d)]. Furthermore, if deaf readers are sensitive to semantic cues, the object-relative disadvantage should be reduced when the sentence has an inanimate subject [e.g., (3a)].

Method

Participants

Three groups of participants were recruited: deaf readers (deaf), monolingual hearing English speakers (English), and hearing bilingual readers whose first language was not English (bilingual). A total of 68 participants were included in the deaf group, 31 in the English group, and 34 in the bilingual group. The deaf participants were recruited from the Sacramento, San Francisco, and Los Angeles regions through community contacts and community service centers for the deaf. The present experiments were conducted as part of a larger project investigating language processing as a function of age of exposure to ASL. In the deaf group, 22 deaf readers were classified as native signers if they had begun to learn ASL from birth. These readers’ median age was 34 years old (range: 22–45). Another 24 early signers had learned ASL before puberty, but not from birth (median: 35, range 21–65). The 22 late signers had learned ASL after puberty (median age: 47, range 27–69).

The hearing monolingual and bilingual readers were recruited from the UC Davis Psychology Department participant pool. The bilingual participants all had English as their second language and had a wide variety of first languages. Their self-reported English proficiency averaged 3.0 on a scale from 1 (poor) to 7 (native proficiency), with a range of 1–6. They averaged 66 % correct on the Nelson–Denny vocabulary test (range 24 % to 100 % correct). The monolingual English group provided us with a baseline. The bilingual group resembled the deaf group, in that they were reading the experimental sentences in their second language. Hence, the bilingual group would provide an indication whether differences in performance between the deaf and English groups were due to language status (first vs. second language) or hearing status.

Stimuli

The stimuli consisted of 28 quadruplets of sentences similar to those in Examples (3a)–(3d). These stimuli were the same as those used in previous experiments with hearing readers (Traxler et al., 2002; Traxler et al., 2005).

Each participant also read 24 sentences from Experiment 2, as well as 50 filler sentences with a variety of simple sentence structures (active-voice sentences, active intransitives, etc.). One version from each quadruplet was assigned to one of four lists of items using a Latin square design. Each participant saw only one version of each item, and every participant read equal numbers of items from each of the four subtypes. Each participant read a total of 102 sentences: 28 sentences from the present experiment, 24 from Experiment 2, and 50 filler items (Appendix).

Apparatus and procedure

Participants read each test sentence one word at a time. The participants were instructed to read at a normal, comfortable pace in a manner that would enable them to answer comprehension questions.Footnote 5 Sentences were presented with a self-paced moving window procedure using a PC running custom software. Each trial began with a series of dashes on the computer screen in place of the letters in the words. Any punctuation marks appeared in their exact position throughout the trial. The first press of the spacebar replaced the first set of dashes with the first word in the sentence. With subsequent spacebar presses, the next set of dashes were replaced by the next word, and the preceding word was replaced by dashes. Yes-or-no questions followed 45 of the sentences, and participants did not receive feedback on their answers. The computer recorded the time from when a word was first displayed until the next press of the spacebar.

Analyses

We analyzed reading times for the relative-clause region (the three words between the word “that” and the main verb of the sentence) and the main-verb region (the main verb of the sentence) using hierarchical linear modeling (Blozis & Traxler, 2007; Raudenbush & Bryk, 2002; Traxler et al., 2005). Separate models were run for each scoring region. At the first level of the model, reading times were considered as a function of clause type (subject vs. object relative), animacy (the subject of the sentence was inanimate or animate), and the interaction of clause type and animacy. Group (deaf vs. English vs. bilingual) was entered into the second level of the model. At this level, parameter slopes and error terms were allowed to vary freely between individuals.Footnote 6 The Level 1 and Level 2 parameters were estimated simultaneously.

Results and discussion

Comprehension question results

Comprehension questions were asked after a subset of the sentences in the experiment, with most questions being presented after filler sentences. Due to the sparse data, it was not possible to compare comprehension outcomes across the different conditions. A multilevel model testing for between-group accuracy differences (deaf vs. English vs. bilingual) showed that the English group had the highest accuracy, at 89 % [significantly higher than the deaf group, at 72.3 %; t(130) = 6.52, SE = 021, p < .001]. The bilingual group (84 %) also had higher accuracy than the deaf group [t(130) = 3.24, SE = .024, p < .01]. A multilevel model testing for between-group effects that divided the deaf readers into three subgroups (native, early, and late signers) showed that the native signer group had greater accuracy than did the early [81 % (range: 51 %–97 %) vs. 68 % (range: 47 %–90 %); t(128) = 2.66, SE = .037, p < .01] and late [68 % (range: 46 %–96 %); t(128) = 2.52, SE = .040, p = .01] signer groups, and lower accuracy than the English group [t(128) = 2.71, SE = .026, p < .01], but that they did not differ from the bilingual group [t(128) < 1, SE = 0.028, n.s.].

Reading time results

Table 1 shows the mean self-paced reading times and standard errors for the relative-clause and main-verb scoring regions by condition for Experiment 1.

Relative-clause region

Multilevel models for the relative-clause region suggested that baseline reading time (in the inanimate, subject-relative condition) was greater in the deaf group than in the English group [significant by participants, with t 1(130) = 2.30, SE = 90.5, p < .05; but not by items, t 2(25) = 0.55, SE = 90.2, n.s.]. The analyses also produced an animacy by sentence type interaction, indicating that sentences with animate subjects and object-relative clauses were harder to process than the other three sentence types [t 1(130) = 1.83, SE = 27.8, p = .06; t 2(25) = 2.13, SE = 50.3, p < .05]. Follow-up analyses conducted separately for the deaf, English, and bilingual groups showed a significant interaction of animacy and clause type in the deaf group [t 1(67) = 2.43, SE = 49.1, p < .05; t 2(27) = 2.36, SE = 82.2, p < .05] but not the bilingual group [t 1(33) = 1.06, n.s.; t 2(33) < 1] or the English group [all ts < 1, n.s.].

Main-verb region

Models of data from the main-verb region also produced an interaction of animacy and sentence type [t 1(130) = 5.26, SE = 17.4, p < .001; t 2(25) = 2.44, SE = 42.5, p < .05]. Follow-up analyses conducted separately for the deaf, English, and bilingual groups showed a significant interaction of animacy and clause type in all three groups [deaf, t 1(67) = 2.66, p = .01; t 2(27) = 3.83, p < .001; English, t 1(30) = 2.90, SE = 29.2, p < .01; t 2(27) = 2.87, SE = 25.7, p < .01; bilingual, t 1(33) = 3.11, SE = 31.8, p < .01; t 2(27) = 1.95, SE = 65.1, p = .06].

Individual-differences analysis for deaf readers

A further set of multilevel models were conducted to assess group differences between native, early, and late signers. To assess these differences, group (native vs. early vs. late signer) was added as a categorical variable at the second level of a two-level model. The same text variables from the preceding analyses were entered at Level 1. Cross-level interactions indicate that Level 1 parameters differ significantly across the three groups.

These multilevel models indicated that baseline reading time (reading time in subject relatives with inanimate subjects) differed across the three groups in both the relative-clause and main-verb regions. Native signers read the relative clauses at a faster rate than early signers [1,306 vs. 1,622 ms, t(65) = 2.06, p < .05], and late signers [1,306 ms vs. 1,748 ms, t(65) = 2.24, p < .05]. Similar results occurred in the main-verb region [native vs. early, 424 vs. 513 ms, t(65) = 1.75, p = .09; native vs. late, 424 vs. 593 ms, t(65) = 3.50, p < .001]. Group (native vs. early vs. late) did not interact with clause type (object vs. subject relative). Similarly, group did not moderate the size of the clause type by animacy interaction. Thus, the results do not indicate that the three groups responded differently to the clause type and animacy manipulations.

The comprehension data indicate that the deaf group comprehended the sentences to a lesser degree than the English or bilingual group. Comprehension in the native signer group, however, was comparable to the bilingual group, and higher than the other two groups of deaf participants. A cross-language transfer hypothesis, according to which English sentences are comprehended by mapping them to ASL equivalents could account for the difference between the deaf reader subgroups. Such an account would appeal to differences in the quality of ASL representations. One possibility is that native signers have more robust and more finely differentiated conceptual representations. Another possibility is that English-to-ASL mapping processes are more robust and reliable in native signers. Alternatively, it is possible that these comprehension differences reflect different degrees of mastery of English syntax. However, such an account would predict differences in sensitivity to English morphosyntactic cues across the native, early, and late signers. If such differences were present, they did not lead to different patterns of reading time results in the three groups.

For the reading time results, multilevel models in which participant group (deaf vs. English vs. bilingual) was included as a predictor did not produce cross-level interactions (the exception: one model indicated an overall reading speed difference between the deaf and English groups). Thus, no strong evidence emerged that patterns of processing time differed between the three groups. All groups showed the commonly found interaction of animacy and sentence type. All three also experienced more difficulty processing object relatives with animate subjects, as compared with the other three sentence types.

The absence of the animacy-by-clause type interaction in the relative-clause region for the hearing readers is a departure from previous findings (e.g., Traxler et al., 2002; Traxler et al., 2005). Given the robust nature of this interaction in English readers and cross-linguistically, one plausible possibility is a Type II error.

The three subgroups of deaf readers responded to the syntactic and semantic manipulations very much like hearing readers do. Previous studies have established that English object relatives are relatively difficult to process. Previous studies have also shown that semantic cues can reduce the object-relative penalty (in English and other western European languages). Specifically, having an inanimate sentence-subject and an animate relative-clause subject greatly reduces processing load. This pattern occurred to about the same degree in all three groups of deaf readers, indicated by the significant clause type by animacy interaction in the multilevel models in combination with the absence of by-group interactions (native vs. early vs. late signers). Overall, the results indicate that deaf readers undertake similar parsing processes to hearing readers to interpret subject and object-relative clauses. Deaf readers respond differently to different relative-clause types, mediated by the specific semantic properties of the nouns in the sentence. This result contradicts prior accounts suggesting that deaf readers are insensitive to English syntax or that they ignore word order as a cue to syntactic structure.

Experiment 2

Experiment 2 assessed deaf and hearing readers’ response to active-voice and passive-voice sentences. We measured participants’ reading times on the main verb and the following noun phrase. These are two areas where influences of syntactic complexity would be expected to emerge. In the test sentences used here, the main verb codes for two argument slots, but the thematic roles assigned to the pre-verbal and post-verbal arguments differ. In the active voice, the preverbal argument also serves as a thematic agent, whereas in the passive voice, that same constituent would serve as thematic patient. The unusual ordering of thematic roles could induce greater load during processing of the main verb. Similarly, the postverbal argument in the passive-voice sentences would constitute a thematic agent occupying a position more normally occupied by a patient, theme, or experiencer. Assigning the postverbal argument an agent thematic role could require additional syntactic computations (relative to those undertaken for the active-voice sentences; see, e.g., Jackendoff, 2002). However, this assumes that prior syntactic cues (the presence of an auxiliary verb, the preposition by) have not sufficiently prepared the participants to cope with unusual ordering of thematic roles by the time they encounter the postverbal noun phrase. If participants predicted a passive after encountering the auxiliary verb was, then we might observe little or no processing difficulty in the passive-voice sentences.

Method

Participants

The participants were the same individuals who participated in Experiment 1.

Stimuli

The test sentences included 24 pairs of active- and passive-voice sentences, such as those in Examples (4a) and (4b):

-

(4a)

The farmer tricked the cowboy into selling the horse. (Active voice)

-

(4b)

The farmer was tricked by the cowboy into selling the horse. (Passive voice)

Plausibility norming

Because the lexical content of the scoring regions was kept the same between the active- and passive-voice conditions, the meanings of the two sentences differed. Although we intended the test sentences to be fully reversible, it is possible that the active-voice sentences were significantly more plausible than the passive-voice sentences. To see whether this was the case, we had 16 participants rate the plausibility of the active-voice sentences [e.g., (4a)] and active-voice paraphrases of the meanings of the passive-voice sentences (e.g., The cowboy tricked the farmer). One version of each item (either the original active-voice sentence or the paraphrase of the passive counterpart) was assigned to one of two lists of items. The critical items were randomly interspersed with three other types of items: implausible, impossible, and plausible sentences. The implausible sentences were active-voice sentences like The burglar arrested the policeman, which expressed an unlikely event. The impossible sentences were active-voice sentences like The student believes the stairwell, which expressed impossible events. The plausible sentences were included to fill out the lists to a total of 44 items and to provide a further comparison to assess the plausibility of the experimental items. The plausible sentences were active-voice sentences that expressed common activities (The mother fed the baby, The doctor treated the patient, etc.). Participants were instructed to read the sentences and to indicate, on a 1 (plausible) to 7 (impossible) scale, how likely the events expressed by the sentences were. The rating data were submitted to a one-way, repeated measures analysis of variance (condition: active-voice experimental items vs. passive-voice paraphrases vs. implausible vs. impossible vs. plausible). This analysis revealed an overall effect of condition [F(1, 15) = 39.1, MSE = 0.22, p < .0001]. Sentences like (4a) received a mean rating of 2.1 out of 7. Active-voice paraphrases of sentences like (4b) received a mean rating of 2.3. These two conditions did not differ (t < 1, n.s.). These two conditions [(4a) and (4b)] both differed from the implausible (mean rating = 5.7) and impossible (mean rating = 6.6; all ts > 15; all ps < .0001) conditions. The two experimental conditions were rated as being less plausible than the plausible norming sentences (mean rating = 1.3; all ts > 6.4, all ps < .0001). Thus, although the meanings of the experimental sentences [e.g., (4a) and (4b)] were not at ceiling in plausibility, they did not differ from each other.

Apparatus and procedure

The apparatus and procedure were identical to those of Experiment 1.

Analyses

We analyzed data from three scoring regions. The main-verb region consisted of the main verb of the sentence (e.g., tricked). The determiner consisted of the determiner following the main verb. The noun region consisted of the head of the postverbal noun phrase (e.g., cowboy). The data were analyzed using multilevel models. The first level of the model included the sentence type variable (active vs. passive). Group (deaf, English, bilingual) was entered at the second level of the model. Participant- and item-based analyses were conducted separately.

Results and discussion

Table 2 presents the mean reading times by scoring region and sentence type (active vs. passive voice).

Reading time results

Verb

Multilevel models with sentence type (active vs. passive) at Level 1 and group (deaf vs. English vs. bilingual) at Level 2 indicated a main effect of sentence type in the deaf group [passive faster than active, t 1(130) = 3.02, SE = 19.3, p < .01; t 2(23) = 2.87, SE = 11.8, p < .01]. The hearing bilingual group had longer reading times in the passive than in the active condition [significant by participants t 1(130) = 2.14, SE = 14.6, p < .05; but not by items; t 2(23) < 1, n.s.]. In the English group, reading times at the verb were numerically shorter in the passive than the active condition. As a result, the magnitude of the sentence type effect in the English group did not differ from the sentence type effect in the deaf group (both ts < 1, n.s.). However, the numerical advantage in the English group for passive voice at the verb was not statistically significant (both ts < 1, n.s.).

Determiner

At the determiner, reading times for the passive were shorter than those for the active-voice sentences for the deaf group [t 1(130) = 8.37, SE = 5.48, p < .01; t 2(23) = 3.92, SE = 10.7, p < .01]. The hearing bilingual group also had shorter reading times in the passive-voice sentences [t 1(130) = 2.77, SE = 13.0, p < .01; t 2(23) = 5.04, SE = 14.5, p < .01]. The data from the English group did not produce an effect of sentence type (active vs. passive; both ts < 1, n.s.).

Noun

The data from the noun region produced null results for the sentence type effect in all three groups of participants (all ts < 1.4, n.s.). Reading times in the active-voice sentences were longer in the bilingual group than in the deaf group [t 1(130) = 2.22, SE = 38.8, p < .05; t 2(23) = 7.29, SE = 14.1, p < .01].

Individual-differences analysis for deaf readers

The multilevel models indicated that native signers read the noun faster than early or late signers [native vs. early, 413 vs. 494 ms, t(65) = 1.97, p = .05; native vs. late, 413 vs. 502 ms, t(65) = 2.04, p < .05]. We observed a nonsignificant trend in the same direction at the verb [native vs. early, 433 vs. 501 ms, t(65) = 1.68, p < .10; native vs. late, 433 vs. 498 ms, t(65) = 1.68, p < .10].

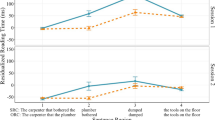

Figure 1 represents the reading time differences between the active- and passive-voice conditions for the three groups of deaf readers at the main verb. Positive values indicate that the participant read the passive condition faster than the active condition. All three groups of readers had shorter reading times in the passive- than in the active-voice conditions (differences ranging from 34 ms, in the native group, to 11 ms, in the late signer group). Although the biggest numerical effect was in the native signer group, the model failed to detect differences in the magnitudes of the sentence type effect between the three groups.

Reading times in the active-voice condition minus reading times in the passive-voice condition at the main verb in Experiment 2

Some results in the deaf and bilingual groups could reflect “spillover” effects. For the deaf group, the verb and determiner regions were read faster when they followed function words. For the bilingual group, only the determiner followed that pattern.

For all of the readers (deaf, bilingual, and English), the multilevel models did not produce cross-level interactions in any of the scoring regions. These results indicate qualitatively similar responses across the three groups of participants to the sentence type manipulation. The fact that none of the groups produced slower reading times in the passive-voice sentences was surprising. Further experiments will be required to determine whether this pattern is reliable.

General discussion

In Experiment 1, we tested deaf bilingual, hearing bilingual, and native English readers’ response to sentences containing object- and subject-relative clauses. In some of the stimuli, the animacy of the critical nouns offered potentially useful cues to sentence structure and interpretation. Deaf readers exhibited both a general object-relative penalty and a reduction in that penalty when animacy cues pointed toward the correct interpretation while reading the relative clause and the main verb. Both groups of hearing readers showed similar effects of sentence type and animacy, but those effects emerged most strongly at the main verb. These results resemble results for hearing readers from previous studies (Traxler et al., 2002; Traxler et al., 2005). The absence of clear results in the relative clause for the hearing readers may reflect a more “risky” reading strategy on their part, or an ordinary Type II error.

In Experiment 2, we tested deaf bilingual, hearing bilingual, and native English readers’ response to sentences containing active-voice and passive-voice sentences. Tests for simple effects indicated that deaf readers, but not hearing readers, processed the main verb faster in passive-voice than in active-voice sentences. However, because the multilevel models did not produce cross-level interactions based on group membership (deaf vs. hearing bilingual vs. native English), we found no strong evidence supporting qualitative differences in processing between the three groups. Deaf and hearing bilingual readers exhibited evidence of lexically based spillover effects at the determiner, as reading times were shorter in the passive than in the active condition (i.e., determiner reading times were shorter after the word by than after a semantically weighty main verb). Relatively short reading times in the deaf group at the main verb could be similarly interpreted as reflecting a spillover effect.

These results provide an initial glimpse into real-time sentence interpretation in deaf readers. These are the first studies that we know of that have used self-paced reading methods to assess sentence processing in deaf readers while controlling first-language experience (cf. Kelly, 1995, 2003). Comprehension in all three groups of signers was lower than in native-English-speaking college-aged readers. However, comprehension was indistinguishable between deaf and hearing bilinguals who had both acquired a first language from birth, and then acquired English as a second language. Furthermore, whereas comprehension performance was lower in deaf readers as a group than in the other two groups, their performance was well above chance. These results demonstrate that deaf readers comprehend English sentences while undertaking the self-paced reading task. Hence, it appears that this technique is a feasible chronometric method for testing hypotheses relating to deaf readers’ online interpretive processing. Future studies should probe readers’ comprehension more systematically, as our experience suggests that adding additional comprehension questions will not unduly tax deaf readers’ endurance. Moreover, these results underscore the importance of considering deaf readers’ first-language experience in evaluating reading ability in the second language. Prior studies have generally disregarded first-language proficiency when evaluating reading outcomes.

Future studies should also explore the extent to which knowledge of ASL grammar is activated and used by deaf readers during the interpretation of English sentences. Previous studies of hearing bilingual readers have shown cross-language transfer from first-language grammars during second-language tasks (e.g., Hartsuiker, Pickering, & Veltkamp, 2004). Furthermore, evidence indicates that deaf signers activate ASL signs when making semantic decisions about pairs of English print words (Morford, Wilkinson, Villwock, Piñar, & Kroll, 2011). English sentence processing may be affected by transfer from ASL grammar, especially for highly proficient native signers.

Finding such cross-language transfer effects is not guaranteed, however. What makes the situation with signers unique is that morphosyntactic features in ASL are signaled by visuospatial cues. Although some of the underlying syntactic relationships between words in ASL and words in English could be captured in a similar fashion (by phrase-structure or dependency diagrams), some aspects of ASL grammar (e.g., spatial verb agreement) do not have analogues in English. A detailed program of research will be required in order to determine the extent to which deaf readers activate and use ASL syntactic representations while interpreting sentences in English. Our working hypothesis is that ASL syntactic representations are activated.

Continuing to keep in mind that deaf readers are operating in a second-language context, Experiment 1 provides the first demonstration that we are aware of that the object-relative penalty can be reduced by animacy cues in bilinguals operating in their second language (but see Jackson & Roberts, 2010). We know very little about relative-clause structures and processing in ASL, except that these are among the most difficult structures to process for native signers of ASL (Boudreault & Mayberry, 2006). However, no evidence to date has indicated whether or not animacy influences relative-clause interpretation strategies in ASL. Thus, it is not yet clear whether deaf second-language learners of English transfer first-language strategies for the processing of relative clauses to second-language contexts, or whether they discover the utility of this cue solely from exposure to the second language. This state of affairs points toward the strong need for syntactic parsing studies of ASL itself. It also points toward the need for studies of English reading experience as a potential influence on the parsing of ASL.

The clause type by animacy interaction from Experiment 1 has been obtained in monolingual English (Traxler et al., 2002; Traxler et al., 2005), Dutch (Mak et al., 2002), Spanish (Betancourt, Carreiras, & Sturt, 2009), and French (Baudiffier, Caplan, Gaonac’h, & Chesnet, 2011) speakers, all of whom speak Western European languages that have similar typological roots. ASL is typologically distinct from all of these languages. Thus, the interaction of clause type and animacy may reflect something fundamental about constituent embedding and the canonical ordering of thematic roles. This conclusion is based on consistent patterns in the way that hearing readers respond across these several languages. Both subject- and object-relative clauses involve an embedding within a larger structure, which could lead to increased processing load relative to nonembedded expressions, because the contents and interpretation of a main clause must be held in abeyance, whereas the contents and interpretation of an embedded clause are worked out. The relative ease of processing object-relative clauses when the sentence has an inanimate subject and the relative clause has an animate subject indicates either that embedding in and of itself is not problematic or that the animacy cues somehow allow a comprehender to bypass potentially costly syntactic computations.

Conclusions

These experiments provide an initial snapshot of deaf readers’ online parsing and sentence comprehension performance. Deaf readers’ comprehension, on the whole, was somewhat lower than hearing readers’, although native signers performed as well as the other group of bilingual readers. Within the deaf readers, early and late signers read somewhat more slowly than native signers, and comprehended the sentences at a lower degree of accuracy. Deaf readers responded in much the same way as hearing readers to semantic cues in interpreting subject- and object-relative clauses. The differences in comprehension performance that we found across native, early, and late deaf signers illustrate the potential value of an individual-differences approach to building a theory of reading performance in deaf ASL–English bilinguals.

Notes

Hansen and Fowler (1987) found evidence that proficient college-aged deaf readers were capable of performing accurately on phonological judgment tasks, indicating some knowledge of auditory phonology. However, it is unknown whether knowledge of auditory phonology promotes reading skill among the deaf or develops as a byproduct of increases in literacy skill (Goldin-Meadow & Mayberry, 2001; Mayberry, 2010).

A trace is a hypothetical mental element that serves as a placeholder when a constituent is in an unusual position in the verbatim form of the sentence (Chomsky, 1981). See Pickering and Barry (1991) and Traxler and Pickering (1996) for an alternative syntactic analysis, under which constituents are directly linked to lexical heads, rather than being associated with traces that are linked to lexical heads.

The universality of the object-relative penalty is a current topic in sentence-processing research. Chinese and Basque are two languages that may pattern differently from English and other Western European languages (Carreiras, Duñabeitia, Vergara, de la Cruz-Paviae, & Lakae, 2010; Chen, Aihua, Hongyan, & Dunlap, 2008), although this is not yet firmly established.

Extended overviews of relative-clause processing accounts, including those appealing to effects of discourse elements that intervene between dependent elements, can be found in Mak et al. (2002), Traxler et al. (2002; Traxler et al., 2005), and Gordon et al. (2001). A full discussion of these accounts and their empirical basis is beyond the scope of this article.

For completeness, the following describes how the multilevel models were configured:

Level 1: RT for person i, item j = B 0i + B 1i (clause type) j + B 2i (animacy) j + B 3i (Clause Type × Animacy) j + e ij

Level 2: B 0i = g 00 + g 01 (English) + g 02 (bilingual) + u 0

\( \begin{array}{c}\hfill {B}_{1\mathrm{i}}={g}_{10}+{g}_{11}\left(\mathrm{English}\right)+{g}_{12}\left(\mathrm{bilingual}\right)+{u}_1\hfill \\ {}\hfill {B}_{2\mathrm{i}}={g}_{20}+{g}_{21}\left(\mathrm{English}\right)+{g}_{22}\left(\mathrm{bilingual}\right)+{u}_2\hfill \\ {}\hfill {B}_{3\mathrm{i}}={g}_{30}+{g}_{31}\left(\mathrm{English}\right)+{g}_{32}\left(\mathrm{bilingual}\right)+{u}_3\hfill \end{array} \)

B 0i , B 1i , B 2i , and B 3i represent baseline reading times (inanimate, subject-relative condition), the effect of changing from subject- to object-relative in the inanimate condition, the effect of changing from inanimate to animate in the subject-relative condition, and the effect of changing to the animate, object-relative condition, respectively. e ij represents random error in the Level 1 outcomes. g 00, g 10, g 20, and g 30 represent the mean values of the corresponding Level 1 parameters in the native signers. The other g parameters reflect deviations in the Level 1 parameters associated with membership in the English and bilingual groups. The u parameters reflect random error in the Level 2 outcomes.

Each participant had up to 28 responses. A second set of models was run in which RTs were considered as being nested within items rather than participants. For the by-items models, transpose the person and item.

References

Altmann, G. T. M., & Steedman, M. J. (1988). Interaction with context during human sentence processing. Cognition, 30, 191–238.

Baudiffier, V., Caplan, D., Gaonac’h, D., & Chesnet, D. (2011). The effect of noun animacy on the processing of unambiguous sentences: Evidence from French relative clauses. Journal of Experimental Psychology, 64, 1896–1905.

Betancort, M., Carreiras, M., & Sturt, P. (2009). The processing of subject and object relative clauses in Spanish: An eye-tracking study. Experimental Psychology, 62, 1915–1929.

Blozis, S. A., & Traxler, M. J. (2007). Analyzing individual differences in sentence processing performance using multilevel models. Behavior Research Methods, 39, 31–38.

Boudreault, P., & Mayberry, R. I. (2006). Grammatical processing in American Sign Language: Age of first-language acquisition effects in relation to syntactic structure. Language & Cognitive Processes, 21, 608–635.

Carreiras, M., Duñabeitia, J. A., Vergara, M., de la Cruz-Paviae, I., & Lakae, I. (2010). Subject relative clauses are not universally easier to process: Evidence from Basque. Cognition, 115, 79–92.

Chen, B., Aihua, N., Hongyan, B., & Dunlap, S. (2008). Chinese subject-relative clauses are more difficulty to process than the object-relative clauses. Acta Psychologica, 129, 61–65.

Chomsky, N. (1981). Lectures on government and binding. Dordrecht, The Netherlands: Kluwer.

Chomsky, N. (1995). The minimalist program. Cambridge, MA: MIT Press.

Christianson, K., Williams, C. C., Zacks, R. T., & Ferreira, F. (2006). Younger and older adults’ “good-enough” interpretations of garden-path sentences. Discourse Processes, 42, 205–238. doi:10.1207/s15326950dp4202_6

Coltheart, M., Rastle, K., Perry, C., Langdon, R., & Ziegler, J. (2001). DRC: A dual route cascaded model of visual word recognition and reading aloud. Psychological Review, 108, 204–256. doi:10.1037/0033-295X.108.1.204

Ferreira, F. (2003). The misinterpretation of noncanonical sentences. Cognitive Psychology, 47, 164–203.

Gallaudet Research Institute. (2002). Regional and national summary report of data from the 2000–2001 Annual Survey of Deaf and Hard of Hearing Children and Youth. Washington, DC: GRI, Gallaudet University.

Gibson, E. (1998). Linguistic complexity: Locality of syntactic dependencies. Cognition, 68, 1–76.

Gilboy, E., Sopena, J. M., Clifton, C., Jr., & Frazier, L. (1995). Argument structure and association preferences in Spanish and English complex NPs. Cognition, 54, 131–167.

Goldin-Meadow, S., & Mayberry, R. I. (2001). How do profoundly deaf children learn to read? Learning Disabilities Research and Practice, 16, 222–229.

Gordon, P. C., Hendrick, R., & Johnson, M. (2001). Memory interference during language processing. Journal of Experimental Psychology: Learning, Memory, and Cognition, 27, 1411–1423.

Gough, P. B., Hoover, W. A., & Peterson, C. L. (1996). Some observations on a simple view of reading. In C. Cornoldi & J. V. Oakhill (Eds.), Reading comprehension difficulties: Processes and intervention (pp. 1–13). Mahwah NJ: Erlbaum.

Grodzinsky, Y. (1986). Language deficits and the theory of syntax. Brain and Language, 27, 135–159.

Hansen, V. L., & Fowler, C. A. (1987). Phonological coding in word reading: Evidence from hearing and deaf readers. Memory & Cognition, 15, 199–207.

Harm, M. W., & Seidenberg, M. S. (2004). Computing the meanings of words in reading: Cooperative division of labor between visual and phonological processes. Psychological Review, 111, 662–720. doi:10.1037/0033-295X.111.3.662

Hartsuiker, R. J., Pickering, M. J., & Veltkamp, E. (2004). Is syntax separate or shared between languages? Cross-linguistic syntactic priming in Spanish–English bilinguals. Psychological Science, 15, 409–414. doi:10.1111/j.0956-7976.2004.00693.x

Holt, J. (1993). Stanford Achievement Test, 8th ed.: Reading comprehension subgroup results. American Annals of the Deaf, 138, 172–175.

Jackendoff, R. (2002). Foundations of language. Oxford, UK: Oxford University.

Jackson, C. N., & Roberts, L. (2010). Animacy affects the processing of subject–object ambiguities in the second language: Evidence from self-paced reading with German second language learners of Dutch. Applied PsychoLinguistics, 31, 671–691.

Keenan, E., & Comrie, B. (1977). Noun phrase accessibility and Universal Grammar. Linguistic Inquiry, 8, 63–99.

Kelly, L. P. (1995). Processing of bottom-up and top-down information by skilled and average deaf readers and implications for whole language instruction. Exceptional Children, 61, 318–334.

Kelly, L. P. (1996). The interaction of syntactic competence and vocabulary during reading by deaf students. Journal of Deaf Studies and Deaf Education, 1, 75–90.

Kelly, L. P. (2003). The importance of processing automaticity and temporary storage capacity to the differences in comprehension between skilled and less skilled college-age deaf readers. Journal of Deaf Studies and Deaf Education, 8, 230–249.

King, J. W., & Just, M. A. (1991). Individual differences in syntactic parsing: The role of working memory. Journal of Memory and Language, 30, 580–602.

Klima, E. S., & Bellugi, U. (1978). Poetry and song in a language without sound. Cognition, 4, 45–97.

Lillo-Martin, D. C., Hanson, V. L., & Smith, S. T. (1992). Deaf readers’ comprehension of relative clause structures. Applied PsychoLinguistics, 13, 13–30. doi:10.1017/S0142716400005403

Luckner, J. L., Sebald, A. M., Cooney, J., Young, J., & Goodwin Muir, S. (2005). An examination of the evidence-based literacy research in deaf education. American Annals of the Deaf, 150, 443–456.

MacDonald, M. C., Pearlmutter, N. J., & Seidenberg, M. S. (1994). Lexical nature of syntactic ambiguity resolution. Psychological Review, 101, 676–703. doi:10.1037/0033-295X.101.4.676

MacWhinney, B., & Pleh, C. (1988). The processing of restrictive relative clauses in Hungarian. Cognition, 29, 95–141.

Mak, W. M., Vonk, W., & Schriefers, H. (2002). The influence of animacy on relative clause processing. Journal of Memory and Language, 47, 50–68.

Mayberry, R. I. (2010). Early language acquisition and adult language ability: What sign language reveals about the critical period for language. In P. Nathan, M. Marschark, & P. E. Spencer (Eds.), The Oxford handbook of deaf studies, language, and education (Vol. 2, pp. 281–291). Oxford, UK: Oxford University Press.

Miller, P. F. (2000). Syntactic and semantic processing in Hebrew readers with prelingual deafness. American Annals of the Deaf, 145, 436–448.

Miller, P. (2010). Phonological, orthographic, and syntactic awareness and their relation to reading comprehension in prelingually deaf individuals: What can we learn from skilled readers? Journal of Developmental and Physical Disabilities, 22, 549–580.

Morford, J. P., Wilkinson, E., Villwock, A., Piñar, P., & Kroll, J. F. (2011). When deaf signers read English: Do written words activate their sign translations? Cognition, 118, 286–292.

Musselman, C. (2000). How do children who can’t hear learn to read an alphabetic script? A review of the literature on reading and deafness. Journal of Deaf Studies and Deaf Education, 5, 9–31.

Nicol, J., & Swinney, D. (1989). The role of structure in coreference assignment during sentence comprehension. Journal of Psycholinguistic Research, 18, 5–19.

Padden, C., & Humphries, T. (1988). Deaf in America: Voices from a culture. Cambridge, MA: Harvard University Press.

Perfetti, C., & Sandak, R. (2000). Reading optimally builds on spoken language: Implications for deaf readers. Journal of Deaf Studies and Deaf Education, 5, 32–50.

Pickering, M. J., & Barry, G. (1991). Sentence processing without empty categories. Language & Cognitive Processes, 6, 229–259.

Pickering, M. J., & van Gompel, R. P. G. (2006). Syntactic parsing. In M. J. Traxler & M. A. Gernsbacher (Eds.), The handbook of psycholinguistics (2nd ed., pp. 455–504). Amsterdam, The Netherlands: Elsevier.

Power, D., & Quigley, S. (1973). Deaf children’s acquisition of the passive voice. Journal of Speech and Hearing Research, 16, 5–11.

Quigley, S. P. (1982). Reading achievement and special reading materials. Volta Review, 84, 95–106.

Quigley, S., Wilbur, R., Power, D., Montanelli, D., & Steinkamp, M. (1976). Syntactic structures in the language of deaf children. Urbana, IL: Institute for Child Behavior and Development.

Raudenbush, S. W., & Bryk, A. S. (2002). Hierarchical linear models: Applications and data analysis methods (2nd ed.). Newbury Park, CA: Sage.

Robbins, N. L., & Hatcher, C. W. (1981). The effects of syntax on the reading comprehension of hearing impaired children. Volta Review, 83, 105–115.

Schick, B., & Moeller, M. P. (1992). What is learnable in manually coded English sign systems? Applied PsychoLinguistics, 13, 313–340.

Schirmer, B. R., & McGough, S. M. (2005). Teaching reading to children who are deaf: Do the conclusions of the national reading panel apply? Review of Educational Research, 75, 83–117.

Stokoe, W. C. (1980). Sign language structure. Annual Review of Anthropology, 9, 365–390.

Tanenhaus, M. K., Spivey-Knowlton, M. J., Eberhard, K. M., & Sedivy, J. C. (1995). Integration of visual and linguistic information in spoken language comprehension. Science, 268, 1632–1634. doi:10.1126/science.7777863

Townsend, D. J., & Bever, T. G. (2001). Sentence comprehension: The integration of habits and rules. Cambridge, MA: MIT Press.

Traxler, M. J. (2011). Parsing. Wiley Interdisciplinary Reviews: Cognitive Science, 2, 353–364.

Traxler, M. J. (2012). Introduction to psycholinguistics: Understanding language science. Boston, MA: Wiley-Blackwell.

Traxler, M. J., Johns, C. L., Long, D. L., Zirnstein, M., Tooley, K. M., & Jonathan, E. (2012). Individual differences in eye-movements during reading: Working memory and speed-of-processing effects. Journal of Eye Movement Research, 5(1):5, 1–16.

Traxler, M. J., Morris, R. K., & Seely, R. E. (2002). Processing subject and object-relative clauses: Evidence from eye-movements. Journal of Memory and Language, 47, 69–90.

Traxler, M. J., & Pickering, M. J. (1996). Plausibility and the processing of unbounded dependencies: An eye-tracking study. Journal of Memory and Language, 35, 454–475.

Traxler, M. J., Pickering, M. J., & Clifton, C., Jr. (1998). Adjunct attachment is not a form of lexical ambiguity resolution. Journal of Memory and Language, 39, 558–592.

Traxler, M. J., Williams, R. S., Blozis, S. A., & Morris, R. K. (2005). Working memory, animacy, and verb class in the processing of relative clauses. Journal of Memory and Language, 53, 204–224.

Wanner, E., & Maratsos, M. (1978). An ATN approach to comprehension. In M. Halle, J. Bresnan, & G. A. Miller (Eds.), Linguistic theory and psychological reality. Cambridge, MA: MIT Press.

Wolk, S., & Allen, T. E. (1984). A 5-year follow-up of reading-comprehension achievement of hearing-impaired students in special education programs. Journal of Special Education, 18, 161–176.

Zurif, E., & Swinney, D. (1995). The neuropsychology of sentence comprehension. In M. A. Gernsbacher (Ed.), Handbook of psycholinguistics. San Diego, CA: Academic Press.

Zurif, E., Swinney, D., Prather, P., Solomon, J., & Bushell, C. (1993). An on-line analysis of syntactic processing in Wernicke’s aphasia. Brain and Language, 45, 448–464.

Author Note

The authors thank three anonymous reviewers for helpful comments on previous drafts. They also thank all of the participants in the study. This research was supported by awards from the National Science Foundation (Grant No. 1024003) and the National Institutes of Health (Grant No. R01HD073948) to the first author. This research was also supported by the National Science Foundation Science of Learning Center Program, under Cooperative Agreement Nos. SBE-0541953 and SBE-1041725. Any opinions, findings, and conclusions or recommendations expressed are those of the authors and do not necessarily reflect the views of the National Institutes of Health or the National Science Foundation.

Author information

Authors and Affiliations

Consortia

Corresponding author

Appendix: Experimental items

Appendix: Experimental items

Experiment 1: Subject and object relatives with animate and inanimate subjects

The musician that witnessed the accident angered the policeman a lot.

The musician that the accident terrified angered the policeman a lot.

The accident that terrified the musician angered the policeman a lot.

The accident that the musician witnessed angered the policeman a lot.

The contestant that misplaced the prize made a big impression on Mary.

The contestant that the prize delighted made a big impression on Mary.

The prize that delighted the contestant made a big impression on Mary.

The prize that the contestant misplaced made a big impression on Mary.

The cowboy that carried the pistol was known to be unreliable.

The cowboy that the pistol injured was known to be unreliable.

The pistol that injured the cowboy was known to be unreliable.

The pistol that the cowboy carried was known to be unreliable.

The scientist that studied the climate did not interest the reporter.

The scientist that the climate annoyed did not interest the reporter.

The climate that annoyed the scientist did not interest the reporter.

The climate that the scientist studied did not interest the reporter.

The director that watched the movie received a prize at the film festival.

The director that the movie pleased received a prize at the film festival.

The movie that pleased the director received a prize at the film festival.

The movie that the director watched received a prize at the film festival.

The student that attended the school was visited by the governor.

The student that the school educated was visited by the governor.

The school that educated the student was visited by the governor.

The school that the student attended was visited by the governor.

The teacher that watched the play upset a few of the students.

The teacher that the play angered upset a few of the students.

The play that angered the teacher upset a few of the students.

The play that the teacher watched upset a few of the students.

The woman that reported the accident caused a number of serious injuries.

The woman that the accident bothered caused a number of serious injuries.

The accident that bothered the woman caused a number of serious injuries.

The accident that the woman reported caused a number of serious injuries.

The plumber that dropped the wrench was found near the back door.

The plumber that the wrench bruised was found near the back door.

The wrench that bruised the plumber was found near the back door.

The wrench that the plumber dropped was found near the back door.

The banker that refused the loan created a problem for the mayor.

The banker that the loan worried created a problem for the mayor.

The loan that worried the banker created a problem for the mayor.

The loan that the banker refused created a problem for the mayor.

The lawyer that reviewed the trial was covered by the national media.

The lawyer that the trial confused was covered by the national media.

The trial that confused the lawyer was covered by the national media.

The trial that the lawyer reviewed was covered by the national media.

The psychologist that printed the notes got lost somewhere in the basement.

The psychologist that the notes annoyed got lost somewhere in the basement.

The notes that annoyed the psychologist got lost somewhere in the basement.

The notes that the psychologist printed got lost somewhere in the basement.

The child that loaded the revolver injured the teenage babysitter.

The child that the revolver scared injured the teenage babysitter.

The revolver that scared the child injured the teenage babysitter.

The revolver that the child loaded injured the teenage babysitter.

The golfer that mastered the game was ignored by most sports writers.

The golfer that the game excited was ignored by most sports writers.

The game that excited the golfer was ignored by most sports writers.

The game that the golfer mastered was ignored by most sports writers.

The salesman that examined the product was mentioned in the newsletter.

The salesman that the product excited was mentioned in the newsletter.

The product that excited the salesman was mentioned in the newsletter.

The product that the salesman examined was mentioned in the newsletter.

The fireman that fought the fire caused only a small amount of damage.

The fireman that the fire burned caused only a small amount of damage.

The fire that burned the fireman caused only a small amount of damage.

The fire that the fireman fought caused only a small amount of damage.

The fish that attacked the lure impressed the fisherman quite a lot.

The fish that the lure attracted impressed the fisherman quite a lot.

The lure that attracted the fish impressed the fisherman quite a lot.

The lure that the fish attacked impressed the fisherman quite a lot.

The farmer that purchased the tractor arrived at the store late last night.

The farmer that the tractor impressed arrived at the store late last night.

The tractor that impressed the farmer arrived at the store late last night.

The tractor that the farmer purchased arrived at the store late last night.

The gardener that trimmed the plants helped make the house more attractive.

The gardener that the plants pleased helped make the house more attractive.

The plants that pleased the gardener helped make the house more attractive.

The plants that the gardener trimmed helped make the house more attractive.

The pilot that crashed the plane was grounded by the safety board.

The pilot that the plane worried was grounded by the safety board.

The plane that worried the pilot was grounded by the safety board.

The plane that the pilot crashed was grounded by the safety board.

The elephant that drank the water was located in the heart of Africa.

The elephant that the water cooled was located in the heart of Africa.

The water that cooled the elephant was located in the heart of Africa.

The water that the elephant drank was located in the heart of Africa.

The actor that rehearsed the play was given first prize at the awards dinner.

The actor that the play delighted was given first prize at the awards dinner.

The play that delighted the actor was given first prize at the awards dinner.

The play that the actor rehearsed was given first prize at the awards dinner.

The student that practiced the instrument had been around for a few months.

The student that the instrument frustrated had been around for a few months.

The instrument that frustrated the student had been around for a few months.

The instrument that the student practiced had been around for a few months.

The spy that encoded the message was smuggled out of the country in a crate.

The spy that the message alarmed was smuggled out of the country in a crate.

The message that alarmed the spy was smuggled out of the country in a crate.

The message that the spy encoded was smuggled out of the country in a crate.

Experiment 2: Actives and passives

The policeman found the lost child at the airport.

The policeman was found by the lost child at the airport.

The farmer tricked the cowboy into selling the horse.

The farmer was tricked by the cowboy into selling the horse.

The basketball player helped the coach to put away the equipment.

The basketball player was helped by the coach to put away the equipment.

The teacher criticized the principal before the school board meeting.

The teacher was criticized by the principal before the school board meeting.

The professor admired the students in the biology class.

The professor was admired by the students in the biology class.

The lion found the zebras near the watering hole.

The lion was found by the zebras near the watering hole.

The baker hired the woman to help out with the wedding.

The baker was hired by the woman to help out with the wedding.

The painter recruited the model after the art show.

The painter was recruited by the model after the art show.

The accountant visited the banker before the audit.

The accountant was visited by the banker before the audit.

The mechanic phoned the customer after the car was repaired.

The mechanic was phoned by the customer after the car was repaired.

The old lady ran over the drunk last Saturday night.

The old lady was run over by the drunk last Saturday night.

The interpreter confused the diplomat during the treaty negotiations.

The interpreter was confused by the diplomat during the treaty negotiations.

The scientist frightened the assistant during the thunderstorm.

The scientist was frightened by the assistant during the thunderstorm.

The tourist photographed the tour guide in front of the museum.

The tourist was photographed by the tour guide in front of the museum.

The comedian liked the agent with the shiny black shoes.

The comedian was liked by the agent with the shiny black shoes.

The judge smiled at the defense attorney before the trial started.

The judge was smiled at by the defense attorney before the trial started.

The cheerleader asked the football player for his phone number.

The cheerleader was asked by the football player for her phone number.

Two ducks approached the old woman who had a bag of bread crumbs.

Two ducks were approached by the old woman who had a bag of bread crumbs.

The pilot saluted the ground crew before the plane took off.

The pilot was saluted by the ground crew before the plane took off.

The salesman amused the customers at the used car dealership.

The salesman was amused by the customers at the used car dealership.

The mayor approached the councilman about the new library.

The mayor was approached by the councilman about the new library.

The coal miner pushed the bartender and the people at the bar laughed.

The coal miner was pushed by the bartender and the people at the bar laughed.

The neighbors upset the college students living next door.

The neighbors were upset by the college students living next door.

The child upset the nurse at the clinic this morning.

The child was upset by the nurse at the clinic this morning.

Rights and permissions

About this article

Cite this article

Traxler, M.J., Corina, D.P., Morford, J.P. et al. Deaf readers’ response to syntactic complexity: Evidence from self-paced reading. Mem Cogn 42, 97–111 (2014). https://doi.org/10.3758/s13421-013-0346-1

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13421-013-0346-1