Abstract

As people learn a new skill, performance changes along two fundamental dimensions: Responses become progressively faster and more accurate. In cognitive psychology, these facets of improvement have typically been addressed by separate classes of theories. Reductions in response time (RT) have usually been addressed by theories of skill acquisition, whereas increases in accuracy have been explained by associative learning theories. To date, relatively little work has examined how changes in RT relate to changes in response accuracy, and whether these changes can be accounted for quantitatively within a single theoretical framework. The current work examines joint changes in accuracy and RT in a probabilistic category learning task. We report a model-based analysis of changes in the shapes of RT distributions for different category responses at the level of individual stimuli over the course of learning. We show that changes in performance are determined solely by changes in the quality of information entering the decision process. We then develop a new model that combines an associative learning front end with a sequential sampling model of the decision process, showing that the model provides a good account of all aspects of the learning data. We conclude by discussing potential extensions of the model and future directions for theoretical development that are opened up by our findings.

Similar content being viewed by others

The effect of learning on performance is twofold. As people become more proficient with a task, their responses become faster and more accurate. Traditionally, changes in these two facets of performance have been addressed by different classes of theories. On the one hand, theories of practice and automaticity have focused on reductions in response time (RT) that occur over the course of learning (e.g., Logan, 1988, 2002). On the other hand, associative learning theories have addressed changes in choice behavior with experience (e.g., Rescorla & Wagner, 1972). Perhaps surprisingly, there is relatively little theoretical work bridging these classes of theories and attempting to explain changes in choice behavior and RT simultaneously. It is therefore an open question whether the changes in RT that arise during learning can be accounted for by associative learning processes that have explained changes in choice behavior. In this article, we investigate whether an error-driven associative learning mechanism can characterize changes in choice-RT data from a simple probabilistic category learning task. We apply Ratcliff’s (1978; Ratcliff & McKoon, 2008) diffusion model to choice-RT data spanning the entire course of learning—covering rapid early changes in performance through to stable asymptotic performance once cue–outcome contingencies have been learned. We show that learning-related changes in choice behavior and the shapes of RT distribution data can be described solely in terms of changes in the quality of information driving the decision process. Conceptually, we identify changes in the quality of information with learned changes in relative associative strength linking representations of cues to different category outcomes. In this article, we develop a new formal model that combines elements of associative learning models of categorization with evidence accumulation models of decision-making. We show that this new integrated model—a synthesis of Kruschke’s (1992) ALCOVE model of category learning and Ratcliff’s (1978) diffusion decision model—provides a close account of the data from our task, and that the quality of the fit is on par with that of a flexible implementation of the diffusion model.

Two approaches to studying learning

Our theoretical starting point is the instance theory of automaticity developed by Logan (1988). The instance theory provides a detailed account of the speed-ups in performance that occur during learning (i.e., the ubiquitous practice effect; Heathcote, Brown, & Mewhort, 2000; Newell & Rosenbloom, 1981). According to instance theory, reductions in RT during learning are due to a shift from responding being controlled by a relatively slow algorithmic process to a more efficient process that is based on the retrieval of instances—and response-relevant information associated with those instances—from memory. Because instances are assumed to race independently to be retrieved, the speed of retrieval-based responding increases as more instances are accumulated in memory through repeated encounters with task-relevant stimuli (Townsend & Ashby, 1983). Logan (1988) showed that his original theory provided a good account of practice effects on mean RT in a variety of task settings. Subsequently, it was shown that the instance theory could also account for changes in the shapes of more detailed RT distribution data as a function of practice (Logan, 1992).

An important property of Logan’s (1988, 2002) instance theory is its close theoretical relationship with exemplar-based theories of categorization (e.g., Medin & Schaffer, 1978; Nosofsky, 1986). According to exemplar theories, people assign stimuli to categories on the basis of their similarity to previously encountered exemplars. Nosofsky and Palmeri (1997a, 2015) combined exemplar-based stimulus representation with the memory retrieval assumptions of Logan’s instance theory to develop the exemplar-based random walk (EBRW) model of speeded classification. In the EBRW model, presentation of a stimulus triggers a race among exemplars in memory to be retrieved. Exemplars race at a rate that is determined by their similarity to the presented item, with similar exemplars being retrieved at a faster rate than dissimilar exemplars. Once retrieved, the category label associated with the retrieved exemplar is used to drive a random walk decision process, which accumulates relative evidence about category membership to a response boundary. When a sufficient quantity of evidence has been accumulated, the corresponding category response is made. The EBRW model extended instance theory by providing a detailed account of how interitem similarity affects classification RTs as well as choice probabilities. Like instance theory, the EBRW model explains speed-ups in RT in terms of the accumulation of exemplars in memory. As learning progresses, people have access to a larger pool of exemplars to retrieve, which naturally produces faster RTs as learning progresses. Palmeri (1997, 1999) showed that the EBRW model provided an excellent account of changes in mean RTs that occurred during the development of automaticity in perceptual classification.

A key ingredient to the success of the EBRW model and instance theory is their ability to explain changes in performance via learning. Both the EBRW model and instance theory view learning as the accumulation of exemplars in memory. The assumption that exemplars race to be retrieved naturally accounts for practice effects in RT. To account for changes in categorization decisions during learning, pure exemplar-storage accounts typically need to include additional assumptions, such as the presence of “background” elements in memory that add noise to the decision process (e.g., Nosofsky, Kruschke, & McKinley, 1992). Nosofsky and Alfonso-Reese (1999) showed that by allowing retrieval of background noise elements—which become less influential over the course of learning as more exemplars are accumulated—the EBRW model was able to account for variation in both accuracy and mean RT changes during perceptual category learning. Investigation of this pure exemplar-storage view of learning is, however, somewhat limited, as relatively few studies have examined learning-related changes in accuracy in this way.

An alternative to the pure exemplar-storage view of learning is that of incremental adjustment of associations between cues and outcomes.Footnote 1 Formal models of the associative learning process have a long history in cognitive psychology (e.g., Bush & Mosteller, 1951; Estes, 1950), with the error-driven model of Rescorla and Wagner (1972) being the most prominent example. Le Pelley (2004) provided a historical overview of some of the major theoretical frameworks, noting their relative strengths and limitations. In the domain of categorization, associative learning theories are perhaps most readily identified with Kruschke’s (1992) influential ALCOVE model, which combines the exemplar-based representational assumptions of Nosofsky’s (1986) generalized context model (GCM) with an error-driven mechanism for learning both associations between exemplars and category outcomes as well as attention weights that affect computation of interitem similarity. According to association-based models of category learning, corrective feedback encountered during learning drives changes in the network of associations relating exemplars in memory to different category outcomes with the goal of minimizing prediction error. Because association weights are adjusted incrementally on a trial-by-trial basis, these models provide a natural explanation for why choice probabilities change over the course of learning. Indeed, an important benchmark for evaluating category learning models has been their ability to account for the relative rates at which category structures of differing complexity can be learned (Kruschke, 1992; Love, Medin, & Gureckis, 2004; Nosofsky, Gluck, Palmeri, McKinley, & Gauthier, 1994). Although error-driven learning is unlikely to be the only mechanism that drives changes in categorization performance (Bott, Hoffman, & Murphy, 2007; Kurtz, Levering, Stanton, Romero, & Morris, 2013), the principle has proved remarkably successful, forming the backbone of many formal models (Kruschke, 2008). Given that exemplar-retrieval theories and associative learning theories based on the ALCOVE framework share common representational assumptions (see Logan, 2002, for formal details of the relationships among specific models), it is perhaps surprising that learning models have not been rigorously tested against RT data. There are, however, practical challenges that have limited use of RT data to evaluate learning models. Chief among them, as discussed by Maddox, Ashby, and Gottlob (1998), is the problem of collecting enough observations to obtain stable estimates of RT during the early stages of learning, as performance tends to improve rapidly when people receive corrective feedback. Consequently, studies investigating categorization RTs have often focused on asymptotic performance after the relationships between stimuli and category outcomes have been learned. Given the lack of detailed RT data during the learning process itself, it is somewhat unclear whether error-driven learning mechanisms can account for changes in RTs that might arise during this time. A complete account of category learning performance requires a model that can, at minimum, (1) produce appropriate learning curves that reflect changes in the rates at which different stimuli are assigned to different category outcomes, (2) characterize changes in the time course of decisions resulting in assignment of each stimulus to different category outcomes, and (3) show that the changes in the rates of different categorization responses are commensurate with the changes in RT for those responses during learning.

The discussion so far has identified two theoretical approaches to studying the effects of learning on performance, one based on exemplar-retrieval theories (e.g., instance theory and the EBRW model) and another based on associative learning theories (e.g., ALCOVE). Because the different approaches emphasize different facets of performance, their limitations are complementary: Exemplar-retrieval perspectives tend to emphasize changes in RT more so than accuracy, whereas associative learning perspectives tend to emphasize changes in accuracy over RT. The goal of the current article is to address these limitations by establishing whether an error-driven learning process can simultaneously account for changes in choice probabilities as well as detailed RT distribution data over the course of learning, and whether relating these two facets of performance requires additional theoretical assumptions. In doing so, we seek to strengthen the existing theoretical connections between these frameworks. We structure the rest of the article as follows. First, we introduce a probabilistic category learning task that permits collection of detailed RT distribution data at the level of individual stimuli over the entire course of learning, overcoming the principal challenge to studying RT dynamics during early learning. We argue that probabilistic learning environments are ideal for testing associative learning models against RT data because they produce patterns of behavior that happen to impose strong constraints on models of choice RT. We then describe the diffusion model of Ratcliff (1978; Ratcliff & McKoon, 2008), discussing how a key parameter of the model—namely, the drift rate of the diffusion process—can be linked conceptually with associations relating cues to different category outcomes. We then briefly review recent work that has used choice-RT models to investigate learning dynamics. After presenting our experiment and summarizing the main empirical results, we conduct a diffusion model analysis of our data to determine the extent to which changes in associative strength are responsible for driving changes in categorization performance during learning. We then develop a model that uses an ALCOVE-inspired associative learning model as a front end to drive a diffusion decision model, and test this integrated model against our data. We conclude the article by discussing directions for future research and possible theoretical extensions of the new model.

Probabilistic category learning

In probabilistic category learning tasks, the mapping relating cues to different category outcomes is not deterministic, meaning that the same stimulus, presented on different trials, will not always be assigned to the same category outcome. This endows the task with several desirable properties for studying learning. First, the consistency of the feedback for each individual stimulus is under strict experimental control. For any number of individual stimuli, the probability with which they are assigned to different category outcomes is determined by the experimenter. This means that both the modal category outcome as well as the consistency of the feedback can be varied on an item-by-item basis. If the stimuli are highly discriminable and nonconfusable with one another, then changes in the way people respond to individual stimuli can be unambiguously attributed to the information provided by trial-by-trial feedback. In this way, probabilistic categorization provides an ideal setting for studying the underlying learning process. The second important property about probabilistic categorization tasks is that participants tend to respond in a way that deviates from what would be expected from an optimal response policy. Historically, people’s performance has been described in terms of probability matching, where cues are assigned to different category outcomes at a rate that approximates the relative probabilities of the different category outcomes. For example, if a cue is paired with Category A feedback on 80% of trials and Category B feedback on the remaining 20% of trials, people will tend to assign the cue to Category A 80% of the time and to Category B 20% of the time. This can be contrasted with an optimal maximizing strategy. Under maximizing, a stimulus is always assigned to Category A if the probability of Category A feedback is greater than 0.5; otherwise, the stimulus is assigned to Category B. In reality, neither probability matching nor maximizing provides a completely accurate picture of performance, as people tend to “overshoot” the feedback probabilities while not strictly maximizing either (e.g., Friedman & Massaro, 1998; Nosofsky & Stanton, 2005; Shanks, Tunney, & McCarthy, 2002). The tendency to overshoot, but not maximize, persists even in highly practiced individuals (Edwards, 1961; Sewell et al., 2018); learners are expected to assign stimuli to multiple category outcomes across all stages of learning. The variability in people’s responding permits collection of RT distribution data for both frequently and infrequently reinforced category outcome responses for different stimuli. This imposes strong constraints on models, which must account for the changes in both the proportion of each kind of categorization response and how the shapes of the underlying RT distributions for those responses change with learning (cf. Ratcliff & Rouder, 1998).

A further benefit of using a probabilistic category learning task is that it allows the same logical task structure to be repeatedly presented to the learner. This overcomes the practical challenge of measuring early leaning RTs identified by Maddox et al. (1998), as it enables collection of a large number of observations at each stage of learning, permitting stable measurement of detailed RT distribution data. For concreteness, consider a set of four highly discriminable cues (e.g., red, yellow, green, and blue color patches; see Fig. 1). Each cue is paired with a unique feedback probability, which determines the relative frequency with which the cue is paired with a Category A or Category B outcome during learning. In tasks using a small number of nonconfusable cues, performance typically stabilizes after each cue has been presented around 30–40 times (e.g., Craig, Lewandowsky, & Little, 2011; Sewell et al., 2018). Because learning proceeds rapidly, it is possible for people to complete multiple runs through the learning task within a single experimental session. Each run through the task comprises a fixed number of trials—enough to ensure that learning is achieved and performance stabilizes—where the mapping between cues and outcomes is randomly determined. Across different runs, the mapping between cues and outcomes can be rerandomized, or an entirely new set of perceptual cues can be introduced. To avoid interference effects, participants are explicitly informed when each run begins and ends. Participants are therefore aware of when new cue–outcome contingencies need to be learned and previously learned information is no longer applicable (cf. Craig et al., 2011; Kruschke, 1996). By repeatedly resetting the learning environment in this way, participants are forced to learn the mappings between cues and outcomes anew for each run. Combining observations across runs result in a large number of observations at the level of individual stimuli during each stage of learning. These data can be used to measure changes in the shapes of RT distributions for different category responses. We adopt this method of repeated task presentation in the current study.

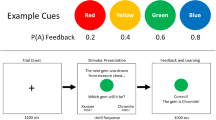

Illustration of the randomized mapping between discrete cues and feedback probabilities across different runs of a learning experiment. For each run of the task, a set of Category A feedback probabilities are randomly assigned to a set of cues. The feedback probability determines how frequently a cue will be paired with Category A feedback during learning. Because each run involves the same set of feedback probabilities, the logical structure of the learning environment is repeatedly presented. However, learning must begin anew in each run because different perceptual cues appear in each run. This allows data from different runs to be combined, permitting analysis of RT distribution data at the level of individual stimuli, defined by their feedback probability, in each learning block

Diffusion model

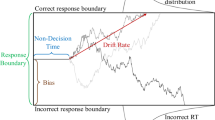

The diffusion model (Ratcliff, 1978; Ratcliff & McKoon, 2008) is a member of the class of sequential sampling models of two-choice decision-making and is among the most rigorously tested in cognitive psychology (Ratcliff & Smith, 2004; Sewell & Smith, 2016). The model conceptualizes decision-making as a noisy evidence accumulation process and decomposes empirical RTs into two components: One that reflects the time course of decision-making and another that summarizes the time required for other processes not involved in decision-making (e.g., stimulus encoding and response execution). According to the model, decisions are made by repeatedly sampling stimulus information and accumulating that information through time. Evidence accumulation begins at some point z, which is situated between two decision boundaries, located at a and 0. Each sample provides some quantity of evidence favoring one of the response alternatives over the other, moving the accumulated evidence total toward one of the two decision boundaries. Once a sufficient quantity of evidence has been accumulated and the process reaches a decision boundary, the corresponding behavioral response is initiated. Figure 2 provides a schematic illustration of the model.

Schematic illustration of the diffusion model. Empirical RTs are the sum of two independent components. The first summarizes the time course of the decision process; the second summarizes the time course of processes not related to decision-making (e.g., stimulus encoding and response execution). Decisions are the product of noisy accumulation of stimulus information. The rate of evidence accumulation is controlled by the drift rate of the diffusion process, which reflects the quality of information entering the decision process. The time course of decision-making is jointly determined by the drift rate and the evidence threshold set by the individual. Higher drift rates lead to faster and more accurate decisions, whereas a higher decision threshold results in slower but more accurate decisions. The time course of other processing stages not related to decision-making is summarized by the nondecision time parameter

In the diffusion model, the time course of decision-making is determined jointly by the quality of the information provided by the stimulus, which controls the rate of evidence accumulation, and the decision threshold, which determines how much evidence is required before a choice is made. The former is reflected in the drift rate of the diffusion process (v), which indexes the quality of information driving decision-making. When the drift rate is high, decisions will be faster and more accurate; when the drift rate is low, decisions will be slower and more prone to error. The decision threshold is controlled by the boundary separation parameter (a). High values reflect cautious decision-making, resulting in slow but accurate responses; low values reflect a greater emphasis on response speed, resulting in faster responses but more errors. The time course of encoding and response processes not related to decision-making is summarized by the nondecision time parameter (Ter). The diffusion model allows drift rate and nondecision time to vary on a trial-by-trial basis, reflecting the presence of noise in the processes involved in stimulus representation (η, capturing drift variability) and variability in the efficiency of encoding and response processes (st, capturing nondecision time variability; see Ratcliff, 2013, for further discussion about parameter variability across trials).

The diffusion model has accounted for a wide range of data at the level of choice probabilities and the shapes of RT distributions for correct and error responses (see Ratcliff, Smith, Brown, & McKoon, 2016, for a recent review). Due to its success in accounting for data at this fine-grained level of analysis, the model is often used in a measurement capacity as a meeting point between theory and data. Diffusion model analysis permits identification of experimental factors that affect specific aspects of the decision process. Typically, differences in drift rates are of most theoretical interest, as these reflect differences in the quality of information used to make decisions across experimental conditions. While a pure diffusion model analysis suffices to identify which aspects of the decision process differ across conditions, it does not explain how those differences came to be. Ideally, the changes in drift rates—or any other parameters that are required to vary across conditions in order to account for data—would be explained by a psychological theory of the representational processes that support decision-making. With respect to probabilistic category learning, we are interested in how association-based representations support category learning, and how learning processes that modify these associations incrementally adjust the drift rates that determine choice behavior. The EBRW model of Nosofsky and Palmeri (1997a, 2015) is one way of relating category representations to a decision process, which relies on the race model assumptions that define memory retrieval in Logan’s (1988) instance theory to explain changes in RT. Here, we investigate a complementary theoretical approach that explores the adequacy of using learned association-based representations to produce changes in both choice and RT data simultaneously. A key question is whether speed-ups in RT can also be explained in terms of changes in associative strength. Showing that an associative framework can account for combined choice-RT data sets the stage for future research to more directly compare association-based theories with exemplar-retrieval theories.

Relating learning and decision-making with the diffusion model

In the context of category learning theories (e.g., Kruschke, 1992; Love et al., 2004), relative support for different category responses is indexed by the strength of associations relating the stimulus to different category outcomes. Conceptually, drift rates in the diffusion model index the same kind of information (i.e., relative support for different decision outcomes, given the stimulus), and so it is straightforward to identify changes in drift rates in learning tasks with changes in relative associative strength. The idea that associative learning enhances drift rates has found support from several studies that have used the diffusion model to investigate learning effects. For example, Petrov, Van Horn, and Ratcliff (2011) observed systematic increases in drift rates across trial blocks in a perceptual learning task, reflecting improvements in people’s ability to extract information from a stimulus. Liu and Watanabe (2012) reported similar practice-related changes in a motion discrimination task. Analogous increases in drift rates have been observed in other perceptual discrimination tasks involving brightness and letter stimuli in both older and younger adults (Ratcliff, Thapar, & McKoon, 2006). Practice-related increases in drift rates have also been observed in higher order cognitive tasks, such as lexical decision (Dutilh, Vandekerckhove, Tuerlinckx, & Wagenmakers, 2009), and have been shown to reflect both task-general and stimulus-specific components (Dutilh, Krypotos, & Wagenmakers, 2011; Petrov et al., 2011). Stimulus-specific effects on drift rates are of particular relevance, as these are consistent with learned strengthening of associations between stimulus-representations and response outcomes.

Recent work by Frank and colleagues has provided a more detailed investigation of trial-by-trial learning effects on decision-making. Much of this work has used a reward learning paradigm that is different from probabilistic category learning, but shares many important characteristics. In their probabilistic selection task (Frank, Seeberger, & O’Reilly, 2004), participants are presented with pairs of stimuli that each have unique probabilities of being associated with a reward. On each trial, participants must choose one of the two presented stimuli and are rewarded according to the probability associated with the chosen stimulus. The task is well suited for examining choice under uncertainty. Depending on the stimuli presented on a given trial, decisions can vary in terms of whether a reward is more or less likely (e.g., by presenting two stimuli with reward probabilities both greater than or less than 0.5), and the level of conflict created by the choice alternatives (e.g., when both stimuli have similar reward probabilities, conflict is higher than when the presented stimuli have divergent reward probabilities). Ratcliff and Frank (2012) investigated the relationship between a neurally inspired reinforcement learning model (Frank, 2005, 2006) and the diffusion model via simulation. They found that data simulated by the learning model could be accommodated by a version of the diffusion model that allowed time-dependent decision boundaries for high-conflict trials as well as one that introduced a delayed decision onset for the high conflict trials with an unlikely reward outcome.

Pedersen, Frank, and Biele (2017) extended the work of Ratcliff and Frank (2012) by combining a reinforcement learning model with the diffusion model to account for changes in choice behavior of adults with attention-deficit/hyperactivity disorder in the probabilistic selection task. Pedersen et al. showed that a model that incorporated collapsing decision boundaries across trials as well as differential learning rates for correct and error trials was needed to completely account for performance of participants on and off medication. Taken together, the results of Pedersen et al. and Ratcliff and Frank highlight how the theoretical assumptions of learning models are quite compatible with decision models such as the diffusion model. A limitation of those studies, though, is that the nature of the experimental designs precluded a detailed examination of the changes in RT distribution data over the course of learning. We seek to overcome this limitation via repeated presentation of the logical task structure.

More closely related to the current work is a recent study by Frank et al. (2015), who used a probabilistic reward learning task involving presentation of only a single stimulus per trial. In this task, people learned to select a rewarded response alternative for each of three unique stimuli via trial-and-error learning. Frank et al. applied the diffusion model to the trial-by-trial learning data covering 40 presentations of each stimulus. Drift rates were related to the difference in expected value for choosing each response alternative, given the stimulus. Model parameters were further constrained by trial-by-trial electroencephalogram (EEG) and functional magnetic resonance imaging (fMRI) data. Frank et al. showed that combining a reinforcement learning model with the diffusion model produced good fits to the learning data, and that trial-by-trial variation in decision threshold could be predicted by variation in both EEG and fMRI signals.

The recent work reviewed above shows that changes in choice behavior can be explained in terms of systematic changes in diffusion model parameters as a function of learning. There is particularly strong support for increases in drift rate as a function of practice (e.g., Dutilh et al., 2009; Liu & Watanabe, 2012; Ratcliff et al., 2006). Importantly, these learning effects can at least partially be attributed to stimulus-specific learning effects, which imply a role for associative learning mechanisms (e.g., Dutilh et al., 2011; Petrov et al., 2011). More recent analyses of trial-by-trial learning performance have further shown that error-driven reinforcement learning models provide a good process account for how drift rates evolve over the course of learning (Frank et al., 2015; Pedersen, et al., 2017). However, changes in performance may not be solely driven by changes in drift rates, as reduced decision thresholds have often been observed during learning (Dutilh et al., 2011; Dutilh et al., 2009; Liu & Watanabe, 2012; Pedersen et al., 2017; Petrov et al., 2011; Ratcliff & Frank, 2012). The relative contributions of changes in drift rates and decision thresholds to changes in learning performance can only be clearly identified via detailed model-based analysis of changes in choice-RT distribution data.

Overview of the current study

In the current study, we seek to determine the extent to which changes in categorization performance are driven by learning-related changes in drift rates, and whether changes in other decision parameters are needed to account for the data. To ensure that sufficient observations are collected to reliably estimate RT distributions for different response alternatives for stimuli in each learning block, we have participants complete multiple sessions of a probabilistic category learning task. Each session of the experiment presents three different runs through the learning task (i.e., the same set of feedback probabilities are used, but the perceptual cues that define the stimuli differ across each run within a testing session).

To identify which aspects of the decision process are affected by learning, we conduct a diffusion model analysis of the complete set of choice-RT data. We report nested model comparisons to identify which parameters of the diffusion model are required to vary across stimuli and learning blocks in order to account for the data. After identifying the version of the diffusion model that provides the best balance between fit and parsimony, we develop a new model that combines a front-end associative learning model that uses a standard error-driven learning rule to adjust association weights relating cues to different category outcomes. We show that relating associative strengths to drift rates using an adaptation of Luce’s (1959) choice rule successfully produces changes in drift rates that allow the model to account for learning data. We show that the fit of this integrated category learning model is comparable with that of the best performing diffusion model, and is simpler in terms of the number of freely estimated parameters. The success of the model shows that changes in drift rates required to account for detailed choice-RT distribution data in a simple probabilistic learning task can be predicted by a standard error-driven associative learning rule. The model provides a complete account of the data from this task, and given its relationship to established exemplar models of category learning, can potentially be extended to more complex tasks involving multidimensional stimuli with variable interitem similarity.

Materials and methods

Participants

Six participants (five female) from the University of Melbourne were recruited for the experiment. One participant was excluded from the study after the first session, due to failing to understand the task and responding randomly. The final sample comprised five females between the ages of 19 and 30 years (M = 24.4, SD = 3.97), each of whom completed six sessions of testing. Each session lasted approximately 40 minutes. Participants were remunerated A$12 per session.

Apparatus

The experiment was programmed in MATLAB, using the Psychophysics Toolbox (Brainard, 1997; Pelli, 1997), and was run on a Windows PC. Responses were collected using a Cedrus RB-540 response box.

Design and stimuli

Each participant completed six sessions of a probabilistic category learning task. Separate sessions were scheduled on different days, at the convenience of the participants. Each session was divided into three separate runs. The runs were functionally identical copies of a five-block learning task. Runs differed in terms of the perceptual cues that defined the stimuli, thereby avoiding interference from prior learning, and requiring cue–outcome contingencies to be learned anew. Within each run, people had to learn the probabilistic associations between four discrete-valued stimuli and two category outcomes via trial-by-trial feedback. The Category A outcome probabilities were 0.20, 0.40, 0.60, and 0.80. The three stimulus sets used in the experiment are shown in Fig. 1 and consisted of color patches (red, yellow, green, and blue), mathematical symbols (plus, minus, divide, and multiply), and card suits (hearts, clubs, diamonds, and spades). For each participant, in each session, the order in which people encountered the different stimulus sets was randomly determined. Within each run, the mapping of individual stimuli to category outcome probabilities was determined randomly. Having participants complete three runs through a short learning experiment across multiple testing sessions allowed us to aggregate data across runs and testing sessions, permitting analysis of data at the level of RT distributions for both Category A and Category B responses for each stimulus, in each of the five learning blocks. This fine-grained level of analysis is essential for developing and testing models that can address changes in choice-RT data over the course of learning.

Pilot testing was conducted to ensure that stimuli in each of the three sets were nonconfusable. This involved a series of identification studies, where participants had to identify each stimulus as quickly as possible while maintaining accuracy. Of the many stimulus sets we considered, the three shown in Fig. 1 produced comparable mean identification RTs and similar encoding latencies as indexed by the leading edge of the identification RT distributions (e.g., Ratcliff & Smith, 2010; Smith, Ratcliff, & Sewell, 2014). Given the distinctiveness of the individual stimuli, identification errors were extremely rare.

Procedure

Participants completed each session individually in a quiet testing booth. At the start of the first experimental session, participants were presented with a cover story introducing the task. Participants adopted the role of a treasure hunter exploring a cave that contained four treasure chests, identified by the different stimuli. Participants were instructed that each treasure chest contained a mixture of two types of fictitious gems (Chromite and Xanium), and on each trial, a single gem was extracted from one of the treasure chests. Participants were required to guess which type of gem was extracted on each trial. If they guessed correctly, they got to keep the gem; otherwise, it was destroyed. Participants were encouraged to make as many correct responses as possible, and were told that they could use the feedback they received to help determine which gem was more likely to be found in each treasure chest. Participants were also informed that the relationships between the stimuli and the category outcomes were randomly determined for each run and each session. Before beginning the task, participants were able to ask any questions about the task instructions.

For each session, before the start of each experimental run, participants were shown the complete set of stimuli that would be used in that run. Participants then began the category learning task. Each trial began with the presentation of a central fixation dot for 800 ms. One of the four stimuli being used in the run was then presented centrally until a category response was made. Participants responded by pressing either the left (Category A) or right (Category B) button of the response box. Immediately following a response, participants were presented with feedback indicating the correct category outcome for that trial. Feedback was presented directly beneath the stimulus and remained on-screen for a study period of 1,000 ms. To encourage timely responding on the task, trials with RTs slower than 2,000 ms resulted in additional speed feedback being presented. The speed feedback, “TOO SLOW!!,” was presented for 3,000 ms after the category outcome feedback was extinguished (i.e., the study period was always limited to 1,000 ms). Once all feedback had been presented, there was an 800 ms blank intertrial interval. The next trial began immediately afterwards. Figure 3 shows an example trial sequence.

Time course of events during an individual learning trial. All trials began with the presentation of a central fixation dot for 800 ms. The stimulus was then presented centrally along with category response options underneath. The stimulus remained on-screen until the participant made a response, after which category outcome feedback was immediately presented. Feedback appeared underneath the stimulus, reporting whether the response was correct or incorrect and the correct category outcome label. This information remained on-screen for a study period of 1,000 ms. For trials where a response was slower than 2000 ms, the feedback screen was followed by an otherwise blank screen displaying “TOO SLOW!!” for 3,000 ms. Each trial ended with an 800 ms blank intertrial interval

In each run of the experiment, participants completed five blocks of learning trials. Within each block, the four stimuli were presented 10 times each, resulting in 200 learning trials per run. Participants had the opportunity to take self-paced breaks after every 20 trials. Upon completion of the first run, participants were able to take a self-paced break before viewing the stimulus set to be used in the next run. Stimulus presentation order was determined randomly for each participant in each run of each session.

Results

For each participant, data from all six sessions were combined, resulting in a total of 3,600 trials per participant. This resulted in 180 observations per stimulus, per learning block, providing ample trials for estimating the shapes of RT distributions for both Category A and Category B responses. We present our analysis in three parts. In the first part, we present changes in response probabilities and mean RT over the course of the five learning blocks. In the second part, we report a model-based analysis of the group-averaged data using Ratcliff’s (1978; Ratcliff & McKoon, 2008) diffusion model. In the third part, we develop a model that combines error-driven associative learning principles with a sequential sampling decision mechanism, showing that the model is able to learn the pattern of drift rates identified by the diffusion model analysis, and provide an accurate and parsimonious account of the complete set of learning data.

Data screening

The diffusion model provides an account of “one-shot” decisions, where choice behavior is determined by a single-stage decision process. To avoid incorporating trials where other kinds of decision procedures may have been applied—such as more complex multi-stage decisions or responses based on fast anticipatory responses—we sought to remove trials that produced unusually fast or slow RTs. To this end, we screened out responses that had RTs faster than 200 ms, as these were likely to have been prepared prior to stimulus onset. We also removed trials that were slower than 2,000 ms, as these were trials that elicited “Too Slow” feedback during the task, and may have involved lapses of attention or application of idiosyncratic and more complex decision strategies. Out of a total of 18,000 trials, these criteria removed 2.3% of the total data set.

Empirical results

We first provide a summary of the data by analyzing group-averaged choice probability and mean RT data. These analyses serve to illustrate the major trends in the learning data, showing that our method of repeatedly testing individuals across multiple sessions produced data that are highly representative of results commonly produced by single-session probabilistic category learning studies.

Choice probability

Choice probabilities for the four stimuli—defined by the four levels of Category A feedback: 0.2, 0.4, 0.6, and 0.8—across the five learning blocks are shown in Fig. 4. The largest changes in choice probabilities occur between the first and second learning blocks, after which they remain relatively stable. Asymptotically, participants tend to assign stimuli to the different outcome categories in a way that overshoots the feedback probabilities while also not maximizing. For each stimulus, the average choice probability is more extreme than the feedback probability associated with that stimulus. This overshooting behavior is typical of responding under probabilistic feedback (e.g., Craig et al., 2011; Nosofsky & Stanton, 2005; Sewell et al., 2018; Shanks et al., 2002). Visual inspection of the individual response profiles confirmed that all participants exhibited the same probability matching behavior, responding similarly to each stimulus over the entire course of learning. This allays concerns that the pattern of responding we observe here is due to combining data from participants whose probability matched with other participants who employed a strict maximizing strategy (i.e., always responding Category A when the probability of Category A feedback was greater than 0.5, otherwise, responding Category B).

Choice probabilities, quantified as the proportion of Category A responses, averaged across participants for each learning block. Each line summarizes responding to a different stimulus, defined by its feedback probability (i.e., the proportion of trials the stimulus was paired with Category A feedback, which was either 0.2, 0.4, 0.6, or 0.8). Data for stimuli with more consistent feedback (i.e., the 0.2 and 0.8 stimuli) are plotted with circles. Data for stimuli with less consistent feedback (i.e., the 0.4 and 0.6 stimuli) are plotted with squares. Open symbols denote stimuli that were paired with Category A feedback on fewer than 50% of trials. Participants tended to overshoot the feedback probabilities, in line with existing literature. Error bars show the standard error of the mean

To provide statistical confirmation of learning, we conducted a 4 (stimulus, indexed by the four levels of Category A feedback: 0.2, 0.4, 0.6, and 0.8) × 5 (learning block) repeated-measures ANOVA on the choice probability data. The analysis revealed a significant main effect of stimulus, F(3, 12) = 117.72, MSe = .024, p < .001, ηp2 = .97, reflecting the different rates at which people assigned the four stimuli to Category A. There was also a significant interaction between stimulus and learning block, F(12, 48) = 10.05, MSe = .121, p < .001, ηp2 = .72, indicating the tendency for people to assign the 0.6 and 0.8 stimuli to Category A at an increasing rate across blocks, and to assign the 0.2 and 0.4 stimuli to Category A at a decreasing rate across blocks. The main effect of learning block was not significant, indicating the absence of any general response bias favoring one category response over another, F(4, 16) = 0.23, MSe = .04, p = .92.

Mean response time

Figure 5 shows mean RTs for each of the four stimuli across the five learning blocks. The figure shows a reduction in RT for all stimuli across learning blocks, suggesting a modest practice effect. It also appears that RTs for stimuli paired with more consistent feedback (i.e., the 0.2 and 0.8 stimuli, plotted in the figure using circles) are faster than those for stimuli with less consistent feedback (i.e., the 0.4 and 0.6 stimuli, which are plotted in the figure using squares). We investigated these differences via a 4 (stimulus, indexed by the four levels of Category A feedback: 0.2, 0.4, 0.6, and 0.8) × 5 (learning block) repeated-measures ANOVA. There was a main effect of stimulus, F(3, 12) = 5.48, MSe = .002, p = .013, ηp2 = .58, reflecting a RT advantage for stimuli with more consistent feedback. There was also a main effect of learning block, F(4, 16) = 3.86, MSe = .002, p = .022, ηp2 = .49, reflecting a 45-ms reduction in mean RT from the first learning block, M = 619 ms, to the last learning block, M = 574 ms. The interaction was not significant, F(12, 48) = 0.79, MSe < .001, p = .66.

Mean RTs averaged across participants for each learning block. Each line summarizes responding to a different stimulus, defined by its feedback probability (i.e., the proportion of trials the stimulus was paired with Category A feedback, which was either 0.2, 0.4, 0.6, or 0.8). For all stimuli, there were reductions in RT across learning blocks, consistent with practice effects. Data for stimuli with more consistent feedback (i.e., the 0.2 and 0.8 stimuli) are plotted with circles. Data for stimuli with less consistent feedback (i.e., the 0.4 and 0.6 stimuli) are plotted with squares. Open symbols denote stimuli that were paired with Category A feedback on fewer than 50% of trials. Error bars are the standard error of the mean

Summary of empirical results

Taken together, the pattern of results from our multisession probabilistic category learning experiment is consistent with results typically found in single-session studies. Participants in our study responded by overshooting the feedback probabilities, as is commonly found in the literature. Mean RTs were shown to be sensitive to how diagnostic feedback was. Stimuli with more consistent feedback (i.e., Category A feedback probabilities closer to 1 or 0) were, on average, responded to faster than stimuli with less consistent feedback. We also observed practice effects in the mean RT data. These effects, although relatively small, are striking given the high level of experience people had with the task (i.e., completion of 18 runs through a learning task involving the same set of feedback probabilities throughout). We now present more detailed model-based analyses of the choice-RT data, which seek to simultaneously characterize the changes in choice probabilities and RTs observed over the course of learning.

Diffusion model analysis of learning

The analyses reported above reveal two hallmarks of learning in our data: choice probabilities that adaptively change in light of feedback and progressively faster RTs with increasing task experience. Those traditional analyses, however, only provide limited insight into performance, as they do not address more detailed RT distribution data for different types of category responses, or how these data are affected by learning. More importantly, those analyses are not able to address whether the changes in choice probabilities are commensurate with the observed changes in RTs. That is, can a single learning mechanism jointly explain both facets of performance simultaneously? To address this, we conducted a diffusion model analysis of the learning data. The goal of this analysis is to determine whether the changes in performance we observed can be attributed to variation in a single model parameter—implying a singular mechanistic locus of learning—or if multiple parameters are required to account for changes in performance. If changes in drift rates suffice to explain learning-related changes in performance, this would strongly imply that the learning effects in our data can be attributed to learned changes in associative strengths relating cues to category outcomes.

Response time quantile data

To fit the diffusion model to the data, we summarized each individual’s RT distribution data for Category A and Category B responses for each stimulus in each learning block. Following convention, empirical RT distributions were summarized using the 0.1, 0.3, 0.5, 0.7, and 0.9 RT quantiles (Ratcliff & Smith, 2004). The individual RT distribution data were then averaged across participants to obtain quantile averaged data, which were fit by the diffusion model. Before presenting the model fits, we discuss some of the regularities in the RT distribution data and describe the method of presenting these data.

In this article, RT quantile data are shown using modified quantile probability plots (see Fig. 6; cf. Ratcliff & Smith, 2004). In these figures, RT quantiles for Category A and Category B responses are plotted against their respective choice probabilities for each stimulus in each learning block. Each panel of Fig. 6 depicts changes in choice probabilities and the shapes of the Category A and Category B RT distributions across the five learning blocks for a single stimulus. The numerical plotting symbols in each panel identify performance from the corresponding learning block. For each learning block, there are two columns of plotting symbols: one that summarizes the shape of the Category A RT distribution, and another that summarizes the shape of the Category B RT distribution. For each column of plotting symbols, moving upwards along the ordinate, the plotting symbols identify the 0.1, 0.3, 0.5, 0.7, and 0.9 RT quantiles for the relevant category response. The relative spacing between successive plotting symbols summarizes the shape of the corresponding RT distribution, reflecting how far apart successive RT quantiles are along the time axis. The position of each column along the abscissa reflects the probability of each category response within a learning block. Empirically, category responses that match the modal category outcome for each stimulus are more common than those that do not match the modal category response. To facilitate visual comparison of performance across different stimuli, we plot category responses that match the modal feedback outcome for that stimulus on the right-hand side of each panel. Category responses that do not match the modal feedback outcome for a given stimulus are plotted on the left-hand side of each panel. For example, for the P(A) = 0.2 stimulus, shown in the top left panel of Fig. 6, Category B responses are shown on the right-hand side of the figure, whereas Category A responses are shown on the left-hand side of the figure. For the P(A) = 0.8 stimulus, shown in the bottom right panel of the figure, the reverse is true. Given the probabilistic nature of feedback, responses on the right-hand and left-hand side of each panel can be viewed, in a normative sense, as corresponding to “correct” and “error” responses, respectively. For ease of communication, we use these terms to describe responses people make to different stimuli.

Modified quantile probability plot (QPP) for showing changes in the shapes of categorization RT distributions over the course of learning. Each panel in the figure shows response data for a different stimulus, as defined by its feedback probability. Data from each of the five learning blocks are indexed by the numerical plotting symbols. Responses that match the modal category outcome for each stimulus are displayed on the right-hand side of each panel, and are, in a normative sense, “correct” responses. Responses that do not match the modal category outcome for each stimulus are displayed on the left-hand side of each panel, and are, in a normative sense, “error” responses. Choice data from each block are therefore presented in two columns. The location of each column of data along the abscissa corresponds to the probability of each category responses. For example, for the P(A) = 0.8 stimulus, Category A responses in the first block are shown as the column of 1s on the right-hand side of the lower right panel in the figure. This shows that approximately 75% of responses to this stimulus, in the first learning block, were Category A responses. Category B responses in the first block are shown as the columns of 1s on the left-hand side of the same panel (i.e., approximately 25% of responses to this stimulus, in the first learning block, were Category B responses). Within each column of data, the five plotting symbols indicate, ascending upwards along the ordinate, the 0.1, 0.3, 0.5, 0.7, and 0.9 RT quantiles for the relevant category response. The relative spacing of plotting symbols within each column describes the shape of the corresponding RT distribution for the relevant category response in each learning block

One of the striking regularities in the RT quantile data is that correct responses appear consistently faster than error responses. This pattern is common in perceptual tasks that emphasize accuracy rather than speed (e.g., Luce, 1986; Swensson, 1972) and is consistent with our task instructions, which encouraged participants to maximize the proportion of correct responses. To gain a clearer picture about how stable this pattern of RT differences was, we conducted a regression analysis predicting the mean difference in RT quantiles (i.e., error RT minus correct RT) as a function of whether the stimulus was a strong or weak predictor of category outcome—that is, the P(A) = 0.2 and P(A) = 0.8 stimuli versus. the P(A) = 0.4 and P(A) = 0.6 stimuli—learning block, and RT quantile. These differences in the RT quantiles are shown in Fig. 7. The analysis showed that the RT difference for correct versus error responses was larger for strongly predictive stimuli compared with weakly predictive stimuli, β = 0.36, p < .001, increased as a function of RT quantile, β = 0.52, p < .001, but maintained a consistent size across learning blocks, β = −0.07, p = .36. These features of the RT distribution data suggest that feedback probability introduces an asymmetry in the speed with which different category responses are made, with more consistent feedback resulting in a greater RT advantage for the correct, more frequently occurring, category outcome. Differences in the shapes of the underlying RT distributions for correct and error responses are underscored by tail quantiles exhibiting a larger RT advantage for correct responses. Interestingly, the lack of any predictive effect of learning block suggests that the RT advantage for correct responses is not eliminated even in highly practiced participants, such as the ones in our study.

Group averaged difference in RTs (error RT – correct RT) for different distribution quantiles in each learning block. The two panels show the RT difference for data averaged across strongly predictive stimuli—that is,, P(A) = 0.2 and P(A) = 0.8—and weakly predictive stimuli—that is, P(A) = 0.4 and P(A) = 0.6—in the left and right panels, respectively. The difference in RTs gets larger in the tails of the RT distributions as error RT quantiles become progressively slower than the corresponding correct RT quantiles, reflected by the upward trend in the data. The effect is more pronounced for strongly predictive stimuli

Model-fitting procedure

We now report nested model comparisons to identify a version of the diffusion model that provides the best and most parsimonious account of the choice-RT data. Unless otherwise specified, for the models we tested, the values for boundary separation (a), nondecision time (Ter), and between-trial variability in drift rates (η), and nondecision time (st) were held constant across all stimuli and all learning blocks. Drift rates (v) were typically allowed to vary across different stimuli and different learning blocks in order to characterize the effects of associative learning on performance. For all models, we assumed an unbiased decision process, setting the start point of evidence accumulation to z = a/2. For each model we tested, parameters were estimated by minimizing the likelihood ratio statistic, G2, defined as

In Eq. 1, the outer summation over i indexes the 20 experimental conditions formed by factorial combination of the four stimuli across each of the five learning blocks. The inner summation over j indexes the 12 bins formed by the RT quantiles for Category A and Category B responses in each experimental condition. The p and π terms correspond respectively to the observed and predicted proportions of responses in each bin. The number of trials per condition is described by n, which was equal to 180. Model predictions were computed using the methods described by Tuerlinckx (2004).

Fixed drift rate model

To establish a baseline level of fit, we first considered a version of the diffusion model where drift rates for each of the four stimuli were fixed across the five learning blocks. Although this model cannot predict learning-related changes in performance, it is useful because it provides a way of quantifying the benefits of associative learning, which we discuss later on. To reduce the total number of free parameters that were estimated, drift rates for the two strongly predictive stimuli—that is, the P(A) = 0.2 and P(A) = 0.8 stimuli—were constrained to have equal values, but opposite signs.Footnote 2 The same restriction was applied for the two weakly predictive stimuli—that is, the P(A) = 0.4 and P(A) = 0.6 stimuli. The baseline model therefore required six free parameters, two drift rates (vStrong and vWeak), the boundary separation parameter (a), nondecision time (Ter), and between-trial variability parameters for drift rate and nondecision time (η and st, respectively). Due to the lack of any way to account for learning effects across trial blocks, the fit of the baseline model was quite poor, G2(214) = 126.03, and we do not show the predictions of this model against data.

Variable drift rate models

To examine the effects of associative learning on performance, we considered two alternatives to the baseline model that allowed drift rates to vary across learning blocks.Footnote 3 We first considered a constrained model where changes in drift rates across learning blocks for strongly and weakly predictive stimuli were controlled by separate functions, each describing an exponential approach to a limit,

In Eq. 2, the drift rate in learning block i, vi, is determined by the asymptotic drift rate, vMax, and an exponential rate parameter, c. As with the baseline model, drift rates for the two strongly predictive stimuli were constrained to have equal values, but opposite signs. The same was true for the two weakly predictive stimuli. This exponential model required two additional free parameters relative to the baseline model (i.e., two asymptotic drift rates and two exponential rate parameters replacing the two fixed drift rates of the baseline model), and provided a significantly better fit to the data, ΔG2(2) = 56.62, p < .001. In an absolute sense, the fit of the exponential model was quite good, G2(212) = 69.41, and is shown in Fig. 8. Best fitting parameter estimates for the exponential model are shown in Table 1.

Fit of the exponential model to the data. Data are the same as in Fig. 6. Diffusion model predictions are plotted as open circles connected by solid lines. Different lines connect model predictions for each of the five RT quantiles (e.g., the line that is lowest on the ordinate in the figure corresponds to the predicted 0.1 RT quantile, the line that is highest on the ordinate in the figure corresponds to the predicted 0.9 RT quantile). With drift rates changing according to an exponential function across blocks, the model is successfully able to capture all of the major trends in the data

Several comments apply to the fit of the exponential model. First, the model successfully describes the changes in choice probabilities for the four stimuli across learning blocks. In particular, the model correctly predicts relatively large changes in performance across the first two blocks, followed subsequently by only minor changes in performance across the remaining blocks. Second, the model successfully captures the changes in the shapes of RT distributions for both correct and error responses over the course of learning. Importantly, the model captures the consistent RT advantage for correct responses over errors across all distribution quantiles and all learning blocks. Although there are some deviations between model predictions and data—such as minor misses in accuracy for some of the stimuli and occasional underprediction of error RT quantiles in the first learning blockFootnote 4—the success of this relatively simple implementation of the diffusion model is notable. In our view, a successful learning model should be able to explain both relatively rapid early changes in performance that are followed by relatively stable performance. In addition, a successful model should be able to identify the point at which performance stabilizes. The exponential model is able to explain both of these facets of performance. In providing a close fit to the data, the model supports the idea that the associative learning process can be viewed as describing changes in drift rates driving decision-making.

Despite the convergence of predictions from the exponential model with the learning data, it is possible that a different set of theoretical assumptions could achieve a better fit. To get a sense of what an upper limit of good fit to our data would look like within a diffusion model framework, we also considered a more flexible implementation of the model. Unlike the exponential model, this flexible model does not impose any regularity upon block-by-block changes in drift rates. Instead, drift rates for strong and weakly predictive stimuli are freely estimated from the data for each learning block. Once again, drift rates for the strongly and weakly predictive pairs of stimuli were constrained to have equal values but opposite signs (i.e., a total of 10 drift rates were freely estimated from the data). Removing the exponential constraint on changes in drift rates resulted in an additional six free parameters being estimated from the data, compared to the exponential model. The additional flexibility—nearly doubling the number of free parameters in the model—did little to improve the quality of fit above that of the exponential model, ΔG2(6) = 5.53, p = .48. On balance then, we conclude that the fit of the exponential model provides as good a fit to the learning data as can reasonably be expected from the diffusion model when drift rate is the only parameter that can change across learning blocks. The exponential model provides a good account of all the major regularities in the data in a parsimonious way, accounting for 220 data degrees of freedom with only eight free parameters.

Alternatives to the exponential model

The success of the exponential model supports the idea that the effects of associative learning can be captured by changes in the drift rate of the diffusion model. This result is consistent with other diffusion model analyses of learning effects (e.g., Frank et al., 2015; Petrov et al., 2011; Ratcliff & Frank, 2012). We next consider whether learning selectively influences drift rates or if other decision parameters are also affected. If selective influence holds, and no other model parameters change across learning blocks, it would highlight compatibility between error-driven models of learning and the sequential sampling framework for modeling decision-making.

We therefore considered two other variations of the diffusion model that were extensions of the exponential model. The first version assumed that, in addition to exponential changes in drift rates across learning blocks, learning also resulted in changes in the nondecision time parameter, Ter. This would be tantamount to assuming increased efficiency in the encoding of stimuli—or potentially in the retrieval of specific cue–outcome associations—as a function of learning. This version of the model was identical to the exponential model, except that nondecision time was estimated on a block-by-block basis. This model failed to produce a significant improvement in fit over the exponential model, ΔG2(4) < 1, as there was little variation in the leading edge of the empirical RT distributions across blocks (i.e., the 0.1 RT quantile was quite consistent across learning blocks for all stimuli; see Fig. 8). The second model we considered was one where boundary separation, a, could change over the course of learning. The idea that people may reduce decision thresholds as they gain experience in a task was shown by Dutilh et al. (2009) in the context of practice effects in lexical decision. These authors observed consistent narrowing of boundary separation across trials (i.e., progressively lower decision thresholds). Although this would normally result in reduced accuracy, the changes in boundary separation were accompanied by increases in drift rates. The combination of these changes served to keep decision accuracy constant, while enabling faster RTs. We implemented a variable threshold model that was analogous to the variable nondecision time model considered above, estimating a unique boundary separation parameter for each learning block. Like the variable nondecision time model, though, the variable threshold model failed to produce a significant improvement in fit over the exponential model, ΔG2(4) < 1. We conclude that the only parameters that were systematically affected by learning were those that determined changes in drift rates.

Summary of diffusion model analysis

Our diffusion model analysis of the learning data strongly supports the idea that learned changes in associative strength can be successfully modeled as changes in the drift rate of the diffusion model. Specifically, we found changes in drift rates to be approximately exponential in form, which is consistent with error-driven learning algorithms that are commonly used in the category learning literature (e.g., Kruschke, 1992; Kruschke & Johansen, 1999; Love et al., 2004). During the initial learning blocks, changes in drift rates are relatively large from block to block. However, the changes become progressively smaller as learning proceeds and performance stabilizes. The analysis also showed that learning appeared to selectively influence drift rates. We failed to find any evidence that other model parameters (i.e., boundary separation or nondecision time) were affected by learning. In sum, the diffusion model analysis suggests that learning-related changes in categorization performance are driven solely by changes in drift rate. Because drift rates index relative support for competing category outcomes, their function is analogous to learned associative strengths in traditional category learning models (e.g., Kruschke, 1992; Kruschke & Johansen, 1999; Love et al., 2004). The next question is whether the changes in drift rates that are required to account for the data can be produced within an error-driven framework for modeling category learning.

An integrated model of learning and response time

In this section, we develop a category learning model that relates error-driven changes in associative strengths to changes in the drift rate driving a sequential sampling decision mechanism. The model we develop uses a standard error-driven learning rule to update changes in association weights linking cue representations to different category outcomes. We considered several variations of this model to test different assumptions about how patterns of association weights relate to drift rates. To preview, we find the relationship between learned associations and drift rates to be nonlinear, relying on an implementation of Luce’s (1959) choice rule in order to appropriately capture the dynamics of learning. Without the nonlinear scaling, the model fails to capture the rapid changes in choice probabilities that are observed in the early stages of learning, and prevents performance from stabilizing in the latter part of the task. These results dovetail with the recent analysis of Pedersen et al. (2017), who found support for a nonlinear relationship between drift rates and association strength in a reward prediction task.

Formal description of the learning model

The integrated model of learning and RTs assumes that presentation of a cue activates an exemplar-based representation of that cue in memory. Because our study involved nonconfusable discrete-valued stimuli, for simplicity, we assume that exemplar activation is achieved in an all-or-none way via a Boolean activation function. Formally, when cue i is presented, the activation of exemplar node i is set to ψi = 1; otherwise, ψi = 0. This Boolean activation function mimics the exponential similarity function of Nosofsky’s (1986) GCM and Kruschke’s (1992) ALCOVE model when exemplar specificity is high, and simplifies our model by removing a free parameter. Exemplar activation propagates forward through the exemplar network to nodes representing category-level information. The activation of category node j, ωj, is determined by the strength of the associative weight connecting exemplar node i with category node j, such that

Associations between category and exemplar nodes are updated on each trial in proportion to prediction error. We use the standard delta rule (Rescorla & Wagner, 1972), where changes in association weights are described by

where t is a teacher value determined by the feedback received on a given trial. Following Kruschke (1992), we use humble teachers, where

Equation 4 states that the change in the association weight connecting exemplar node i with category node j is proportional to the difference between the teacher value and the activation value for category node j, which quantifies prediction error. Changes in associative weights on each trial are scaled by a learning rate parameter, λ.

In many category learning models, the learning rate parameter is held constant across trials. However, in probabilistic learning environments, where it is impossible to completely eliminate prediction error, several authors have argued that people might progressively discount feedback as prediction error becomes less informative (Craig et al., 2011; Kruschke & Johansen, 1999; see also Sewell et al., 2018). Following Kruschke and Johansen (1999), we consider a version of the learning model that incorporates feedback discounting by multiplying the learning rate on trial n by a discounting factor,

where ρ is a nonnegative discounting parameter. When ρ > 0, prediction errors encountered later in the task result in smaller changes in associative weights compared with equivalent errors encountered earlier in the task.

Equations 3–6 describe how changes in associative weights are driven by prediction error. To make contact with choice-RT data, relative associative strengths in the learning model must be related to drift rates that drive decision-making. Here, we assume a diffusion decision process that is identical to Ratcliff’s model. In our model, drift rates are determined by a nonlinear transformation of relative activation of the two category nodes.Footnote 5 Given that changes in drift rates across learning blocks were consistent with an exponential function, we used a scaled softmax function to transform category activations to drift rates,

Equation 7 is closely related to the response rule used by ALCOVE, where relative category node activation determines response probabilities via an exponentiated version of Luce’s (1959) choice rule, producing output bounded in the interval [0, 1]. The rate at which activation ratios approach either the upper or lower limit of the interval is determined by ϕ, with larger values resulting in more rapid approach. In ALCOVE, the role of the ϕ parameter is to set the level of determinism in responding. As ϕ increases, smaller differences in category activations result in a stronger tendency to assign the stimulus to the category with the higher level of activation.Footnote 6 Although Eq. 7 does not control response outcomes directly, the functional significance of ϕ is similar, as it determines how relative category node activation maps onto relative evidence for different category outcomes during decision-making by setting drift rates. The drift threshold parameter, τ, ensures that relative category activations favoring each category outcome produce drift rates with the appropriate sign (i.e., v > 0 when ωA > ωB, and v < 0 when ωA < ωB). The value of τ determines the level of relative category node activation that results in a drift rate of zero (i.e., a drift rate that favors neither response alternative). In principle, τ can take on any value between zero and one. Logically, however, it is sensible to fix τ to an unbiased value of 0.5, so that v = 0 when ωA=ωB. Fixing τ in this way ensures symmetrical changes in drift rates as relative category activations deviate from a value of 0.5. The range of drift rates produced by Eq. 7 is controlled by the vr parameter, which is positive-valued and freely estimated from the data. This parameter scales relative category activations within the interval [−τvr, vr (1 – τ)]; for example, when vr = 1 and τ = 0.5, drift rates are scaled on the interval [−0.5, +0.5].

In total, the learning model has eight parameters that can be freely estimated from data. Two of these parameters govern the associative learning process. These are the learning rate parameter, λ, and the feedback discounting parameter, ρ. Four parameters govern the diffusion decision process: boundary separation, a, nondecision time, Ter, and trial-by-trial variability in drift rates, η, and nondecision time, st. The final two parameters link the learning and decision components of the model. These scaling constants, vr and ϕ, set the range of learnable drift rates and control how differences in category node activations map onto drift rates, respectively.

Fits of the learning model to data

To fit the learning model to data, we generated 30 unique sequences of training stimuli (i.e., 30 different orderings of 200 learning trials). The structure of the training sequences mirrored those used experimentally. Training sequences were divided into five 40-trial blocks. Within each block, each stimulus was presented 10 times. The presentation order of stimuli within a block was determined randomly. For each training sequence, we evaluated model predictions after every 40 learning trials (i.e., at the end of each learning block). We then averaged the block-by-block predictions across the 30 training sequences, which were used as the basis for parameter estimation. Model predictions were generated in this way to ensure generalizability across different sequences of learning trials (Lewandowsky, 1995). As with the diffusion model analysis of the data, learning model parameters were estimated by minimizing G2 (see Eq. 1).

We contrasted fits of two versions of the learning model to the data, which differed with regards to the inclusion of a feedback discounting mechanism (i.e., whether ρ in Eq. 6 was fixed to zero or freely estimated). The models therefore required either seven (no feedback discounting) or eight (feedback discounting) parameters to be freely estimated from the data.